Robohub.org

To err is human: raising the bar on AI

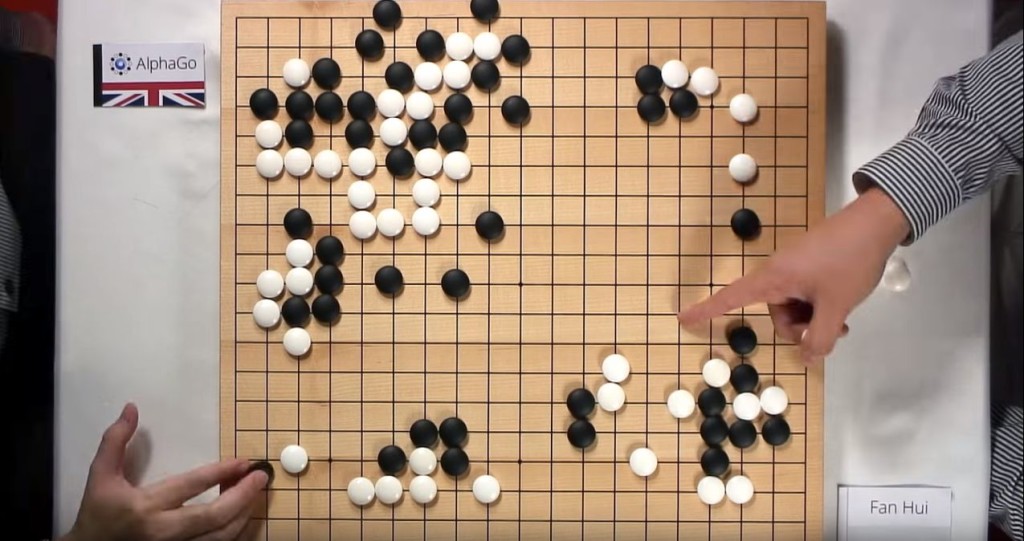

AlphaGo playing challenger Fan Hui. Source: Google DeepMind

Last week, media outlets reported on the latest ‘sure sign’ of an imminent robopocalypse. Google-backed DeepMind created an AI sophisticated enough to beat a human champion at the Chinese board game Go. AlphaGo defeated European champion Fan Hui, surpassing IBM’s Deep Blue chess-playing victory over Garry Kasparov in 1997 and marking another milestone of development and progress in AI research. Go is a more difficult game for humans to master – and, it was thought, for computers too – because of its complexity and the need for players to recognise intricate patterns.

When I set off to write a note about this achievement, I expected to find the usual sources in the popular press, with their characteristically subtle declarations, heralding that the End of the Human Race is Nigh! Thankfully, however, responses seem to be more sanguine and muted. The British tabloids even avoided using that picture of the Terminator that invariably accompanies their reports on new developments in AI and robotics.

Perhaps this is a sign things are changing, and that the popular press is becoming more sensible and responsible in its technology reporting. Well, maybe. Let’s see how many weeks – or days – we can go without this sort of thing before claiming victory, or remarking that we’ve turned a significant corner.

From a cultural perspective, there is a lot of interest in DeepMind’s success, even if it hasn’t stirred the usual panic about the robopocalypse. It made me recall a conversation from an EURobotics event in Bristol in November. We humans like to think that we’re special. And maybe the possibility that robots or AI are a threat to that special status is another reason why we are so afraid of them. Maybe we fear another blow to our narcissism, like when that crazy astronomer Copernicus spoiled things by showing that Earth wasn’t the centre of the Universe. Or when Victorian poo-pooer Darwin demonstrated we evolved on Earth and weren’t placed here at the behest of some Divine Creator. Perhaps we don’t really fear that robots and AI will destroy all of humanity – well, maybe we fear that, too – but perhaps part of the fear is that robots and AI will destroy another of those special things we reserve for ourselves as unique beings amidst creation.

Yet our scientists aren’t going to let us sit wrapped in the warmth of our unique being. They keep pushing and developing more and more sophisticated AI that threatens our… specialness. How do we as a culture respond to this persistent challenge? Like any good politician, it seems we have decided to confront the inevitability of our failure by constantly changing the rules. Choose your sporting metaphor: we ‘move the goalposts’ or we ‘raise the bar’.

Once upon a time it was enough to think of ourselves as the rational animal, the sole species on Earth bequeathed with the capacity for reason. Then, as the case for reason as the basis of humanity’s unique status crumbled – thanks both to proof that animals were capable of sophisticated thought and to the lack of proof that humans were rational – we shifted those goalposts. We transformed ourselves into the symbolic animal, the sole species on Earth endowed with the capacity to manipulate signs and represent.

Then we learned that whales, dolphins and other animals were communicating with each other all the time, even if we weren’t listening. And that’s before we taught chimps how to use sign language (for which Charlton Heston will never thank us).

Then computers arrived to make things even worse. After some early experiments with hulking machines that struggled to add 2 + 2, computers eventually progressed to leave us in their wake. Computers can calculate more accurately and quickly than any human. They can solve complex mathematical equations, thereby demonstrating they are pretty adept with symbols.

Ah, BUT…

Supercomputer Watson and human challengers on Jeopardy. Source: youtube

Humans could find solace in the comforting thought that computers were good at some things, yes, but they weren’t so smart. A computer could never beat a human at chess, for example. That is, until May 1997, when chess champion Garry Kasparov lost to IBM’s Deep Blue. But that was always going to happen. A computer could never, we consoled ourselves, win at a game that required linguistic dexterity. Until 2011, when IBM’s Watson beat Ken Jennings and Brad Rutter at Jeopardy!, the hit US game show. And now, Google’s DeepMind has conquered all, winning the most difficult strategy game we can imagine.

What is interesting about DeepMind’s victory is how human beings have responded again to the challenges to our self-conception posed by robots and AI. If we were under any illusion that we were special and alone among God’s creations as a thinking animal, a symbolising animal, or a playing animal, that status has been usurped by our own progeny again and again, in that all too familiar Greek-Frankenstein-Freudian way.

Animal rationabile had to give way to animal symbolicum, that in turn gave way to animal ludens. What’s left now for poor, biologically-limited humanity?

Lieutenant Commander Data in Star Trek. Source: youtube

A glimpse of this latest provocation can be seen in Star Trek: The Next Generation. Lieutenant Commander Data is a self-aware android with cognitive abilities far beyond those of any human being. Despite these tremendous capabilities, Data is always regarded – by himself and all the humans around him – as tragically, inevitably inferior, less than human. Despite lessons in Shakespeare and sermons in human romantic ideals from his mentor Captain Jean-Luc Picard, Data is doomed to be forever inferior to humans.

It seems now that AI can think and solve problems as well as humans, we’ve raised the bar again, changing the definition of ‘human’ to preserve our unique, privileged status.

We might now be animal permotionem – the emotional animal. That would be fine for distinguishing between us and robots, at least until we upload the elusive ‘consciousness.dat’ file (as in Neill Blomkamp’s recent film, Chappie), but this new moniker won’t help us remain distinct from the rest of the animals. Because to be an emotional animal and a creature ruled by impulse and feeling is… to just be an animal, according to all of our previous definitions.

We might find some refuge, following Gene Roddenberry’s example, in the notion of humans as the unique animal artis: the animal that creates and engages in artistic work. The clever among you will have realised some time ago that I’m not a classical scholar and that my attempts to feign Latin have long since fallen apart. Artis seems to imply something more akin to ‘skill’, which robots arguably have already achieved; ars simply means ‘technique’ or ‘science’. Neither really captures what I’m trying to get at; suggestions are welcome.

The idea that human beings are defined by a particular creative impulse is not terribly new. Attempts to redefine ‘the human’ along these lines have been evident since the latter half of the twentieth century. For example, if we flip back one hundred years, we might see Freud defining human beings (civilised human beings, to clarify) as uniquely able to follow rules. But by the late 1960s, Freud’s descendants, like British psychoanalyst D. W. Winnicott, are arguing the exact opposite – what makes us human is creativity and the ability to fully participate in our being in an engaged, productive way.

What’s a poor AI to do? It was once enough for artificial intelligence to be sufficiently impressive, maybe even deemed ‘human’, if it could prove capable of reason or symbolic representation, or win at chess, Jeopardy!, or Go. Now we expect nothing less than Laurence Olivier, Lord Byron, and Jackson Pollock all in one.

This reminds me of Chris Columbus’s 1999 film Bicentennial Man (based on a story by Isaac Asimov). Robin Williams’s Andrew Martin begins his life, for lack of a better word, as a simple robot. Over the decades he becomes more like a human and sentient, demonstrating artistic skill and feeling genuine emotion. At each stage he hopes to be recognised as a being on par with humans. No, he’s told, you’re not sentient. When he’s sentient, he’s told he cannot feel. He’s told he cannot love. No achievement is enough.

Even once he has achieved everything and become like a human in every respect, perhaps even superhuman, he is told it is too much and he has to be less. In a reversal of the Aristotelian notion of the thinking, superior animal, Andrew is told to make mistakes. He is too perfect. He cannot be homo sapiens; he needs to be homo errat, the man that screws up. It is not until Andrew is on his deathbed that the Speaker of the World Congress declares the world will recognise Andrew as human.

Perhaps this will be the final line and definition of human that will endure every challenge posed by robots and artificial intelligence. No matter the level of technological progress, regardless of how far artificial life leaves human beings behind: we will be homo mortuum. The rational animal that can die.

If Singularity enthusiasts and doomsayers are to be believed, this inevitable self-conception is not long off. Perhaps humans’ strength, our ability to adapt and our talent for re-inventing ourselves, could mean there’s life in the old species yet. Regardless, it will serve us very well to create a conception of both ourselves and artificial life forms that tries to demarcate the boundaries, decide when these boundaries might be crossed, and consider what the implications of crossing that line will be.

This post originally appeared in Dreaming Robots.
