Robohub.org

Facebook – Our ffriendly automated identity bender

I like Star Wars. I like technology. I like philosophy. I like teaching. I like the occasional meme. I like robots. I like a lot of things.

But if I were to give an accurate account of the things that define me, that truly make up my identity, I would focus on my liking philosophy, the way it informs my thoughts about technology and the world. I would probably describe some of the research I do. There’s a good chance I’d talk about one of the courses I teach. If the conversation went long enough, I just might mention something about Star Wars.

My liking philosophy and technology translates into my spending a great deal of my daily waking hours thinking, reading, and writing about philosophy and technology. My liking Star Wars translates into my buying a LEGO kit once in a while and building it with my daughters, or spending a couple of hours now and again watching a movie I’ve seen a few dozen times.

Philosophy and technology are a big part of my identity. Star Wars is not.

But none of us can honestly know how we appear to our Facebook friends (let’s call them our ffriends for short).

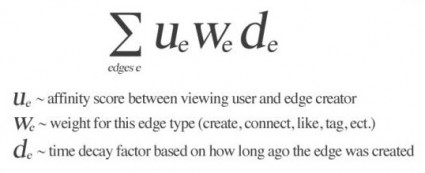

Facebook uses automated filtering algorithms, little snippets of software code, to decide which of your posts will be made visible on your ffriends’ news feeds.

Facebook uses automated filtering algorithms, little snippets of software code, to decide which of your posts will be made visible on your ffriends’ news feeds. The algorithms work in the background, like little robots, constantly monitoring user activity and making automated decisions about each post on the system. But how those little automated bots select and filter your posts is not clear: Facebook doesn’t publish the details of the algorithms. Yet, the details can make a big splash.

Many of you will have read about the public outrage surrounding Facebook’s latest ethics scandal (if you haven’t, here is a fantastic summary in The Atlantic). It involves a study Facebook conducted (perhaps using you as a participant) to see if they could establish a link between the “emotional content” contained in a user’s news feed and that user’s level of activity on Facebook. In short, they wanted to see if being exposed to more happy, upbeat news items would cause you to spend more time posting on Facebook than being exposed to sad ones would.

To test their hypothesis, they tinkered with their automated filtering algorithms during one week in 2012. The filters were set to bias your news feed: depending on the study category you were in you might have seen more “emotionally positive” posts than usual, or more “emotionally negative” posts. Their findings (sort of) confirmed a link between the emotional content in a user’s news feed and a user’s posting habits. Happy posts in a news feed appear to make you post more to Facebook.

Facebook recently published their findings. Users are outraged. But why?

Much of the commentary on the outrage has focused on the fact that Facebook appears to be attempting to actively manipulate its users by engaging them emotionally, and on the fact that Facebook conducted its research unethically. Danah Boyd has written about the reaction.

But, hold on. That shouldn’t surprise us, should it? On the one hand, Facebook is just another of the many organizations whose mission is to manipulate you into doing this or that, purchasing this or that, or thinking this or that. On the other hand, as Danah Boyd points out, the research ethics issues at play are being a bit overstated. There may be some problems with the way the ethics of this study were handled, but they are minor and not at all unique to Facebook or this study.

There is another, more problematic reason to feel bothered, even outraged, at Facebook’s current design practices.

For many of us, Facebook has become the de facto tool used to communicate with friends (and ffriends). Indeed, as with credit cards, it has become increasingly difficult to avoid using Facebook. Oh sure, you can opt out. But that puts you at a certain social disadvantage. You’ll miss out on conversations, events, and other goings-on. Because it is such a central actor in so many of our communications, Facebook is now the thing many of us use to sculpt our image. Put another way, Facebook has become the primary conversational and interactional medium through which a vast number of us express and shape our identities.

Because so many people, rightly or wrongly, use the platform as their primary communication medium, Facebook’s news item filtering algorithms can have a direct impact on what kind of person your ffriends think you are. How does this happen? Let’s say you post 10 news items in a day, eight of them having to do with serious topics like NSA spying or the current uprising in Ukraine, and two of them containing memes. If the algorithm is designed to systematically “demote” your posts about world affairs and “promote” your meme posts, over time you start to look more like a person obsessed with memes and less like a person interested in world affairs. In my case, it’s entirely possible many of my ffriends think I spend most of my day thinking about Star Wars.
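To make the arithmetic of that example concrete, here is a minimal sketch in Python. It is an illustration only: Facebook doesn’t publish its algorithms, so the per-topic visibility weights, the topic labels, and the function names below are all assumptions invented for this example, not a description of how the real news feed works.

```python
from collections import Counter

# Hypothetical weights: the chance that a post on a given topic is shown
# to a ffriend. These numbers are invented for illustration only.
VISIBILITY = {"world_affairs": 0.1, "meme": 0.9}


def posted_share(posts):
    """Fraction of what you actually posted, per topic."""
    counts = Counter(posts)
    total = sum(counts.values())
    return {topic: n / total for topic, n in counts.items()}


def perceived_share(posts, visibility):
    """Fraction of what your ffriends can expect to see, per topic."""
    counts = Counter(posts)
    # Expected number of visible posts per topic after filtering.
    expected_seen = {topic: n * visibility[topic] for topic, n in counts.items()}
    total = sum(expected_seen.values())
    if total == 0:
        # Nothing gets through the filter, so there is nothing to perceive.
        return {topic: 0.0 for topic in counts}
    return {topic: seen / total for topic, seen in expected_seen.items()}


# The day described above: eight serious posts and two memes.
posts = ["world_affairs"] * 8 + ["meme"] * 2
posted = posted_share(posts)
perceived = perceived_share(posts, VISIBILITY)

for topic in posted:
    print(f"{topic}: posted {posted[topic]:.0%}, perceived {perceived[topic]:.0%}")
```

Under these made-up weights, memes are 20 percent of what you post but roughly 69 percent of what your ffriends can expect to see. Different weights would give different numbers; the point is only that a filter you cannot inspect can invert the picture.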

That’s a problem.

The net result is that Facebook’s algorithms, those little bots made up of so many lines of code, actively bend your identity based on conditions completely invisible to you, by making decisions on your behalf about which of your posts to place on your ffriends’ news feeds.

[ASIDE: At this point I have to apologize to all of my ffriends that I may have unfairly “silenced” in my news feed over the years. It is entirely possible that you are the victim of Facebook’s identity bending algorithms, and that you are not actually obsessed with LOLZ cats, socially awkward penguins, or motivational jpgs meant to inspire me to be a better person. Though you might have posted numerous interesting and thought-provoking comments to your feed, it might be the case that I was simply not allowed to see you for who you really are. Then again, maybe I was. We may never know.]

I have written about the ethics of automation, and some of the issues that can arise when automation technology is designed to make decisions on behalf of users. When decisions are designed into technologies that provide material answers to moral questions, those technologies can subject users to a form of paternalism that is ethically problematic. In this case, Facebook’s algorithms are making decisions about what kind of person you appear to be to your ffriends. Your identity is a deeply personal moral issue.

Promoting your posts based on invisible criteria is ethically different than promoting your posts based on transparent criteria. The two are not ethically on a par with one another. When filtering criteria are made transparent you can make an informed decision about using the platform. For example, if Facebook explains to users that the system is designed to promote posts that are most popular among your ffriends, you will have a relatively accurate idea of how “visible” your posts are to your ffriends and why their posts are showing up in your feed.

Furthermore, when you have no idea how you are being made to appear to your ffriends, you are hardly the author of your identity. If Facebook continues to apply filtering algorithms based on invisible criteria, you will never be more than the co-author of your identity.

This, I think, helps to explain our negative gut reaction to Facebook’s “emotional manipulation” study much more than any actual claim to emotional manipulation can. After all, there are just so many similar examples of emotional manipulation out there that we tacitly accept. That Facebook is willing to make decisions about your outward appearance, however, that they are willing to manipulate your identity without telling you how, gives you good reason to distrust Facebook and its identity bending algorithms.
