Robohub.org

Overview of the International Conference on Robot Ethics and Safety Standards – with survey on autonomous cars

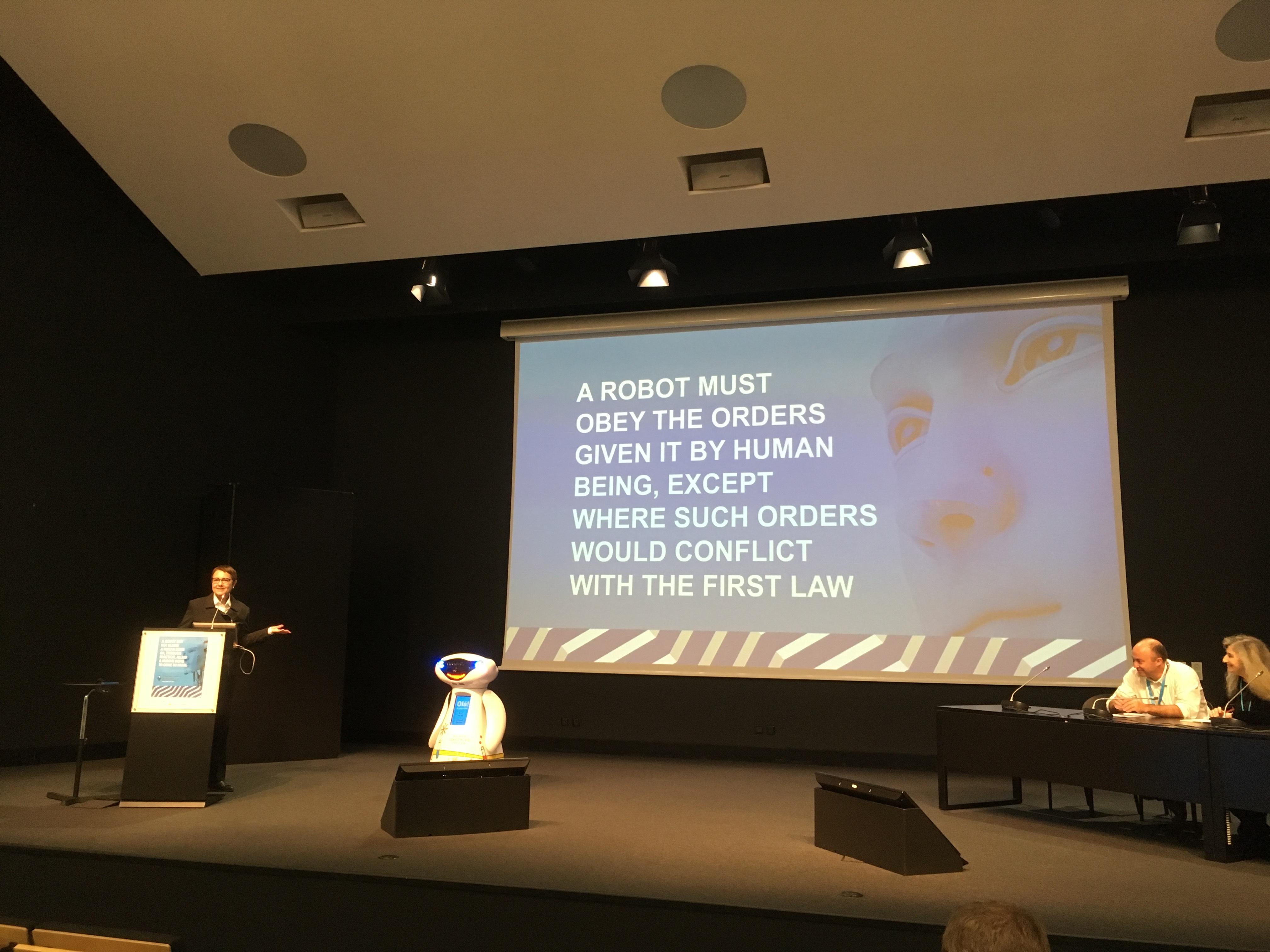

The International Conference on Robot Ethics and Safety Standards (ICRESS-2017) took place in Lisbon, Portugal, on 20 and 21 October 2017. Maria Isabel Aldinhas Ferreira and João Silva Sequeira coordinated the conference with the aim of creating a vibrant multidisciplinary discussion around the pressing safety, ethical, legal and societal issues raised by the rapid introduction of robotic technology in many environments.

There were several fascinating keynote presentations. Mathias Scheutz’s inaugural speech highlighted the need for robots to act in a way that would be perceived as using moral principles and judgement. Although enabling autonomous reasoning about ethical decisions is a difficult research problem, researchers should continue to pursue it. One avenue is to enable reasoning systems to examine obligations and permissions. On the basis of such analysis, robots could make sophisticated choices between actions and plans to achieve correct behaviour. It was refreshing to see that we could potentially have autonomous robots that arrive at appropriate decisions, ones that an external observer would judge as “right” or “wrong”.

On the other hand, Rodolphe Gélin provided the perspective of robot manufacturers and explained how difficult the issues of safety have become. The public's expectations of robots seem to go beyond those for conventional machines. The discussion was very diverse. Some suggested that schemes similar to licensing would be required to qualify humans to operate robots (as they re-train or re-program them). Other schemes for insurance and liability were also suggested.

Professional bodies, experts and standards were discussed in the other two keynote presentations. Raja Chatila spoke from the perspective of the IEEE Global AI Ethics Initiative, and Gurvinder Singh Virk from that of several ISO robot standardisation groups.

The conference also hosted a panel discussion, which debated issues such as the challenges posed by the proliferation of drones among the general public. This topic has characteristics different from many other problems societies have faced with the introduction of new technologies. Drones can be 3D printed from many designs with potentially no liability to the designer, they can be operated with virtually no complex training, and they can be controlled from sufficiently long distances that recovering the drone would not be enough to trace the operator or owner. Their cameras and data recording can potentially be used in ways that some would consider privacy breaches, and they could compete for airspace with commercial aviation already in operation. It seems unclear which regulations should apply, which bodies should intervene and, even then, how the regulations would be enforced. Would something similar happen when the public acquires pet-robots or artificial companions?

The presentations of accepted papers raised many issues, including the difficulty of creating legal foundations for liability schemes and the responsibilities attributed to machines and operators. One particular aspect was the fact that, for specific tasks, computers do significantly better than the average person (examples are driving a car and negotiating a curve). Another challenge is that humans will be in the proximity of robots on a regular basis in manufacturing or office environments, with many new potential risks.

The vibrant discussions at the conference led to the conclusion that the challenges are emerging much more rapidly than the answers.

We’ve also just launched a survey on the software behaviours that an autonomous car should have when faced with difficult decisions. Just click here. Participants may win a prize, and participation is completely voluntary and anonymous.
