Robohub.org

Robots hallucinate humans to aid in object recognition | IEEE Spectrum

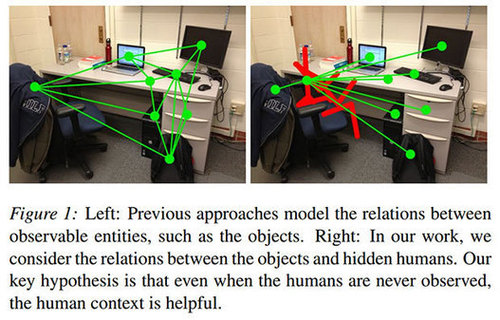

“Almost exactly a year ago, we posted about how Ashutosh Saxena’s lab at Cornell was teaching robots to use their ‘imaginations’ to try to picture how a human would want a room organized. The research was successful, with algorithms that used hallucinated humans (which are the best sort of humans) to influence the placement of objects performing significantly better than other methods. Cool stuff indeed, and now comes the next step: labeling 3D point-clouds obtained from RGB-D sensors by leveraging contextual hallucinated people.”

See on spectrum.ieee.org

John Payne
