Robohub.org

Touch sensing: An important tool for mobile robot navigation

In mammals, the touch modality develops earlier than the other senses, yet it has been studied less than its visual and auditory counterparts. It not only enables interaction with the environment but also serves as an effective defense mechanism.

Figure 1: Rat using its whiskers to interact with the environment via touch

The role of touch in mobile robot navigation has not been explored in detail. However, touch appears to play an important role in obstacle avoidance and pathfinding for mobile robots. The region immediately around a robot is often a blind spot for long-range sensors such as cameras and lidars, and touch sensors could serve as a complementary modality to cover it.
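To make the blind-spot argument concrete, here is a minimal sketch that treats each sensor as reporting obstacles only within a band of distances. The `RangeSensor` class, the `sensors_detecting` helper and all range values are hypothetical and chosen purely for illustration; real lidar minimum ranges and whisker lengths depend on the specific device.

```python
from dataclasses import dataclass


@dataclass
class RangeSensor:
    """Hypothetical sensor model: it only reports obstacles between
    min_range and max_range (in metres)."""
    name: str
    min_range: float
    max_range: float

    def detects(self, distance: float) -> bool:
        return self.min_range <= distance <= self.max_range


# Illustrative values only, not taken from any real product.
lidar = RangeSensor("lidar", min_range=0.15, max_range=12.0)
whisker = RangeSensor("whisker", min_range=0.0, max_range=0.20)


def sensors_detecting(distance: float, sensors: list[RangeSensor]) -> list[str]:
    """Names of all sensors that can perceive an obstacle at this distance."""
    return [s.name for s in sensors if s.detects(distance)]


for d in (0.05, 0.18, 1.0):
    print(d, sensors_detecting(d, [lidar, whisker]))
# 0.05 ['whisker']
# 0.18 ['lidar', 'whisker']
# 1.0 ['lidar']
```

With these assumed numbers, the lidar alone cannot perceive anything closer than 0.15 m, and the short whisker covers exactly that gap, with a small overlap where both sensors report the obstacle.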

Overall, touch appears to be a promising modality for mobile robot navigation, but more research is needed to fully understand its role.

Role of touch in nature

The touch modality is paramount for many organisms. It plays an important role in perception, exploration, and navigation, and many animals rely on it extensively to explore their surroundings. Rodents, pinnipeds, cats, dogs, and fish use touch differently than humans do. While humans primarily use touch for prehensile manipulation, mammals with poor vision, such as rats and shrews, rely on their vibrissae (whiskers) for exploration and navigation. This vibrissal sense is essential for short-range sensing and works in tandem with the visual system.

Artificial touch sensors for robots

Artificial touch sensor design has evolved over the last four decades. However, these sensors are still not as widely used in mobile robot systems as cameras and lidars. Mobile robots usually rely on such long-range sensors, while short-range sensing receives relatively little attention.

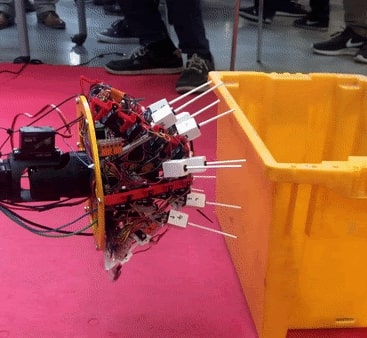

When designing artificial touch sensors for mobile robot navigation, we typically draw inspiration from nature, using biological whiskers as the template for bio-inspired artificial whiskers. One such early prototype is shown in Figure 2.

Figure 2: Bioinspired artificial rat whisker array prototype V1.0

However, there is no reason to limit design innovation to exact replicas of biological whiskers. While some researchers are attempting to perfect the tapering of whiskers [1], we are currently investigating abstract mathematical models that can inspire a much wider range of touch sensors [2].
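As a loose illustration of describing a whisker abstractly rather than as a biological replica, here is a minimal sketch that models each whisker as a rigid straight segment and each obstacle as a circle, then reports how far along the whisker contact first occurs. This is a common textbook simplification, not the visibility-inspired models of [2]; the function names, the fan-shaped array layout and the rigid-segment assumption are hypothetical and introduced only for this example.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Obstacle = Tuple[Point, float]  # (centre, radius), an assumed obstacle model


def whisker_contact(base: Point, angle: float, length: float,
                    center: Point, radius: float) -> Optional[float]:
    """Distance along a rigid, straight (hypothetical) whisker at which it
    first touches a circular obstacle, or None if the obstacle is out of reach."""
    ux, uy = math.cos(angle), math.sin(angle)          # unit vector along the whisker
    dx, dy = base[0] - center[0], base[1] - center[1]  # base relative to obstacle centre
    # Points on the whisker are base + t*u; solve |base + t*u - center|^2 = radius^2.
    b = ux * dx + uy * dy
    c = dx * dx + dy * dy - radius * radius
    if c <= 0.0:
        return 0.0              # whisker base already lies inside the obstacle
    disc = b * b - c
    if disc < 0.0:
        return None             # the whisker's line misses the circle entirely
    t = -b - math.sqrt(disc)    # nearer of the two intersection points
    return t if 0.0 <= t <= length else None


def array_contacts(base: Point, angles: Sequence[float], length: float,
                   obstacles: Sequence[Obstacle]) -> List[Optional[float]]:
    """Nearest contact distance for each whisker in a fan-shaped array."""
    readings: List[Optional[float]] = []
    for a in angles:
        hits = []
        for center, radius in obstacles:
            d = whisker_contact(base, a, length, center, radius)
            if d is not None:
                hits.append(d)
        readings.append(min(hits) if hits else None)
    return readings


# Three 0.2 m whiskers at -45, 0 and +45 degrees; one obstacle straight ahead.
print(array_contacts((0.0, 0.0), [-math.pi / 4, 0.0, math.pi / 4], 0.2,
                     [((0.15, 0.0), 0.05)]))
```

Only the middle whisker touches the obstacle, at roughly 0.1 m along its length; the two angled whiskers report None. The model ignores whisker bending entirely; its only purpose is to show that a touch sensor can be described by the region it can reach and reasoned about like any other geometric sensor model.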

Challenges with designing touch sensors for robots

There are many challenges when designing touch sensors for mobile robots. One key challenge is the trade-off between weight, size, and power consumption. Power consumption in particular can be significant, which limits how practical these sensors are on mobile robots.

Another challenge is to find the right trade-off between touch sensitivity and robustness. The sensors need to be sensitive enough to detect small changes in the environment, yet robust enough to handle the dynamic and harsh conditions in most mobile robot applications.

Future directions

There is a need for more systematic studies to understand the role of touch in mobile robot navigation. Current studies mostly focus on specific applications and scenarios, in particular dexterous manipulation and grasping. We need to understand the challenges and limitations of using touch sensors for mobile robot navigation, and we also need to develop more robust and power-efficient touch sensors for mobile robots.

Logistically, another factor that limits the use of touch sensors is the lack of openly available, off-the-shelf touch sensors. A few research groups around the world are developing their own touch sensor prototypes, biomimetic or otherwise, but all such designs are closed and extremely hard to replicate and improve.

References

- [1] Williams, Christopher M., and Eric M. Kramer. “The advantages of a tapered whisker.” PLoS ONE 5.1 (2010): e8806.

- [2] Tiwari, Kshitij, et al. “Visibility-Inspired Models of Touch Sensors for Navigation.” 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2022.