Robohub.org

Robo-Insight #3

Source: OpenAI’s DALL·E 2 with prompt “a hyperrealistic picture of a robot reading the news on a laptop at a coffee shop”

Welcome to the third edition of Robo-Insight, a biweekly robotics news update! In this post, we are excited to share a range of new advancements in the field and highlight progress in areas like locomotion, navigation of unfamiliar routes, dynamic control, digging, agriculture, surgery, and food sorting.

A bioinspired robot masters 8 modes of motion for adaptive maneuvering

In a world of constant motion, a newly developed robot named M4 (Multi-Modal Mobility Morphobot) has demonstrated the ability to switch between eight different modes of motion, including rolling, flying, and walking. Designed by researchers from Caltech’s Center for Autonomous Systems and Technologies (CAST) and Northeastern University, the robot can autonomously adapt its movement strategy based on its environment. Created by engineers Mory Gharib and Alireza Ramezani, the M4 project aims to enhance robot locomotion by utilizing a combination of adaptable components and artificial intelligence. The potential applications of this innovation range from medical transport to planetary exploration.

The robot switches from its driving to its walking state. Source.

New navigation approach for robots aiding visually impaired individuals

Speaking of movement, researchers from the Hamburg University of Applied Sciences have presented an innovative navigation algorithm for a mobile robot assistance system based on OpenStreetMap data. The algorithm addresses the challenges faced by visually impaired individuals in navigating unfamiliar routes. By employing a three-stage process involving map verification, augmentation, and generation of a navigable graph, the algorithm optimizes navigation for this user group. The study highlights the potential of OpenStreetMap data to enhance navigation applications for visually impaired individuals, carrying implications for the advancement of robotics solutions that can cater to specific user requirements through data verification and augmentation.

This autonomous vehicle aims to guide visually impaired individuals. Source.

A unique technique enhances robot control in dynamic environments

Staying with the theme of unfamiliar environments, researchers from MIT and Stanford University have developed a novel machine-learning technique that enhances the control of robots, such as drones and autonomous vehicles, in rapidly changing environments. The approach leverages insights from control theory to create effective control strategies for complex dynamics, like wind impacts on flying vehicles. This technique holds potential for a range of applications, from enabling autonomous vehicles to adapt to slippery road conditions to improving the performance of drones in challenging wind conditions. By integrating learned dynamics and control-oriented structures, the researchers’ approach offers a more efficient and effective method for controlling robots, with implications for various types of dynamical systems in robotics.

A robot that could benefit from improved control in changing environments. Source.

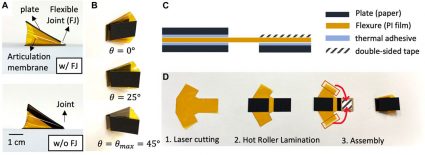

Burrowing robots with origami feet

Robots have been improving above ground for a while, and they are now advancing in underground spaces as well. Researchers from the University of California Berkeley and the University of California Santa Cruz have unveiled a new robotics approach that utilizes origami-inspired foldable feet to navigate granular environments. Drawing inspiration from biological systems and their anisotropic forces, this approach harnesses reciprocating burrowing techniques for precise directional motion. By employing simple linear actuators and leveraging passive anisotropic force responses, this study paves the way for streamlined robotic burrowing, shedding light on the prospect of simplified yet effective underground exploration and navigation. This integration of origami principles into robotics opens the door to enhanced subterranean applications.

The prototype for the foot and its method for fabrication. Source.

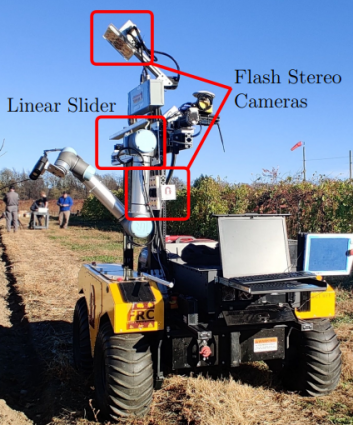

Innovative processes in agricultural robotics

In the world of agriculture, a researcher from Carnegie Mellon University recently explored the synergy between scientific phenotyping and agricultural robotics in a master’s thesis. The study delved into the vital role of accurate plant trait measurement in developing improved plant varieties, while also highlighting the promise of robotic plant manipulation in agriculture. Envisioning advanced farming practices, the researcher emphasizes tasks like pruning, pollination, and harvesting carried out by robots. By proposing methods such as 3D cloud assessment for seed counting and vine segmentation, the study aims to streamline data collection for agricultural robotics. Additionally, the creation and use of 3D skeletal vine models show potential for optimizing grape quality and yield, paving the way for more efficient agricultural practices.

A robotic data capture platform introduced in the study. Source.

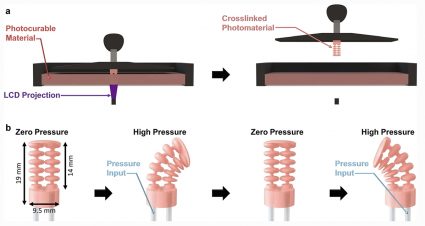

Soft robotic catheters could help improve minimally invasive surgery

Shifting our focus to surgery, a team of mechanical engineers and medical researchers from the University of Maryland, Johns Hopkins University, and the University of Maryland Medical School has developed a pneumatically actuated soft robotic catheter system to enhance control during minimally invasive surgeries. The system allows surgeons to simultaneously insert and bend the catheter tip with high accuracy, potentially improving outcomes in procedures that require navigating narrow and complex body spaces. The researchers’ approach simplifies the mechanical and control architecture through pneumatic actuation, enabling intuitive control of both bending and insertion without manual channel pressurization. The system has shown promise in accurately reaching cylindrical targets in tests, benefiting both novice and skilled surgeons.

Figure showing manufacturing and operation of soft robotic catheter tip using printing process for actuator and pneumatic pressurization to control catheter bending. Source.

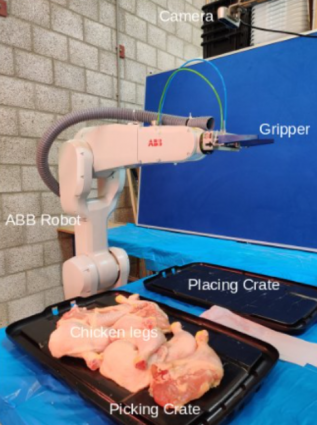

Robotic system enhances poultry handling efficiency

Finally, in the food world, researchers have introduced an innovative robotic system designed to efficiently pick and place deformable poultry pieces from cluttered bins. The architecture integrates multiple modules, enabling precise manipulation of delicate poultry items. A comprehensive evaluation approach is proposed to assess the system’s performance across various modules, shedding light on successes and challenges. This advancement holds the potential to revolutionize meat processing and the broader food industry, addressing demands for increased automation.

An experimental setup. Source.

These recent developments, spanning fields from locomotion to food processing, show how versatile robotics technology has become and point to new ways of integrating it across different sectors. The steady progress also hints at the impact these advancements could have in the years ahead.

Sources:

- New Bioinspired Robot Flies, Rolls, Walks, and More. (2023, June 27). Center for Autonomous Systems and Technologies. Caltech.

- Application of Path Planning for a Mobile Robot Assistance System Based on OpenStreetMap Data. Stahr, P., Maaß, J., & Gärtner, H. (2023). Robotics, 12(4), 113.

- A simpler method for learning to control a robot. (2023, July 26). MIT News | Massachusetts Institute of Technology.

- Efficient reciprocating burrowing with anisotropic origami feet. Kim, S., Treers, L. K., Huh, T. M., & Stuart, H. S. (2023, July 3). Frontiers.

- Phenotyping and Skeletonization for Agricultural Robotics. The Robotics Institute Carnegie Mellon University. (n.d.). Retrieved August 10, 2023.

- Pneumatically controlled soft robotic catheters offer accuracy, flexibility. (n.d.). Retrieved August 10, 2023.

- Advanced Robotic System for Efficient Pick-and-Place of Deformable Poultry in Cluttered Bin: A Comprehensive Evaluation Approach. Raja, R., Burusa, A. K., Kootstra, G., & van Henten, E. (2023, August 7). TechRxiv.