Robohub.org

CoRL2025 – RobustDexGrasp: dexterous robot hand grasping of nearly any object

The dexterity gap: from human hand to robot hand

Observe your own hand. As you read this, it’s holding your phone or clicking your mouse with seemingly effortless grace. With over 20 degrees of freedom, the human hand is extraordinarily dexterous: it can grip a heavy hammer, rotate a screwdriver, or instantly adjust when something slips.

With a similar structure to human hands, dexterous robot hands offer great potential:

Universal adaptability: Handling various objects from delicate needles to basketballs, adapting to each unique challenge in real time.

Fine manipulation: Executing complex tasks like key rotation, scissor use, and surgical procedures that are impossible with simple grippers.

Skill transfer: Their similarity to human hands makes them ideal for learning from vast human demonstration data.

Despite this potential, most current robots still rely on simple “grippers” because dexterous manipulation is so difficult. These pliers-like grippers can handle only repetitive tasks in structured environments. This “dexterity gap” severely limits robots’ role in our daily lives.

Among all manipulation skills, grasping stands as the most fundamental. It is the gateway through which many other capabilities emerge. Without reliable grasping, robots cannot pick up tools, manipulate objects, or perform complex tasks. In this work, we therefore focus on equipping dexterous robot hands with the ability to grasp diverse objects robustly.

The challenge: why dexterous grasping remains elusive

While humans can grasp almost any object with minimal conscious effort, the path to dexterous robotic grasping is fraught with fundamental challenges that have stymied researchers for decades:

High-dimensional control complexity. With 20+ degrees of freedom, dexterous hands present an astronomically large control space. Each finger’s movement affects the entire grasp, making it extremely difficult to determine optimal finger trajectories and force distributions in real time. Which finger should move? How much force should be applied? How should the grasp adjust as conditions change? These seemingly simple questions reveal the extraordinary complexity of dexterous grasping.

Generalization across diverse object shapes. Different objects demand fundamentally different grasp strategies. For example, spherical objects require enveloping grasps, while elongated objects need precision grips. The system must generalize across this vast diversity of shapes, sizes, and materials without explicit programming for each category.

Shape uncertainty under monocular vision. For practical deployment in daily life, robots must rely on single-camera systems—the most accessible and cost-effective sensing solution. Furthermore, we cannot assume prior knowledge of object meshes, CAD models, or detailed 3D information. This creates fundamental uncertainty: depth ambiguity, partial occlusions, and perspective distortions make it challenging to accurately perceive object geometry and plan appropriate grasps.

Our approach: RobustDexGrasp

To address these fundamental challenges, we present RobustDexGrasp, a novel framework that tackles each challenge with targeted solutions:

Teacher-student curriculum for high-dimensional control. We trained our system through a two-stage reinforcement learning process: first, a “teacher” policy learns ideal grasping strategies with privileged information (full object shape and tactile sensors) through extensive exploration in simulation. Then, a “student” policy learns from the teacher using only real-world perception (single-view point cloud, noisy joint positions) and adapts to real-world disturbances.

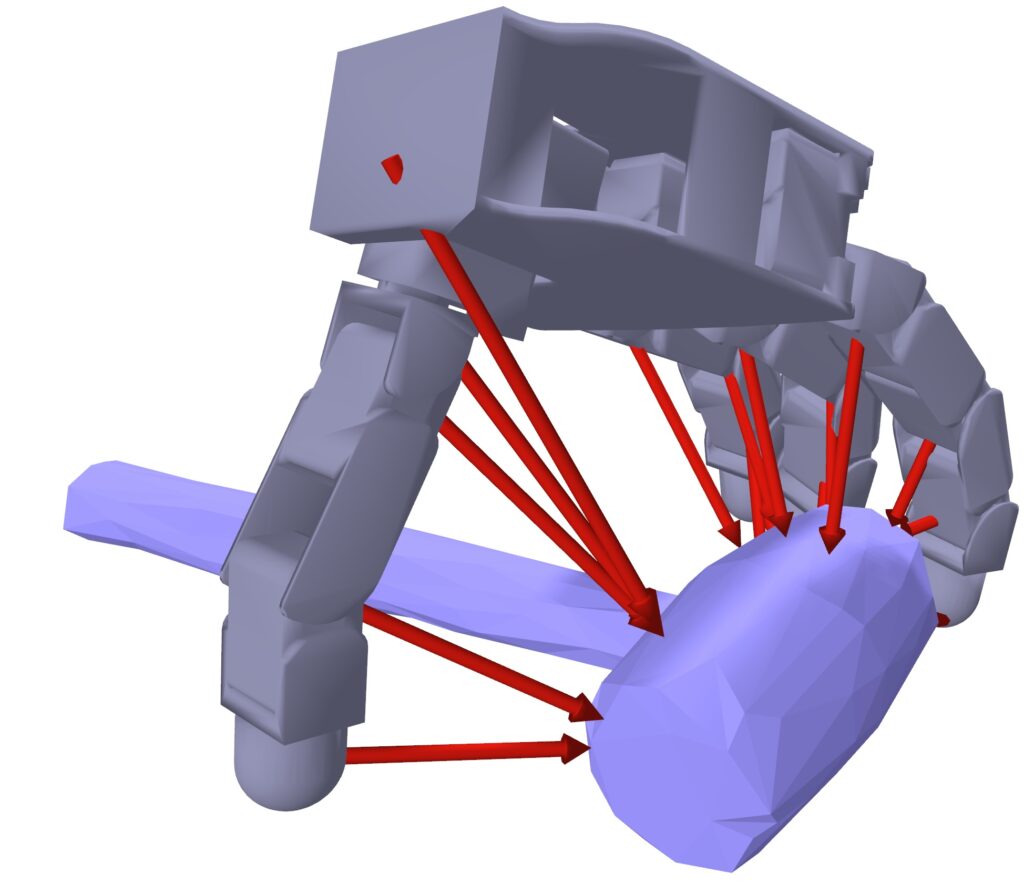
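The teacher-student idea described above can be illustrated with a toy example. This is only a minimal sketch under strong assumptions, not the training procedure used in RobustDexGrasp: the "teacher" here is a hypothetical fixed linear rule over a two-dimensional privileged state, the "student" is a linear model that sees only a noisy copy of that state, and the student is fitted by plain supervised imitation rather than reinforcement learning. All function names and parameters are invented for illustration.

```python
import random

def teacher_action(privileged_state):
    """Hypothetical teacher: a fixed linear rule over the full (privileged) state."""
    x, y = privileged_state
    return 0.8 * x - 0.5 * y

def observe(privileged_state, rng, noise_std=0.05):
    """Student-side observation: the same state corrupted by sensor noise."""
    return [v + rng.gauss(0.0, noise_std) for v in privileged_state]

def distill(num_steps=5000, lr=0.05, seed=0):
    """Fit a linear student to imitate the teacher using only noisy observations."""
    rng = random.Random(seed)
    weights = [0.0, 0.0]
    for _ in range(num_steps):
        # Sample a state; the teacher acts on the clean version of it.
        state = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
        target = teacher_action(state)
        # The student only ever sees the noisy observation.
        obs = observe(state, rng)
        pred = sum(w * o for w, o in zip(weights, obs))
        err = pred - target
        # One stochastic gradient step on the squared imitation error.
        weights = [w - lr * err * o for w, o in zip(weights, obs)]
    return weights

student_weights = distill()
print(student_weights)
```

In this toy setting the student’s weights end up close to the teacher’s coefficients even though the student never sees clean inputs, which is the intuition behind learning from a privileged teacher.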

Hand-centric “intuition” for shape generalization. Instead of capturing complete 3D shape features, our method creates a simple “mental map” that only answers one question: “Where are the surfaces relative to my fingers right now?” This intuitive approach ignores irrelevant details (like color or decorative patterns) and focuses only on what matters for the grasp. It’s the difference between memorizing every detail of a chair versus just knowing where to put your hands to lift it—one is efficient and adaptable, the other is unnecessarily complicated.
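One way to picture such a hand-centric “mental map” is as a set of offsets from points on the fingers to the nearest observed surface point. The sketch below is an illustrative assumption, not the representation defined in the paper: `nearest_surface_offsets`, the finger keypoints, and the tiny point cloud are all hypothetical.

```python
import math

def nearest_surface_offsets(finger_points, cloud):
    """For each finger keypoint, return (distance, offset) to the closest cloud point."""
    features = []
    for f in finger_points:
        # Brute-force nearest neighbour; adequate for a small illustrative cloud.
        closest = min(cloud, key=lambda p: math.dist(f, p))
        offset = tuple(c - a for a, c in zip(f, closest))
        features.append((math.dist(f, closest), offset))
    return features

# Hypothetical data: three observed surface points and two finger keypoints (metres).
cloud = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0)]
fingers = [(0.0, 0.0, 0.05), (0.12, 0.0, 0.0)]
features = nearest_surface_offsets(fingers, cloud)
for distance, offset in features:
    print(round(distance, 3), offset)
```

Because the features are expressed relative to the fingers, they do not depend on the object’s color or texture, and they change only when the local geometry near the hand changes.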

Multi-modal perception for uncertainty reduction. Instead of relying on vision alone, the policy combines the camera’s view with the hand’s “body awareness” (proprioception, or knowing where its joints are) and a reconstructed “touch sensation” to cross-check what it sees. It’s like how you might squint at something unclear, then reach out to touch it to be sure. This multi-sense approach allows the robot to handle tricky objects that would confuse vision-only systems: grasping a transparent glass becomes possible because the hand “knows” it’s there, even when the camera struggles to see it clearly.
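A rough sketch of how several sensing channels might be fused into a single policy input follows. The article does not say how the touch signal is reconstructed, so this example assumes one simple possibility: a joint is flagged as “in contact” when its measured position lags its commanded position by more than a threshold. The function names, the threshold, and the numbers are all hypothetical.

```python
def estimate_contacts(commanded, measured, threshold=0.05):
    """Flag a joint as in contact when it lags its command by more than threshold (rad)."""
    return [1.0 if abs(c - m) > threshold else 0.0 for c, m in zip(commanded, measured)]

def build_observation(surface_distances, measured_joints, contacts):
    """Concatenate vision-derived, proprioceptive, and contact features into one vector."""
    return [*surface_distances, *measured_joints, *contacts]

# Hypothetical readings for three joints.
commanded = [0.60, 0.60, 0.60]           # target joint angles (rad)
measured = [0.59, 0.48, 0.60]            # joint 2 is blocked by something
surface_distances = [0.02, 0.00, 0.03]   # vision-derived distances (m), possibly unreliable

contacts = estimate_contacts(commanded, measured)
observation = build_observation(surface_distances, measured, contacts)
print(contacts)
print(len(observation))
```

The point of the sketch is that the contact flag comes from the joints rather than the camera, so it can still fire when the visual channel misses an object such as a transparent glass.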

The results: from laboratory to reality

Trained on just 35 simulated objects, our system demonstrates strong real-world performance:

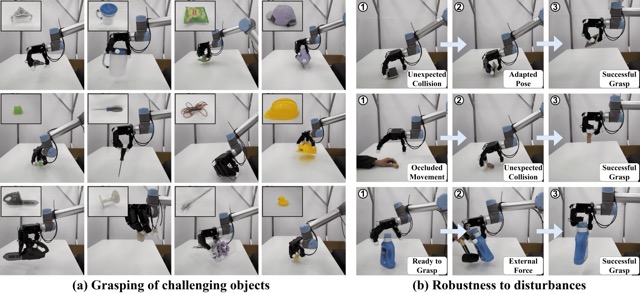

Generalization: It achieved a 94.6% success rate across a diverse test set of 512 real-world objects, including challenging items like thin boxes, heavy tools, transparent bottles, and soft toys.

Robustness: The robot could maintain a secure grip even when a significant external force (equivalent to a 250 g weight, or roughly 2.5 N) was applied to the grasped object, showing far greater resilience than previous state-of-the-art methods.

Adaptation: When objects were accidentally bumped or began to slip from its grasp, the policy dynamically adjusted finger positions and forces in real time to recover, showcasing a level of closed-loop control previously difficult to achieve.

Beyond picking things up: enabling a new era of robotic manipulation

RobustDexGrasp represents a crucial step toward closing the dexterity gap between humans and robots. By enabling robots to grasp nearly any object with human-like reliability, we’re unlocking new possibilities for robotic applications beyond grasping itself. We demonstrated how it can be seamlessly integrated with other AI modules to perform complex, long-horizon manipulation tasks:

Grasping in clutter: Using an object segmentation model to identify the target object, our method enables the hand to pick a specific item from a crowded pile despite interference from other objects.

Task-oriented grasping: With a vision language model as the high-level planner and our method providing the low-level grasping skill, the robot hand can execute grasps for specific tasks, such as cleaning up the table or playing chess with a human.

Dynamic interaction: Using an object tracking module, our method can successfully control the robot hand to grasp objects moving on a conveyor belt.

Looking ahead, we aim to overcome current limitations, such as handling very small objects (which requires a smaller, more anthropomorphic hand) and performing non-prehensile interactions like pushing. The journey to true robotic dexterity is ongoing, and we are excited to be part of it.

Read the work in full

- RobustDexGrasp: Robust Dexterous Grasping of General Objects, Hui Zhang, Zijian Wu, Linyi Huang, Sammy Christen, Jie Song.