Robohub.org

Healthcare’s regulatory AI conundrum

It was the last question of the night and it hushed the entire room. An entrepreneur expressed his aggravation about the FDA’s antiquated regulatory environment for AI-enabled devices to Dr. Joel Stein of Columbia University. Stein, a leader in rehabilitative robotic medicine, sympathized with the entrepreneur, knowing full well that tomorrow’s exoskeletons will rely heavily on machine intelligence. Nodding her head in agreement, Kate Merton of JLabs shared the sentiment. Her employer, Johnson & Johnson, is partnered with Google to revolutionize the operating room through embedded deep learning systems. In many ways this astute observation encapsulated RobotLab this past Tuesday, where our topic was “The Future Of Robotic Medicine”: the paradox of software-enabled therapeutics offering a better quality of life, and the societal, technological and regulatory challenges ahead.

To better understand the frustration expressed at RobotLab, a review of the policies of the Food & Drug Administration (FDA) relative to medical devices and software is required. Most devices fall within criteria that were established in the 1970s: the “build and freeze” model, whereby a product, once filed, doesn’t change over time. That model currently excludes therapies that rely on neural networks and deep learning algorithms that evolve with use. Charged with modernizing this regulatory environment, the Obama Administration established a Digital Health Program tasked with implementing new regulatory guidance for software and mobile technology. This initiative eventually led Congress to pass the 21st Century Cures Act (“Cures Act”) in December 2016. An important aspect of the Cures Act is its provisions for digital health products, medical software, and smart devices. The legislators singled out AI for its unparalleled ability to support human decision making, referred to as “Clinical Decision Support” (“CDS”), with examples like Google and IBM Watson. Last year, the administration updated the Cures Act with a new framework that included a Digital Health Innovation Action Plan. These steps have been leading a change in the FDA’s attitude towards mechatronics, updating its traditional approach to devices to include software and hardware that iterate with cognitive learning. The Action Plan states “an efficient, risk-based approach to regulating digital health technology will foster innovation of digital health products.” In addition, the FDA has been offering tech partners the ability to file for Digital Health Software Pre-Certification (“Pre-Cert”) to fast track the evaluation and approval process. Current Pre-Cert pilot filers include Apple, Fitbit, Samsung and other leading technology companies.

Another way for AI and robotic devices to receive approval from the FDA is through its “De Novo premarket review pathway.” According to the FDA’s website, the De Novo program is designed for “medical devices that are low to moderate risk and have no legally marketed predicate device to base a determination of substantial equivalence.” Many computer vision systems fall into the De Novo category, using their sensors to power “triage” software that efficiently identifies disease markers based upon training data of radiology images. As an example, last month the FDA approved Viz.ai, a new type of “clinical decision support software designed to analyze computed tomography (CT) results that may notify providers of a potential stroke in their patients.”

Dr. Robert Ochs of the FDA’s Center for Devices and Radiological Health explains, “The software device could benefit patients by notifying a specialist earlier thereby decreasing the time to treatment. Faster treatment may lessen the extent or progression of a stroke.” The Viz.ai algorithm has the ability to change the lives of the nearly 800,000 annual stroke victims in the USA. The data platform will enable clinicians to quickly identify patients at risk for stroke by analyzing thousands of CT brain scans for blood vessel blockages and then automatically sending alerts via text message to neurovascular specialists. Viz.ai promises to streamline the diagnosis process by cutting the time it traditionally takes for radiologists to review, identify and escalate high-risk cases to specialists.

Dr. Chris Mansi, Viz.ai CEO, says “The Viz.ai LVO Stroke Platform is the first example of applied artificial intelligence software that seeks to augment the diagnostic and treatment pathway of critically unwell stroke patients. We are thrilled to bring artificial intelligence to healthcare in a way that works alongside physicians and helps get the right patient, to the right doctor at the right time.” According to the FDA’s statement, Mansi’s company “submitted a study of only 300 CT scans that assessed the independent performance of the image analysis algorithm and notification functionality of the Viz.ai Contact application against the performance of two trained neuro-radiologists for the detection of large vessel blockages in the brain. Real-world evidence was used with a clinical study to demonstrate that the application could notify a neurovascular specialist sooner in cases where a blockage was suspected.”


Viz.ai joins a market for AI diagnosis software that is growing rapidly and projected to eclipse six billion dollars by 2021 (Frost & Sullivan), an increase of more than forty percent since 2014. According to the study, AI has the ability to reduce healthcare costs by nearly half, while at the same time improving the outcomes for a third of all US healthcare patients. However, diagnosis software is only part of the AI value proposition; adding learning algorithms throughout the entire healthcare ecosystem could provide new levels of quality of care.

At the same time, the demand for AI treatment is taking its toll on an underfunded FDA, which is having difficulty keeping up with new filings to review computer-aided therapies, from diagnosis to robotic surgery to invasive therapeutics. In addition, many companies are currently unable to afford the seven-figure investment required to file with the FDA, leading to missed opportunities to find cures for the most persistent diseases. The Atlantic reported last fall about a Canadian company, Cloud DX, that is still waiting for approval for its AI software that analyzes coughing data via audio wavelengths to detect lung-based diseases (e.g., asthma, tuberculosis, and pneumonia). Cloud DX’s founder, Robert Kaul, shared with the magazine, “There’s a reason that tech companies like Google haven’t been going the FDA route [of clinical trials aimed at diagnostic certification]. It can be a bureaucratic nightmare, and they aren’t used to working at this level of scrutiny and slowness.” It took Cloud DX two years and close to a million dollars to achieve the basic ISO 13485 certification required to begin filing with the agency. Kaul asked, “How many investors are going to give you that amount of money just so you can get to the starting line?”

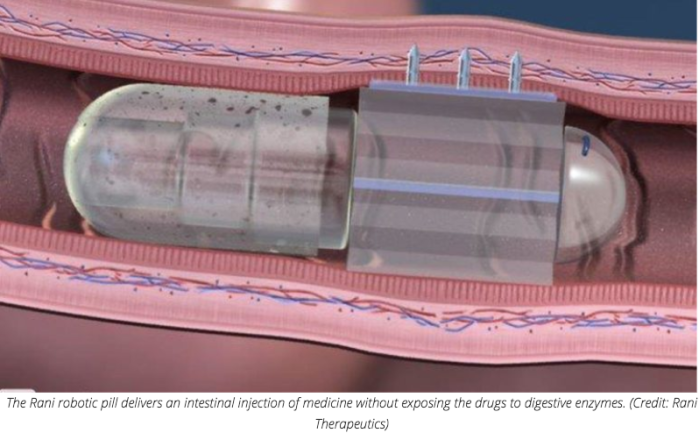

Last month, Rani Therapeutics raised $53 million to begin clinical trials for its new robotic pill. Rani’s solution could usher in a new paradigm of needle-free therapy, whereby drugs are mechanically delivered to the exact site of infection. Unfortunately, innovations like Rani’s are getting backlogged by a shortage of knowledgeable examiners able to review the clinical data. Bakul Patel, the FDA’s new Associate Center Director for Digital Health, says that hiring is one of his top priorities: “Yes, it’s hard to recruit people in AI right now. We have some understanding of these technologies. But we need more people. This is going to be a challenge.” Patel is cautiously optimistic: “We are evolving… The legacy model is the one we know works. But the model that works continuously, we don’t yet have something to validate that. So the question is [as much] scientific as regulatory: How do you reconcile real-time learning [with] people having the same level of trust and confidence they had yesterday?”

As I concluded my discussion with Stein, I asked whether he thought disabled people would eventually commute to work wearing robotic exoskeletons as easily as they do in electric wheelchairs. He answered that it could happen within the next decade if society changes its mindset on how we distribute and pay for such therapies. To quote the President, “Nobody knew health care could be so complicated.”
