Robohub.org

An origami robot for touching virtual reality objects

A group of EPFL researchers have developed a foldable device that can fit in a pocket and can transmit touch stimuli when used in a human-machine interface.


When browsing an e-commerce site on your smartphone, or a music streaming service on your laptop, you can see pictures and hear sound snippets of what you are going to buy. But sometimes it would be great to touch it too – for example to feel the texture of a garment, or the stiffness of a material. The problem is that there are no miniaturized devices that can render touch sensations the way screens and loudspeakers render sight and sound, and that can easily be coupled to a computer or a mobile device.

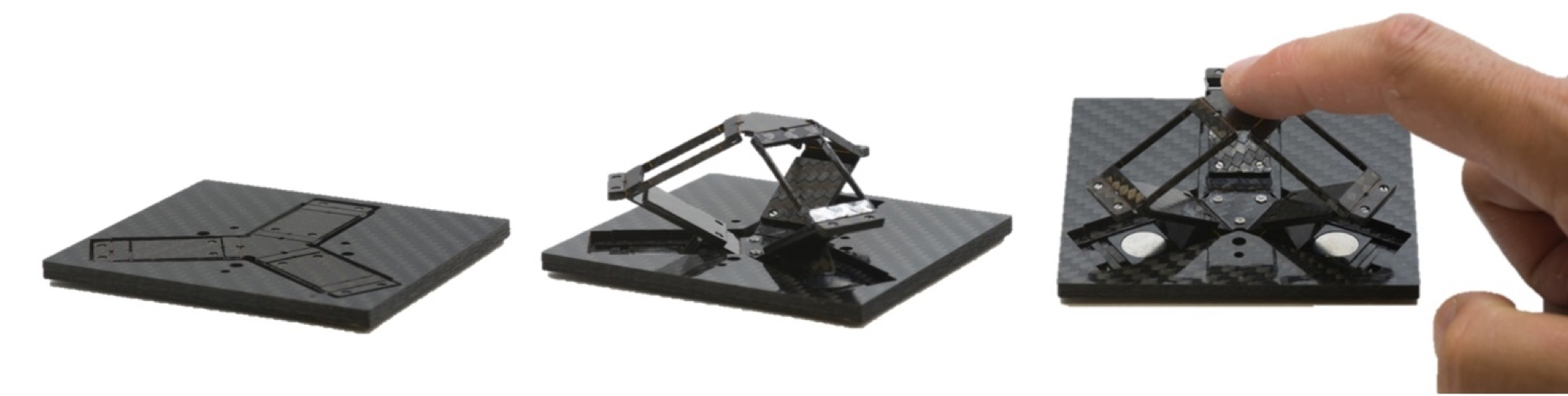

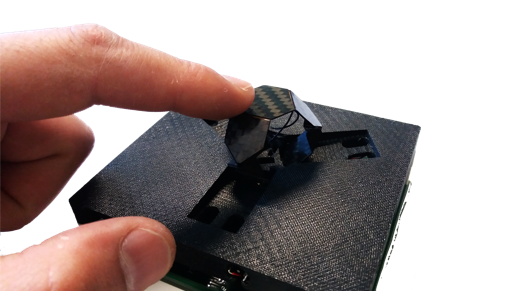

Researchers in Professor Jamie Paik’s lab at EPFL have taken a step towards creating just that: a foldable device that can fit in a pocket and can transmit touch stimuli when used in a human-machine interface. Called Foldaway, this miniature robot is based on origami robotics, which makes it easy to miniaturize and manufacture. Because it starts off as a flat structure, it can be printed with a technique similar to the one used for electronic circuits, and it can be easily stored and transported. At the time of deployment, the flat structure folds along a predefined pattern of joints into the desired 3D, button-like shape. The device includes three actuators that generate movements, forces and vibrations in various directions; a moving origami mechanism on the tip that transmits sensations to the user’s finger; sensors that track the movements of the finger; and electronics that control the whole system. In this way, the device can render different touch sensations that reproduce physical interaction with objects or forms.

The Foldaway device, which is described in an article in the December issue of Nature Machine Intelligence and is featured on the journal’s cover, comes in two versions, called Delta and Pushbutton. “The first one is more suitable for applications that require large movements of the user’s finger as input,” says Stefano Mintchev, a member of Jamie Paik’s lab and co-author of the paper. “The second one is smaller, therefore pushing portability even further without sacrificing the force sensations transmitted to the user’s finger.”

Education, virtual reality and drone control

The researchers have tested their devices in three scenarios. In an educational context, they showed that a portable interface, measuring less than 10 cm in length and width and 3 cm in height, can be used to navigate an atlas of human anatomy. The Foldaway device gives the user different sensations as it passes over various organs: the different stiffness of the soft lungs and the hard bones of the rib cage, the up-and-down movement of the heartbeat, and sharp variations of stiffness along the trachea.

As a virtual reality joystick, the Foldaway can give the user the sensation of grasping virtual objects and perceiving their stiffness, by modulating the force generated when the interface is pressed.
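A common way for haptic interfaces to render stiffness is a virtual spring: the feedback force grows in proportion to how far the user presses into the virtual surface (F = k·x), so a stiffer object pushes back harder for the same press depth. The short Python sketch below assumes that model. The function name, stiffness values and force limit are hypothetical illustrations, not Foldaway's actual controller or parameters.

```python
def spring_force(penetration_mm: float, stiffness_n_per_mm: float,
                 max_force_n: float) -> float:
    """Feedback force (N) for a hypothetical virtual-spring stiffness model.

    penetration_mm: how far the fingertip has pressed past the virtual
    surface; zero or negative means the finger is not in contact.
    """
    if penetration_mm <= 0.0:
        return 0.0  # no contact with the virtual object, no force
    force = stiffness_n_per_mm * penetration_mm  # Hooke's law, F = k * x
    return min(force, max_force_n)  # clamp to an assumed actuator limit


# Hypothetical stiffness values for a "soft" and a "hard" virtual object.
SOFT_N_PER_MM = 0.2
HARD_N_PER_MM = 2.0
MAX_FORCE_N = 3.0  # assumed maximum force the actuators can deliver

for depth in (0.0, 1.0, 2.0):
    soft = spring_force(depth, SOFT_N_PER_MM, MAX_FORCE_N)
    hard = spring_force(depth, HARD_N_PER_MM, MAX_FORCE_N)
    print(f"depth {depth} mm: soft {soft:.2f} N, hard {hard:.2f} N")
```

At the same press depth the "hard" object returns a larger force, which is what lets the user tell the two apart; the clamp reflects the fact that any real actuator has a finite force range.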

As a control interface for drones, the device can help resolve the sensory mismatch that arises when users control a drone with their hands but can perceive its response only through visual feedback. Two Pushbuttons can be combined, and their rotation can be mapped into commands for altitude, lateral and forward/backward movements. The interface also provides force feedback to the user’s fingertips to increase the pilot’s awareness of the drone’s behaviour and of the effects of wind or other environmental factors.
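The article does not say exactly how the two Pushbuttons' rotations are assigned to flight commands. The Python sketch below shows one hypothetical assignment, assuming each Pushbutton reports two tilt angles (pitch and roll, in degrees) and that commands are normalized to the range [-1, 1]. The axis assignment, tilt range and dead zone are illustrative assumptions, and the force-feedback side is not modelled.

```python
from dataclasses import dataclass


@dataclass
class Tilt:
    """Rotation of one Pushbutton's tip in degrees (hypothetical sensor reading)."""
    pitch: float
    roll: float


@dataclass
class DroneCommand:
    altitude: float  # -1 (descend) .. 1 (climb)
    lateral: float   # -1 (left) .. 1 (right)
    forward: float   # -1 (backward) .. 1 (forward)


MAX_TILT_DEG = 20.0   # assumed mechanical range of the tip
DEAD_ZONE_DEG = 1.0   # ignore small, unintended rotations


def normalize(angle_deg: float) -> float:
    """Map an angle to [-1, 1], returning 0 inside the dead zone."""
    if abs(angle_deg) < DEAD_ZONE_DEG:
        return 0.0
    return max(-1.0, min(1.0, angle_deg / MAX_TILT_DEG))


def map_to_command(left: Tilt, right: Tilt) -> DroneCommand:
    # Hypothetical assignment: the left button's pitch sets altitude,
    # the right button's roll and pitch set lateral and forward motion.
    return DroneCommand(
        altitude=normalize(left.pitch),
        lateral=normalize(right.roll),
        forward=normalize(right.pitch),
    )


cmd = map_to_command(Tilt(pitch=10.0, roll=0.0), Tilt(pitch=-20.0, roll=0.5))
print(cmd)
```

Splitting the three axes across two buttons keeps each fingertip responsible for at most two directions, and the dead zone stops small accidental rotations from moving the drone.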

The Foldaway device was developed by the Reconfigurable Robotics Lab at EPFL and is currently being prepared for commercialisation by a spin-off, FOLDAWAY Haptics, which was supported by a Spin Fund grant from NCCR Robotics.

“Now that computing devices and robots are more ubiquitous than ever, the quest for human-machine interactions is growing rapidly,” adds Marco Salerno, a member of Jamie Paik’s lab and co-author of the paper. “The miniaturization offered by origami robots can finally allow the integration of rich touch feedback into everyday interfaces, from smartphones to joysticks, or the development of completely new ones such as interactive morphing surfaces.”

Literature

Mintchev, S., Salerno, M., Cherpillod, A. et al. “A portable three-degrees-of-freedom force feedback origami robot for human–robot interactions.” Nature Machine Intelligence 1, 584–593 (2019). doi:10.1038/s42256-019-0125-1