Robohub.org

AWE2013: What is the augmented world?

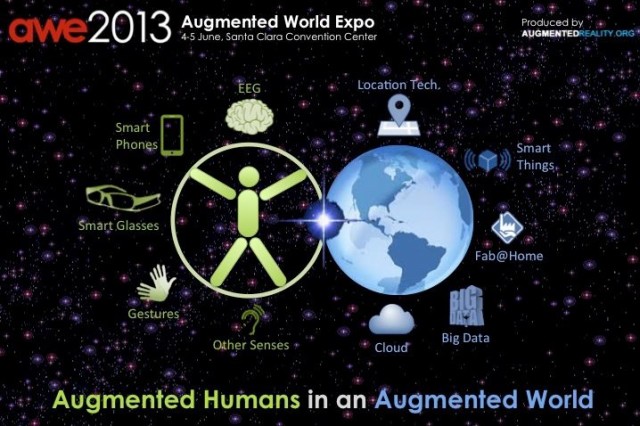

Augmented reality and virtual reality have been overhyped and underdelivering for years, but there are many indications that things are changing. It’s not just that Google Glass has been on the streets for a year now. Well, a select few have had Google Glass for a year already and the cut-down consumer version is predicted for 2013/2014. There are also about 10 other versions on display here. I’m at the Augmented World Expo in Santa Clara with 1000+ AR professionals from 30+ countries, seeing 100+ demos and hearing 110 speakers discuss what is happening for augmented humans in the augmented world.

While this is important to us all personally and socially, it also overlaps with robotics in several ways. Robotics research, particularly computer vision, underpins a lot of AR progress to date, especially as we transition from using markers to keypoints or natural markers. But it’s really all about the numbers, in the same way that Kinect, an affordable commercial off-the-shelf (COTS) product, has had an impact far beyond the gaming community. Gaming, entertainment, retail and AR are all contributing to the digitization of the physical world. Every speaker shared big numbers on AR uptake that parallel the uptake of computers or the internet as they moved beyond early adopters into the early majority stage.

This on its own is important for robotics, but can also be seen in conjunction with the potential for robotics to be incorporated as a mechanism in the multitude of new devices and displays. As we create larger and more varied interactive environments, we will increasingly require these environments to move with us, to sense and respond. As we become used to new interfaces with our devices, we will develop more object-to-object grammars. I find the ability of AR to imbue dumb objects with smarts fascinating. This goes beyond visual trickery and allows us to turn any object into something that has a digital or control relationship with any other object or machine.

A clear message from every speaker was a maturation of AR away from adding visual trickery and towards augmented perception, or providing a data visualization tool for our increasingly digitized quantified lives. This more subtle AR explores the layers of information we already have in the real world and allows for a more ambient or intuitive interface. One example is a startup demoing in the hardware alley, Lightup.io.

LightUp is both a real electronics kit, using magnetic snap-together construction, and an app that shows you the flow of electrons when your circuit is working and what’s wrong when it isn’t. These guys didn’t even think they were AR. They just used the tools at hand to build a good teaching experience, and are demoing because they’re halfway through their Kickstarter campaign and want exposure. LightUp are Haxlr8r startup accelerator veterans and have put a lot of customer testing into creating a great educational electronics product. I’ve often wished that I could see the electricity. It will be interesting to see their next iteration, which may be more robotic.

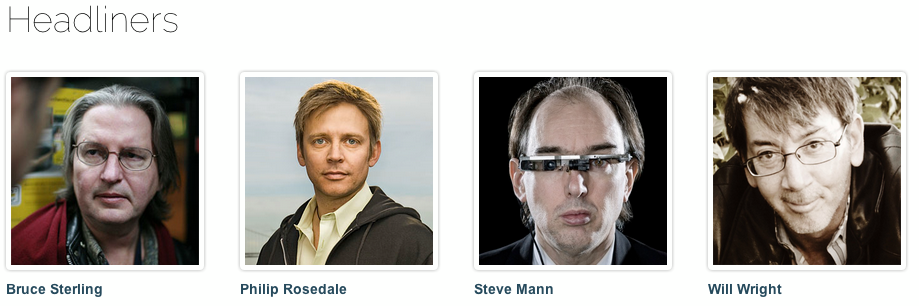

The Day One keynotes opened with Bruce Sterling, science fiction writer and AR visionary. Sterling shared his awe for a world in which our reality was 4 billion tweets a day and the economic colossi were tech companies. He said that people always asked him if it was the dawn of AR yet. “It’s not the dawn of AR, it’s 10.45 am on what’s turning out to be a hot and turbulent summer’s day.”

Sterling said that we’re on the brink of breakthroughs that will make the last 5 years of AR look like SIGGRAPH in the 80s. Most people I talked to were saying variations of “Some very big companies are about to come out with… I can’t say more but I’ve seen the product roadmap.”

Bruce also gave a hat tip to Gang Huang’s lensless compressive camera from Bell Labs, the launch of Processing 2.0, the launch of Intel Capital’s $100 million perceptual computing fund, and the teaser for Intel’s ‘answer to Kinect and Leap’, not one but two 3D depth cameras.

Tomi Ahonen, mobile industry analyst, shared some numbers on AR uptake in comparison to mobile and is bullishly predicting 1 billion users by 2020. He points out that we’re only just accepting that mobile is no longer a subset of telecommunications or the internet but a medium and industry of its own. He noted that mobile was not commercialized until 1998, and yet in 2012 there were 7 billion mobile phone accounts and 2.5 billion consumers of paid mobile media.

In 2001, 3 years from launch, mobile had 10 million paid consumers. AR is 3 years from first commercialization and now also has approximately 10 million paying consumers. iButterfly in Hong Kong has 7% of smartphone users and is expanding to Singapore, Thailand and the Philippines. In the Netherlands, 14% of phone users have downloaded Layar. With three years of data in hand, Ahonen is confident that AR is on track to be twice the size of combined free and paid newspaper circulation with 1 billion users by 2020.

Avi Bar-Zeev gave an interesting presentation on augmented perception as a way of giving us super powers, followed by Amber Case calling us all cyborgs already. Case has done a lot of interesting work in geolocation and AR, which she sees as a natural partner to the quantified self or ‘big mother’ movement. Case is looking for technology that improves our lives while getting out of our way, technology that is invisible.

Ben Cerveny challenged our understanding of space from an ontological level downwards and described seeing a comparative return to narrative-based interactions as opposed to graphical ones. Tish Shute identified some other trends: the rise of wearables, crowdfunding creating a ‘new kind of store’ that gives fast feedback on what people want, and projects like the Eidos Masks altering human perception.

Mike Kuniavsky of PARC, Natan Linder of MIT Media Lab and Peter Meier of metaio spoke about form factors in an augmented world. The LuminAR robotic projector from the MIT Media Lab’s Fluid Interfaces group “reinvents the traditional incandescent bulb and desk lamp, evolving them into a new category of robotic, digital information devices. The LuminAR Bulb combines a Pico-projector, camera, and wireless computer in a compact form factor. This self-contained system enables users with just-in-time projected information and a gestural user interface, and it can be screwed into standard light fixtures everywhere. The LuminAR Lamp is an articulated robotic arm, designed to interface with the LuminAR Bulb. Both LuminAR form factors dynamically augment their environments with media and information, while seamlessly connecting with laptops, mobile phones, and other electronic devices.”

Will Wright, creator of the Sims and now Stupid Fun Club, spoke about how much data is coming in through our senses, yet how little of it we make use of. He theorizes that intelligence is a filtering function and that maybe what we want out of augmented reality is really more of a ‘decimated reality’. Wright is looking for AR that not only creates more layers in our world but also actively filters out unwanted information. He calls it ‘customized reality’. Wright is adamant that AR is not just a better internet browser, but something that has a different perceptual interface and that can and should create emotional interactions, if we choose.

Philip Rosedale, cofounder of Second Life and now founder of highfidelity.io, spoke about how we’ve got the speed and scale problems close to solved. He said they’ve got latency down to a point where virtual face to face communication is as natural as physical, at least for talking and looking. Kissing requires the fastest response. Rosedale gave us the first public demo of highfidelity’s work, with his real-time head movements superimposed on an avatar. Rosedale’s also thinking a lot about Minecraft and turning the world into small cubes of data.

If this short summary of Day One of AWE2013 leaves you wanting more, there are supplementary videos up on the AugmentedReality.org channel.

tags: events