Robohub.org

BDD100K: A large-scale diverse driving video database

By Fisher Yu

TL;DR: we released the largest and most diverse driving video dataset with rich annotations, called BDD100K. You can access the data for research now at http://bdd-data.berkeley.edu. We have recently released an arXiv report on it. And there is still time to participate in our CVPR 2018 challenges!

Large-scale, Diverse, Driving, Video: Pick Four

Autonomous driving is poised to change life in every community. However, recent events show that it is not yet clear how a man-made perception system can avoid even seemingly obvious mistakes when a driving system is deployed in the real world. As computer vision researchers, we are interested in exploring the frontiers of perception algorithms for self-driving to make it safer. To design and test potential algorithms, we would like to make use of all the information from the data collected by a real driving platform. Such data has four major properties: it is large-scale, diverse, captured on the street, and carries temporal information. Data diversity is especially important to test the robustness of perception algorithms. However, current open datasets can only cover a subset of the properties described above. Therefore, with the help of Nexar, we are releasing the BDD100K database, which is the largest and most diverse open driving video dataset so far for computer vision research. This project is organized and sponsored by the Berkeley DeepDrive Industry Consortium, which investigates state-of-the-art technologies in computer vision and machine learning for automotive applications.

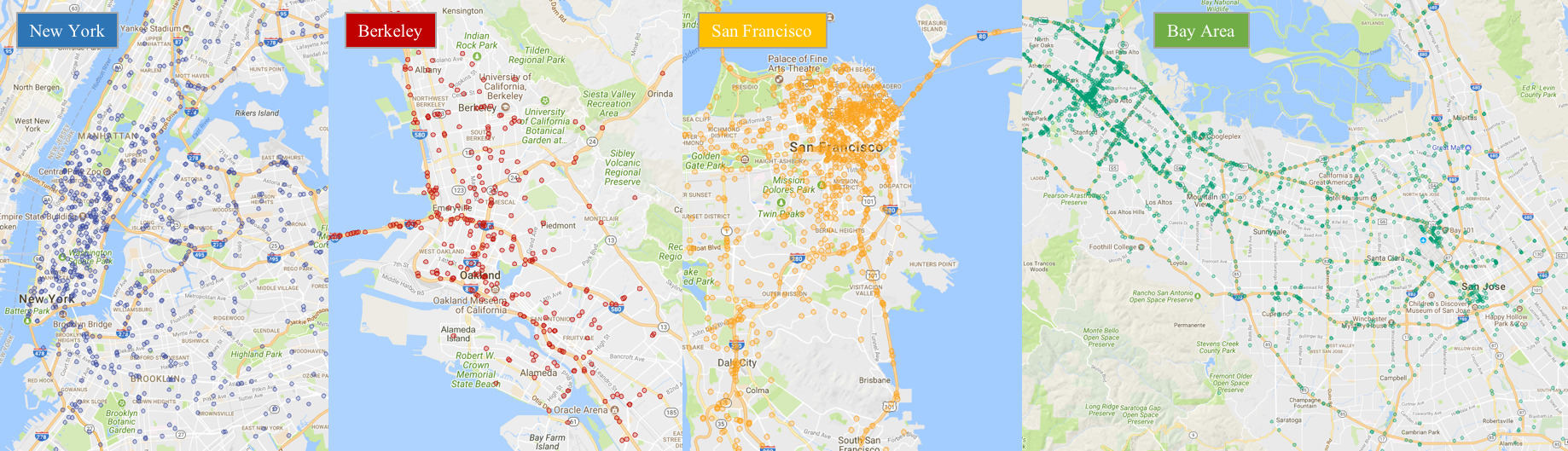

Locations of a random video subset.

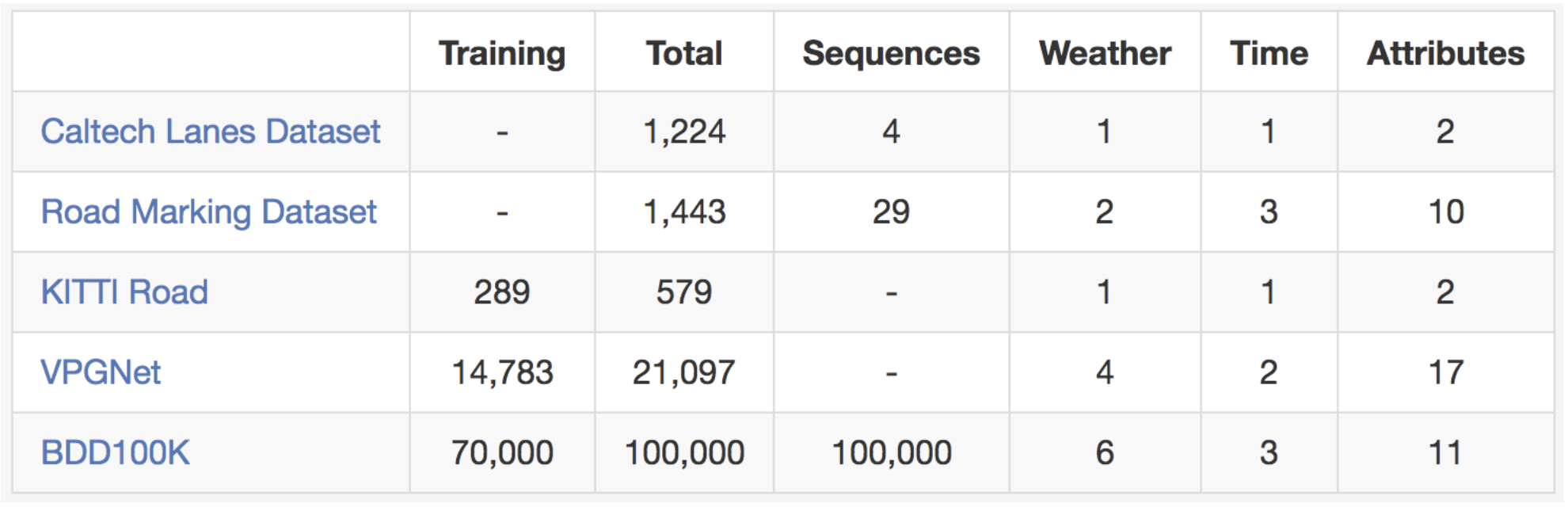

As suggested in the name, our dataset consists of 100,000 videos. Each video is about 40 seconds long, 720p, and 30 fps. The videos also come with GPS/IMU information recorded by cell phones to show rough driving trajectories. Our videos were collected from diverse locations in the United States, as shown in the figure above. Our database covers different weather conditions, including sunny, overcast, and rainy, as well as different times of day, including daytime and nighttime. The table below summarizes comparisons with previous datasets, which shows our dataset is much larger and more diverse.

Comparisons with some other street scene datasets. It is hard to fairly compare the number of images between datasets, but we list them here as a rough reference.
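For a rough sense of scale, the video specifications above imply the totals computed below. This is a back-of-the-envelope sketch that assumes every video is exactly 40 seconds long at 30 fps. The text only says "about 40 seconds", so the real totals will differ somewhat. The constant and function names are illustrative, not part of any released toolkit.

```python
NUM_VIDEOS = 100_000
SECONDS_PER_VIDEO = 40  # assumption: "about 40 seconds" treated as exact
FPS = 30


def dataset_scale(num_videos=NUM_VIDEOS, seconds=SECONDS_PER_VIDEO, fps=FPS):
    """Return approximate total footage in hours and total frame count."""
    total_seconds = num_videos * seconds
    return {"hours": total_seconds / 3600, "frames": total_seconds * fps}


scale = dataset_scale()
print(f"{scale['hours']:.0f} hours, {scale['frames']:,} frames")
# prints "1111 hours, 120,000,000 frames"
```

Under these assumptions the videos add up to roughly 1,100 hours of driving footage.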

The videos and their trajectories can be useful for imitation learning of driving policies, as in our CVPR 2017 paper. To facilitate computer vision research on our large-scale dataset, we also provide basic annotations on the video keyframes, as detailed in the next section. You can download the data and annotations now at http://bdd-data.berkeley.edu.

Annotations

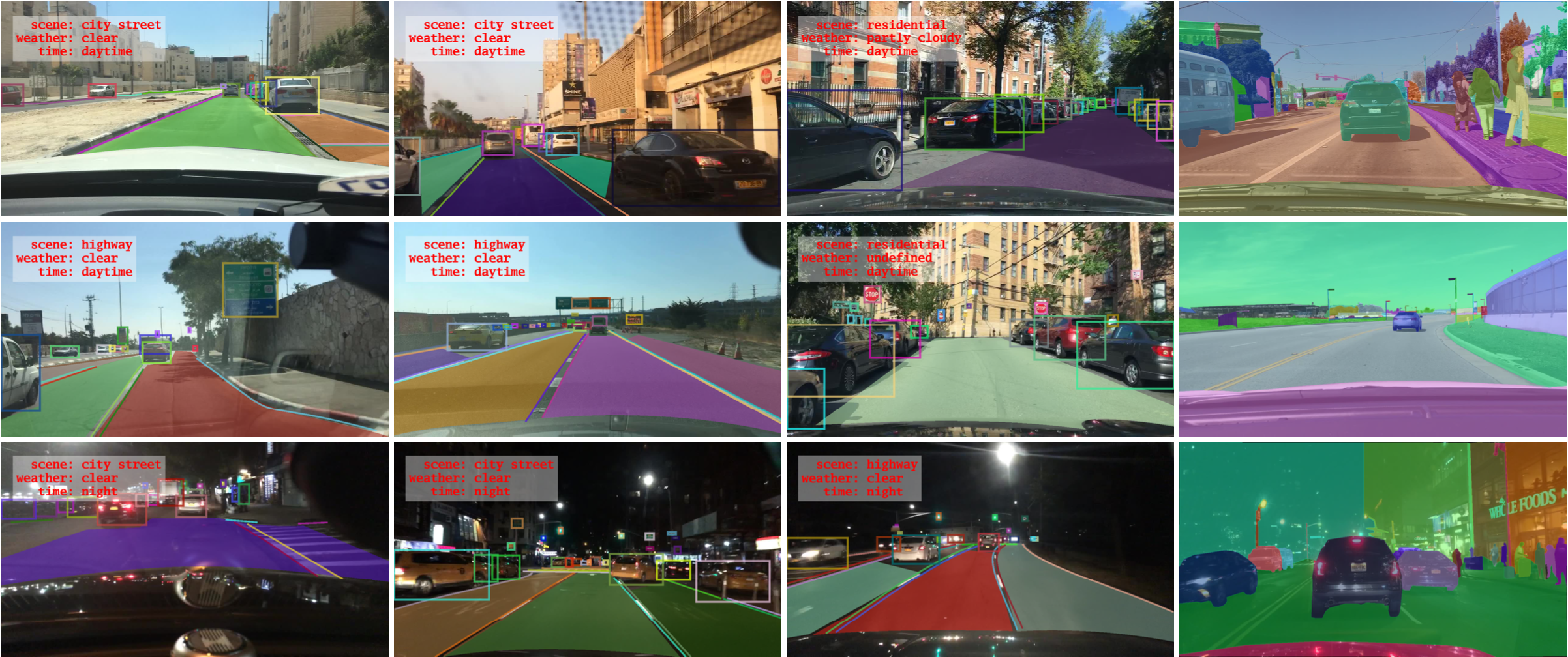

We sample a keyframe at the 10th second from each video and provide annotations for those keyframes. They are labeled at several levels: image tagging, road object bounding boxes, drivable areas, lane markings, and full-frame instance segmentation. These annotations will help us understand the diversity of the data and object statistics in different types of scenes. We will discuss the labeling process in a different blog post. More information about the annotations can be found in our arXiv report.
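To locate such a keyframe in a decoded video, a timestamp can be converted to a frame index. The sketch below assumes a constant frame rate and zero-based frame numbering. The exact frame picked for the released annotations is not specified here, and `keyframe_index` is a hypothetical helper name.

```python
def keyframe_index(timestamp_s: float = 10.0, fps: float = 30.0) -> int:
    """Zero-based index of the frame displayed at `timestamp_s` seconds.

    Assumes a constant frame rate; real videos may drop or duplicate frames.
    """
    if timestamp_s < 0 or fps <= 0:
        raise ValueError("timestamp must be non-negative and fps positive")
    return round(timestamp_s * fps)


print(keyframe_index())  # prints 300
```

For a 40-second clip at 30 fps, this places the keyframe a quarter of the way into the roughly 1,200 frames.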

Overview of our annotations.

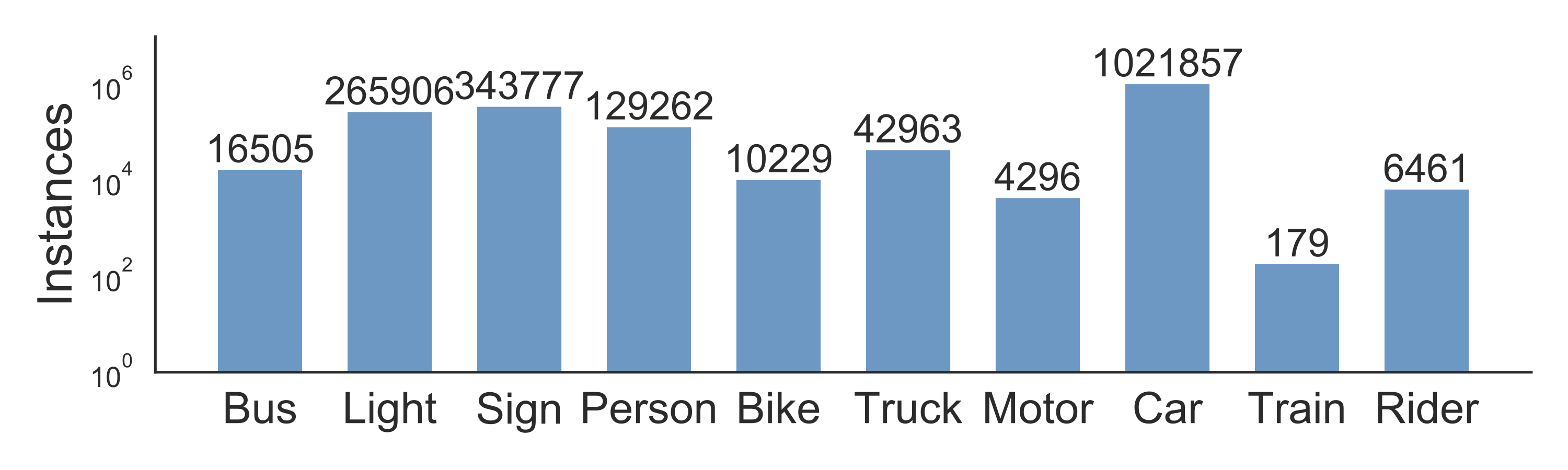

Road Object Detection

We label bounding boxes for objects that commonly appear on the road on all of the 100,000 keyframes to understand the distribution of the objects and their locations. The bar chart below shows the object counts. There are also other ways to play with the statistics in our annotations. For example, we can compare the object counts under different weather conditions or in different types of scenes. This chart also shows the diverse set of objects that appear in our dataset, and the scale of our dataset: more than 1 million cars. The reader should be reminded here that those are distinct objects with distinct appearances and contexts.
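Comparing object counts across weather conditions, as described above, comes down to a grouped tally. The sketch below runs on a few made-up records. The field names (`weather`, `objects`) and the values are assumptions for illustration and do not reflect the released annotation format.

```python
from collections import Counter, defaultdict

# Hypothetical keyframe records; field names and values are illustrative only.
frames = [
    {"weather": "rainy", "objects": ["car", "car", "person"]},
    {"weather": "sunny", "objects": ["car", "traffic sign"]},
    {"weather": "rainy", "objects": ["car", "bus"]},
]


def counts_by_weather(records):
    """Tally object categories separately for each weather tag."""
    counts = defaultdict(Counter)
    for record in records:
        counts[record["weather"]].update(record["objects"])
    return counts


counts = counts_by_weather(frames)
print(counts["rainy"]["car"])  # prints 3
```

The same grouping works for any image-level tag, such as scene type or time of day.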

Statistics of different types of objects.

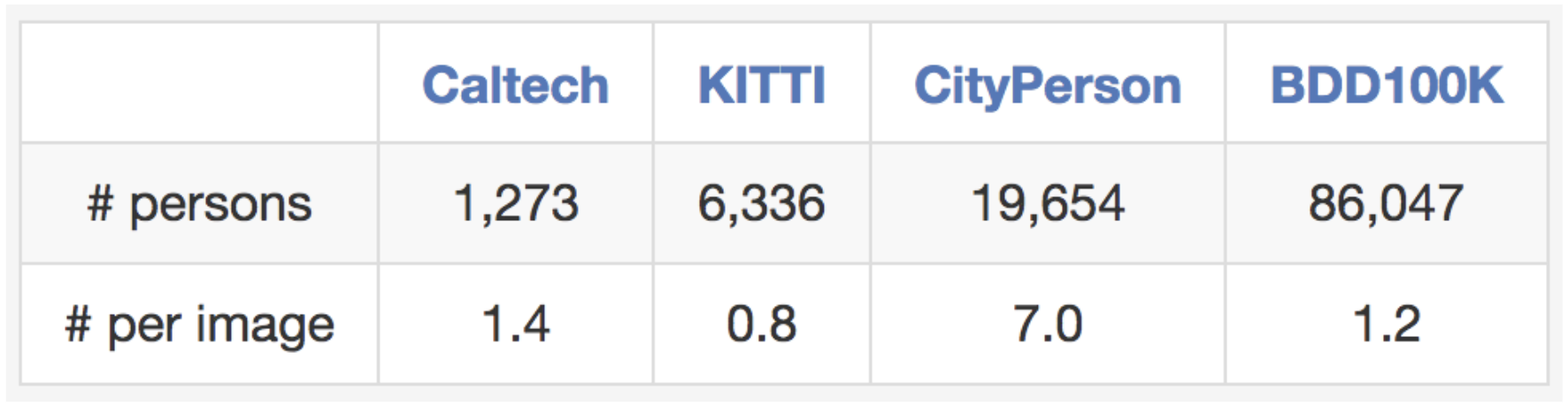

Our dataset is also suitable for studying some particular domains. For example, if you are interested in detecting and avoiding pedestrians on the streets, you also have a reason to study our dataset, since it contains more pedestrian instances than previous specialized datasets, as shown in the table below.

Comparisons with other pedestrian datasets regarding training set size.

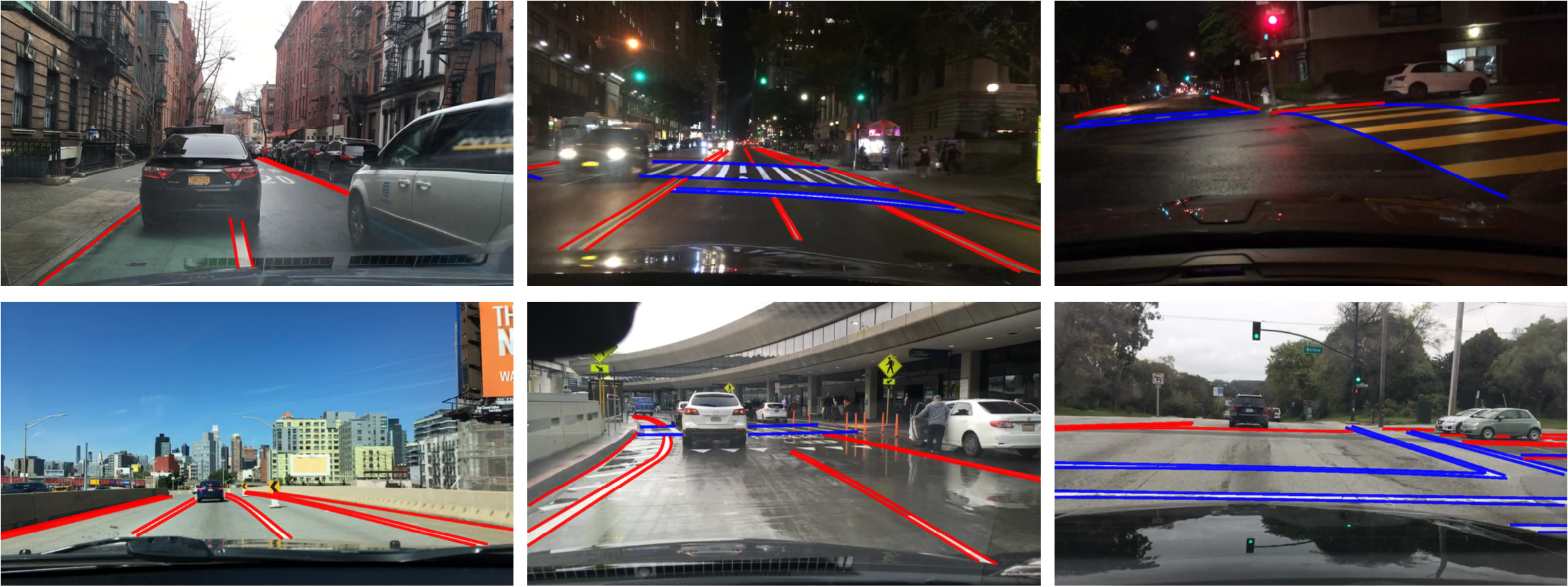

Lane Markings

Lane markings are important road instructions for human drivers. They are also critical cues of driving direction and localization for autonomous driving systems when GPS or maps do not have accurate global coverage. We divide the lane markings into two types based on how they instruct the vehicles in the lanes. Vertical lane markings (marked in red in the figures below) indicate markings that are along the driving direction of their lanes. Parallel lane markings (marked in blue in the figures below) indicate those that are for the vehicles in the lanes to stop. We also provide attributes for the markings such as solid vs. dashed and double vs. single.
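One way to picture this taxonomy is as a small record type with one field per attribute. The sketch below is only an illustration of the categories named above. The class and field names are hypothetical and are not taken from the released label files.

```python
from dataclasses import dataclass
from enum import Enum


class Direction(Enum):
    VERTICAL = "vertical"  # runs along the driving direction of the lane
    PARALLEL = "parallel"  # tells vehicles in the lane to stop


class Style(Enum):
    SOLID = "solid"
    DASHED = "dashed"


@dataclass(frozen=True)
class LaneMarking:
    """Hypothetical container for the attributes described in the text."""
    direction: Direction
    style: Style
    is_double: bool

    def signals_stop(self) -> bool:
        return self.direction is Direction.PARALLEL


center_line = LaneMarking(Direction.VERTICAL, Style.SOLID, is_double=True)
stop_line = LaneMarking(Direction.PARALLEL, Style.SOLID, is_double=False)
print(center_line.signals_stop(), stop_line.signals_stop())  # prints "False True"
```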

If you are ready to try out your lane marking prediction algorithms, please look no further. Here is the comparison with existing lane marking datasets.

Drivable Areas

Whether we can drive on a road does not only depend on lane markings and traffic devices. It also depends on the complicated interactions with other objects sharing the road. In the end, it is important to understand which area can be driven on. To investigate this problem, we also provide segmentation annotations of drivable areas as shown below. We divide the drivable areas into two categories based on the trajectories of the ego vehicle: direct drivable, and alternative drivable. Direct drivable, marked in red, means the ego vehicle has the road priority and can keep driving in that area. Alternative drivable, marked in blue, means the ego vehicle can drive in the area, but has to be cautious since the road priority potentially belongs to other vehicles.

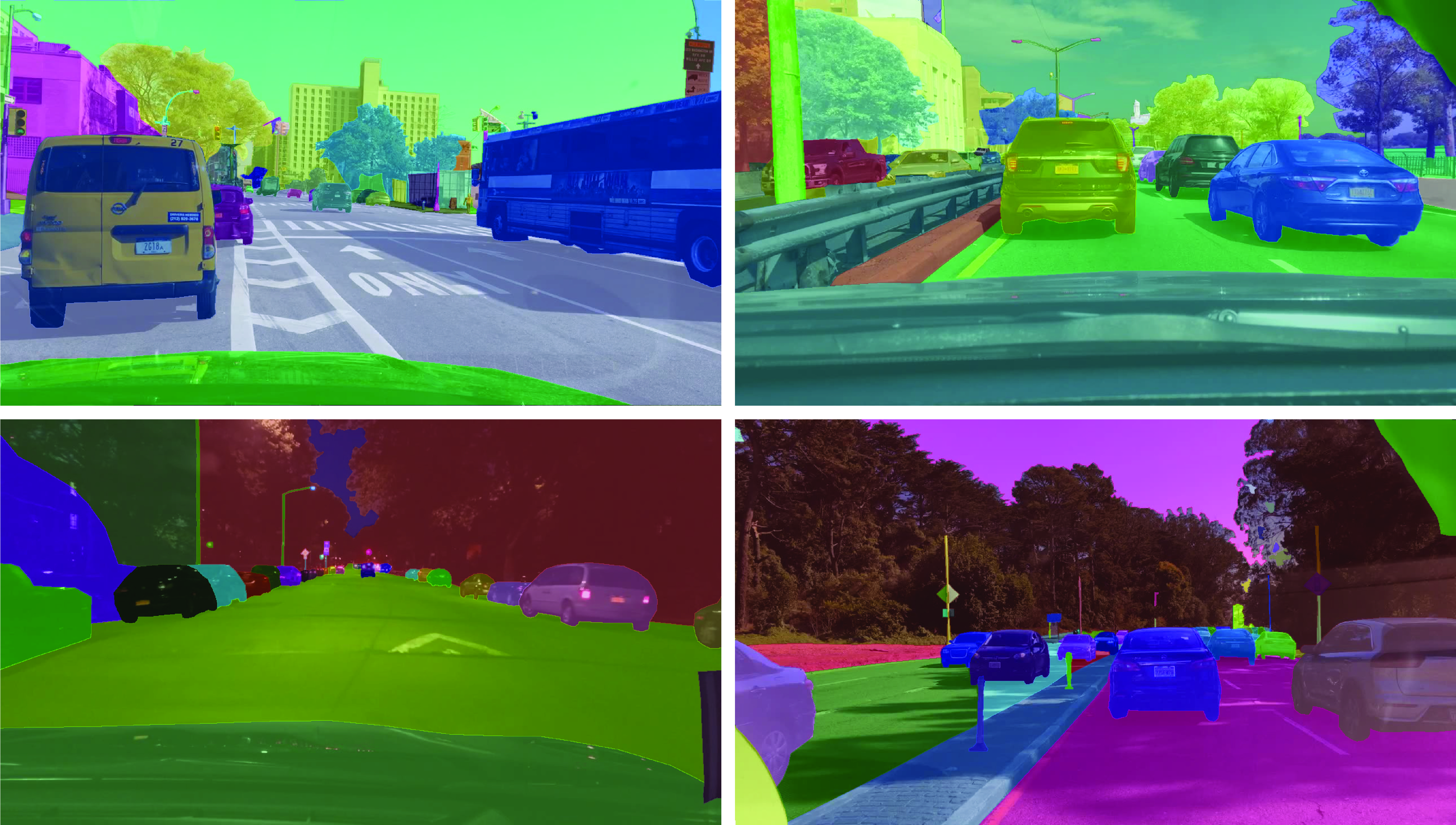
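To make the two drivable-area categories concrete, the sketch below measures how much of a toy label mask falls into each one. The integer encoding (0 for background, 1 for direct, 2 for alternative) and the function name are assumptions chosen for illustration. The released annotations may encode the categories differently.

```python
from collections import Counter

# Hypothetical pixel encoding, chosen for this example only.
BACKGROUND, DIRECT, ALTERNATIVE = 0, 1, 2


def area_fractions(mask):
    """Fraction of pixels labeled direct and alternative drivable."""
    counts = Counter(pixel for row in mask for pixel in row)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("mask is empty")
    return {
        "direct": counts[DIRECT] / total,
        "alternative": counts[ALTERNATIVE] / total,
    }


# A tiny 4x4 mask: the ego lane in the middle, neighboring lanes at the sides.
mask = [
    [0, 0, 0, 0],
    [2, 1, 1, 0],
    [2, 1, 1, 0],
    [2, 1, 1, 2],
]
print(area_fractions(mask))  # prints {'direct': 0.375, 'alternative': 0.25}
```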

Full-frame Segmentation

It has been shown on the Cityscapes dataset that full-frame fine instance segmentation can greatly bolster research in dense prediction and object detection, which are pillars of a wide range of computer vision applications. As our videos are in a different domain, we provide instance segmentation annotations as well, so that the domain shift between different datasets can be compared. It can be expensive and laborious to obtain full pixel-level segmentation. Fortunately, with our own labeling tool, the labeling cost could be reduced by 50%. In the end, we label a subset of 10K images with full-frame instance segmentation. Our label set is compatible with the training annotations in Cityscapes to make it easier to study domain shift between the datasets.

Driving Challenges

We are hosting three challenges at the CVPR 2018 Workshop on Autonomous Driving based on our data: road object detection, drivable area prediction, and domain adaptation of semantic segmentation. The detection task requires your algorithm to find all of the target objects in our testing images, and drivable area prediction requires segmenting the areas a car can drive in. In domain adaptation, the testing data is collected in China. Systems are thus challenged to get models learned in the US to work in the crowded streets of Beijing, China. You can submit your results now after logging in to our online submission portal. Make sure to check out our toolkit to jump-start your participation.

Join our CVPR workshop challenges to claim your cash prizes!!!

Future Work

The perception system for self-driving is by no means only about monocular videos. It may also include panorama and stereo videos as well as other types of sensors like LiDAR and radar. We hope to provide and study those multi-modality sensor data as well in the near future.

Reference Links

Caltech, KITTI, CityPerson, Cityscapes, ApolloScape, Mapillary, Caltech Lanes Dataset, Road Marking Dataset, KITTI Road, VPGNet

This article was initially published on the BAIR blog, and appears here with the authors’ permission.