Robohub.org

California’s AV testing rules apply to Tesla’s “FSD”

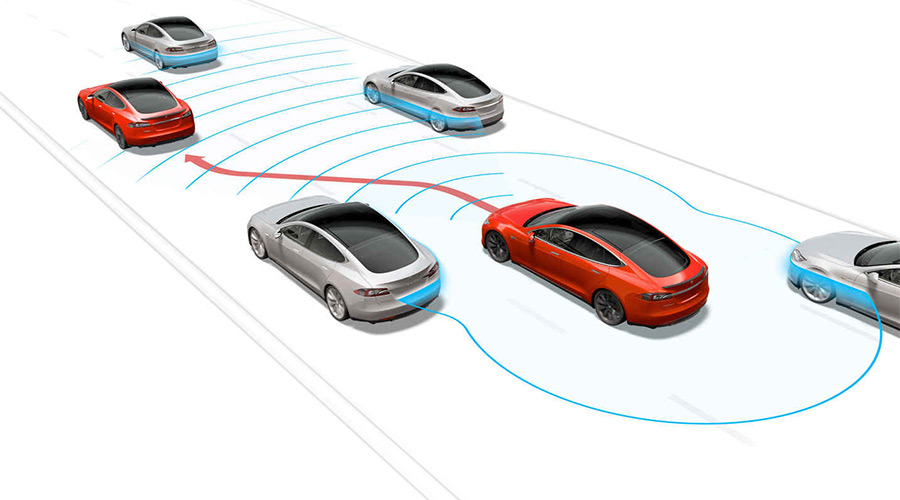

Tesla Motors autopilot (photo: Tesla)

Five years to the day after I criticized Uber for testing its self-proclaimed “self-driving” vehicles on California roads without complying with the testing requirements of California’s automated driving law, I find myself criticizing Tesla for testing its self-proclaimed “full self-driving” vehicles on California roads without complying with the testing requirements of California’s automated driving law.

As I emphasized in 2016, California’s rules for “autonomous technology” necessarily apply to inchoate automated driving systems that, in the interest of safety, still use human drivers during on-road testing. “Autonomous vehicles testing with a driver” may be an oxymoron, but as a matter of legislative intent it cannot be a null set.

There is even a way to mortar the longstanding linguistic loophole in California’s legislation: Automated driving systems undergoing development arguably have the “capability to drive a vehicle without the active physical control or monitoring by a human operator” even though they do not yet have the demonstrated capability to do so safely. Hence the human driver.

(An imperfect analogy: Some kids can drive vehicles, but it’s less clear they can do so safely.)

When supervised by that (adult) human driver, these nascent systems function like the advanced driver assistance features available in many vehicles today: They merely work unless and until they don’t. This is why I distinguish between the aspirational level (what the developer hopes its system can eventually achieve) and the functional level (what the developer assumes its system can currently achieve).

(SAE J3016, the source for the (in)famous levels of driving automation, similarly notes that “it is incorrect to classify” an automated driving feature as a driver assistance feature “simply because on-road testing requires” driver supervision. The version of J3016 referenced in regulations issued by the California Department of Motor Vehicles does not contain this language, but subsequent versions do.)

The second part of my analysis has developed as Tesla’s engineering and marketing have become more aggressive.

Back in 2016, I distinguished Uber’s AVs from Tesla’s Autopilot. While Uber’s AVs were clearly on the automated-driving side of a blurry line, the same was not necessarily true of Tesla’s Autopilot:

> In some ways, the two are similar: In both cases, a human driver is (supposed to be) closely supervising the performance of the driving automation system and intervening when appropriate, and in both cases the developer is collecting data to further develop its system with a view toward a higher level of automation.
>
> In other ways, however, Uber and Tesla diverge. Uber calls its vehicles self-driving; Tesla does not. Uber’s test vehicles are on roads for the express purpose of developing and demonstrating its technologies; Tesla’s production vehicles are on roads principally because their occupants want to go somewhere.

Like Uber then, Tesla now uses the term “self-driving.” And not just self-driving: full self-driving. (This may have pushed Waymo to call its vehicles “fully driverless,” a term that is questionable and yet still far more defensible. Perhaps “fully” is the English language’s new “very.”)

Tesla’s use of “FSD” is, shall we say, very misleading. After all, its “full self-driving” cars still need human drivers. In a letter to the California DMV, the company characterized “FSD” as a level two driver assistance feature. And I agree, to a point: “FSD” is functionally a driver assistance system. For safety reasons, it clearly requires supervision by an attentive human driver.

At the same time, “FSD” is aspirationally an automated driving system. The name unequivocally communicates Tesla’s goal for development, and the company’s “beta” qualifier communicates the stage of that development. Tesla intends for its “full self-driving” to become, well, full self-driving, and its limited beta release is a key step in that process.

And so while Tesla’s vehicles are still on roads principally because their occupants want to go somewhere, “FSD” is on a select few of those vehicles because Tesla wants to further develop—we might say “test”—it. In the words of Tesla’s CEO: “It is impossible to test all hardware configs in all conditions with internal QA, hence public beta.”

Tesla’s instructions to its select beta testers show that Tesla is enlisting them in this testing. Since the beta software “may do the wrong thing at the worst time,” drivers should “always keep your hands on the wheel and pay extra attention to the road. Do not become complacent…. Use Full Self-Driving in limited Beta only if you will pay constant attention to the road, and be prepared to act immediately….”

California’s legislature envisions a similar role for the test drivers of “autonomous vehicles”: They “shall be seated in the driver’s seat, monitoring the safe operation of the autonomous vehicle, and capable of taking over immediate manual control of the autonomous vehicle in the event of an autonomous technology failure or other emergency.” These drivers, by the way, can be “employees, contractors, or other persons designated by the manufacturer of the autonomous technology.”

Putting this all together:

- Tesla is developing an automated driving system that it calls “full self-driving.”

- Tesla’s development process involves testing “beta” versions of “FSD” on public roads.

- Tesla carries out this testing at least in part through a select group of designated customers.

- Tesla instructs these customers to carefully supervise the operation of “FSD.”

Tesla’s “FSD” has the “capability to drive a vehicle without the active physical control or monitoring by a human operator,” but it does not yet have the capability to do so safely. Hence the human drivers. And the testing. On public roads. In California. For which the state has a specific law. That Tesla is not following.

As I’ve repeatedly noted, the line between testing and deployment is not clear—and is only getting fuzzier in light of over-the-air updates, beta releases, pilot projects, and commercial demonstrations. Over the last decade, California’s DMV has performed admirably in fashioning rules, and even refashioning itself, to do what the state’s legislature told it to do. The issues that it now faces with Tesla’s “FSD” are especially challenging and unavoidably contentious.

But what is increasingly clear is that Tesla is testing its inchoate automated driving system on California roads. And so it is reasonable—and indeed prudent—for California’s DMV to require Tesla to follow the same rules that apply to every other company testing an automated driving system in the state.

©2025.05 - Association for the Understanding of Artificial Intelligence