Robohub.org

How will robots and AI change our way of life in 2030?

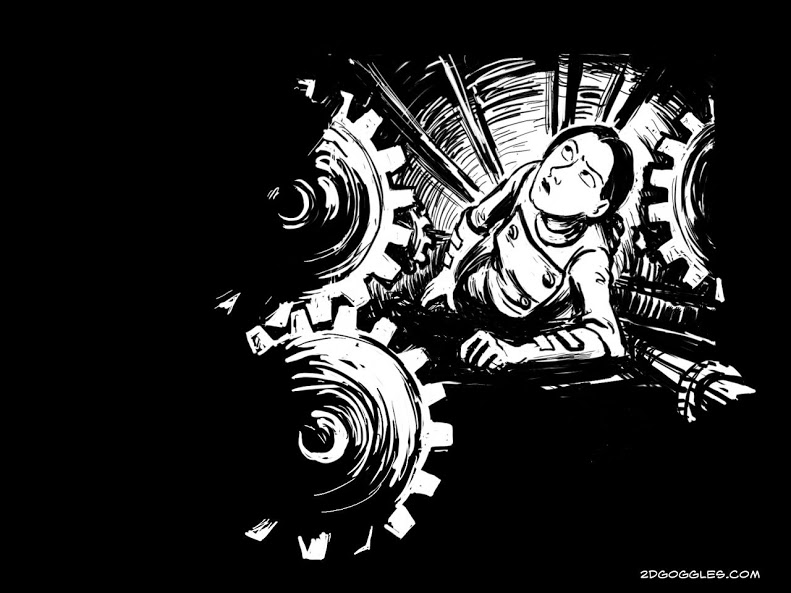

Sydney Padua’s Ada Lovelace is a continual inspiration.

At #WebSummit 2017, I was part of a panel on what the future will bring in 2030 with John Vickers from Blue Abyss, Jacques Van den Broek from Randstad and Stewart Rogers from VentureBeat. John talked about how technology will allow humans to explore amazing new places. Jacques demonstrated how humans were more complex than our most sophisticated AI and thus would be an integral part of any advances. And I focused on how the current technological changes would look amplified over a 10–12 year period.

After all, 2030 isn’t that far off, so we have already invented all the tech, but it isn’t widespread yet and we’re only guessing what changes will come about with the network effects. As William Gibson said, “The future is here, it’s just not evenly distributed yet.”

What worries me is that right now we’re worried about robots taking jobs. And yet the jobs at most risk are the ones in which humans are treated most like machines. So I say, bring on the robots! But what also worries me is that the current trend towards a gig economy and microtransactions powered by AI, ubiquitous connectivity and soon blockchain, will mean that we turn individuals back into machines: just parts of a giant economic network, working in fragments of gigs, not on projects or jobs. I think that this inherent ‘replaceability’ is ultimately inhumane.

When people say they want jobs, they really mean they want a living wage and a rewarding occupation. So let’s give the robots the gigs.

Here’s the talk: “Life in 2030”

It’s morning, the house gently blends real light tones and a selection of bird song to wake me up. Then my retro ‘Teasmade’ serves tea and the wall changes from sunrise to news channels and my calendar for today. I ask the house to see if my daughter’s awake and moving. And to remind her that the clothes only clean themselves if they’re in the cupboard, not on the floor.

Affordable ‘Pick up’ bots are still no good at picking up clothing although they’re good at toys. In the kitchen I spend a while recalibrating the house farm. I’m enough of a geek to put the time into growing legumes and broccoli. It’s pretty automatic to grow leafy greens and berries, but larger fruits and veg are tricky. And only total hippies spend the time on home-grown vat meat or meat substitutes.

I’m proud of how energy neutral our lifestyle is, although humans always seem to need more electricity than we can produce. We still have our own car, which shuttles my daughter to school in remote-operated, semi-autonomous mode, where control is distributed between the car, the road network and a dedicated 5-star operator. Statistically it’s the safest form of transport, and she has the comfort of traveling in her own family vehicle.

Whereas I travel in efficiency mode — getting whatever vehicle is nearby heading to my destination. I usually pick the quiet setting. I don’t mind sharing my ride with other people or drivers but I like to work or think as I travel.

I work in a creative collective — we provide services and we built the collective around shared interests like historical punk rock and farming. Branding our business or building our network isn’t as important as it used to be because our business algorithms adjust our marketing strategies and bid on potential jobs faster than we could.

The collective allows us to have better health and social plans than the usual gig economy. Some services, like healthcare or manufacturing, still have to have a lot of infrastructure, but most information services can cowork or remote work and our biggest business expense is data subscriptions.

This is the utopic future. For the poor, it doesn’t look as good. Rewind...

It’s morning. I’m on Basic Income, so to get my morning data & calendar I have to listen to 5 ads and submit 5 feedbacks. Everyone in our family has to do some, but I do extra so that I get parental supervision privileges and can veto some of the kids’ surveys.

We can’t afford to modify the house to generate electricity, so we can’t afford decent house farms. I try to grow things the old way, in dirt, but we don’t have automation and if I’m busy we lose produce through lack of water or bugs or something. Everyone can afford Soylent though. And if I’ve got some cash we can splurge on junk food, like burgers or pizza.

My youngest still goes to a community school meetup but the older kids homeschool themselves on the public school system. It’s supposed to be a personalized AI for them but we still have to select which traditional value package we subscribe to.

I’m already running late for work. I see that I have a real assortment of jobs in my queue. At least I’ll be getting out of the house driving people around for a while, but I’ve got to finish more product feedbacks while I drive and be on call for remote customer support. Plus I need to do all the paperwork for my DNA to be used on another trial or maybe a commercial product. Still, that’s how you get health care — you contribute your cells to the health system.

We also go bug catching, where you scrape little pieces of lichen, or dog poo, or insects into the samplers, anything that you think might be new to the databases. One of my friends hit the jackpot last year when her sample was licensed as a super new psychoactive and she got residuals.

I can’t afford to go online shopping so I’ll have to go to a mall this weekend. Physical shopping is so exhausting. There are holo ads and robots everywhere spamming you for feedback and getting in your face. You might have some privacy at home but in public, everyone can eye track you, emote you and push ads. It’s on every screen and following you with friendly robots.

It’s tiring having to participate all the time. Plus you have to take selfies and foodies and feedback and survey and share and emote. It used to be ok doing it with a group of friends but now that I have kids ….

Robots and AI make many things better although we don’t always notice it much. But they also make it easier to optimize us and turn us into data, not people.