An understanding of how certain requirements could slow down robocar development or raise costs would be very important to the rulings. For example, a ruling that a car must make a decision based on the number of pedestrians it might hit demands that it be able to count pedestrians. Today’s robocars may often be unsure whether a blob is 2 or 3 pedestrians, and nobody cares because the result is generally the same: you don’t want to hit any number of pedestrians. Likewise, a requirement to know the age of people on the road demands a great deal more of the car’s perception system than anybody would normally develop, particularly if you imagine asking it to tell an adult with dwarfism from a child.
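
To make the counting point concrete, here is a minimal Python sketch, assuming a hypothetical perception output that reports each path's pedestrian count as a range rather than a single number. The names `PathEstimate`, `choose_clear_path` and `fewest_pedestrians` are illustrative only. The everyday rule (take a path certain to be clear) gives the same answer whether the blob is 1 or 3 people, while a hypothetical "hit the fewest people" ruling flips its answer within that same range, so it cannot be applied until the car counts more precisely.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PathEstimate:
    """Hypothetical perception output for one candidate path."""
    name: str
    min_pedestrians: int  # lower bound on how many people are in this path
    max_pedestrians: int  # upper bound on how many people are in this path


def choose_clear_path(paths: list[PathEstimate]) -> Optional[str]:
    """Everyday rule: take a path certain to be free of pedestrians.

    Only needs to know whether a count is zero, not its exact value.
    """
    for p in paths:
        if p.max_pedestrians == 0:
            return p.name
    return None


def fewest_pedestrians(paths: list[PathEstimate], use_upper_bound: bool) -> str:
    """Hypothetical count-based ruling: hit the fewest people.

    Evaluated with the optimistic and the pessimistic end of each estimate;
    if the two answers differ, the car must count more precisely.
    """
    def count(p: PathEstimate) -> int:
        return p.max_pedestrians if use_upper_bound else p.min_pedestrians
    return min(paths, key=count).name


everyday = [PathEstimate("stay_in_lane", 1, 3), PathEstimate("swerve_right", 0, 0)]
dilemma = [PathEstimate("stay_in_lane", 1, 3), PathEstimate("swerve_left", 2, 2)]

print(choose_clear_path(everyday))                         # swerve_right
print(fewest_pedestrians(dilemma, use_upper_bound=False))  # stay_in_lane
print(fewest_pedestrians(dilemma, use_upper_bound=True))   # swerve_left
```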

Writers in this space have proposed questions like “How do you choose between one motorcyclist wearing a helmet and another not wearing one?” (You are less likely to kill the helmet wearer, but the bareheaded rider is the one who accepted greater risk and broke the helmet law.) Hidden in this question is the assumption that the car can tell whether somebody is wearing a helmet, which is a much bigger challenge for a computer than for a human. A ruling that demanded the car figure this out would make developing the car harder just to solve an extremely rare problem.

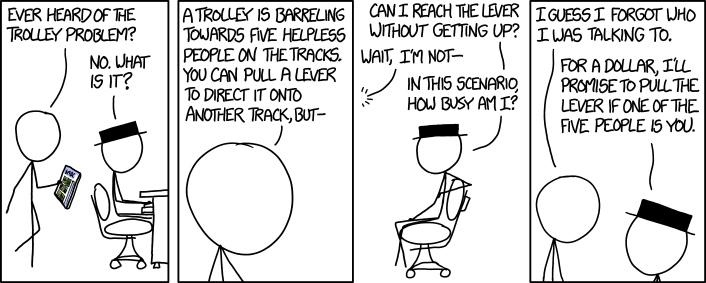

This invokes the “meta-trolley problem.” Here, a life-saving technology like robocars is made more difficult, and thus delayed, in order to solve a rare philosophical problem. That delay means more people die, because the robocar technology that could have saved them was not yet available. The panels would be expected to weigh this. As such, problems sent to them would not be expressed in absolutes. You might ask, “If the system assigns an 80% probability that rider 1 is wearing a helmet, do I do X or Y?” but only after you have determined that this level of confidence is technically achievable.
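
A question phrased in probabilities rather than absolutes maps naturally onto a threshold check. The Python sketch below is hypothetical throughout: `ConfidenceRuling`, `decide`, the `threshold_validated` flag (standing in for the step of confirming that 80% confidence is technically achievable) and the fallback action `brake_in_lane` are illustrative names, and X and Y remain the placeholders from the question itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfidenceRuling:
    """Hypothetical panel ruling expressed as a probability threshold."""
    threshold: float           # e.g. 0.8 for "80% probability rider 1 is wearing a helmet"
    action_at_or_above: str    # the panel's answer ("X") when confidence meets the threshold
    action_below: str          # the panel's answer ("Y") otherwise
    threshold_validated: bool  # was this confidence level shown to be technically achievable?


def decide(ruling: ConfidenceRuling, p_helmet: float, default_action: str) -> str:
    """Apply a ruling to one perception estimate.

    If the threshold was never validated as achievable, the ruling is not
    used and the car falls back to its ordinary driving policy.
    """
    if not 0.0 <= p_helmet <= 1.0:
        raise ValueError("p_helmet must be between 0 and 1")
    if not ruling.threshold_validated:
        return default_action
    if p_helmet >= ruling.threshold:
        return ruling.action_at_or_above
    return ruling.action_below


ruling = ConfidenceRuling(0.8, "X", "Y", threshold_validated=True)
print(decide(ruling, 0.85, default_action="brake_in_lane"))  # X
print(decide(ruling, 0.60, default_action="brake_in_lane"))  # Y
```

Treating an unvalidated threshold as "no ruling" rather than applying it anyway reflects the meta-trolley concern: a ruling the perception system cannot support should not be allowed to steer the car.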

Technical feasibility matters here because a lot of the “trolley problem” questions involve the car departing its right-of-way to save the life of somebody in that path. 99% of the effort going into developing robocars is devoted to making them drive safely where they are supposed to be. There will always be less effort put into making sure the car can do a good job of veering off the road and onto the sidewalk. It will not be as well trained or tested at identifying the obstacles and hazards of the sidewalk. Its maps will not be designed for driving there. Any move out of normal driving situations increases the risk and the difficulty of the driving task. People are “general purpose” thinking machines; we can adapt to what we have never done before. Robots are not.

I believe vendors would embrace this idea because they don’t want to be making these decisions themselves, and they don’t want to be held accountable for them if they turn out to be wrong (or even if they turn out to be right). Society is quite uncomfortable with machines deliberately hurting anybody, even to save others. Even the panel members would not be thrilled with the job, but they would not bear personal responsibility.

Neural Networks

It must be noted that all these ideas (and all other conventional ideas on ethical calculus for robots) are turned upside-down if cars are driven by neural networks trained by machine learning. Some developers hope to run the whole process this way. Others may wish to use them only for the “judgment on where to go” part. Almost everybody will use them in perception and classification. You don’t program neural networks, and you don’t really know why they do what they do. You only know that when you test them, they perform well, and that they are often better at dealing with unforeseen situations than traditional approaches.

As such, you can’t easily program a rule (including a ruling from the panel) into such a car. You can show it examples of humans following the rule as you train it, but that’s about it. Because many of the situations above are dangerous or even fatal, you clearly can’t easily show it real-world examples, though there are some tricks you can do with robotic inflatable dummies and radio-controlled cars. You may need to train it in simulation, which is useful but runs the risk of the network latching onto artifacts of the simulation that are not seen in the real world.

Neural network systems are currently the AI technology most capable of human-like behaviour. As such, it has been suggested they could be a good choice for ethical decisions, though they would surely surprise everybody in certain situations, and not always in a good way. They will sometimes do things that are quite inhuman.

It has been theorized they have a perverse advantage in the legal system because they are not understood. If you can’t point to a specific reason the car did something (such as running over a group of 2 people instead of a single person) you can’t easily show the developers were negligent in court. The vehicle “went with its gut” just like a human being.

Everyday ethical situations and the vehicle code

The panels would actually be far more useful in solving not the very rare questions but the common ones. Real driving today in most countries involves constantly breaking or bending the rules. Most people speed. People constantly cut other people off. It is often impossible to get through traffic without technically cutting people off, which is to say moving into their path and expecting them to brake. Google caused its first accident by moving into the path of a bus it thought would brake and let it into the lane. In some of the more chaotic places in the world, a driver adhering strictly to the law would never get out of their driveway.

The panels could be asked questions like these:

- “If 80% of cars are going 10mph over the speed limit, can we do that?” I think yes would be a good answer here.

- “If a stalled car is blocking the lane, can we go slightly over the double-yellow line to get around that car if the oncoming lane is sufficiently clear?” Again, the answer should be yes, and we need the cars to know that.

- “If nobody will let me into a lane, when can I aggressively push my way in even though the car I move in front of would hit me if it maintains its speed?”

- “If I decide, one time in 100, to keep going and gently bump somebody who cuts me off capriciously in order to stop drivers from treating me like I’m not there, is that OK?”

- “If I need to make a quick 3 point turn to turn around, how much delay can I cause for oncoming traffic?”

- “If a signal allows left turns only on a green arrow, but my sensors give me 99.99999% confidence the turn is safe, can I make it anyway?” (This actually makes a lot of sense.)

- “Is it OK for me to park in front of a hydrant, knowing I will leave the spot at the first sound, sight or electronic message about fire crews?”

- “Can I make a rolling stop at a stop sign if my systems can do it with 99.999999% safety at that sign?”

There are plenty more such situations. Cars need answers to these today because they will encounter these problems every day. The existing vehicle code was written with a strong presumption that human drivers are unreliable. We see many places where things like left turns are prohibited even though they would almost always be safe, because humans can’t be trusted to have highly reliable judgement. In some cases, the code has to assume human drivers will be greedy and obstruct traffic if they are not forbidden from certain activities, whereas robocars can be trusted to keep a promise of better behaviour. In fact, in many ways, the entire vehicle code is wrong for robocars and should be completely replaced, but since that won’t happen for a long time, the panels could rule on reasonable exceptions that promote robocars and improve traffic.
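
One way to picture the panels' role here is as a small set of rulings the car consults on top of the vehicle code. The Python sketch below encodes two of the example questions as hypothetical rulings; the function names, the 10-second oncoming gap used for "sufficiently clear", and the choice to fall back to the posted limit when there is no traffic to match are all assumptions for illustration, not any real regulation.

```python
from typing import Sequence


def permitted_speed(limit_mph: float, observed_mph: Sequence[float],
                    margin_mph: float = 10.0, share_required: float = 0.8) -> float:
    """Hypothetical panel exception to the posted speed limit.

    Encodes "if 80% of cars are going 10 mph over the limit, we may too".
    The margin and required share come from the example question, not from
    any real regulation.
    """
    if not observed_mph:
        return limit_mph  # no traffic to match, so obey the posted limit
    fast = sum(1 for v in observed_mph if v >= limit_mph + margin_mph)
    if fast / len(observed_mph) >= share_required:
        return limit_mph + margin_mph
    return limit_mph


def may_cross_double_yellow(lane_blocked_by_stalled_car: bool,
                            oncoming_gap_s: float, min_gap_s: float = 10.0) -> bool:
    """Hypothetical ruling for edging over a double-yellow line around a stalled car.

    min_gap_s stands in for "the oncoming lane is sufficiently clear"; the
    10-second default is purely illustrative.
    """
    return lane_blocked_by_stalled_car and oncoming_gap_s >= min_gap_s


print(permitted_speed(55, [66, 65, 67, 70, 58]))  # 65.0
print(permitted_speed(55, [56, 60, 66]))          # 55
print(may_cross_double_yellow(True, 12.0))        # True
```

Keeping each ruling as a narrow, testable exception mirrors the idea of rulings that patch the existing code rather than replace it wholesale.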

How often for the “big” questions?

Above, I put a (*) next to the statement that the “who do I kill?” question comes up once in a billion miles. I don’t actually know how often it comes up, but I know it’s very rare, probably much rarer than this. For example, human drivers kill only about 12 people in a billion miles of driving, and most of those fatalities are single-vehicle accidents (the car ran off the road, often because the driver fell asleep or was drunk). If I had to guess, I would suspect real “who do I kill?” questions come up more like once every 100 billion miles, which is to say once in 200,000 lifetimes of human driving, since a typical person drives around 500,000 miles in their life. Even at 100 billion miles, it would still happen about 30 times a year in the USA, where roughly 3 trillion miles are driven annually, and frankly you don’t see this on the news or in fatality reports very often.
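
The arithmetic in this paragraph is easy to check. The Python sketch below reproduces it: the 100-billion-mile figure is the guess above and the lifetime mileage and fatality rate come from the text, while the 3 trillion vehicle-miles a year for the USA is a round-number assumption. The last line adds one comparison the paragraph implies but does not state: over the same 100 billion miles, human drivers at 12 deaths per billion miles would kill about 1,200 people.

```python
# Figures from the paragraph above, except where marked as an assumption.
MILES_PER_DILEMMA = 100e9            # the guess: one real "who do I kill?" case per 100 billion miles
LIFETIME_MILES = 500_000             # miles a typical person drives in a lifetime
HUMAN_DEATHS_PER_BILLION_MILES = 12  # current human-driver fatality rate
US_MILES_PER_YEAR = 3e12             # assumption: roughly 3 trillion vehicle-miles per year in the USA

# How many driving lifetimes pass between dilemmas.
lifetimes_per_dilemma = MILES_PER_DILEMMA / LIFETIME_MILES

# How many dilemmas the whole US fleet would meet in a year.
dilemmas_per_year = US_MILES_PER_YEAR / MILES_PER_DILEMMA

# For comparison: people killed by human drivers over the same 100 billion miles.
human_deaths_per_dilemma_interval = HUMAN_DEATHS_PER_BILLION_MILES * MILES_PER_DILEMMA / 1e9

print(f"{lifetimes_per_dilemma:,.0f} lifetimes")                      # 200,000 lifetimes
print(f"{dilemmas_per_year:.0f} per year")                              # 30 per year
print(f"{human_deaths_per_dilemma_interval:,.0f} human-caused deaths")  # 1,200 human-caused deaths
```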

Some arguments put the frequency higher once you consider an unmanned car’s ability to do something a human-driven car can’t: drive off the road and crash without hurting anybody. In that case, I don’t think the programmers need a lot of guidance, since the path with zero injuries is generally an easy one to choose, though driving off the road is never risk-free. It’s also true that robocars would find themselves able to make these decisions in places where we would never imagine a human doing so, or even being able to.

Jurisdictions

These panels would probably exist at many levels. Rules of the road are a state matter in the USA, but safety standards for car hardware and software are a federal matter. Certainly it’s easier for developers to have only national rulings to worry about, but it’s also not tremendously hard to load in different software modules when you move from one state to another. As in many other areas of law, states and countries have ways to get together to normalize their laws for practical reasons like this. Differing software rulings are not nearly as much of a problem as differing hardware requirements would be. (Though it’s not out of the question that a panel might want to indirectly demand a superior sensor to help a car make its determinations.)
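
Loading different modules per state can be sketched as a national baseline with per-state overrides layered on top, swapped in when the car crosses a border. This is a minimal Python sketch under stated assumptions: the ruling names, the `STATE_A`/`STATE_B` placeholders and the baseline-plus-override layering are hypothetical design choices, not a description of any real jurisdiction or vendor system. It needs Python 3.9 or later for the `|` dictionary merge.

```python
from types import MappingProxyType
from typing import Mapping

# Hypothetical ruling names and values, for illustration only.
NATIONAL_RULINGS = {
    "max_over_limit_mph": 10,
    "rolling_stop_allowed": False,
    "park_at_hydrant_allowed": False,
}

# Hypothetical state-level rulings that differ from the national baseline.
STATE_OVERRIDES = {
    "STATE_A": {"rolling_stop_allowed": True},
    "STATE_B": {"max_over_limit_mph": 5},
}


def rulings_for(state: str) -> Mapping[str, object]:
    """Return the rulings in force in `state`.

    The national baseline is copied and any state overrides are layered on
    top; states with no overrides simply get the baseline. The result is a
    read-only view so the driving software cannot alter it by accident.
    """
    merged = NATIONAL_RULINGS | STATE_OVERRIDES.get(state, {})
    return MappingProxyType(merged)


class ActiveRulings:
    """Swap the loaded rulings module when the car crosses a state line."""

    def __init__(self, state: str) -> None:
        self.state = state
        self.rulings = rulings_for(state)

    def update_location(self, state: str) -> None:
        if state != self.state:  # only reload on an actual border crossing
            self.state = state
            self.rulings = rulings_for(state)


car = ActiveRulings("STATE_B")
car.update_location("STATE_A")
print(car.rulings["rolling_stop_allowed"])  # True
print(car.rulings["max_over_limit_mph"])    # 10
```

Layering overrides on a national baseline keeps each state's module small; shipping a complete, independent rulings set per state would also work, at the cost of more duplication.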