Robohub.org

ManipulaTHOR: a framework for visual object manipulation

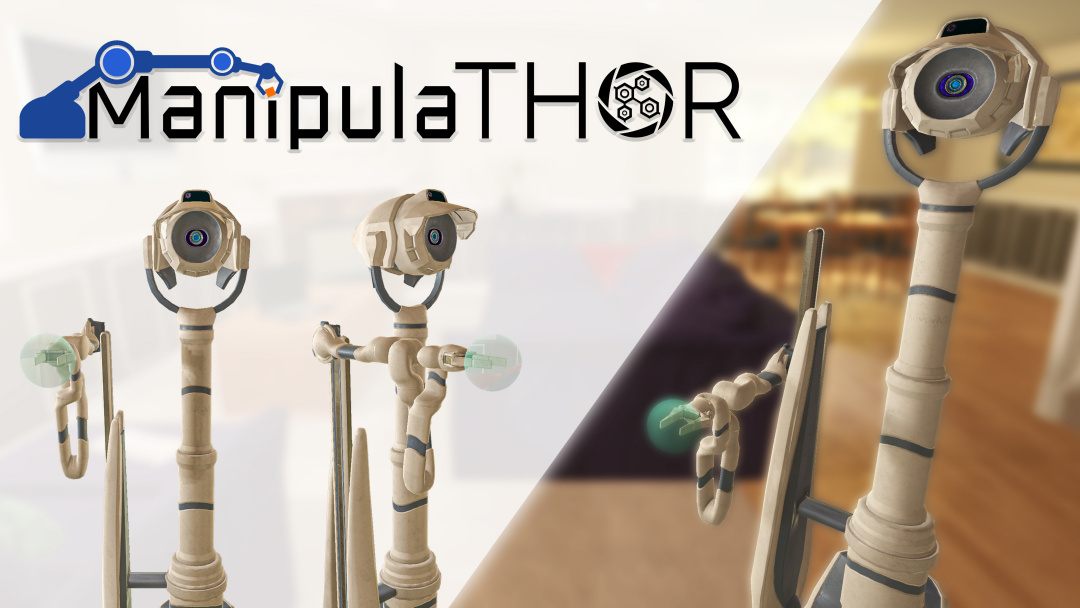

The Allen Institute for AI (AI2) has announced the 3.0 release of AI2-THOR, its embodied artificial intelligence framework, which adds active object manipulation to the testing framework. The release introduces ManipulaTHOR, a first-of-its-kind virtual agent with a highly articulated robot arm. The arm has three joints with limbs of equal length and is built entirely from swivel joints, giving it a more human-like approach to object manipulation.

AI2-THOR is the first testing framework for studying object manipulation across more than 100 visually rich, physics-enabled rooms. ManipulaTHOR supports training and evaluating manipulation models that generalize. Compared with current real-world training methods, it allows much faster training in more complex environments, and it is also far safer and more cost-effective.

“Imagine a robot being able to navigate a kitchen, open a refrigerator and pull out a can of soda. This is one of the biggest and yet often overlooked challenges in robotics and AI2-THOR is the first to design a benchmark for the task of moving objects to various locations in virtual rooms, enabling reproducibility and measuring progress,” said Dr. Oren Etzioni, CEO at AI2. “After five years of hard work, we can now begin to train robots to perceive and navigate the world more like we do, making real-world usage models more attainable than ever before.”

Although object manipulation is an established research area in robotics, its visual reasoning aspect has consistently been one of the biggest hurdles researchers face. It has long been understood that robots struggle to correctly perceive, navigate, act, and communicate with others in the world. AI2-THOR addresses this problem with complex simulated environments that researchers can use to train robots for eventual real-world tasks.

By pioneering embodied AI research through AI2-THOR, AI2 has changed the landscape for the common good. AI2-THOR lets researchers efficiently devise solutions not only to the object manipulation problem but also to other long-standing problems in robotics testing.

“In comparison to running an experiment on an actual robot, AI2-THOR is incredibly fast and safe,” said Roozbeh Mottaghi, Research Manager at AI2. “Over the years, AI2-THOR has enabled research on many different tasks such as navigation, instruction following, multi-agent collaboration, performing household tasks, reasoning if an object can be opened or not. This evolution of AI2-THOR allows researchers and scientists to scale the current limits of embodied AI.”

Alongside the 3.0 release, the team is hosting the RoboTHOR Challenge 2021 in conjunction with the Embodied AI Workshop at this year's Conference on Computer Vision and Pattern Recognition (CVPR). AI2's challenges cover RoboTHOR object navigation, ALFRED (instruction-following robots), and Room Rearrangement.

To read the ManipulaTHOR paper, visit ai2thor.allenai.org/publications.
