Robohub.org

Robo-picker grasps and packs

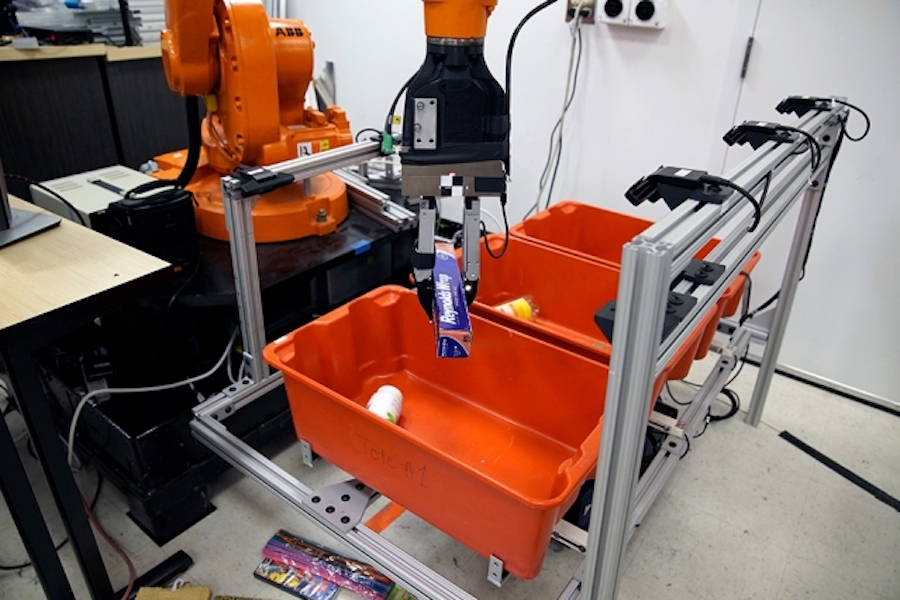

The “pick-and-place” system consists of a standard industrial robotic arm that the researchers outfitted with a custom gripper and suction cup. They developed an “object-agnostic” grasping algorithm that enables the robot to assess a bin of random objects and determine the best way to grip or suction onto an item amid the clutter, without having to know anything about the object before picking it up.

Image: Melanie Gonick/MIT

Unpacking groceries is a straightforward albeit tedious task: You reach into a bag, feel around for an item, and pull it out. A quick glance will tell you what the item is and where it should be stored.

Now engineers from MIT and Princeton University have developed a robotic system that may one day lend a hand with this household chore, as well as assist in other picking and sorting tasks, from organizing products in a warehouse to clearing debris from a disaster zone.

The team’s “pick-and-place” system consists of a standard industrial robotic arm that the researchers outfitted with a custom gripper and suction cup. They developed an “object-agnostic” grasping algorithm that enables the robot to assess a bin of random objects and determine the best way to grip or suction onto an item amid the clutter, without having to know anything about the object before picking it up.

Once it has successfully grasped an item, the robot lifts it out from the bin. A set of cameras then takes images of the object from various angles, and with the help of a new image-matching algorithm the robot can compare the images of the picked object with a library of other images to find the closest match. In this way, the robot identifies the object, then stows it away in a separate bin.
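The matching step described above can be illustrated with a small nearest-neighbor lookup. This is only a sketch under stated assumptions: the article does not say how the team's image-matching algorithm represents or compares images, so the short feature vectors, the cosine-similarity measure, and the names `cosine_similarity`, `closest_match`, and `library` are all hypothetical stand-ins.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def closest_match(views, library):
    """Return (label, score) for the library entry most similar to any view."""
    best_label, best_score = None, -1.0
    for view in views:
        for label, reference in library.items():
            score = cosine_similarity(view, reference)
            if score > best_score:
                best_label, best_score = label, score
    return best_label, best_score

# Hypothetical 3-number features standing in for real image descriptors.
library = {
    "duct tape": [0.9, 0.1, 0.2],
    "masking tape": [0.2, 0.8, 0.3],
}
# Two camera views of the same grasped object (made-up values).
views = [[0.8, 0.2, 0.1], [0.7, 0.3, 0.2]]

label, score = closest_match(views, library)
print(label, round(score, 3))
```

Taking the best score across all views reflects the idea that several camera angles give the object more chances to resemble a library image; a real system might instead average or vote across views.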

In general, the robot follows a “grasp-first-then-recognize” workflow, which turns out to be an effective sequence compared to other pick-and-place technologies.

“This can be applied to warehouse sorting, but also may be used to pick things from your kitchen cabinet or clear debris after an accident. There are many situations where picking technologies could have an impact,” says Alberto Rodriguez, the Walter Henry Gale Career Development Professor in Mechanical Engineering at MIT.

Rodriguez and his colleagues at MIT and Princeton will present a paper detailing their system at the IEEE International Conference on Robotics and Automation, in May.

Building a library of successes and failures

While pick-and-place technologies may have many uses, existing systems are typically designed to function only in tightly controlled environments.

Today, most industrial picking robots are designed for one specific, repetitive task, such as gripping a car part off an assembly line, always in the same, carefully calibrated orientation. However, Rodriguez is working to design robots as more flexible, adaptable, and intelligent pickers, for unstructured settings such as retail warehouses, where a picker may consistently encounter and have to sort hundreds, if not thousands, of novel objects each day, often amid dense clutter.

The team’s design is based on two general operations: picking, the act of successfully grasping an object; and perceiving, the ability to recognize and classify an object once it has been grasped.

The researchers trained the robotic arm to pick novel objects out from a cluttered bin, using any one of four main grasping behaviors: suctioning onto an object, either vertically or from the side; gripping the object vertically like the claw in an arcade game; or, for objects that lie flush against a wall, gripping vertically, then using a flexible spatula to slide between the object and the wall.

Rodriguez and his team showed the robot images of bins cluttered with objects, captured from the robot’s vantage point. They then showed the robot which objects were graspable, with which of the four main grasping behaviors, and which were not, marking each example as a success or failure. They did this for hundreds of examples, and over time built up a library of picking successes and failures. They then used this library to train a “deep neural network,” a class of learning algorithms that enables the robot to match the current problem it faces with a successful outcome from the past.
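To make the selection step concrete, here is a minimal sketch of how a robot might choose among the four grasping behaviors once a network has scored the bin. It assumes, purely for illustration, that the network outputs one grid of success scores per behavior; the article does not describe the output format, and the behavior names, the `best_pick` function, the 0.5 threshold, and the scores are all hypothetical.

```python
def best_pick(score_maps, threshold=0.5):
    """Pick the behavior and grid cell with the highest predicted success.

    score_maps maps each behavior name to a 2D list of scores in [0, 1].
    Returns (behavior, row, col, score), or None if no score reaches
    the threshold, meaning nothing in view looks pickable.
    """
    best = None
    for behavior, grid in score_maps.items():
        for r, row in enumerate(grid):
            for c, score in enumerate(row):
                if score >= threshold and (best is None or score > best[3]):
                    best = (behavior, r, c, score)
    return best

# Made-up 2x2 score grids, one per grasping behavior.
score_maps = {
    "suction-down": [[0.10, 0.62], [0.30, 0.20]],
    "suction-side": [[0.05, 0.15], [0.40, 0.10]],
    "grasp-down":   [[0.20, 0.35], [0.81, 0.25]],
    "flush-grasp":  [[0.00, 0.10], [0.10, 0.45]],
}

choice = best_pick(score_maps)
print(choice)  # ('grasp-down', 1, 0, 0.81)
```

Returning `None` below the threshold is one possible design choice: it lets the caller decide whether to take another image or stop, rather than forcing a low-confidence pick.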

“We developed a system where, just by looking at a tote filled with objects, the robot knew how to predict which ones were graspable or suctionable, and which configuration of these picking behaviors was likely to be successful,” Rodriguez says. “Once it was in the gripper, the object was much easier to recognize, without all the clutter.”

From pixels to labels

The researchers developed a perception system in a similar manner, enabling the robot to recognize and classify an object once it’s been successfully grasped.

To do so, they first assembled a library of product images taken from online sources such as retailer websites. They labeled each image with the correct identification — for instance, duct tape versus masking tape — and then developed another learning algorithm to relate the pixels in a given image to the correct label for a given object.
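The idea of relating pixels to labels through training examples can be sketched with a far simpler learner than the one the team built. The example below swaps in a multiclass perceptron, not the researchers' algorithm, and trains it on four-value "images" whose pixel values are invented for illustration; `train_perceptron` and `predict` are hypothetical names.

```python
def predict(weights, pixels):
    """Return the label whose weight vector scores the pixels highest."""
    x = pixels + [1.0]  # append a constant bias input
    return max(weights,
               key=lambda label: sum(w * v for w, v in zip(weights[label], x)))

def train_perceptron(examples, labels, epochs=20):
    """Learn one weight vector per label with the multiclass perceptron rule."""
    n = len(examples[0][0])
    weights = {label: [0.0] * (n + 1) for label in labels}
    for _ in range(epochs):
        for pixels, target in examples:
            guess = predict(weights, pixels)
            if guess != target:
                # Nudge weights toward the right label, away from the wrong one.
                x = pixels + [1.0]
                for i, v in enumerate(x):
                    weights[target][i] += v
                    weights[guess][i] -= v
    return weights

# Invented training set: dark images are duct tape, light ones masking tape.
examples = [
    ([0.2, 0.3, 0.2, 0.3], "duct tape"),
    ([0.8, 0.9, 0.8, 0.7], "masking tape"),
    ([0.3, 0.2, 0.3, 0.2], "duct tape"),
    ([0.9, 0.8, 0.7, 0.9], "masking tape"),
]
weights = train_perceptron(examples, ["duct tape", "masking tape"])

# An image the model has not seen during training.
print(predict(weights, [0.25, 0.25, 0.3, 0.2]))
```

The loop first makes the model correct on the training examples, then the final line checks a new image, which mirrors the train-then-generalize hope described in the article.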

“We’re comparing things that, for humans, may be very easy to identify as the same, but in reality, as pixels, they could look significantly different,” Rodriguez says. “We make sure that this algorithm gets it right for these training examples. Then the hope is that we’ve given it enough training examples that, when we give it a new object, it will also predict the correct label.”

Last July, the team packed up the 2-ton robot and shipped it to Japan, where, a month later, they reassembled it to participate in the Amazon Robotics Challenge, a yearly competition sponsored by the online megaretailer to encourage innovations in warehouse technology. Rodriguez’s team was one of 16 taking part in a competition to pick and stow objects from a cluttered bin.

In the end, the team’s robot had a 54 percent success rate in picking objects up using suction and a 75 percent success rate using grasping, and was able to recognize novel objects with 100 percent accuracy. The robot also stowed all 20 objects within the allotted time.

For his work, Rodriguez was recently granted an Amazon Research Award and will be working with the company to further improve pick-and-place technology — foremost, its speed and reactivity.

“Picking in unstructured environments is not reliable unless you add some level of reactiveness,” Rodriguez says. “When humans pick, we sort of do small adjustments as we are picking. Figuring out how to do this more responsive picking, I think, is one of the key technologies we’re interested in.”

The team has already taken some steps toward this goal by adding tactile sensors to the robot’s gripper and running the system through a new training regime.

“The gripper now has tactile sensors, and we’ve enabled a system where the robot spends all day continuously picking things from one place to another. It’s capturing information about when it succeeds and fails, and how it feels to pick up, or fails to pick up objects,” Rodriguez says. “Hopefully it will use that information to start bringing that reactiveness to grasping.”

This research was sponsored in part by ABB Inc., MathWorks, and Amazon.