Robohub.org

SMEs need robots that know their limitations and ask for help

Socrates famously said that “the only true wisdom is in knowing you know nothing.” We often equate human intelligence with the ability to recognize when help is needed and where to seek it out. Most robots, however, are not aware enough of their own actions to assess them, let alone ask for help. The result is task execution failures that shut down production lines, require human intervention and reduce productivity. While occasional robot failures can be tolerated, relying on humans to clean up the mess is not a viable business model, especially for small-batch production or non-repetitive tasks. If robots are to be deployed successfully beyond large factories, in small and medium-sized enterprises (SMEs), they will have to get smarter and learn to ask for help when they are stuck.

Many robots can pick up a part if it is presented at a particular location. However, if the part has shifted from the expected location, the robot may no longer be able to grasp it. If the part is far enough from its expected location, the robot might bump into it while attempting the grasp, push it further away, and jam the material handling system. This can in turn trigger a system fault and shut down the whole line, all because the robot does not know where the boundary between success and failure lies.

Robots function well in large-scale industrial applications when reliability is designed into the system. This is accomplished by designing specialized hardware and software, testing the system extensively to identify potential failure points, and developing contingency plans to handle failures when they occur. When a robot fails at a task, a human often has to intervene to clear the fault and restart the process, which can be expensive. As a result, robots are typically not assigned to a task until extremely high reliability can be achieved. This is why the cost of customized hardware and software is usually justified only when production volume is high and the tasks are repetitive, as on automotive assembly lines.

If we want to use robots in small-batch production or for non-repetitive tasks, however, we will need to prevent major system failures from occurring in the first place. Imagine a robot that could estimate the likelihood of completing its task before it even begins, and that calls a human to help it work through the problem when it foresees failure. A human operator could then help the robot with the portion of the task it finds challenging (for example, determining the orientation of a part or finding a grasping strategy), leaving the robot to execute the remainder of the task itself.

In most situations, human intervention — at the right time, before the problem occurs — is far less costly than recovering from a system shutdown.
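
The pre-task check and the cost argument above can be illustrated with a small Python sketch. It is not RoboSAM's actual method: the success model (a Gaussian-shaped fall-off with the part's offset from its expected location), the tolerance, and the cost figures are hypothetical placeholders chosen only for illustration. The robot requests help when the expected cost of attempting the pick, (1 - p) × cost of failure, exceeds the cost of calling a human operator.

```python
"""Hypothetical sketch of a "know your limits" pre-task check.

Assumptions (not from the original post): success probability is a simple
function of how far the part sits from its expected pick location, and the
robot asks for help when the expected cost of failing exceeds the cost of a
human call. Costs are in arbitrary, illustrative units.
"""
import math
from dataclasses import dataclass


@dataclass
class PickTask:
    offset_mm: float  # measured distance of the part from its expected location


def estimate_success_probability(task: PickTask, tolerance_mm: float = 10.0) -> float:
    """Hypothetical model: success decays as the part drifts from the taught pose."""
    return math.exp(-((task.offset_mm / tolerance_mm) ** 2))


def should_ask_for_help(p_success: float, cost_failure: float, cost_help: float) -> bool:
    """Ask a human when the expected cost of attempting exceeds the cost of asking."""
    expected_failure_cost = (1.0 - p_success) * cost_failure
    return expected_failure_cost > cost_help


def plan(task: PickTask, cost_failure: float = 5000.0, cost_help: float = 50.0) -> str:
    """Decide, before moving, whether to execute the pick or call an operator."""
    p = estimate_success_probability(task)
    if should_ask_for_help(p, cost_failure, cost_help):
        return "request_help"
    return "execute"
```

With these placeholder values, a part sitting exactly where expected is picked autonomously, while one 10 mm off (p ≈ 0.37) triggers a help request. A real system would presumably derive the estimate from perception and grasp-planning data rather than from a single distance.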

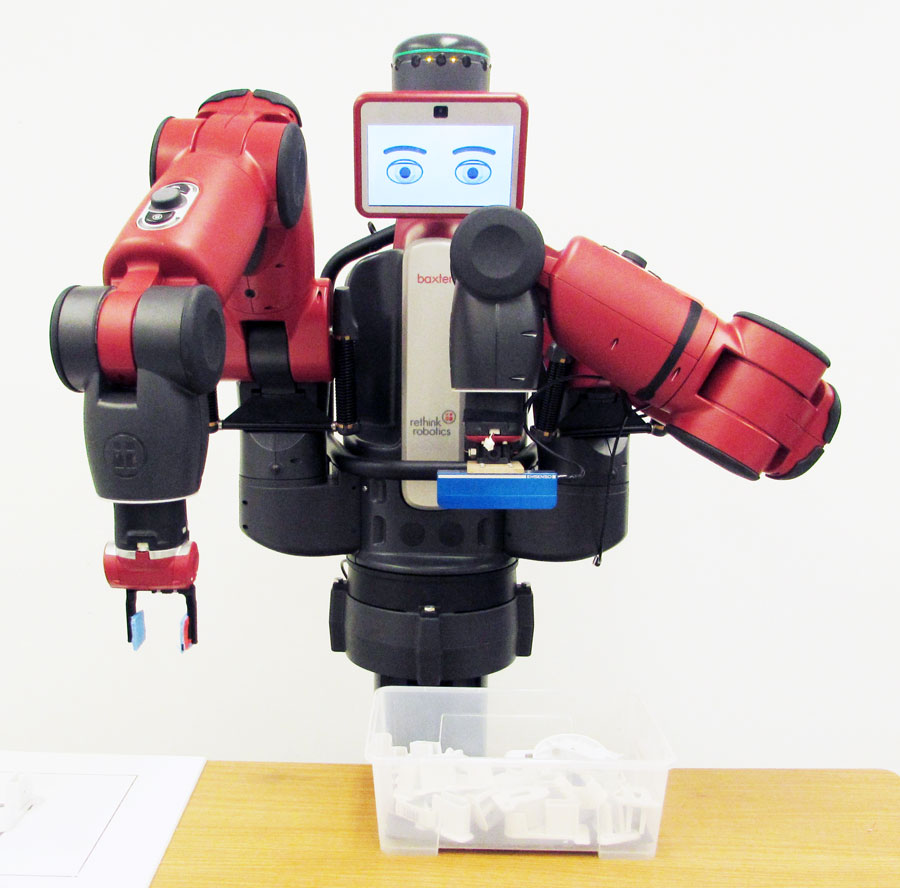

My students have been building a robot, called RoboSAM (ROBOtic Smart Assistant for Manufacturing), to demonstrate this concept in bin picking applications, which challenge a robot’s ability to perceive the desired object in the environment and to successfully pick it up and deliver it in a known pose. If RoboSAM is not sure whether it can pick the desired part from a bin containing many different parts, then it calls a remotely located human operator for help. The “human-on-call” concept, as we call it, is fundamentally different from the human-in-the-loop approach, which requires the human operator to actively monitor the manufacturing cell and take control away from the robot when the robot is about to make a mistake. “Human-on-call” requires the robot to call the human operator when it decides that it needs help.

This model allows a single remotely situated human operator to help multiple robots on an as-needed basis. It also enables robots to be deployed on more difficult tasks. For the foreseeable future, many tasks in small and medium-sized manufacturing companies will fall into this category, making the human-on-call concept the right economic model for deploying robots in these contexts.
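
One way to picture a single operator serving several robots is a shared request queue. The following Python sketch is a hypothetical illustration, not the lab's implementation: `OperatorStation`, `HelpRequest` and `run_cycle` are invented names, and the first-in, first-out policy is an assumption. Confident robots keep working, while uncertain ones call in and wait for the operator.

```python
"""Hypothetical sketch of the human-on-call pattern: many robots, one remote operator.

Assumptions (not from the original post): robots file help requests in a shared
first-in, first-out queue, and the operator resolves them one at a time.
Robots that are confident never enter the queue and keep working on their own.
"""
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class HelpRequest:
    robot_id: str
    problem: str  # e.g. "part orientation" or "grasp strategy"


@dataclass
class OperatorStation:
    queue: deque = field(default_factory=deque)

    def call(self, request: HelpRequest) -> None:
        """Robot-initiated: the robot decides it needs help and calls in."""
        self.queue.append(request)

    def serve_next(self) -> Optional[HelpRequest]:
        """The operator handles the oldest outstanding request, if there is one."""
        return self.queue.popleft() if self.queue else None


def run_cycle(station: OperatorStation, robots: Dict[str, Optional[str]]) -> List[str]:
    """robots maps robot_id -> None (confident) or a description of the needed help.

    Returns the ids of robots that proceed autonomously this cycle.
    """
    autonomous = []
    for robot_id, problem in robots.items():
        if problem is None:
            autonomous.append(robot_id)
        else:
            station.call(HelpRequest(robot_id, problem))
    return autonomous
```

For example, `run_cycle(station, {"r1": None, "r2": "part orientation"})` lets `r1` proceed on its own and leaves one request from `r2` for the operator. FIFO is simply the easiest policy to show; prioritizing requests by the cost of each stalled robot's downtime would only require swapping the deque for a priority queue.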

People often ask what humans will do when robots become more widespread. In my opinion, humans will be needed to teach robots how to do different tasks and bail robots out when they are confused. The key will be to develop technologies that allow robots to ask for help when needed. Recent work in our lab is a step in that direction.
