Robohub.org

The world’s smallest autonomous racing drone

Racing team 2018-2019: Christophe De Wagter, Guido de Croon, Shuo Li, Phillipp Dürnay, Jiahao Lin, Simon Spronk

Autonomous drone racing

Drone racing is becoming a major e-sport. Enthusiasts – and now also professionals – transform drones into seriously fast racing platforms. Expert drone racers can reach speeds up to 190 km/h. They fly by looking at a first-person view (FPV) from their drone, which has a front-mounted camera that transmits images to the pilot.

In recent years, advances in areas such as artificial intelligence, computer vision, and control have raised the question of whether drones could fly faster than human pilots. The advantage for the drone could be that it can sense much more than the human pilot (such as accelerations and rotation rates with its inertial sensors) and can process all image data more quickly on board. Moreover, its intelligence could be shaped for a single goal: racing as fast as possible.

In the quest for a fast-flying, autonomous racing drone, multiple autonomous drone racing competitions have been organized in the academic community. These “IROS” drone races (IROS, the IEEE/RSJ International Conference on Intelligent Robots and Systems, is one of the best-known robotics conferences worldwide) have been held since 2016. Over these years, the speed of the drones has gradually improved, with the faster drones in the competition now moving at ~2 m/s.

Smaller

Most of the autonomous racing drones are equipped with high-performance processors, with multiple, high-quality cameras and sometimes even with laser scanners. This allows these drones to use state-of-the-art solutions to visual perception, like building maps of the environment or tracking accurately how the drone is moving over time. However, it also makes the drones relatively heavy and expensive.

At the Micro Air Vehicle laboratory (MAVLab) of TU Delft, our aim is to make lightweight and cheap autonomous racing drones. Such drones could be used by many drone racing enthusiasts to train with or fly against. If the drone becomes small enough, it could even be used for racing at home. Aiming for “small” places serious limitations on the sensors and processing that can be carried onboard. This is why in the IROS drone races we have always focused on monocular vision (a single camera) and on software algorithms for vision, state estimation, and control that are computationally highly efficient.

At 72 grams and 10 cm in diameter, the modified “Trashcan” drone is currently the smallest autonomous racing drone in the world. In the background, Shuo Li, PhD student working on autonomous drone racing at the MAVLab.

A 72-gram autonomous racing drone

Here, we report on how we made a tiny autonomous racing drone fly through a racing track at an average speed of 2 m/s, which is competitive with other, larger state-of-the-art autonomous racing drones.

The drone, which is a modified Eachine “Trashcan”, is 10 cm in diameter and weighs 72 grams. This weight includes a 17-gram JeVois smart camera, which consists of a single rolling-shutter CMOS camera, a 4-core ARM v7 1.34 GHz processor with 256 MB RAM, and a 2-core Mali GPU. Although this processor is limited compared to those used on other drones, we consider it more than powerful enough: with the algorithms we explain below, the drone actually uses only a single CPU core. The JeVois camera communicates via the MAVLink communication protocol with a 4.2-gram Crazybee F4 Pro flight controller running the Paparazzi autopilot. Both the JeVois code and the Paparazzi code are open source and available to the community.

An important characteristic of our approach to drone racing is that we do not rely on accurate but computationally expensive methods for visual Simultaneous Localization And Mapping (SLAM) or Visual Inertial Odometry (VIO). Instead, we focus on having the drone predict its motion as well as possible with an efficient prediction model, and on correcting any drift of the model with vision-based gate detections.

Prediction

A typical prediction model would involve the integration of the accelerometer readings. However, on small drones the Inertial Measurement Unit (IMU) is subject to a lot of vibration, leading to noisier accelerometer readings. Integrating such noisy measurements quickly leads to an enormous drift in both the velocity and position estimates of the drone. Therefore, we have opted for a simpler solution, in which the IMU is only used to determine the attitude of the drone. This attitude can then be used to predict the forward acceleration, as illustrated in the figure below. If one assumes that the drone flies at a constant height, the vertical component of the thrust has to balance the force of gravity. Given a specific pitch angle, this relation fixes the total thrust and therefore also its forward component. The prediction model then updates the velocity based on this predicted forward force and the expected drag force given the estimated velocity.

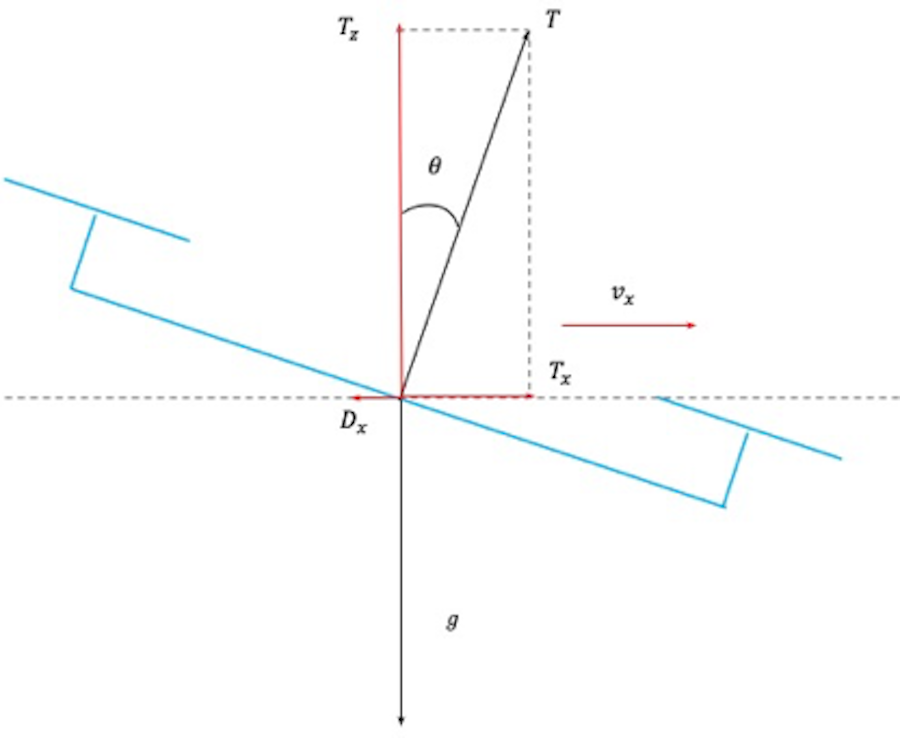

Prediction model for the tiny drone. The drone has an estimate of its attitude, including the pitch angle (θ). Assuming the drone flies at a constant height, the vertical component of the thrust (Tz) should balance gravity (g). Together, these two pieces allow us to calculate the thrust force that should be delivered by the drone’s four propellers (T) and, consequently, also the force that is exerted forwards (Tx). The model uses this forward force, and the resulting (backward) drag force (Dx), to update the velocity (vx) and position of the drone when it is not seeing gates.
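To make the prediction step concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the code that runs on the drone: the function name `predict_step`, the sign convention for the pitch angle, the linear drag model, and the value of `drag_coeff` are all hypothetical. It uses the constant-height relation T·cos(θ) = m·g, which gives a forward thrust acceleration of g·tan(θ).

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def predict_step(x, vx, pitch, dt, drag_coeff=0.5):
    """Advance the forward position and velocity by one time step.

    pitch      : pitch angle in radians; positive is assumed to tilt the
                 thrust towards +x (hypothetical sign convention)
    drag_coeff : hypothetical linear drag coefficient per unit mass, in 1/s
    """
    # Constant height: T * cos(pitch) = m * g, so the forward thrust
    # component per unit mass is T * sin(pitch) / m = g * tan(pitch).
    thrust_acc = G * math.tan(pitch)
    # Drag opposes the motion; a linear drag model is assumed here.
    drag_acc = -drag_coeff * vx
    ax = thrust_acc + drag_acc
    # Forward Euler integration of position and velocity.
    x_new = x + vx * dt
    vx_new = vx + ax * dt
    return x_new, vx_new


# Usage: hold a constant pitch angle of 0.1 rad for 20 s at 100 Hz.
x, vx = 0.0, 0.0
for _ in range(2000):
    x, vx = predict_step(x, vx, pitch=0.1, dt=0.01)
print(f"velocity after 20 s: {vx:.2f} m/s")
```

With a fixed pitch angle, the velocity in this sketch settles where thrust and drag balance, at g·tan(θ) divided by the drag coefficient. Because the model only needs the attitude, it avoids integrating the noisy accelerometer signal, but any error in the drag coefficient still causes drift, which is why vision-based corrections remain necessary.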

Vision-based corrections

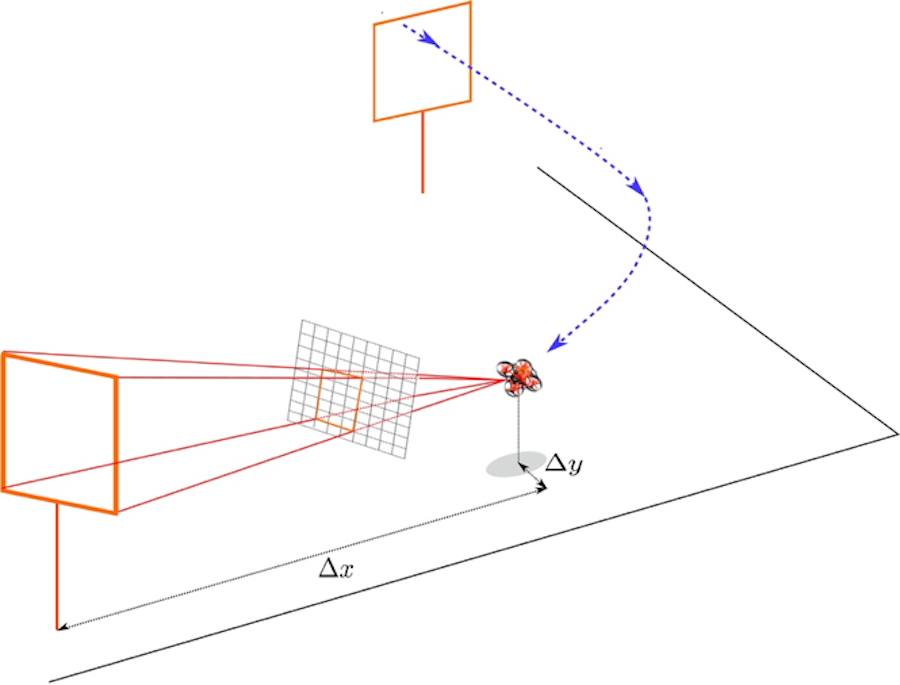

The prediction model is corrected with the help of vision-based position measurements. First, a snake-gate algorithm is used to detect the colored gate in the image. This algorithm is extremely efficient, as it only processes a small portion of the image’s pixels. It samples random image locations, and when it finds the right color, it starts following that color around to determine the shape. After a detection, the known size of the gate is used to determine the drone’s position relative to the gate (see the figure below). This is a standard Perspective-n-Point (PnP) problem. Subsequently, we figure out which gate on the racing track is most likely in our view, and transform the position relative to that gate into a global position measurement. Since our vision process often outputs quite precise position estimates but sometimes also produces significant outliers, we do not use a Kalman filter but a Moving Horizon Estimator for the state estimation. This leads to much more robust position and velocity estimates in the presence of outliers.
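The idea behind the snake-gate search can be illustrated with a heavily simplified sketch. It assumes the image has already been thresholded into a boolean mask (a list of rows, `True` where a pixel has the gate color) and that the gate bars are axis-aligned in the image; the names `walk` and `snake_gate` and the parameters `n_samples` and `min_size` are hypothetical. Handling tilted or partially visible gates is left out.

```python
import random


def walk(mask, r, c, dr, dc):
    """Follow gate-colored pixels from (r, c) in direction (dr, dc).

    Returns the last gate-colored pixel reached.
    """
    h, w = len(mask), len(mask[0])
    while 0 <= r + dr < h and 0 <= c + dc < w and mask[r + dr][c + dc]:
        r, c = r + dr, c + dc
    return r, c


def snake_gate(mask, n_samples=200, min_size=5, rng=None):
    """Return (top, left, bottom, right) of a detected gate, or None."""
    rng = rng or random.Random(0)
    h, w = len(mask), len(mask[0])
    for _ in range(n_samples):
        # Only a few random pixels are inspected, not the whole image.
        r, c = rng.randrange(h), rng.randrange(w)
        if not mask[r][c]:
            continue
        # Follow the color up and down to find a vertical bar.
        top, _ = walk(mask, r, c, -1, 0)
        bottom, _ = walk(mask, r, c, 1, 0)
        if bottom - top < min_size:
            continue  # too short to be a vertical bar of the gate
        # From both ends of the bar, follow the color sideways.
        _, left_top = walk(mask, top, c, 0, -1)
        _, right_top = walk(mask, top, c, 0, 1)
        _, left_bottom = walk(mask, bottom, c, 0, -1)
        _, right_bottom = walk(mask, bottom, c, 0, 1)
        left = min(left_top, left_bottom)
        right = max(right_top, right_bottom)
        if right - left < min_size:
            continue  # no horizontal bars found
        return top, left, bottom, right
    return None
```

Most samples land on background pixels and are discarded after a single lookup, so the cost is dominated by the few walks along the gate bars rather than by the image size.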

The gates and their sizes are known. When the drone detects a gate, it can use this knowledge to calculate its position relative to that gate. The global layout of the track and the currently estimated position of the drone are used to determine which gate the drone is most likely looking at. This way, the relative position can be transformed into a global position estimate.
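The chain from detection to global position can be sketched as follows. This is a simplified stand-in for a full Perspective-n-Point solution: it assumes an ideal pinhole camera, a square gate viewed head-on, and a flat 2D track map in which each gate is stored as (x, y, heading). All function names, the map format, and the camera parameters are hypothetical.

```python
import math


def relative_position(box, gate_size_m, focal_px, cx, cy):
    """Estimate the gate centre in the camera frame from its bounding box.

    box is (top, left, bottom, right) in pixels; (cx, cy) is the principal
    point. Returns (forward, right, down) in metres.
    """
    top, left, bottom, right = box
    width_px = right - left
    # Similar triangles: a gate of known size that appears smaller is further away.
    forward = focal_px * gate_size_m / width_px
    u = (left + right) / 2.0
    v = (top + bottom) / 2.0
    right_m = (u - cx) * forward / focal_px
    down_m = (v - cy) * forward / focal_px
    return forward, right_m, down_m


def global_position(rel, gate):
    """Drone position on the map if the detection belongs to this gate."""
    forward, right_m, _ = rel
    gx, gy, psi = gate  # gate position and the heading of the flight direction
    px = gx - forward * math.cos(psi) + right_m * math.sin(psi)
    py = gy - forward * math.sin(psi) - right_m * math.cos(psi)
    return px, py


def locate(rel, gates, predicted_xy):
    """Pick the gate whose implied drone position best matches the prediction."""
    candidates = [global_position(rel, g) for g in gates]
    return min(
        candidates,
        key=lambda p: math.hypot(p[0] - predicted_xy[0], p[1] - predicted_xy[1]),
    )


# Usage: a 1 m gate that appears 150 px wide with a 300 px focal length
# is about 2 m away.
rel = relative_position((45, 85, 195, 235), 1.0, 300.0, 160.0, 120.0)
gates = [(0.0, 0.0, 0.0), (10.0, 0.0, math.pi)]
print(locate(rel, gates, predicted_xy=(-1.8, 0.1)))
```

Choosing the gate by comparing against the predicted position is what ties the vision measurement back to the prediction model: a wrong association yields a position far from the prediction and can be treated as an outlier by the estimator.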

Racing performance and future steps

The drone used the newly developed algorithms to race along a 4-gate race track in TU Delft’s Cyberzoo. It can fly multiple laps at an average speed of 2 m/s, which is competitive with larger, state-of-the-art autonomous racing drones (see the video at the top). Thanks to the central role of gate detections in the drone’s algorithms, the drone can cope with moderate displacements of the gates.

Possible future directions of research are to make the drone even smaller and to make it fly faster. In principle, being small is an advantage, since the gates are larger relative to the drone. This allows the drone to choose its trajectory more freely than a big drone, which may allow for faster trajectories. In order to better exploit this characteristic, we would have to fit optimal control algorithms into the onboard processing. Moreover, we want to make the vision algorithms more robust, as the current color-based snake-gate algorithm is quite dependent on lighting conditions. An obvious option here is to start using deep neural networks, which would have to fit within the dual-core Mali GPU on the JeVois.

arXiv article: Visual Model-predictive Localization for Computationally Efficient Autonomous Racing of a 72-gram Drone, Shuo Li, Erik van der Horst, Philipp Duernay, Christophe De Wagter, Guido C.H.E. de Croon.