Robohub.org

Why Tesla’s purported liability ‘fix’ is technically and legally questionable

Tesla Motors autopilot (photo: Tesla)

An interesting article in last week’s Wall Street Journal spawned a series of unfortunate headlines (in a variety of publications) suggesting that Tesla had somehow “solved” the “problem” of “liability” by requiring that human drivers manually instruct the company’s autopilot to complete otherwise-automated lane changes.

(I have not asked Tesla what specifically it plans for its autopilot or what technical and legal analyses underlie its design decisions. The initial report may not and should not be the full story.)

For many reasons, these are silly headlines.

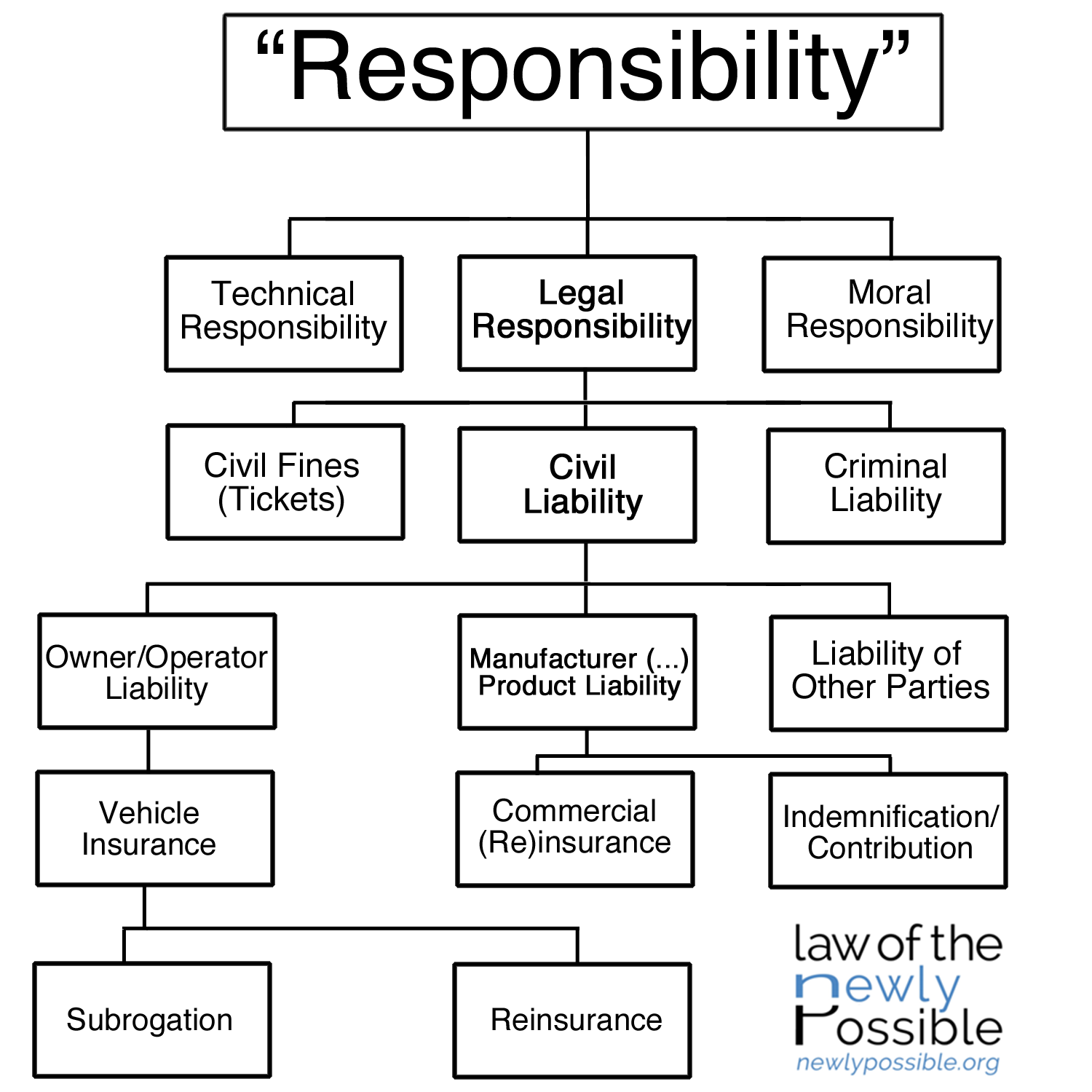

First, “liability” is a word that nonlawyers often use in clumsy reference to either (a) the existence of vague legal or policy questions related to the increasing automation of driving or (b) the specific but unhelpful question of “who is liable when an automated vehicle crashes.”

If this term is actually meant to refer, however imperfectly, to “driver compliance with the rules of the road,” then it is not clear to what problem or for what reason this lane-change requirement is a “solution.” For a foundational analysis of legality, including my recommendation that both developers and regulators carefully examine applicable vehicle codes, please see Automated Vehicles Are Probably Legal in the United States.

This term probably refers instead to some concept of fault following a crash. As a forthcoming paper explains, liability (or the broader notion of “responsibility”) is multifaceted:

Moreover, although liability is frequently posited as an either/or proposition (“either the manufacturer or the driver”), it is rarely binary. In terms of just civil (that is, noncriminal) liability, in a single incident a vehicle owner could be liable for failing to properly maintain her vehicle, a driver could be liable for improperly using the vehicle’s automation features, a manufacturer could be liable for failing to adequately warn the user, a sensor supplier could be liable for poorly manufacturing a spotty laser scanner, and a mapping data provider could be liable for providing incorrect roadway data. Or not.

The relative liability of various actors will depend on the particular facts of the particular incident. Although automation will certainly impact civil liability in both theory and practice, merely asking “who is liable in tomorrow’s automated crashes” in the abstract is like asking “who is liable in today’s conventional crashes.” The answer is: “It depends.”

Second, the only way to truly “solve” civil liability is to prevent the underlying injuries from occurring. If automated features actually improve traffic safety, they will help in this regard. As a technical matter, however, it is doubtful that requiring drivers merely to initiate lane changes will ensure that they are engaged enough in driving that they are able to quickly respond to the variety of bizarre situations that routinely occur on our roads. This is one of the difficult human-factors issues present in what I call the “mushy middle” of automation, principally SAE levels 2 and 3.

These levels of automation help ground discussions about the respective roles of the human driver and the automated driving system.

At SAE level 2, the human driver is still expected, as a technical matter, to monitor the driving environment and to immediately respond as needed. If this is Tesla’s expectation as well, then the lane-change requirement alone is manifestly inadequate: Many drivers will almost certainly disengage, consciously or unconsciously, from actively driving during long stretches that involve no lane changes.

At SAE level 3, the human driver is no longer expected, as a technical matter, to monitor the driving environment but is expected to resume actively driving within an appropriate time after the automated system requests that she do so. This level raises difficult questions about ensuring and managing this human-machine transition. But it is technically daunting in another way as well: The vehicle must be able to drive itself for the several seconds it may take the human driver to effectively reengage. If this is ultimately Tesla’s claim, then it represents a significant technological breakthrough—and one reasonably subject to requests for substantiation.

Third, these two levels of automation raise specific liability issues that are not necessarily clarified by a lane-change requirement.

At SAE level 2, driver distraction is entirely foreseeable, and in some states Tesla might be held civilly liable for facilitating this distraction, for inadequately warning against it, or for inadequately designing against it. Victims of a crash involving a distracted Tesla driver would surely point to more robust (though hardly infallible) ways of monitoring driver attention—like requiring occasional contact with the steering wheel or monitoring eye movements—as proof that arguably safer designs are readily available.

At SAE level 3, Tesla would be asserting that its autopilot system could handle the demands of driving—from the routine to the rare—without a human driver monitoring the roadway or intervening immediately when needed. If this is the case, then Tesla may nonetheless face significant liability, particularly in the event of crashes that could have been prevented either by human drivers or by better automated systems.

Fourth, this potential liability does not preclude the development or deployment of these technologies, for reasons that I have discussed elsewhere.

In summary, merely requiring drivers to initiate lane changes raises both technical and legal questions.

However, the idea that there can be technological fixes to legal quandaries is a powerful one that is explored in Part III of Proximity-Driven Liability.

A related example illustrates this idea. In the United States, automakers are generally required to design their vehicles to be reasonably safe for unbelted as well as belted occupants. (In contrast, European regulators assume that anyone who cares about their safety will wear their seatbelt.) In addition, some US states restrict whether or for what purpose a defendant automaker can introduce evidence that an injured plaintiff was not wearing her seatbelt.

An understandable legal response would be to update these laws for the 21st century. Regardless, some developers of automated systems seem poised to adopt a technological response: Their automated systems will simply refuse to operate unless all of the vehicle’s occupants are wearing their seatbelts. Given that seatbelt use can cut the chance of severe injury or death by about half, this is a promising approach that must not be allowed to suffer the same fate as the seatbelt interlocks of 40 years ago.

More broadly, any automaker must consider the complex interactions among design, law, use, and safety. As I argue in a forthcoming paper by the same name, Lawyers and Engineers Should Speak the Same Robot Language.
