Robohub.org

Evolving robot swarm behaviour suggests forgetting may be important to cultural evolution

Our latest paper from the Artificial Culture project has just been published: On the Evolution of Behaviors through Embodied Imitation.

Here is the abstract:

This article describes research in which embodied imitation and behavioral adaptation are investigated in collective robotics. We model social learning in artificial agents with real robots. The robots are able to observe and learn each others’ movement patterns using their on-board sensors only, so that imitation is embodied. We show that the variations that arise from embodiment allow certain behaviors that are better adapted to the process of imitation to emerge and evolve during multiple cycles of imitation. As these behaviors are more robust to uncertainties in the real robots’ sensors and actuators, they can be learned by other members of the collective with higher fidelity. Three different types of learned-behavior memory have been experimentally tested to investigate the effect of memory capacity on the evolution of movement patterns, and results show that as the movement patterns evolve through multiple cycles of imitation, selection, and variation, the robots are able to, in a sense, agree on the structure of the behaviors that are imitated.

Let me explain.

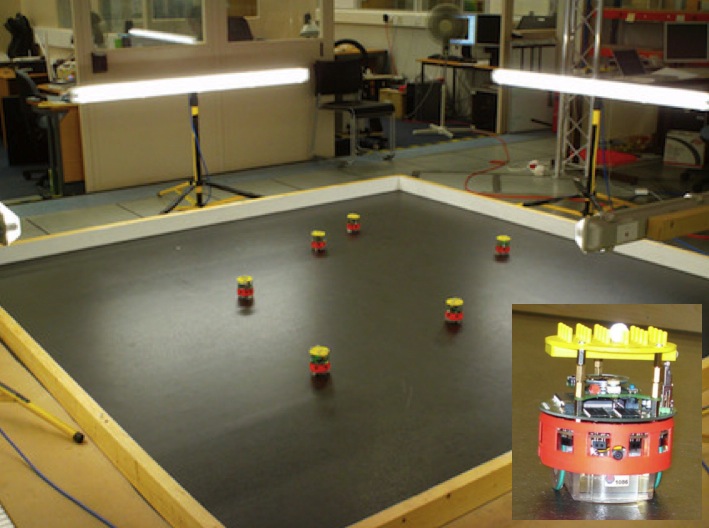

In the artificial culture project (above) we implemented social learning in a group of robots. Robots were programmed to learn from each other, by imitation. Imitation was strictly embodied, so robots observed each other using their onboard sensors and, on the basis of only visual sense data from a robot’s own camera and perspective, the learner robot inferred another robot’s physical behaviour. Here is a quick 5 minute intro to the project:

Not surprisingly embodied robot-robot imitation is imperfect. A combination of factors including the robots’ relatively low-resolution onboard camera, variations in lighting, small differences between robots, multiple robots sometimes appearing within a learner robot’s field of view, and of course having to infer a robot’s movements by tracking the relative size and position of that robot in the learner’s field of view, all lead to imitation errors. And some movement patterns are easier to imitate than others (think of how much easier it is to learn the steps of a slow waltz than the samba by watching your dance teacher). The fidelity of embodied imitation for robots, just as for animals, is a complex function of four factors: (1) the behaviours being learned, (2) the robots’ sensorium and morphology, (3) environmental noise and (4) the inferential learning algorithm.

But rather than being a problem, noisy social learning was our aim. We are interested in the dynamics of social learning, and in particular the way that behaviours evolve as they propagate through the group. Noisy social learning means that behaviours are subject to variation as they are copied from one robot to another. Multiple cycles of imitation (robot B learns behaviour m from A, then robot C learns the same behaviour m′ (m mutated), from robot B, and so on), gives rise to behavioural heredity. And if robots are able to select which learned behaviours to enact we have the three Darwinian operators for evolution, except that this is behavioural, or memetic, evolution.

These experiments show that embodied behavioural evolution really does take place. If selection is random, that is robots select which behaviour to enact from those already learned – with equal probability, then we see several interesting findings.

- If by chance one or more high fidelity copies follow a poor fidelity imitation, the large variation in the initial noisy learning can lead to a new behavioural species, or traditions. Thus showing that noisy social learning can play a role in the emergence of novelty in behavioural (i.e. cultural) evolution. That was written up in Winfield and Erbas, 2011.But it is the second and third findings that we describe in our new paper.

- We see that behaviours appear to adapt to be easier to learn, i.e. better ‘fitted’ to the robot swarm. The way to think about this is that the robots’ sensors and bodies, and physical environment of the arena with several robots (including lighting), together comprise the ‘ecological niche’ for behavioural evolution. Behaviours mutate but the ones better fitted to that niche survive.

- The third finding from this series of experiments is perhaps the most unexpected and the one I want to outline in a bit more detail here. We ran the same embodied behavioural evolution with three memory sizes: no memory, limited memory and unlimited memory.

In the unlimited memory trials each robot saved every learned meme, so the meme pool across the whole robot population (of four robots) grew as the trial progressed. Thus all learned memes were available to be selected for enaction. In the limited memory trials each robot had a memory capacity of only five learned memes, so that when a new meme was learned the oldest one in the robot’s memory was deleted.

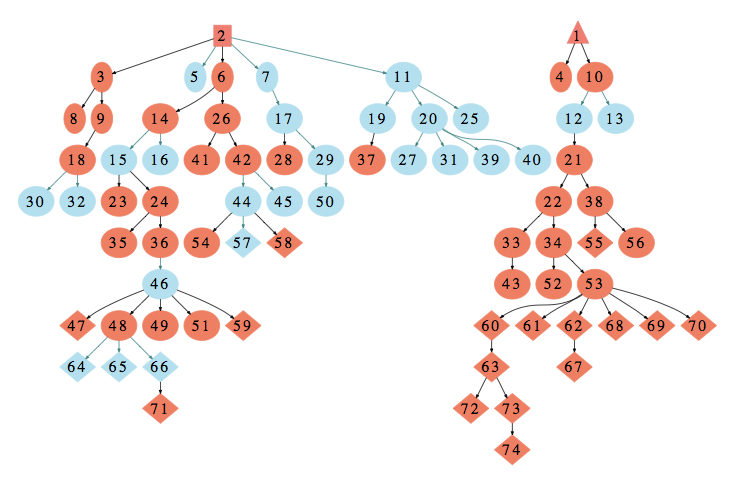

The diagram below shows the complete family tree of evolved memes, for one typical run of the limited memory case. At the start of the run the robots were seeded with two memes, shown as 1 and 2 at the top of the diagram. Behaviour 1 was a movement pattern in which the robot traces a triangle path, behaviour 2 a square. Because this was a limited memory trial the total meme pool has only 20 behaviours – these are shown below as diamonds. Notice the cluster of 11 closely related memes at the bottom right, all of which are 7th, 8th or 9th generation descendents of the triangle meme.

Behavioural evolution map following a 4-robot experiment with limited memory; each robot stores only the most recent 5 learned behaviours. Each behaviour is descended from two seed behaviours labelled 1 and 2. Orange nodes are high fidelity copies, blue nodes are low fidelity copies. The 20 behaviours in the memory of all 4 robots at the end of the experiment are highlighted as diamonds. Note the cluster of 11 closely-related behaviours at the bottom right.

When we ran multiple trials of the limited and unlimited memory cases, then analysed the number and sizes of the clusters of related memes in the meme pool, we saw that the limited memory trials showed a smaller number of larger clusters than the unlimited memory case. The difference was clear and significant; with limited memory an average of 2.8 clusters of average size 8.3, with unlimited memory 3.9 clusters of size 6.9.

Why is this clustering interesting? Well it’s because the number and size of clusters in the meme pool are good indicators of its diversity. Think of each cluster of related memes as a ‘tradition’. A healthy culture needs a balance between stability and diversity. Neither too much stability, i.e. a very small number (in the limit 1) of traditions, or too much diversity, i.e. clusters so small that there are no persistent traditions at all. Perhaps the ideal balance is a smallish number of somewhat persistent traditions.

So far I didn’t mention the no memory case. This was the least interesting of the three. Actually by no memory we mean a memory size of one; in other words a robot has no choice but to enact the last behaviour it learned. There is no selection, and no clusters can form. Traditions can never even get started, let alone persist.

Of course it would unwise to draw any big conclusions from this limited experimental study. But an intriguing possibility is that some forgetting (but not too much) may, just like noisy imitation, be a necessary condition for the emergence of culture in social agents.

Full reference:

Erbas MD, Bull L and Winfield AFT (2015), On the Evolution of Behaviors through Embodied Imitation, Artificial Life, 21 (2), pp 141-165. The full text (final draft) paper can be downloaded here.

Related blog posts:

Robot imitation as a method for modelling the foundations of social life

Open-ended Memetic Evolution, or is it?

If you liked this article, you may also be interested in:

- Robot bodies and how to evolve them

- Evolving swimming robots to study origins of extinct vertebrates

- What would be the energy cost of artificially evolving human-equivalent AI?

- New video series tracks dev’t of collective behaviour in autonomous underwater swarm

See all the latest robotics news on Robohub, or sign up for our weekly newsletter.

tags: c-Research-Innovation, Swarming