Robohub.org

Will robocars use V2V at all?

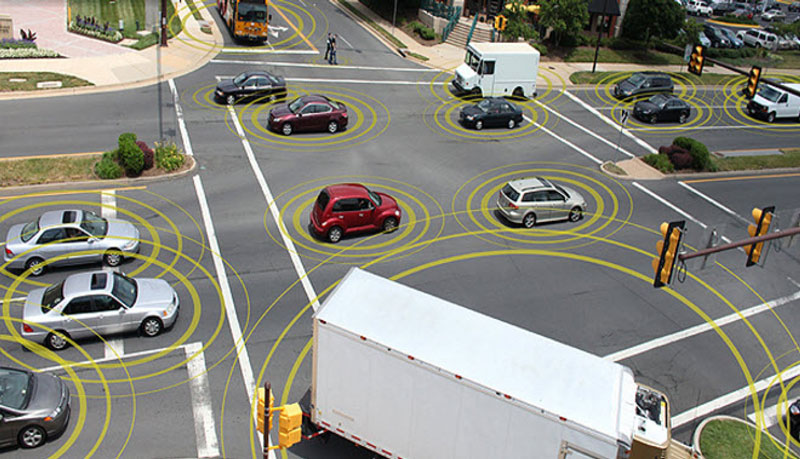

Source: US DOT

I commonly see statements from connected car advocates that vehicle to vehicle (V2V) and vehicle to infrastructure (V2I) communications are an important, even essential, technology for robocar development. Readers of this blog will know I strongly disagree. While I think infrastructure to vehicle (I2V) communication will be important, and will be done primarily over the existing mobile data network, I suspect that V2V will be only marginally useful, with so few valuable use cases that its cost will be hard to justify.

Lately, though, my forecast for V2V has grown even more dismal, because I wonder whether robocars will use V2V with human-driven cars at all, even if a legal mandate makes the technology common in ordinary cars.

The problem is security. A robocar is a very dangerous machine. If compromised, it can cause a lot of damage, even death. As such, security will be a very strong focus of development. You don’t want anybody breaking into the computer systems of your car or anybody else’s. You really don’t want it.

One fact people in security know well: a very large fraction of the security breaches caused by software faults have come from programs that receive input data from external sources, particularly programs that will accept data from anybody. Internet tools are the biggest culprits, and there is a long history of buffer overflows, injection attacks and other problems in tools that will accept a message from just anyone. Servers (which openly accept messages from outside) are at the greatest risk, but even client tools like web browsers run into trouble, because they visit vast numbers of different web sites, and it’s not hard to trick people into sending them to a random one.

We work very hard to remove these vulnerabilities, because when you’re writing a web tool, you have no choice. You must accept input from random strangers. Holes still get found, and we pay the price.

The simplest strategy to improve your chances is to go deaf: don’t receive inputs from outside at all. You can’t do that in most products, but if you can close off a channel without impeding functionality, it’s a good approach. Generally, to be more secure, you will do the following:

- Be a client, which means you make communication requests rather than receive them.

- Only connect to places you trust, and don’t allow yourself to be directed to connect to anything else.

- Use digital signatures and encryption to assure that you really are talking to your trusted server.
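As a rough illustration of these three rules, here is a minimal Python sketch, assuming a car that only ever initiates connections to one pinned vendor host and checks an authentication tag on everything it gets back. The host name `updates.example-vendor.com`, the key and the function names are hypothetical. To stay self-contained it uses a shared-key HMAC from Python's standard library in place of the public-key signatures a real deployment would more likely use.

```python
import hashlib
import hmac

# Hypothetical allowlist: the only server this car will ever contact.
TRUSTED_HOSTS = {"updates.example-vendor.com"}

# Assumption: a key provisioned at manufacture. HMAC stands in for
# public-key signatures so the sketch needs only the standard library.
SHARED_KEY = b"provisioned-at-factory"


def may_connect(host: str) -> bool:
    """Only initiate connections to pinned hosts; refuse to be redirected elsewhere."""
    return host in TRUSTED_HOSTS


def sign(payload: bytes, key: bytes = SHARED_KEY) -> bytes:
    """Compute an HMAC-SHA256 tag over the payload."""
    return hmac.new(key, payload, hashlib.sha256).digest()


def verify(payload: bytes, tag: bytes, key: bytes = SHARED_KEY) -> bool:
    """Accept a payload only if its tag matches, using a constant-time compare."""
    return hmac.compare_digest(sign(payload, key), tag)


msg = b'{"type": "map_update", "tile": 42}'
tag = sign(msg)
print(may_connect("updates.example-vendor.com"))  # True
print(may_connect("random-site.example"))          # False
print(verify(msg, tag))                            # True
print(verify(msg + b" ", tag))                     # False
```

Nothing here listens for incoming connections: the car asks, and discards anything it cannot authenticate.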

This doesn’t protect you perfectly. Your home server can be compromised; it will often run in an environment that is not as locked down as the car. In fact, since it must act as your relay for messages from outside, it is itself exposed to attack. Still, the extra layer adds some security.

There is data you want from outside sources: traffic data, map updates, requests to travel to locations and pick up people. These all present potential risks, but you can take extra effort to assure this data is clean, converting it into simpler formats that are not at risk from buffer overflows, injection attacks and similar exploits. You can also vet the sources. There is a big difference between accepting data from a trusted source that might get compromised and accepting data from just anybody. Web servers accept requests from everywhere, and thanks to botnets and worms, every server on the internet encounters large numbers of intrusion attempts every day. Directed attacks require much more effort and are harder to automate, so they are fewer in number, though more sophisticated when they happen.
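One way to turn outside data into a less vulnerable form is to parse untrusted bytes into a small, fixed-size record with explicit range checks, and hand only the resulting plain object to the rest of the system. The sketch below assumes a hypothetical 12-byte traffic-report format; the field layout, limits and type codes are illustrative, not any real protocol.

```python
import struct
from dataclasses import dataclass

# Hypothetical wire format, exactly 12 bytes, big-endian:
#   int32 latitude (microdegrees), int32 longitude (microdegrees),
#   uint16 speed (km/h * 10), uint16 report type code.
REPORT_FORMAT = ">iiHH"
REPORT_SIZE = struct.calcsize(REPORT_FORMAT)  # 12
KNOWN_TYPES = {1: "congestion", 2: "ice", 3: "stall"}


@dataclass(frozen=True)
class TrafficReport:
    lat: float
    lon: float
    speed_kmh: float
    kind: str


def parse_report(raw: bytes) -> TrafficReport:
    """Convert untrusted bytes into a validated plain object, or raise ValueError."""
    if len(raw) != REPORT_SIZE:  # reject anything not exactly the expected size
        raise ValueError("bad length")
    lat_u, lon_u, speed_x10, kind_code = struct.unpack(REPORT_FORMAT, raw)
    if not -90_000_000 <= lat_u <= 90_000_000:
        raise ValueError("latitude out of range")
    if not -180_000_000 <= lon_u <= 180_000_000:
        raise ValueError("longitude out of range")
    if speed_x10 > 3000:  # assumed plausibility limit of 300 km/h
        raise ValueError("implausible speed")
    if kind_code not in KNOWN_TYPES:
        raise ValueError("unknown report type")
    return TrafficReport(lat_u / 1e6, lon_u / 1e6, speed_x10 / 10, KNOWN_TYPES[kind_code])
```

A report that is one byte too long, claims an impossible latitude, or uses an unknown type code is rejected outright rather than partially interpreted.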

Could you work extra hard to be sure a V2V message does not contain an attack? Of course you could, and you would, but so does every server author in the world. Simply not accepting the messages is a tempting way to avoid the question. And because your master server is not immune, and might pass you compromised data, you still want to maintain the best security hygiene even on data you get from it.

The risk of a V2V intrusion is huge, particularly because it could go viral: infect one car, and the attack could spread over V2V to other cars with the same vulnerability. Not as fast as on the internet, but pretty fast. The resulting infection could literally kill you, much faster than Ebola. It could also kill others.

So what are the benefits? As outlined before, robocars already need to see everything in their environment with their sensors, so V2V gains them very little. It only adds value if it tells you about vehicles you can’t see with your sensors, primarily vehicles coming around a blind corner, or vehicles blocked from your view hitting the brakes. It would be nice to get those signals, though wide deployment of V2V is some decades away. But would we accept the security risk for this benefit? Certainly not in the early days of V2V. The DOT hopes for a legal mandate that would require V2V starting around 2019. By 2025, perhaps 60 million cars would then have it, less than a quarter of the cars on the road. Would you turn it on to help with very rare accidents when only a quarter of the cars can talk? A tough question.
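The penetration figure is simple division, and it also bounds the benefit: a robocar only gets a warning about a hidden vehicle if that vehicle is itself equipped. This sketch assumes a US fleet of roughly 250 million vehicles (an assumption, not a figure from this article) and that equipped cars are evenly mixed into traffic.

```python
def v2v_penetration(equipped_millions: float, fleet_millions: float) -> float:
    """Fraction of vehicles on the road that broadcast V2V."""
    return equipped_millions / fleet_millions


def covered_events(hidden_vehicle_events: int, penetration: float) -> float:
    """Expected number of blind-corner or hidden-braking events in which the
    hidden vehicle is V2V-equipped, assuming equipped cars are spread evenly."""
    return hidden_vehicle_events * penetration


# Assumption: about 250 million vehicles on US roads around 2025.
share = v2v_penetration(60, 250)
print(f"{share:.0%}")  # 24%
print(covered_events(100, share))
```

Out of every 100 hidden-vehicle events, roughly three quarters would still go unannounced.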

Trusting the data

There is another security issue with V2V: can you trust that the information you receive is true? It might be false for two reasons. First, the car might be mistaken about what it is reporting, such as its location; GPS-based location will often be inaccurate. Second, and more disturbingly, it might be overtly lying, because somebody is trying to screw with things. Imagine kids on an overpass with a V2V unit reporting a stalled car in the lane under them. They don’t even have to compromise the unit; that’s what any unit pulled out of a junked car would report if you put it there. While the V2V community dreams of building a public key infrastructure (PKI) so you can trust that units come from known vendors, they don’t understand just how difficult that is. (Here on the web, we’ve been pounding at it for 25 years and still have lots of problems.)
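A robocar can treat a V2V claim as something to check rather than something to believe. This sketch, with hypothetical names and an assumed 3 m tolerance for GPS error, classifies an obstacle report against the car's own detections. The `in_clear_view` flag is assumed to come from the car's perception system and says whether the reported spot is visible to its sensors. That covers the overpass case: the lane is in plain view, the sensors see nothing there, so the report is contradicted.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    """Position in the car's own frame: x metres ahead, y metres to the left."""
    x: float
    y: float


MATCH_RADIUS_M = 3.0  # assumed tolerance for GPS error in the sender's report


def assess_report(report: Point, detections: list[Point], in_clear_view: bool) -> str:
    """Classify an unverified V2V obstacle report against our own sensors."""
    seen = any(
        math.hypot(report.x - d.x, report.y - d.y) <= MATCH_RADIUS_M
        for d in detections
    )
    if seen:
        return "confirmed"      # our sensors see something where the report says
    if in_clear_view:
        return "contradicted"   # we can see that spot and nothing is there
    return "unverified"         # occluded: the only case where V2V adds information
```

Only the "unverified" branch is where V2V tells the car something new, and it is also the branch where the car has no way to check the claim.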

Now imagine a world where trust is working, but somebody discovers a vulnerability in a particular system, say Ford’s. Do you start ignoring all V2V messages from Ford cars until they are updated? Is that the safe thing to do, or the dangerous one?

This is why robocars love sensors. Your sensors might fail, but that’s your problem and under your control. Your sensors will not lie to you deliberately, and probably won’t be wrong by accident about basics like where an obstacle is.

Talk to other robocars

There is a better use case in talking to other robocars. They have much more to tell you, because they have real sensors and could report on all sorts of things on the road that you can’t see. By sharing, a group of robocars could build a much more accurate perception map of the road all around them. It will take a while for robocars to reach enough penetration for this to happen, but once they do, it’s worthwhile.

With higher density (in the late 2020s, I suspect) you could also see cars talking in order to cooperate. For example, a car seeing a problem ahead might want to swerve into the next lane. If it could talk to the robocar next to it, it could ask, “hey, I need to swerve where you are, can you swerve out, or ask the guy next to you to make room, so we can do that?” This ability to act in concert could solve problems in accident situations where traffic is dense and robocars are a large fraction of the cars.

Some of these benefits could come over the higher-latency cellular data networks, vetted by servers. But low latency is a plus for the swerving application.

Robocars could know which vendor made each of the other robocars around them, and might decide to trust cars from the same vendor. There could even be cooperation among vendors. This does reopen the door to viruses, so you still want to keep these messages simple and put a lot of scrutiny on them.
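A vendor-based trust policy of this kind might look like the following sketch. The vendor names, the version-based revocation list and the 64-byte message cap are all hypothetical, and the policy assumes the peer's vendor and software version have already been authenticated, which is exactly the hard part. Rejecting a vendor with a known hole until it is patched is one possible answer to the Ford question earlier, not the obviously right one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Peer:
    vendor: str                      # assumed already authenticated
    software_version: tuple[int, int]


OUR_VENDOR = "VendorA"               # hypothetical
COOPERATING_VENDORS = {"VendorB"}    # hypothetical cross-vendor agreement
# Hypothetical revocation list: vendor -> first software version with the fix.
VULNERABLE_BEFORE = {"VendorB": (4, 2)}
MAX_MESSAGE_BYTES = 64               # keep peer messages small and simple


def accept_from(peer: Peer, message: bytes) -> bool:
    """Decide whether to even parse a message from a nearby robocar."""
    if len(message) > MAX_MESSAGE_BYTES:
        return False
    if peer.vendor != OUR_VENDOR and peer.vendor not in COOPERATING_VENDORS:
        return False
    fixed_in = VULNERABLE_BEFORE.get(peer.vendor)
    if fixed_in is not None and peer.software_version < fixed_in:
        return False  # known-vulnerable software: ignore until updated
    return True
```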

Talking through the servers

There can still be communication between cars, but unless it needs nearly zero latency, it can be done well, perhaps better, by using the public data network and having the car vendor’s servers act as intermediary and security gateway. For example, negotiations between cars in a parking lot can work fine this way. Sharing data about road conditions, such as an icy patch or pothole, is better done through the data network, as it doesn’t require the two cars to be within 200 meters of one another at the moment they want to exchange the information. This is particularly true of reports of accidents or stalls: you want to know about them well in advance, and they are latency-critical for only a tiny fraction of the time they exist.
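Server-mediated hazard sharing needs no proximity between the reporting car and the receiving one. Below is a minimal in-memory sketch of what a vendor server might do, assuming a hypothetical grid of roughly 1 km cells, a one-hour expiry and a fixed vocabulary of hazard types; a real service would also authenticate reporters and corroborate reports.

```python
import time
from collections import defaultdict
from typing import Optional

CELL_DEG = 0.01  # hypothetical grid cell size in degrees (roughly 1 km)
ALLOWED_KINDS = {"ice", "pothole", "stall", "accident"}  # hypothetical vocabulary


def cell_of(lat: float, lon: float) -> tuple[int, int]:
    """Map a coordinate to a coarse grid cell."""
    return (int(lat // CELL_DEG), int(lon // CELL_DEG))


class HazardRelay:
    """Vendor-side store: cars upload hazards, other cars query along a route."""

    def __init__(self, ttl_s: float = 3600.0):
        self.ttl_s = ttl_s
        self._by_cell = defaultdict(list)  # cell -> [(timestamp, kind), ...]

    def report(self, lat: float, lon: float, kind: str, now: Optional[float] = None) -> None:
        if kind not in ALLOWED_KINDS:  # the server acts as a security gateway
            raise ValueError(f"unknown hazard kind: {kind!r}")
        now = time.time() if now is None else now
        self._by_cell[cell_of(lat, lon)].append((now, kind))

    def hazards_on_route(self, route: list[tuple[float, float]],
                         now: Optional[float] = None) -> list[str]:
        now = time.time() if now is None else now
        found = []
        # dict.fromkeys de-duplicates cells while keeping route order
        for cell in dict.fromkeys(cell_of(lat, lon) for lat, lon in route):
            for stamp, kind in self._by_cell.get(cell, []):
                if now - stamp <= self.ttl_s:  # drop expired reports
                    found.append(kind)
        return found
```

The reporting car and the querying car never talk to each other directly; each only ever talks to its vendor's server.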

If a special effort is made in concert with the mobile data networks, it is also possible to get very low latency through those networks, well under 20 milliseconds. Such roundtrip times are very common on the wired internet within a local region. (Try it yourself and you will see 10 ms ping times to Google, for example, at a reliability rate far higher than expected for V2V communications, and that’s on the best-efforts internet, which has put no special work into delivering that reliability.)
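To put 20 milliseconds in perspective, here is the distance a car covers while a message makes a full round trip. The highway speed of 108 km/h (30 m/s) is an assumption chosen for round numbers.

```python
def metres_travelled(speed_kmh: float, latency_ms: float) -> float:
    """Distance a vehicle covers while a message is in flight."""
    speed_m_per_s = speed_kmh / 3.6
    return speed_m_per_s * latency_ms / 1000.0


print(round(metres_travelled(108, 20), 2))  # 0.6
print(round(metres_travelled(108, 10), 2))  # 0.3
```

Even over a full 20 ms round trip, the car moves well under a meter.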

CANBus

Cars use digital networks today, and have for years; each car already has several controller area network (CAN) bus links. These were not designed with any security in mind, and even though their messages are simple and use a constant format, they have been found to be windows through which a car’s systems can be attacked. Because most cars don’t call out for updates, entry points into these networks are harder to find, and we have not seen many real-world attacks. In the lab, though, researchers have found ways to take over a car’s systems (including safety systems in some cases) by inserting a particular CD into the player, or by sending a message over the telematics system (such as OnStar). The most frightening attack used the radio messages that come from tire pressure sensors. This radio pathway into the car, the kind I suspect some developers will want to avoid, would let an attacker get into a car that drives past, or that parks in the wrong parking lot.
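To make the CAN point concrete, this sketch decodes a frame in the 16-byte layout of Linux SocketCAN's classic `struct can_frame`. The frame carries an identifier and up to 8 data bytes, and nothing else: no sender address and no signature, so a receiver cannot tell a legitimate controller from an attacker who has gained access to the bus. The example ID and payload are made up.

```python
import struct

# Layout of Linux SocketCAN's classic struct can_frame (16 bytes):
# 32-bit can_id (with flag bits), 8-bit length, 3 pad/reserved bytes, 8 data bytes.
CAN_FRAME_FMT = "=IB3x8s"
CAN_EFF_FLAG = 0x80000000  # set when the frame uses a 29-bit extended identifier
CAN_EFF_MASK = 0x1FFFFFFF
CAN_SFF_MASK = 0x000007FF


def parse_can_frame(raw: bytes) -> tuple[int, bytes]:
    """Return (identifier, payload). Note what is missing: no sender
    address and no signature. Any node on the bus can emit any ID."""
    can_id, length, data = struct.unpack(CAN_FRAME_FMT, raw)
    if length > 8:
        raise ValueError("classic CAN carries at most 8 data bytes")
    mask = CAN_EFF_MASK if can_id & CAN_EFF_FLAG else CAN_SFF_MASK
    return can_id & mask, data[:length]


# Hypothetical frame: ID 0x123 with a 2-byte payload.
frame = struct.pack(CAN_FRAME_FMT, 0x123, 2, b"\x01\x02")
print(parse_can_frame(frame))  # (291, b'\x01\x02')
```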

Future cars will get networks that have some level of security, so those attacks should go away, but the more ambitious V2V messages become, the more people will fear them.

Will they use it?

The answer is not certain. Developers will weigh the trade-offs, comparing what they can gain from V2V with the risk of receiving it. In the early days of V2V deployment, the gains will be extremely small and the security risk highest, so they will likely wait until penetration is high enough to provide value that outweighs the security concerns. When that will flip, if ever, is harder to predict.

Robocars will probably still broadcast V2V information, especially if the law requires it. This might help other cars around them know about a robocar ahead of them hitting the brakes. Hopefully no robocar will run a red light and need to tell others via V2V. Robocars might also exchange packets with robocars made by the same vendor, but only once there is enough density of such cars to make it worthwhile.

A version of this article originally appeared on robocars.com.
