Robohub.org

Tracking 3D objects in real-time using active stereo vision

In a recent publication in the journal Autonomous Robots, a team of researchers from the UK, Italy and France proposes a new technique that allows an active binocular robot to fixate and track objects while performing 3D reconstruction in real time.

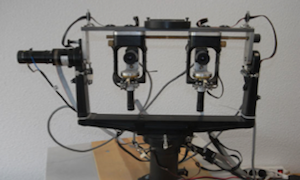

Humans can track objects by turning their heads and gazing at areas of interest. Integrating images from both eyes provides depth information that allows us to represent 3D objects. Such abilities could prove useful in robotic systems with similar vision capabilities. POPEYE, the robot shown in the image above, can independently move its head and the two cameras it uses for stereo vision.

To perform 3D reconstruction of object features, the robot needs to know the spatial relationship between its two cameras. For this purpose, Sapienza et al. calibrate the robot's vision system before the experiment by placing cards with known patterns in the environment and systematically moving the camera motors to learn how these motor changes affect the captured images. After calibration, a homography-based method lets the robot measure how much its motors have moved and relate that movement to changes in the image features. Measuring motor changes is very fast, which allows for real-time 3D tracking.
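To give a feel for how a motor reading can be turned into a prediction of image change, here is a minimal sketch of the textbook homography induced by a pure camera rotation, H = K R K⁻¹. It is not the authors' implementation: the focal length, principal point, pan angle, function names and sign convention below are all assumptions chosen for illustration, and a real pan-tilt head does not rotate exactly about the camera's optical centre, which is part of what a calibration step has to account for.

```python
import math

def matmul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def pan_rotation(theta):
    """Rotation by theta radians about the camera's vertical (y) axis.
    Assumed convention: maps point coordinates from the old camera
    frame to the new camera frame."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]

def rotation_homography(f, cx, cy, theta):
    """Homography H = K R K^-1 for an idealised pinhole camera with
    focal length f (pixels) and principal point (cx, cy).
    All parameter values are hypothetical."""
    K = [[f, 0.0, cx], [0.0, f, cy], [0.0, 0.0, 1.0]]
    K_inv = [[1.0 / f, 0.0, -cx / f], [0.0, 1.0 / f, -cy / f], [0.0, 0.0, 1.0]]
    return matmul(matmul(K, pan_rotation(theta)), K_inv)

def warp_pixel(H, u, v):
    """Apply homography H to pixel (u, v) and normalise the result."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    return x / w, y / w

if __name__ == "__main__":
    # Hypothetical camera: 500 px focal length, 640x480 image.
    H = rotation_homography(f=500.0, cx=320.0, cy=240.0, theta=math.radians(5.0))
    print(warp_pixel(H, 320.0, 240.0))  # predicted new position of the image centre
```

Under these assumptions, once the intrinsics are known, a motor angle alone is enough to predict where a feature will reappear in the image, with no need to re-detect the calibration pattern. That is the kind of shortcut that makes a motor-based update cheap enough for real-time use.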

Results show that the robot can keep track of a human face while performing 3D reconstruction. In the future, the authors hope to add zoom functionality to their method.

tags: Vision