Robohub.org

Can I trust my robot? And should my robot trust me?

What are you thinking? Robots and humans working together need to understand – and even trust – each other. Source: NASA Johnson/Flickr

If we are serious about long-term human presence in space, such as manned bases on the moon or Mars, we must figure out how to streamline human-robot interactions.

Right now, even the most basic of robots seem to have impenetrable brains. When I bought an autonomous vacuum cleaner, one that roams the house on its own, I thought I was going to save time and be able to enjoy a book or a movie, or play longer with the kids. I ended up robot-proofing every room, making sure wires and cables are out of the way, closing doors, placing electronic signposts for the robot to follow and much more – often daily. I cannot fully understand or predict what the system will do, so I don’t trust it. As a result, I play it safe, and spend time doing things to accommodate the needs I imagine the robot might have.

As a space roboticist, I think about this sort of problem happening in orbit. Imagine an astronaut on a spacewalk, working on repairing something damaged on the outside of the spacecraft. Several tools might be needed, and parts to mend or replace others. An autonomous spacecraft could serve as a floating toolbox, holding parts and tools until they’re needed, and staying close to the astronaut as she moves around the area needing to be fixed. Another robot could be clamping parts together before they are permanently fastened.

How will these robots know where they’ll need to go next, to be useful but not in the way? How will the astronaut know whether the robots are planning to move to the place she actually needs? What if something comes loose unexpectedly – can the person and the machinery figure out how to stay out of each other’s way while handling the situation efficiently? In weightless space, spatial orientation is difficult and the dynamics of moving around one another are not intuitive.

Problems of effective communication between people and their machines – particularly about actions and intentions – arise throughout the field of robotics. They must be solved if we are to take full advantage of the potential robots offer us.

Feeling safe crossing the road

Understanding robots is already a growing problem here on Earth. One day I found myself walking down a California road where autonomous cars are tested. I asked myself, “How would I know if a driverless vehicle is going to stop at the crosswalk?” I have always relied on eye contact and cues from the driver, but those options may soon be gone.

Robots have trouble understanding us, too. I recently read of an autonomous car unable to process a situation where a bicycle rider balanced himself for some time at an intersection, without putting his feet down. The onboard algorithms could not determine if the biker was going or staying.

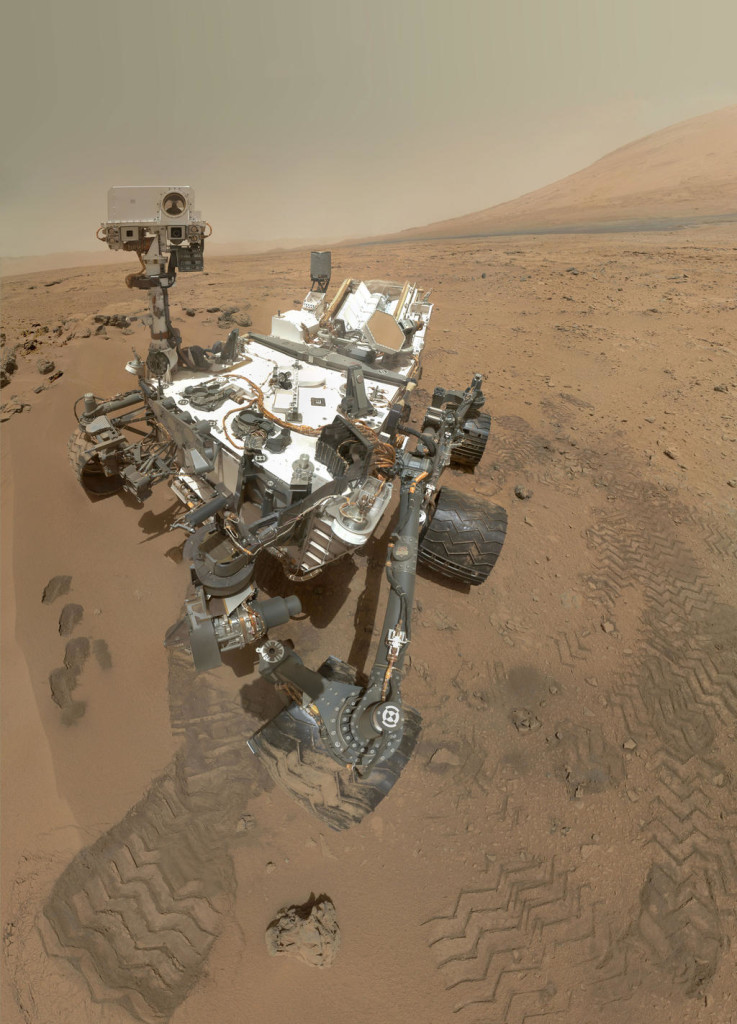

A self-portrait of the Mars rover Curiosity.

Source: NASA/JPL-Caltech/Malin Space Science Systems

When we look at space exploration and defense, we find similar problems. NASA has not used the full abilities of some of its Mars rovers, simply because the engineers could not be sure what would happen if the metallic pets were free to explore and investigate the Red Planet on their own. The humans didn’t trust the machines, so they prevented them from doing as much as they could.

The Department of Defense often uses crews of 10 or more trained personnel to support a single unmanned aerial vehicle up in the sky. Is such a drone really autonomous? Does it require the people, or do the people need it? In any case, how do they interact?

What is “autonomy,” really?

While “autonomy” means “self-governance” (from Greek), no man is an island; the same appears to hold for our robotic creations. Today we see robots as agents able to operate independently – like my vacuum cleaner – but still as part of a team, in this case the family working together to keep the house clean. If they are truly working with us, rather than instead of us, then communication is key, as well as the ability to infer intent. We may go solo for most duties, but sooner or later we will need to be able to connect with the rest of the team.

Do drones need people or do people need drones? Source: U.S. Air Force/Wikimedia Commons

The problem is that autonomous machines and humans do not fully understand each other. Each often speaks a language the other does not know and has not yet started to learn.

The question for the future is how we transmit intent between humans and robots, in both directions. How do we learn to understand – and then to trust – machines? How do they learn to trust us? What cues might each offer the other? Understanding intentions and trusting our fellow humans is already a bumpy ride, but at least we have known cues we can rely on – like pedestrian-driver eye contact at a crosswalk. We need to find new ways to read robots’ minds, the same way they need to be able to understand ours.

Perhaps an astronaut could be given a specialized display to show what the helper spacecraft’s intentions are, much like the gauges in an airplane cockpit show the plane’s status to the pilot. Maybe the displays would be embedded in a helmet visor, or enhanced by sounds that have specific meanings. But what information would they transmit, and how would they know it?

These questions are open ground for new work, the kind of learning we’ll need to do to unlock an exciting future of unimaginable exploration in which a new species, the robot, can lead us farther than ever before.

This article was originally published on The Conversation.
