Robohub.org

An ethical dilemma: When robot cars must kill, who should pick the victim?

We are moving closer to having driverless cars on roads everywhere, and naturally, people are starting to wonder what kinds of ethical challenges driverless cars will pose. One of those challenges is choosing how a driverless car should react when faced with an unavoidable crash scenario. Indeed, that topic has been featured in many of the major media outlets of late. Surprisingly little debate, however, has addressed who should decide how a driverless car should react in those scenarios. This who question is of critical importance if we are to design cars that are trustworthy and ethical.

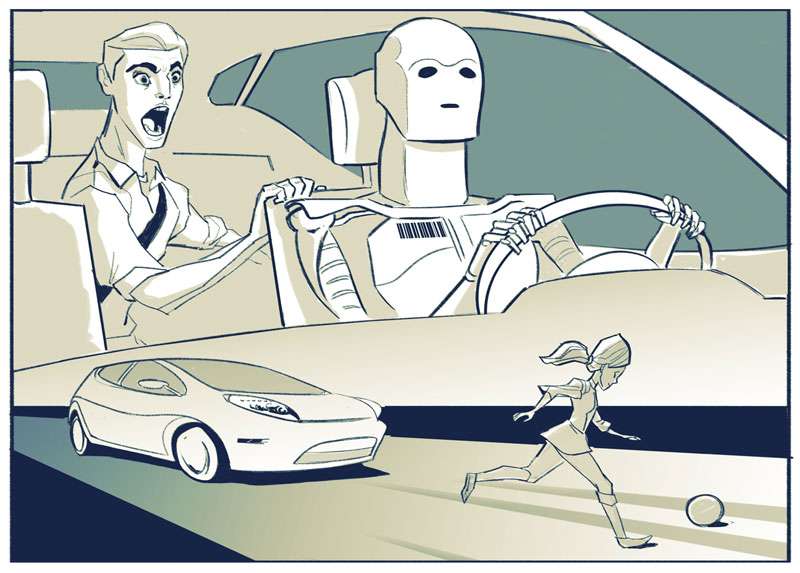

Driverless cars stand to reduce the number of driving fatalities overall. But there will still be fatal car crashes that are unavoidable. For example, consider the scenario in the illustration, a sort of modified trolley problem (the name philosophers give to classic examples of unavoidable crash scenarios, historically involving runaway trolleys and innocent bystanders), which I will call the tunnel problem.

Tunnel Problem: You are travelling along a single-lane mountain road in an autonomous car that is fast approaching a narrow tunnel. Just before you enter the tunnel, a child attempts to run across the road but trips in the center of the lane, effectively blocking the entrance to the tunnel. The car has but two options: hit and kill the child, or swerve into the wall on either side of the tunnel, thus killing you. How should the car react?

Hypothetical scenarios like this one, borrowed from philosophical ethics, force us to accept the fact that some situations have only unfortunate outcomes, and focus our attention instead on the ethical complexities involved in deciding which unfortunate outcome to prefer. In these scenarios, sorting out the who question is as ethically important as sorting out the how.

In the tunnel problem, both outcomes will certainly result in harm: someone will die. More importantly, from an ethical perspective there is no obvious “correct” answer to these kinds of dilemmas. Tunnel problems are used as thought experiments in philosophy precisely because they are so difficult to answer, and they are popular among students for the conversations they spark at the pub.

But while philosophers in the past could ponder these kinds of difficult ethical dilemmas in the lecture hall, far removed from any practical application, when we bring autonomous cars onto the scene, these philosophical thought experiments suddenly acquire practical urgency. The tunnel problem, and trolley problems like it, point to imminent design challenges that must be addressed. From a design perspective, the tunnel problem raises the following question: How should we program autonomous cars to react in difficult ethical situations?

But there is another, related, ethical question inextricably linked to this first one: Who should decide how the car reacts in difficult ethical situations? This second question asks us to turn our attention to the users, designers, and policymakers surrounding autonomous cars, and consider who has legitimate moral authority to make decisions. We need to consider these questions together if our goal is to produce legitimate answers to either.

At first glance this second question — the who question — seems odd. Surely it is the designers’ job to program the car to react this way or that? I’d like to convince you otherwise.

I believe that in many driving situations, tunnel problems for example, it is ethically problematic to allow designers to decide how an autonomous car should react. In a draft paper I recently presented at We Robot – a conference focused on the legal, ethical, and policy issues surrounding robotics – I consider the who question in some detail. I argue that, instead of assuming designers are the right people to decide in all circumstances how a driverless car should react, we must explore design methodologies that in many circumstances allow individual drivers to decide on the preferred unfortunate outcome. My reasons stem from the nature of tunnel-like problems and from the relationship that exists between designers, drivers, and technology.

From a driver’s perspective, the tunnel problem is much more than a complex design issue. It is effectively an end-of-life decision. Even if we modify the problem so that serious injury is the likely outcome, tunnel problems pose profound moral questions that implicate the driver directly. Seen this way, the tunnel problem is a deeply personal moral problem for the driver who happens to be in the car at that moment.

Allowing designers to pick the outcome of tunnel-like problems treats those dilemmas as if they have a “right” answer that can be selected and applied in all similar situations. In reality they do not. Is it best for the car to always hit the child? Is it best for the car always to sacrifice the driver? If we focus on designers, the tunnel problem appears to pose an impossible design problem, one that can only be solved arbitrarily.

But there is a better solution. We can look to other domains of complex moral decision-making for guidance on how to get some traction on the who question.

In healthcare we deal with deeply personal moral problems, such as end-of-life decisions, on a daily basis. We are so accustomed to them, in fact, that we have found ways of answering the similar who question in healthcare with some success. In medical ethics there is general agreement that it is impermissible to impose answers to deeply personal moral questions upon others, except in very special circumstances. In other words, it is generally left up to the individual for whom the question has direct moral implications to decide which outcome is preferable. When faced with a diagnosis of cancer, for example, it is up to the patient to decide whether or not to undergo chemotherapy. When faced with imminent death, it is up to the dying patient to decide if they would like CPR in the event of cardiac arrest. Doctors and nurses are trained to respect patients’ decisions, and to work around them within reason.

In healthcare, the notion of imposing medical interventions upon individuals without their express consent is so contentious that it is given a special name: paternalism. Subjecting someone to paternalism in the medical context is considered unethical, because it robs them of their personal autonomy.

The principle of personal autonomy has intuitive force beyond healthcare. Why would one agree to let someone else decide deeply personal moral questions, such as end-of-life decisions in a driving situation, that one feels capable of deciding autonomously? Why should we allow designers to decide the outcome of tunnel problems if there is a way for individual drivers to do so themselves?

From an ethical perspective there is nothing exceptional about the tunnel problem that suggests we should allow designers to impose an answer to that deeply personal moral question upon drivers. If we allow designers to choose how a car should react to a tunnel problem, we risk subjecting drivers to paternalism by design: cars will not respect drivers’ autonomous preferences in those situations.

What would personal autonomy look like in the context of a tunnel problem? For starters, as in healthcare, we should expect drivers’ preferences to reflect their personal moral commitments. It could be that a very old driver would always choose to sacrifice herself to save a child. A deeply committed animal lover might opt for the wall even if it were a deer in his car’s path. It might turn out that most of us would choose not to swerve. These are all reasonable choices when faced with impossible situations. Whatever the outcome, it is in the choosing that we maintain our personal autonomy.

The upshot of considering the second question (who should decide how the car reacts in difficult ethical situations?) is that certain deeply personal moral questions arising with autonomous cars clearly ought to be answered by drivers. Just because designers hold the technical abilities to engineer autonomous cars does not give them the authority to impose particular moral decisions on all users. If designers assume moral authority, and subject users to paternalistic relationships, they run the risk of making technology that is less ethical, and certainly less trustworthy.

Of course, recognizing the ethical need for user input in some situations should not lead to designer paralysis. Technical and practical limitations will sometimes prevent designers from making autonomous cars that ask users to make explicit choices in all unavoidable crash scenarios. Yes, we must recognize the importance of letting drivers autonomously express certain preferences. But we must also balance the need for personal autonomy against the severity of the ethical problem posed by the design decision. Asking for driver input in all scenarios would create unreasonable barriers to design and would prevent society from realizing the many other benefits offered by the technology, such as a reduction in overall crashes. Some driver preferences will not be “serious” enough to warrant their input.

The same is true in healthcare, where sometimes consent can be assumed. Healthcare professionals are obligated to get consent from patients only in cases that warrant that consent. A proportional approach is applied to make the determination. The challenge for autonomous car designers is adopting a design methodology that allows for the identification of paternalistic design choices, and then applies a proportional assessment to determine which of those choices requires user input. Thus, just as we have proportional assessment models in healthcare, we can work to develop similar approaches when designing autonomous car technology.

None of this simplifies the design of autonomous cars. Indeed, there are significant design barriers associated with ethical issues that will need to be overcome before autonomous cars ever enter the general market. But making technology both trustworthy and ethically sound requires that we move beyond purely technical considerations in design. We should work toward design methodologies that are capable of identifying paternalism, and that ultimately enable users to exercise more of their autonomy when using technology. When robot cars must kill, there are good reasons why designers should not be the ones picking victims.
