Robohub.org

AR app from Docomo translates menus and signs in real time

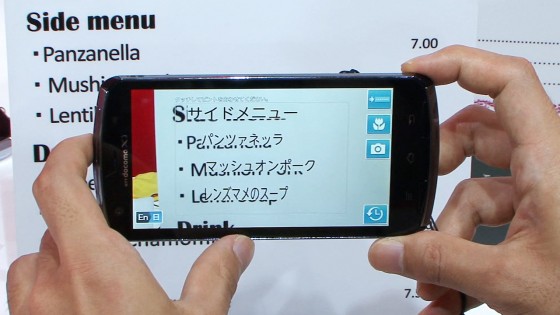

On October 11, NTT Docomo will start the Utsushite Honyaku service, which instantly translates foreign-language restaurant menus when you point a smartphone’s camera at them.

Utsushite Honyaku is the commercial release of a service previously offered as a trial. In addition to menus, the new service can now handle signs. It translates between Japanese and four languages: English, Korean, and both simplified and traditional Chinese.

“For example, suppose you visit Korea, and you can’t read signs in Korean at all. You can start up this app, select the Korean dictionary, and use it just by pointing your smartphone’s camera at the writing you can’t read. The translation is shown over the Korean text, so when you use this app, it feels as if you’re looking at a sign in Japanese.”

“This service doesn’t use cloud translation; instead, the app itself and the dictionary are downloaded to the phone. This means it can be used for free, without having to access the mobile network.”

The user can choose between two translation modes. In one mode, the whole translation is superimposed on the camera image, and in the other, it’s shown line by line in a separate window.

The service can also translate from Japanese into the four languages, so it’s helpful not only for Japanese Docomo users, but also for foreign Android smartphone users visiting Japan.