Robohub.org

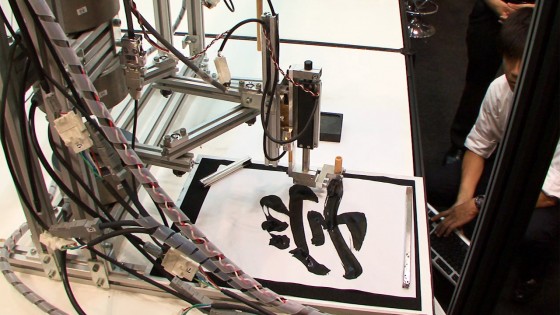

Calligraphy robot uses a Motion Copy System to reproduce detailed brushwork

A research group at Keio University, led by Seiichiro Katsura, has developed the Motion Copy System, which identifies and stores detailed brush strokes from information about a calligrapher’s movements. This lets a robot faithfully reproduce those strokes.

The system records calligraphy movements using a brush whose handle and tip are separate parts. The two parts are linked, with the handle acting as the master system and the tip as the slave system. Characters are written by handling the device just like an ordinary brush.

Unlike conventional motion capture systems, this one records and reproduces not only the brush’s movement but also the force applied to it and the sensation of touching something. Until now, passing on traditional skills has depended on intuition and experience; the hope is that this system will let such skills be learned more efficiently.

“What’s new is that there’s a motor attached to the brush, so while the person is moving it, the motion and force are recorded as digital data through the motor. What’s more, with this technology, the recorded motion and force can be reproduced anytime, anywhere, using the motor.”
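The record-then-reproduce idea described in the quote can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Keio group’s software: the `Sample` and `MotionCopyRecorder` names, the single position axis, and the units are all hypothetical. What it shows is that each time step stores force alongside position, so playback can command both.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple


@dataclass
class Sample:
    """One time step of a demonstration (hypothetical single-axis model)."""
    t: float         # seconds since recording started
    position: float  # brush position along one axis, e.g. mm
    force: float     # force the writer applies through the brush, e.g. N


@dataclass
class MotionCopyRecorder:
    """Hypothetical recorder that keeps motion AND force for every sample."""
    samples: List[Sample] = field(default_factory=list)

    def record(self, t: float, position: float, force: float) -> None:
        # Called once per control cycle while the calligrapher writes.
        self.samples.append(Sample(t, position, force))

    def replay(self) -> Iterator[Tuple[float, float, float]]:
        # Yields (time, position command, force command) for the motor,
        # in the order the samples were recorded.
        for s in self.samples:
            yield s.t, s.position, s.force


if __name__ == "__main__":
    rec = MotionCopyRecorder()
    rec.record(0.00, 0.0, 0.2)  # brush touches down with light pressure
    rec.record(0.01, 1.5, 0.8)  # stroke continues with more pressure
    for t, pos, f in rec.replay():
        print(f"t={t:.2f} s  position={pos}  force={f}")
```

Keeping `force` next to `position` is what separates this from a plain motion-capture log, which would store only the trajectory.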

“We’ve succeeded in using the motor to record the movements of a veteran calligrapher, and to actually reproduce them. So, I think we’ve demonstrated that, to record and reproduce human skills, it’s necessary to record not just motions, but also how strongly those motions are made.”

In the graph of position and brush pressure, the positions of the master and slave systems coincide, while their pressure waveforms are mirror images of each other. This shows that the law of action and reaction is being artificially implemented between the master and slave systems.
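Written as equations, the two behaviours visible in the graph match the usual goals of master-slave (bilateral) control. The notation is an assumption, since the article gives no formulas: $x_m$ and $x_s$ are the master (handle) and slave (tip) positions, and $f_m$ and $f_s$ are the forces measured on each side.

$$
\begin{aligned}
x_m - x_s &= 0 && \text{(the tip follows the handle)}\\
f_m + f_s &= 0 && \text{(action and reaction: } f_s = -f_m\text{)}
\end{aligned}
$$

The second condition is why the two pressure traces look inverted: the force the tip meets at the paper is fed back to the hand on the handle with equal magnitude and opposite sign.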

“Currently, multimedia only uses audiovisual information. But we’d like to bring force, action, and motion into IT, by reproducing this kind of physical force via a network, or storing skills on a hard disk for downloading. So, with this new form of IT, you’d be able to access skill content.”
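Storing skills “on a hard disk for downloading” amounts to serializing a recorded trajectory to a file that another device can load and replay. A minimal sketch follows; the JSON layout, the `save_skill` and `load_skill` names, and the `(t, position, force)` tuple are hypothetical choices for illustration, not a format used by the researchers.

```python
import json
from pathlib import Path
from typing import List, Tuple

# Each sample is (time_s, position, force); this layout is a hypothetical choice.
Trajectory = List[Tuple[float, float, float]]


def save_skill(path: Path, trajectory: Trajectory, label: str) -> None:
    """Write a recorded motion-and-force trajectory to disk as JSON."""
    data = {
        "label": label,
        "fields": ["t", "position", "force"],
        "samples": [list(s) for s in trajectory],
    }
    path.write_text(json.dumps(data), encoding="utf-8")


def load_skill(path: Path) -> Tuple[str, Trajectory]:
    """Read a trajectory back so it can be replayed on another device."""
    data = json.loads(path.read_text(encoding="utf-8"))
    samples = [tuple(s) for s in data["samples"]]
    return data["label"], samples
```

JSON keeps the sketch dependency-free; a system sampling at high control rates would likely prefer a compact binary format.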