Robohub.org

CES 2017, part one: Robocar technology and concept cars

CES is the big event for major car makers to show off robocar technology. Most of the north hall, and a giant parking lot next to it, were devoted to car technology and self-driving demos.

Gallery of CES comments

Earlier I posted about many of the pre-CES announcements, and it turns out there were not too many extra events during the show. I went to visit many of the booths and demos and prepared some photo galleries. The first is my gallery on cars. In this gallery, each picture has a caption, so you need to page through them to see the actual commentary at the bottom under the photo. Only 3 of the many photos are in this post.

BMW’s concept car starts to express the idea of an ultimate non-driving machine. Inside you see that the back seat has a bookshelf in it. Chances are you will just use your eReader, but this expresses an important message: that the car of the future will be more like a living, playing or working space than a transportation space.

Nissan

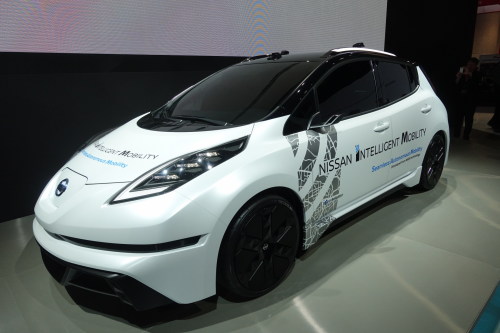

The main announcement during the show was from Nissan, which outlined its plans and revealed some concept cars you will see in the gallery. The primary demo involved integration of some technology worked on by the leader of Nissan’s Silicon Valley lab, Maarten Sierhuis, in his prior role at NASA. Nissan is located close to NASA Ames (I myself work at Singularity University on the NASA grounds) and did testing there.

Their demo showed an ability to ask a remote control center to assist a car with a situation it doesn’t understand. When the car sees something it can’t handle, it stops or pulls over, and people in the remote control center can draw a path on their console to tell the car where to go instead. For example, an operator can draw how to get around an obstacle, take a detour, or obey somebody directing traffic. If the same problem happens again, and the path has been approved, the next car can use the same path if it remains clear.
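As a rough illustration of the flow described above, here is a minimal sketch of how an operator-drawn path might be stored and reused. Every name in it (`AssistedPath`, `ControlCenter`, `situation_id`, the `is_clear` callback) is hypothetical and invented for this example; nothing here is taken from Nissan's actual system.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

Point = Tuple[float, float]  # hypothetical (x, y) waypoint in map coordinates


@dataclass
class AssistedPath:
    """A path drawn by a remote operator for one stuck situation."""
    situation_id: str
    waypoints: List[Point]
    approved: bool = False


@dataclass
class ControlCenter:
    """Hypothetical store of operator-drawn paths, keyed by situation."""
    paths: Dict[str, AssistedPath] = field(default_factory=dict)

    def record(self, situation_id: str, waypoints: List[Point]) -> AssistedPath:
        # An operator draws a path for a car that has stopped or pulled over.
        path = AssistedPath(situation_id, list(waypoints))
        self.paths[situation_id] = path
        return path

    def approve(self, situation_id: str) -> None:
        # Mark the drawn path as safe for later cars to reuse.
        self.paths[situation_id].approved = True

    def lookup(
        self, situation_id: str, is_clear: Callable[[List[Point]], bool]
    ) -> Optional[AssistedPath]:
        # Reuse a path only if it exists, was approved, and is still clear.
        path = self.paths.get(situation_id)
        if path is not None and path.approved and is_clear(path.waypoints):
            return path
        return None


center = ControlCenter()
center.record("obstacle-1", [(0.0, 0.0), (1.5, 2.0), (3.0, 0.0)])
center.approve("obstacle-1")
reused = center.lookup("obstacle-1", is_clear=lambda waypoints: True)
```

The `is_clear` check is passed in by the car because only the car's own sensors can say whether the stored path is still free of obstacles at that moment.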

I have seen this technology in a number of places before, including of course the Mars rovers, and we use something like it at Starship Technologies for our delivery robots. This is the first deployment by a major automaker.

Nissan also committed to deployment in early 2020 as they have before — but now it’s closer.

You can also see Nissan’s more unusual concepts, with tiny sensor pods instead of side-view mirrors, and steering wheels that fold up.

Startups

Several startups were present. One is AIMotive, from Hungary. They gave me a demo ride in their test car. They are building a complete software suite, primarily using cameras and radar but also able to use LIDAR. They are working to sell it to automotive OEMs and already work with Volvo on DriveMe. The system uses neural networks for perception, but more traditional coding for path planning and other functions. It wasn’t too fond of Las Vegas roads because the lane markers are not painted there; lanes are divided only with Botts’ dots. But it was still able to drive by finding the edge of the road. They claim they now have 120 engineers working on self-driving systems in Hungary.

Startup NAuto showed their dashcam system, currently sold for monitoring drivers. They plan to use the data gathered by it to help people train self-driving systems.

RobotTuner in the Netherlands has built a simulated environment for testing and development of cars. They offered to let people race it like a video game. The sample car was a neural network model — you can’t really train them in simulation or you will train on the artifacts of simulation — but you can use them to test out your simulation.

Civil Maps, only recently funded, was out pushing their new mapping database, built by using neural network techniques and people’s laser and video scans of the road.

Navya continues to push and deploy in more places in the shuttle market. They gave rides as well.

I didn’t take most of the rides. Turns out the rides are getting dull. If the system works well, the ride is boring. I see the wheel move and I see a screen where the car is drawing boxes around the cars and other obstacles I see. If they have designed the demo well it is not making too many mistakes.

Faraday Future finally showed a production car. Press on it has been mixed: it’s not shipping yet, and it has impressive specs, but they don’t seem to be that much better than the Tesla. One cute feature was a pop-up 360 degree LIDAR in the hood. Frankly, the hood is not a great place to put such a LIDAR, since from there it only sees forward and to the sides, but it looks sleek, as though looking sleek is important in first generation cars.

FF has talked about some interesting self-driving plans, but so far that has taken a backseat (!) to their push as a high-end EV vendor to compete with Tesla. Let’s hope we hear more in the future of Faraday Future.

There were several people promoting new LIDARs at low prices. These LIDARs use MEMS mirrors (the same tool used in DLP projectors) and will thus have no large moving parts. These vendors claimed prices and capabilities to compete with Quanergy’s solid state units. Two of the vendors claimed 200m of range. That’s either a revolutionary technology or a lie, however. LIDARs in the near-infrared just can’t emit enough light to be eye safe and have it bounce off a black car 200m away and come back to the sensor bright enough to be visible against sunlight. You would need a whole new type of sensitivity (and today’s LIDARs use single photon detectors) or a way to tune out noise. Which perhaps they have, but it would be big news. You can see a white car at 200m, which may be what they are promoting, but a LIDAR that only sees white cars is not really good enough.
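To put rough numbers on the white car versus black car point, here is a back-of-envelope sketch. It assumes the simplest textbook model, in which the returned signal from a target that fills the beam scales with reflectivity divided by range squared, so maximum range scales with the square root of reflectivity. The reflectivity values (0.8 for a white car, 0.1 for a dark one) are illustrative assumptions, not measurements from any vendor, and the model ignores sunlight noise and atmospheric loss.

```python
import math


def range_for_reflectivity(
    ref_range_m: float, ref_reflectivity: float, target_reflectivity: float
) -> float:
    """Scale a known detection range to a target of different reflectivity.

    Assumes received power ~ reflectivity / range**2 and a fixed detection
    threshold, so range scales with sqrt(reflectivity).
    """
    return ref_range_m * math.sqrt(target_reflectivity / ref_reflectivity)


# Assumed: the unit just detects a white car (reflectivity 0.8) at 200 m.
dark_car_range = range_for_reflectivity(200.0, 0.8, 0.1)
print(round(dark_car_range, 1))  # 70.7
```

Under these assumptions, a unit that only just sees a white car at 200m would see a dark one at roughly 70m, which is why a range figure means little without the reflectivity it was measured at.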

But the world does need to see 200m. Today’s LIDARs don’t do that, and highway speeds demand being able to see stalled objects (which radar is not good at) at 200m out.
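One way to see where a figure like 200m can come from is the standard stopping-distance formula: distance covered during the system's reaction time, plus braking distance v²/(2a). The sketch below uses assumed values (120 km/h, 0.5 s of latency, and a gentle 3 m/s² versus a hard 7 m/s² deceleration); these are illustrative numbers, not figures from the show.

```python
def stopping_distance_m(speed_kmh: float, reaction_s: float, decel_mps2: float) -> float:
    """Distance to stop: travel during reaction time plus v^2 / (2a) braking."""
    v = speed_kmh / 3.6  # convert km/h to m/s
    return v * reaction_s + v * v / (2.0 * decel_mps2)


# Assumed values: 120 km/h highway speed and 0.5 s of sensing/actuation latency.
gentle = stopping_distance_m(120.0, 0.5, 3.0)  # comfortable braking
hard = stopping_distance_m(120.0, 0.5, 7.0)    # emergency braking
print(f"gentle: {gentle:.0f} m, hard: {hard:.0f} m")
```

With these assumptions the gentle stop comes out at roughly 200m and the hard stop at a bit under 100m, so seeing a stalled object 200m out is about what it takes to stop without slamming on the brakes.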

Delphi

I was impressed with Delphi’s work and attitudes. Their new test cars include even more sensors, including Mobileye. The OEMs that fail to produce their own internal self-driving systems will be going to companies like Delphi to get such systems.

Delphi believes, as I do, that the big market will be in cars for robotaxi (mobility on demand) services, rather than high-end luxury cars for individual owners that feature self-drive systems. Certainly, with services like Uber being as hot as they are, robotaxi services will be accessible to a much larger segment of the population. Most car companies focus on making cars with added self-driving at the high end, and such cars will sell — but the robotaxis will drive more miles and thus sell more.

Concepts

There were a lot of concept cars. As per usual, most of them were silly — a lot of money spent to make the company look cool with something that will never actually ship.

Popular choices were very sparse and open interiors. Also shown by several were steering wheels that could fold away or hide. I think that’s a good thing to make, though it won’t be fancy multi-motor pop outs like we see here. Once you stop driving except in special situations, you might be fine with a much simpler driving interface like handlebars (which can pop out of a dashboard more easily) or even a wheel you pull out of a shelf and plug in. Eventually, it won’t have any mechanical linkage, it will have a drive-by-wire linkage that can bypass the self-drive systems in the event of total system failure.

Also super popular — see the BMW — are panels to hide the wheels. This can reduce drag but there are a lot of problems with doing it, especially on real roads. It is done to look futuristic.

Many concepts showed small sticks with sensors instead of side-view mirrors. Today, the law mandates side-view mirrors, but soon it will allow the use of cameras which show on a screen inside the car. Car makers want to do this because it reduces drag and they have to meet their fuel efficiency targets. Everybody also loves unusual doors, which either open upwards or are in the suicide door configuration.

I suspect all these fancy add-ons may appear in high-end cars, but not in robotaxis. There you want low costs. The convenience of electric doors (like in minivans today) may be popular for taxis, so we’ll probably see that. And no need for individual doors. One person vehicles will be meant for one person, and 4 person vehicles will be meant for 3 or 4, who all get in at the same time.

For more photos and commentary, check out my gallery on cars and look to a later posting of my gallery on the hype over “smart” and “connected” devices in the internet-o-thingies.

tags: Automotive, Autonomous Cars, autonomous vehicles, Brad Templeton, robocars