Robohub.org

Grasping objects in a way that is suitable for manipulation

This post is part of our ongoing efforts to make the latest papers in robotics accessible to a general audience.

Robots are expected to manipulate a large variety of objects from our everyday lives. The first step is to establish a physical connection between the robot end-effector and the object to be manipulated. In our context, this physical connection is a robotic grasp. Which grasp the robot adopts will depend on how it needs to manipulate the object. This problem is studied in the latest Autonomous Robots paper by Hao Dang and Peter Allen at Columbia University.

Existing grasp planning algorithms have made impressive progress in generating stable robotic grasps. However, stable grasps are mostly good for transporting objects. Once manipulation is considered, stability alone is no longer sufficient to guarantee success. For example, a mug can be grasped with a top-down grasp or a side grasp. Both grasps are good for transporting the mug from one place to another. However, if the manipulation task is to pour water out of the mug, the top-down grasp is no longer suitable, since the palm and the fingers of the hand may block the opening of the mug. We call such task-related constraints “semantic constraints”.

In our work, we take an example-based approach to build a grasp planner that searches for stable grasps satisfying semantic constraints. This approach is inspired by psychological research showing that human grasping is guided to a very large extent by previous grasping experience. To mimic this process, we propose that semantic constraints be embedded into a database that includes partial object geometry, hand kinematics, and tactile contacts. Task-specific knowledge in the database should be transferable between similar objects. We design a semantic affordance map, which contains a set of depth images from different views of an object and predefined example grasps that satisfy the semantic constraints of different tasks. The depth images help infer the approach direction of a robot hand with respect to an object, guiding the hand along an ideal approach direction. The predefined example grasps provide hand kinematics and tactile information to the planner as references for the ideal hand posture and tactile contact formation. Using this information, our planner searches for stable grasps with an ideal approach direction, hand kinematics, and tactile contact formation.
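To make the idea of a semantic affordance map more concrete, here is a minimal Python sketch of how such a database entry might be organized. All class and field names (`ExampleGrasp`, `View`, `SemanticAffordanceMap`, `lookup`) are hypothetical and chosen for illustration; the post does not describe the authors' actual data layout. Attaching each example grasp to a particular view is also an assumption of this sketch.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ExampleGrasp:
    """A predefined grasp that satisfies the semantic constraint of one task."""
    task: str                      # task label, e.g. "to-pour" (hypothetical)
    joint_angles: list[float]      # hand kinematics (hand posture)
    tactile_contacts: list[float]  # tactile readings at the contacts


@dataclass
class View:
    """Partial object geometry as seen from one approach direction."""
    approach_direction: tuple[float, float, float]
    depth_image: list[list[float]]
    grasps: list[ExampleGrasp] = field(default_factory=list)


@dataclass
class SemanticAffordanceMap:
    """Depth images from several views plus example grasps (assumed layout)."""
    object_class: str
    views: list[View] = field(default_factory=list)

    def lookup(self, task: str) -> list[tuple[View, ExampleGrasp]]:
        """Return every (view, grasp) pair stored for the given task."""
        return [(v, g) for v in self.views for g in v.grasps if g.task == task]


# Toy example: a mug seen from the top and from the side.
side = View((1.0, 0.0, 0.0), [[0.4, 0.4], [0.4, 0.4]],
            [ExampleGrasp("to-pour", [0.2, 0.3, 0.3], [1.0, 1.0, 0.0])])
top = View((0.0, 0.0, -1.0), [[0.6, 0.1], [0.1, 0.6]])
mug_map = SemanticAffordanceMap("mug", [top, side])
pour_grasps = mug_map.lookup("to-pour")
```

In this toy map, only the side view carries a "to-pour" grasp, which mirrors the mug example above: a top-down grasp would block the opening.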

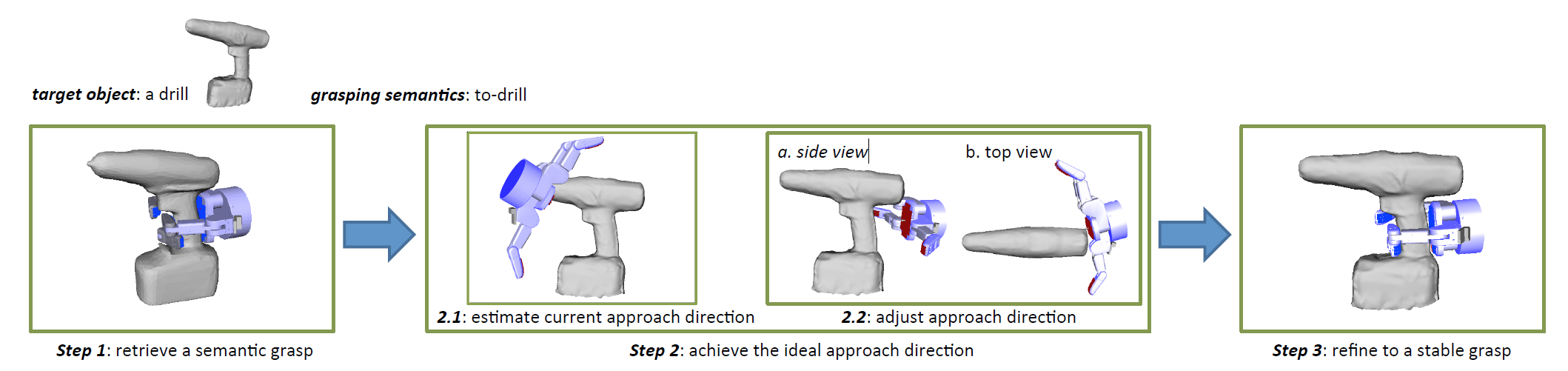

The figure above illustrates the process of planning a semantic grasp on a target object (here, a drill), given the grasping semantics “to-drill” and a semantic affordance map built on a source object (another drill, shown in Step 1, which is similar to the target drill). Step 1 retrieves a semantic grasp stored in the semantic affordance map. This semantic grasp is used as a reference in the next two steps. Step 2 achieves the ideal approach direction on the target object according to the exemplar semantic grasp. Once the ideal approach direction is achieved, a local grasp planning process starts in Step 3 to obtain stable grasps on the target object whose tactile feedback and hand posture are similar to those of the exemplar semantic grasp.
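The three steps can be sketched in a few lines of Python. This is a simplified illustration under strong assumptions, not the authors' planner: depth images are flattened to short vectors, plain Euclidean distance stands in for whatever matching the paper uses, and the stability threshold `min_quality` is made up. All function names and dictionary keys are hypothetical.

```python
import math


def distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def best_approach(exemplar_depth, target_views):
    """Step 2: pick the target view whose depth image best matches the exemplar."""
    return min(target_views,
               key=lambda name: distance(exemplar_depth, target_views[name]))


def refine_grasp(exemplar, candidates, min_quality):
    """Step 3: among stable candidates, pick the one most similar to the
    exemplar in hand posture and tactile contacts."""
    stable = [c for c in candidates if c["quality"] >= min_quality]
    if not stable:
        return None
    return min(stable,
               key=lambda c: distance(exemplar["posture"], c["posture"])
               + distance(exemplar["tactile"], c["tactile"]))


def plan_semantic_grasp(affordance_map, task, target_views,
                        sample_candidates, min_quality=0.5):
    exemplar = affordance_map[task]                        # Step 1: retrieve
    view = best_approach(exemplar["depth"], target_views)  # Step 2: approach
    grasp = refine_grasp(exemplar, sample_candidates(view), min_quality)
    return view, grasp                                     # Step 3: local search


# Toy data: one stored grasp, two views of the target, three candidate grasps.
affordance_map = {"to-drill": {"depth": [1.0, 2.0, 3.0],
                               "posture": [0.5, 0.5],
                               "tactile": [1.0, 0.0]}}
target_views = {"front": [1.1, 2.0, 2.9], "top": [5.0, 5.0, 5.0]}


def sample_candidates(view):
    return [
        {"id": "a", "posture": [0.5, 0.6], "tactile": [1.0, 0.0], "quality": 0.8},
        {"id": "b", "posture": [0.5, 0.5], "tactile": [1.0, 0.0], "quality": 0.1},
        {"id": "c", "posture": [0.0, 0.0], "tactile": [0.0, 1.0], "quality": 0.9},
    ]


view, grasp = plan_semantic_grasp(affordance_map, "to-drill",
                                  target_views, sample_candidates)
```

In the toy data, candidate "b" matches the exemplar exactly but is rejected as unstable, so the sketch settles on the next most similar stable candidate. This reflects the point made earlier: the planner looks for grasps that are both stable and semantically appropriate.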

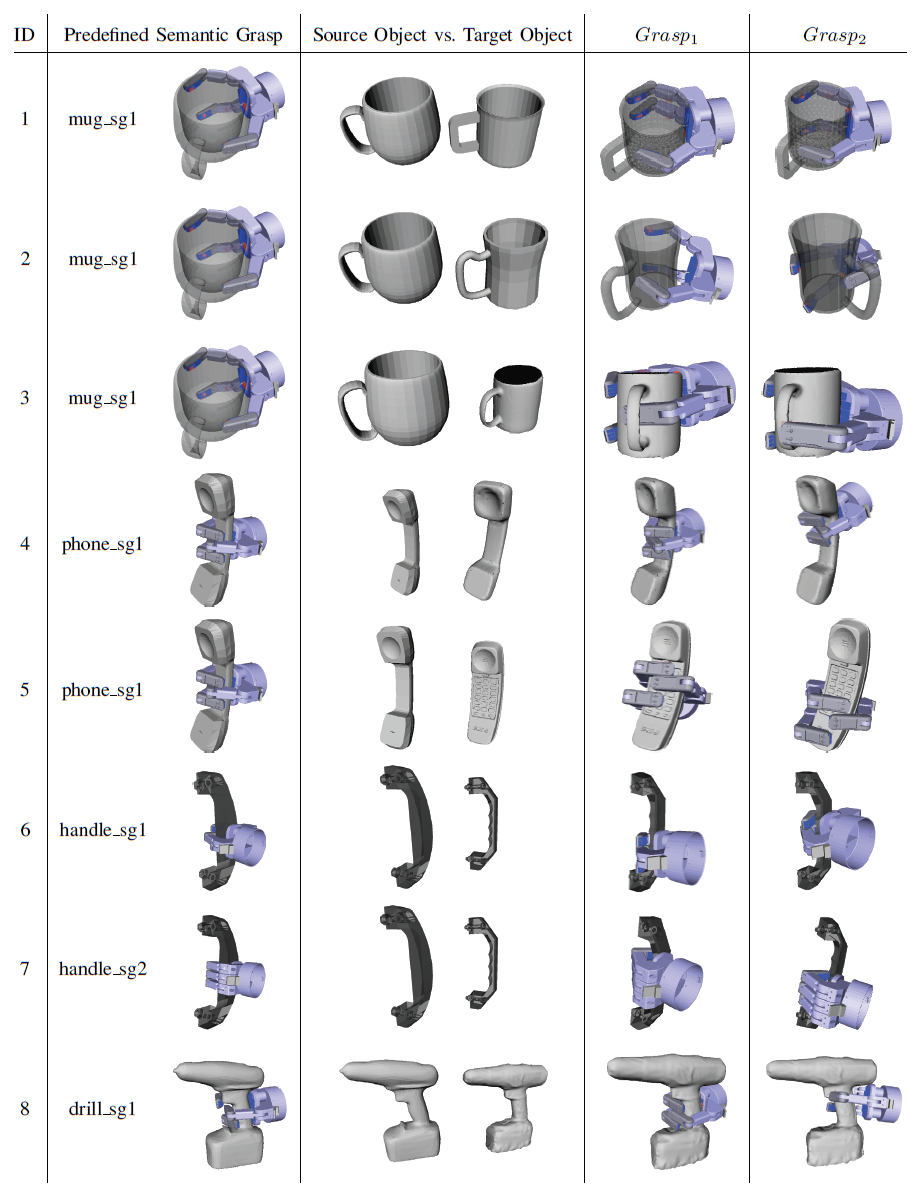

The figure above shows some grasps planned on typical everyday objects using the approach. Shown from left to right are: the experiment ID, the predefined semantic grasps stored in the semantic affordance map, a pair of source and target objects for each experiment, and the top two grasps generated. The top two grasps, shown in the last two columns, were obtained within 180 seconds and are both stable in terms of grasp quality.

For more information, you can read the paper Semantic grasping: planning task-specific stable robotic grasps (Hao Dang and Peter K. Allen, Autonomous Robots – Springer US, June 2014) or ask questions below!
