Robohub.org

How a challenging aerial environment sparked a business opportunity

We develop the fastest, smallest and lightest distance sensors for advanced robotics in challenging environments. These sensors were born from a fruitful collaboration with CERN during the development of flying indoor inspection systems.

Our story began with a challenge: the European Organization for Nuclear Research (CERN) asked if we could use drones to perform fully autonomous inspections within the tunnel of the Large Hadron Collider. If you haven’t seen it, it’s a complex environment, perhaps one of the most unfriendly imaginable for fully autonomous drone flight. But we accepted the mission, rolled up our sleeves, and got to work. As you can imagine, the mission was very challenging!

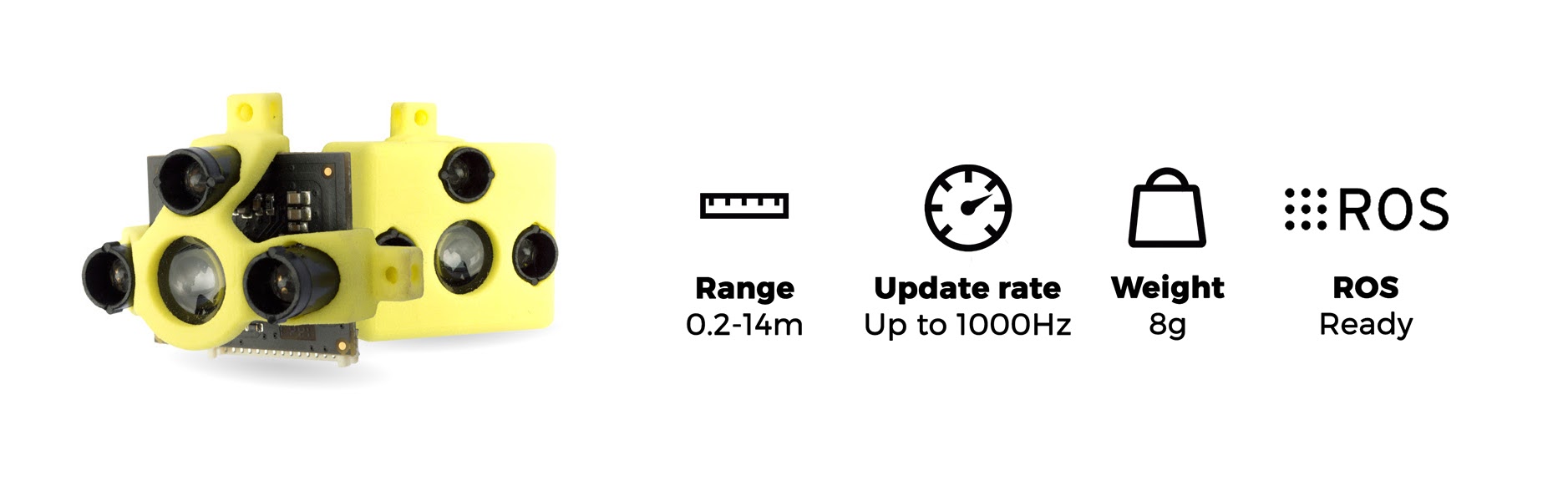

One of the main issues we faced was finding suitable sensors to place on the drone for navigation and anti-collision. We got everything on the market that we could find and tried to make it work. Ultrasound was too slow and its range too short. Lasers tended to be too big and too heavy, and consumed too much power. Monocular and stereo vision were highly complex, placed a huge computational burden on the system, and even then were prone to failure. It became clear that what we really needed simply didn’t exist! That’s how the concept for the TeraRanger brand of sensors was born.

Having failed to make any of the available sensing technologies work at the performance levels required, we came to the conclusion that we would need to build the sensors from the ground up. It wasn’t easy (and still isn’t), but we finally had something small enough and light enough (8g), with fast refresh rates and enough range to work well on the drone. Leading academics in robotics could see potential in the sensor and wanted some for themselves, then more people wanted them, and before too long we had a new business.

Millimetre precision wasn’t vital for the drone application, but the high refresh rates and range were. And by not using a laser emitter we were able to give the sensor a 3-degree field of view, which for many applications proved to be a real boon, giving a smoother flow of data when faced with uneven surfaces and complex, cluttered environments. It also enabled the sensor to be fully eye-safe and the supply current to remain low.

Plug and play multi-axis sensing

Knowing that we would often need to use multiple sensors at the same time, we also designed in support for multi-sensor, multi-axis requirements. Using a ‘hub’ we can simultaneously connect up to eight sensors to provide a simple-to-use, plug and play approach to multi-sensor applications. By controlling the sequence in which sensors are fired (along with some other parameters) we are able to limit or eliminate the potential for sensor cross-talk, and then stream an array of calibrated distance values in millimetres at high speed. From a user’s perspective this is about as simple as it gets, since the hub also centralises power management.
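To make the firing-sequence idea concrete, here is a minimal sketch in Python. It is not the hub's firmware or API: the function names, the `read_sensor` callback and the choice of which sensors share a time slot are all assumptions made for illustration. Sensors that share a slot are chosen to be as far apart as possible around the ring of ports, and the result is one frame of distances in millimetres, one value per port.

```python
from typing import Callable, List, Sequence, Tuple


def make_firing_groups(num_sensors: int = 8, per_slot: int = 2) -> List[Tuple[int, ...]]:
    """Split ports 0..num_sensors-1 into time slots (hypothetical scheme).

    Ports that share a slot are evenly spread around the ring, so
    neighbouring sensors never fire in the same slot (unless per_slot
    equals num_sensors).
    """
    if per_slot < 1 or num_sensors % per_slot != 0:
        raise ValueError("per_slot must divide num_sensors")
    stride = num_sensors // per_slot
    return [tuple(range(start, num_sensors, stride)) for start in range(stride)]


def read_frame(
    read_sensor: Callable[[int], int],
    groups: Sequence[Sequence[int]],
    num_sensors: int = 8,
) -> List[int]:
    """Fire the groups one after another and return one distance (mm) per port.

    read_sensor is an assumed callback that triggers one port and
    returns its calibrated distance in millimetres.
    """
    frame = [0] * num_sensors
    for group in groups:          # one time slot at a time
        for port in group:        # ports within a slot fire together
            frame[port] = read_sensor(port)
    return frame


if __name__ == "__main__":
    # Stand-in for real hardware: each port reports a fixed distance.
    groups = make_firing_groups(8, 2)
    print(groups)
    print(read_frame(lambda port: 1000 + 10 * port, groups))
```

With `per_slot=1` every sensor gets its own slot, which removes any chance of overlap at the cost of a lower frame rate; larger values trade some cross-talk margin for speed.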

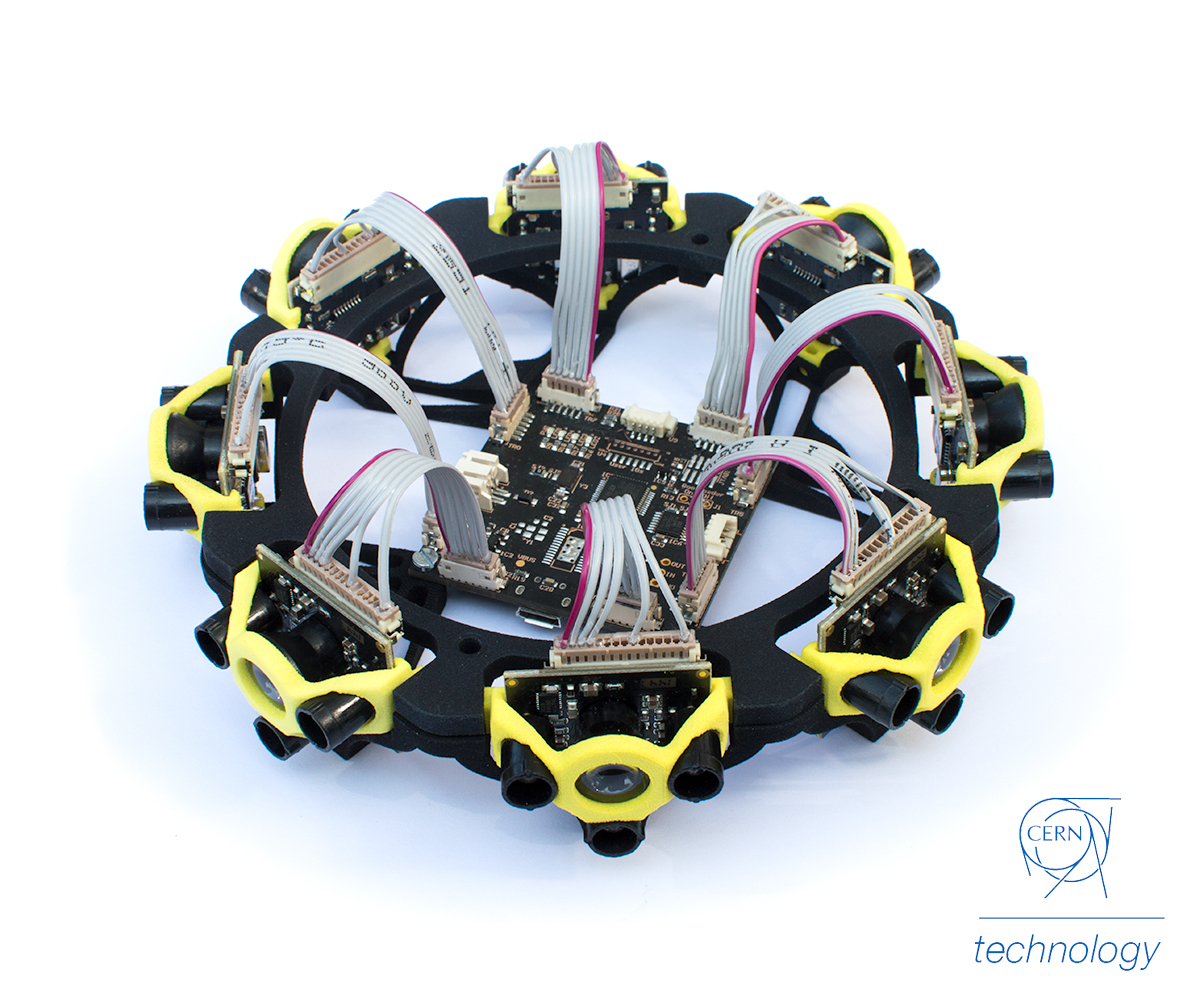

TeraRanger Tower

There’s no need to get in a spin

Using that same concept we continued to push the boundaries. A significant evolution has been our approach to LiDAR scanning, not just from a hardware point of view (although that is also different) but from a conceptual point of view too. We’ve taken the same philosophy of small, lightweight sensors with very high refresh rates (up to 1kHz) and applied it to create a new style of static LiDAR. Rather than rotating a sensor or using other mechanical methods to move a beam, TeraRanger Tower simultaneously monitors eight axes (or more if you stack multiple units together) and streams an array of data at up to 270Hz!
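As a rough illustration of what one frame from a static, eight-axis scanner looks like in practice, the sketch below turns an array of distances into 2D points around the sensor. It assumes the axes are evenly spaced around a circle (45 degrees apart for eight sensors) and that a missing reading is represented as `None`; both are assumptions for the example, and the function name is hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple


def frame_to_points(
    distances_mm: Sequence[Optional[float]],
) -> List[Tuple[float, float]]:
    """Convert one frame of per-axis distances into (x, y) points in mm.

    Assumes axis i points at angle i * 360 / n degrees, measured
    counter-clockwise from the x axis. Axes with no reading (None)
    are skipped.
    """
    n = len(distances_mm)
    points = []
    for i, distance in enumerate(distances_mm):
        if distance is None:
            continue
        angle = 2.0 * math.pi * i / n
        points.append((distance * math.cos(angle), distance * math.sin(angle)))
    return points


if __name__ == "__main__":
    frame = [1500, 1480, None, 2200, 3000, 2950, 800, 1200]
    for x, y in frame_to_points(frame):
        print(f"{x:8.1f} {y:8.1f}")
```

At the quoted 270Hz, eight axes give at most 8 × 270 = 2,160 distance readings per second, which is a small amount of data to process compared with a dense point cloud.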

Challenging the point-cloud conundrum

With no motors or other moving parts, the hardware itself has many advantages, being silent, lightweight and robust, but there is also a secondary benefit from the data. Traditional thinking amongst the robotics community is that to perform navigation, Simultaneous Localisation and Mapping (SLAM) and collision avoidance, you have to “see” everything around you. Just as we did at the start of our journey, people focus on complex solutions, like stereo vision, that gather millions of data points, which then require complex and resource-hungry processing. The complexity of the solution, and of the algorithms, has the potential to create many failure modes. Having discovered for ourselves that the complicated solution is not always necessary, we take a different approach: we monitor fewer points, but we monitor them at very fast refresh rates to ensure that what we think we see is really there. As a result, we build less dense point clouds, but with very reliable data. This requires less complex algorithms and processing, and can be done with lighter-weight computing. The result is a more robust, and potentially safer, solution, especially when you can make some assumptions about your environment or harness odometry data to augment the LiDAR data. Many times we were told you could never do SLAM while monitoring just eight points, but we proved that wrong.
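One simple way to picture how a fast refresh rate helps confirm that a reading is real is a per-axis sliding-window median, sketched below. This is a generic filtering technique chosen for illustration, not a description of the processing we actually run; the class name, the window length and the eight-axis default are assumptions.

```python
from collections import deque
from statistics import median
from typing import List, Sequence


class AxisMedianFilter:
    """Keep the last few readings per axis and report their median.

    A single spurious reading on an axis is outvoted by its neighbours
    in time, while a change that persists across frames passes through.
    """

    def __init__(self, num_axes: int = 8, window: int = 3) -> None:
        self.history = [deque(maxlen=window) for _ in range(num_axes)]

    def update(self, frame_mm: Sequence[float]) -> List[float]:
        filtered = []
        for axis_history, distance in zip(self.history, frame_mm):
            axis_history.append(distance)
            filtered.append(median(axis_history))
        return filtered


if __name__ == "__main__":
    f = AxisMedianFilter(num_axes=1, window=3)
    for reading in [2000, 2000, 300, 300]:
        print(f.update([reading]))
```

With a window of three frames, a one-frame glitch is ignored and an obstacle is accepted on its second consecutive reading. At 270 frames per second, three frames span roughly 11 milliseconds, so the added delay stays small.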

Coming full circle: There are no big problems, just a lot of little problems

All of this leads back to our original mission. We’ve not solved it yet, but recently we mounted TeraRanger Tower on a drone and proved, for the first time we believe, that a static LiDAR can be used for drone anti-collision. The proof of concept was quickly put together to harness code developed for the open source APM 3.5 flight controller, with Terabee writing drivers to hook into the codebase. Anti-collision is just one step in the journey to fully autonomous drone flight, and we are still on the wild ride of technology, but we are definitely taming the beast!

If you have expertise in drone collision avoidance and wish to help us overcome the remaining challenges, please contact us at teraranger@terabee.com. For more information about Terabee and our TeraRanger brand of sensors, please visit our website.

tags: Mapping-Surveillance, SLAM