Robohub.org

How do we control robots on the moon?

In the future, we imagine that teams of robots will explore and develop the surface of nearby planets, moons and asteroids – taking samples, building structures, deploying instruments. Hundreds of bright research minds are busy designing such robots. We are interested in another question: how do we give astronauts the tools to operate their robot teams efficiently on the planetary surface, in a way that doesn’t frustrate or exhaust them?

Received wisdom says that more automation is always better. After all, with automation, the job usually gets done faster, and the more tasks (or sub-tasks) robots can do on their own, the less the workload on the operator. Imagine a robot building a structure or setting up a telescope array, planning and executing tasks by itself, similar to a “factory of the future”, with only sporadic input from an astronaut supervisor orbiting in a spaceship. This is something we tested in the ISS experiment SUPVIS Justin in 2017-18, with astronauts on board the ISS commanding DLR Robotic and Mechatronic Center’s humanoid robot, Rollin’ Justin, in Supervised Autonomy.

However, the unstructured environment and harsh lighting on planetary surfaces make things difficult for even the best object-detection algorithms. And what happens when things go wrong, or a task needs to be done that was not foreseen by the robot programmers? In a factory on Earth, the supervisor might go down to the shop floor to set things right – an expensive and dangerous trip if you are an astronaut!

The next best thing is to operate the robot as an avatar of yourself on the planet surface – seeing what it sees, feeling what it feels. Immersing yourself in the robot’s environment, you can command the robot to do exactly what you want – subject to its physical capabilities.

Space Experiments

In 2019, we tested this in our next ISS experiment, ANALOG-1, with the Interact Rover from ESA’s Human Robot Interaction Lab. This is an all-wheel-drive platform with two robot arms, both equipped with cameras and one fitted with a gripper and force-torque sensor, as well as numerous other sensors.

On a laptop screen on the ISS, the astronaut – Luca Parmitano – saw the views from the robot’s cameras, and could move one camera and drive the platform with a custom-built joystick. The manipulator arm was controlled with the sigma.7 force-feedback device: the astronaut strapped his hand to it, and could move the robot arm and open its gripper by moving and opening his own hand. He could also feel the forces from touching the ground or the rock samples – crucial to help him understand the situation, since the low bandwidth to the ISS limited the quality of the video feed.

There were other challenges. Over such large distances, delays of up to a second are typical, which means that traditional teleoperation with force-feedback might have become unstable. Furthermore, the time delay between the robot making contact with the environment and the astronaut feeling it can lead to dangerous motions which can damage the robot.

To help with this we developed a control method: the Time Domain Passivity Approach for High Delays (TDPA-HD). It monitors the amount of energy that the operator puts in (i.e. force multiplied by velocity integrated over time), and sends that value along with the velocity command. On the robot side, it measures the force that the robot is exerting, and reduces the velocity so that it doesn’t transfer more energy to the environment than the operator put in.

On the human’s side, it reduces the force-feedback to the operator so that no more energy is transferred to the operator than is measured from the environment. This means that the system stays stable, but also that the operator never accidentally commands the robot to exert more force on the environment than they intend to – keeping both operator and robot safe.
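The energy bookkeeping described above can be illustrated with a minimal one-degree-of-freedom sketch in Python. This is not the actual TDPA-HD implementation: the names `EnergyTank`, `accumulate_energy` and `limit_output` are hypothetical, the sign convention (positive force times velocity means energy flowing outward) is an assumption, and the real controller handles six degrees of freedom and time-varying delay.

```python
from dataclasses import dataclass


@dataclass
class EnergyTank:
    """Energy reported by the far side versus energy already passed on locally."""
    received: float = 0.0  # joules the other side reports having put in
    spent: float = 0.0     # joules this side has already output

    def budget(self) -> float:
        """Energy that may still be output without exceeding what was received."""
        return max(self.received - self.spent, 0.0)


def accumulate_energy(energy: float, force: float, velocity: float, dt: float) -> float:
    """Integrate input power (force * velocity) over one time step.

    Assumption: only positive power counts as energy put into the system.
    """
    return energy + max(force * velocity, 0.0) * dt


def limit_output(output: float, conjugate: float, tank: EnergyTank, dt: float) -> float:
    """Scale `output` so that output * conjugate * dt fits in the tank's budget.

    Robot side: output = commanded velocity, conjugate = measured contact force.
    Operator side: output = feedback force, conjugate = measured hand velocity.
    Assumes dt > 0.
    """
    power = output * conjugate
    if power <= 0.0:
        return output  # no energy flows outward in this step; leave unchanged
    scale = min(1.0, tank.budget() / (power * dt))
    limited = output * scale
    tank.spent += limited * conjugate * dt  # record what was actually output
    return limited


if __name__ == "__main__":
    dt = 0.01  # illustrative control period in seconds
    # Operator side: energy put in during one step, sent with the velocity command.
    sent = accumulate_energy(0.0, force=5.0, velocity=0.2, dt=dt)
    # Robot side: the commanded velocity is reduced to respect that energy.
    robot_tank = EnergyTank(received=sent)
    v = limit_output(0.2, conjugate=20.0, tank=robot_tank, dt=dt)
    print(f"operator energy: {sent:.4f} J, limited velocity: {v:.4f} m/s")
```

The same `limit_output` function serves both directions in this sketch: on the robot side it scales the velocity command against the measured contact force, and on the operator side it scales the feedback force against the hand velocity, so neither side outputs more energy than the other reported.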

This was the first time that an astronaut had teleoperated a robot from space while feeling force-feedback in all six degrees of freedom (three rotational, three translational). The astronaut completed all the sampling tasks assigned to him, which allowed us to gather valuable data to validate our method and publish it in Science Robotics. We also reported our findings on the astronaut’s experience.

Some things were still lacking. The experiment was conducted in a hangar on an old Dutch air base – not really representative of a planet surface.

Also, the astronaut asked if the robot could do more on its own – in contrast to SUPVIS Justin, when the astronauts sometimes found the Supervised Autonomy interface limiting and wished for more immersion. What if the operator could choose the level of robot autonomy appropriate to the task?

Scalable Autonomy

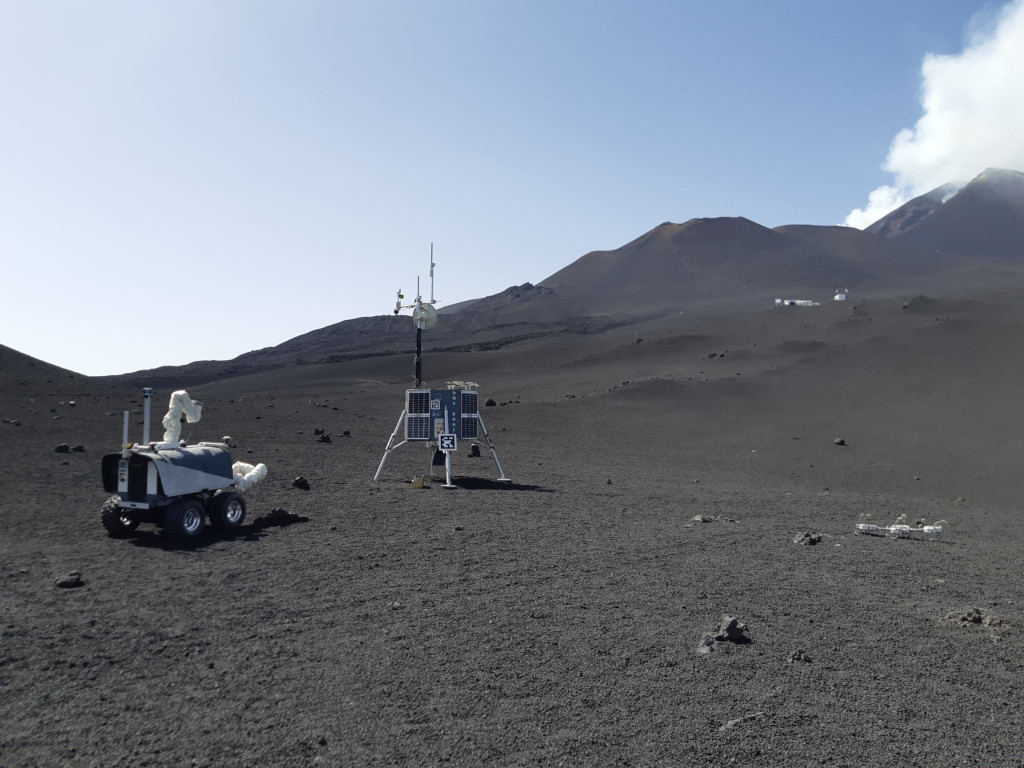

In June and July 2022, we joined DLR’s ARCHES experiment campaign on Mt. Etna. The robot – on a lava field 2,700 metres above sea level – was controlled by former astronaut Thomas Reiter from the control room in the nearby town of Catania. Looking through the robot’s cameras, it took no great leap of the imagination to picture yourself on another planet – save for the occasional bumblebee or group of tourists.

This was our first venture into “Scalable Autonomy” – allowing the astronaut to scale up or down the robot’s autonomy, according to the task. In 2019, Luca could only see through the robot’s cameras and drive with a joystick; this time, Thomas Reiter had an interactive map, on which he could place markers for the robot to automatically drive to. In 2019, the astronaut could control the robot arm with force feedback; now he could also have rocks detected and collected automatically, with help from a Mask R-CNN (region-based convolutional neural network).

We learned a lot from testing our system in a realistic environment, not least that the assumption that more automation means a lower astronaut workload is not always true. While the astronaut used the automated rock-picking a lot, he warmed less to the automated navigation – indicating that it was more effort than driving with the joystick. We suspect that many more factors come into play, including how much the astronaut trusts the automated system, how well it works, and the feedback that the astronaut gets from it on screen – not to mention the delay. The longer the delay, the more difficult it is to create an immersive experience (think of online video games with lots of lag) and therefore the more attractive autonomy becomes.

What are the next steps? We want to test a truly scalable-autonomy, multi-robot scenario. We are working towards this in the project Surface Avatar – in a large-scale Mars-analog environment, astronauts on the ISS will command a team of four robots on the ground. After two preliminary tests with astronauts Samantha Cristoforetti and Jessica Watkins in 2022, the first big experiment is planned for 2023.

Here the technical challenges are different. Beyond the formidable engineering challenge of getting four robots to work together with a shared understanding of their world, we also have to try to predict which tasks would be easier for the astronaut at which level of autonomy, when and how she could scale the autonomy up or down, and how to integrate all of this into one intuitive user interface.

The insights we hope to gain from this would be useful not only for space exploration, but for any operator commanding a team of robots at a distance – for maintenance of solar or wind energy parks, for example, or search and rescue missions. A space experiment of this sort and scale will be our most complex ISS telerobotic mission yet – but we are looking forward to this exciting challenge ahead.
