Robohub.org

People favour expressive, communicative robots over efficient and effective ones

Making an assistive robot partner expressive and communicative is likely to make it more satisfying to work with and lead to users trusting it more, even if it makes mistakes, a new study suggests.

But the research also shows that giving robots human-like traits could have a flip side – users may even lie to the robot in order to avoid hurting its feelings.

These were the main findings of the study I undertook as part of my MSc in Human Computer Interaction at University College London (UCL), with the objective of designing robotic assistants that people can trust. I’m presenting the research at the IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN) later this month.

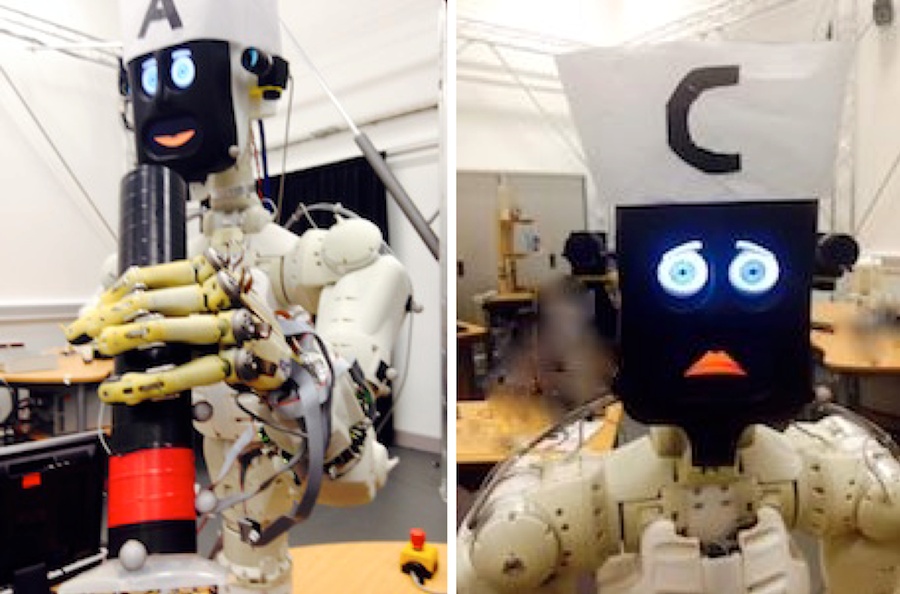

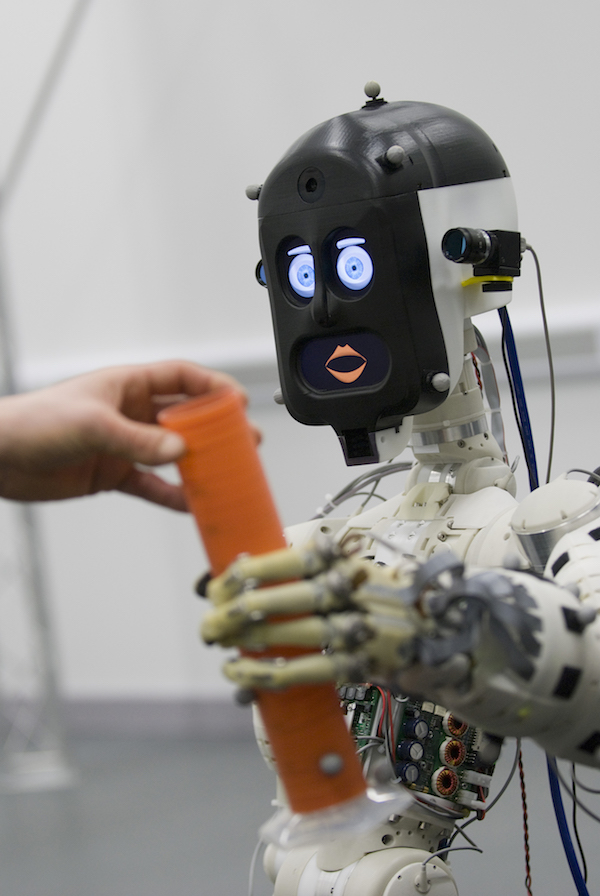

With the help of my supervisors, Professors Nadia Berthouze at UCL and Kerstin Eder at the University of Bristol, I constructed an experiment with a humanoid assistive robot helping users to make an omelette. The robot was tasked with passing the eggs, salt and oil but dropped one of the polystyrene eggs in two of the conditions and then attempted to make amends.

The aim was to investigate how a robot may recover a user’s trust when it makes a mistake and how it can communicate its erroneous behaviour to somebody who is working with it, either at home or at work.

The somewhat surprising result suggests that the majority of users prefer a communicative, expressive robot to a more efficient, less error-prone one, despite it taking 50 per cent longer to complete the task.

Users reacted well to an apology from the robot that was able to communicate, and were particularly receptive to its sad facial expression, which is likely to have reassured them that it ‘knew’ it had made a mistake.

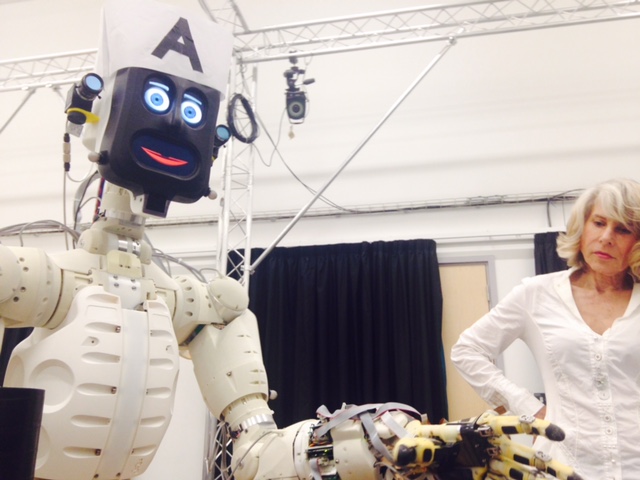

At the end of the interaction, the communicative robot was programmed to ask participants whether they would give it the job of kitchen assistant, but they could only answer yes or no and were unable to qualify their answers.

Some were reluctant to answer and most appeared very uncomfortable. One person was under the impression that the robot looked sad when he said ‘no’, when it had not been programmed to appear so. Another complained of emotional blackmail and a third went as far as to lie to the robot.

Their reactions would suggest that, having seen the robot display human-like emotion when the egg dropped, many participants were now pre-conditioned to expect a similar reaction and therefore hesitated to say no; they were mindful of the possibility of a display of further human-like distress.

The research underlines that human-like attributes, such as regret, can be powerful tools in negating dissatisfaction, but we must identify with care which specific traits we want to focus on and replicate. If there are no ground rules, then we may end up with robots with different personalities, just like the people designing them.

“Trust in our counterparts is fundamental for successful interaction,” says Kerstin Eder, who leads the Verification and Validation for Safety in Robots research theme at the Bristol Robotics Laboratory. “This study gives key insights into how communication and emotional expressions from robots can mitigate the impact of unexpected behaviour in collaborative robotics. Complementing thorough verification and validation with sound understanding of these human factors will help engineers design robotic assistants that people can trust.”

The study was aligned with the EPSRC-funded project Trustworthy Robotic Assistants, where new verification and validation techniques are being developed to ensure the safety and trustworthiness of the machines that will enhance our quality of life in the future.

The IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN) takes place from 26 to 31 August in New York City, and the study – ‘Believing in BERT: Using expressive communication to enhance trust and counteract operational error in physical Human-Robot Interaction’ by Adriana Hamacher, Nadia Bianchi-Berthouze, Anthony G. Pipe and Kerstin Eder – will be published by the IEEE as part of the conference proceedings, available via the IEEE Xplore Digital Library.

A pre-publication copy of the research paper is available at: https://arxiv.org/pdf/1605.08817.pdf
