Robohub.org

Phone-based laser rangefinder works outdoors

Illustration: Christine Daniloff/MIT

The Microsoft Kinect was a boon to robotics researchers. The cheap, off-the-shelf depth sensor allowed them to quickly and cost-effectively prototype innovative systems that enable robots to map, interpret, and navigate their environments.

But sensors like the Kinect, which use infrared light to gauge depth, are easily confused by ambient infrared light. Even indoors, they tend to require low-light conditions, and outdoors, they’re hopeless.

At the International Conference on Robotics and Automation in May, researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) will present a new infrared depth-sensing system, built from a smartphone with a $10 laser attached to it, that works outdoors as well as in.

The researchers envision that cellphones with cheap, built-in infrared lasers could be snapped into personal vehicles, such as golf carts or wheelchairs, to help render them autonomous. A version of the system could also be built into small autonomous robots, like the package-delivery drones proposed by Amazon, whose wide deployment in unpredictable environments would prohibit the use of expensive laser rangefinders.

“My group has been strongly pushing for a device-centric approach to smarter cities, versus today’s largely vehicle-centric or infrastructure-centric approach,” says Li-Shiuan Peh, a professor of electrical engineering and computer science whose group developed the system. “This is because phones have a more rapid upgrade-and-replacement cycle than vehicles. Cars are replaced in the timeframe of a decade, while phones are replaced every one or two years. This has led to drivers just using phone GPS today, as it works well, is pervasive, and stays up-to-date. I believe the device industry will increasingly drive the future of transportation.”

Joining Peh on the paper is first author Jason Gao, an MIT PhD student in electrical engineering and computer science and a member of Peh’s group.

Background noise

Infrared depth sensors come in several varieties, but they all emit bursts of laser light into the environment and measure the reflections. Infrared light from the sun or man-made sources can swamp the reflected signal, rendering the measurements meaningless.

To compensate, commercial laser rangefinders use higher-energy bursts of light. But to limit the risk of eye damage, those bursts need to be extremely short. And detecting such short-lived reflections requires sophisticated hardware that pushes the devices’ cost into the thousands of dollars.

Gao and Peh’s system instead performs several measurements, synchronizing them with the emission of low-energy light bursts. Essentially, it captures four frames of video, two of which record reflections of laser signals and two of which record only the ambient infrared light. It then simply subtracts the ambient light from the laser-lit frames, leaving only the laser’s reflection.
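The subtraction step can be illustrated with a minimal sketch on toy frames. It assumes each frame is a small grid of brightness values, and it averages the two laser-lit frames and the two ambient-only frames before subtracting. That averaging and the clipping of negative values are illustrative assumptions, not details given for the prototype, and `subtract_ambient` is a hypothetical helper name.

```python
from typing import List

Frame = List[List[float]]  # rows of pixel brightness values


def subtract_ambient(lit: List[Frame], dark: List[Frame]) -> Frame:
    """Remove ambient infrared light from laser-lit frames (illustrative sketch).

    lit:  frames captured while the laser pulse is on
    dark: frames captured with the laser off (ambient light only)
    The frames in each group are averaged (an assumption), then the ambient
    average is subtracted; negative differences are clipped to zero as noise.
    """
    rows, cols = len(lit[0]), len(lit[0][0])

    def mean_frame(frames: List[Frame]) -> Frame:
        return [[sum(f[r][c] for f in frames) / len(frames) for c in range(cols)]
                for r in range(rows)]

    on, off = mean_frame(lit), mean_frame(dark)
    return [[max(0.0, on[r][c] - off[r][c]) for c in range(cols)]
            for r in range(rows)]


# Toy 2x3 frames: strong ambient light everywhere, laser stripe in column 1.
ambient = [[100.0, 100.0, 100.0], [100.0, 100.0, 100.0]]
with_laser = [[100.0, 130.0, 100.0], [100.0, 130.0, 100.0]]
signal = subtract_ambient([with_laser, with_laser], [ambient, ambient])
print(signal)  # [[0.0, 30.0, 0.0], [0.0, 30.0, 0.0]]
```

Even though the ambient level (100) dwarfs the laser's contribution (30), the difference isolates the stripe, which is the point of capturing ambient-only frames.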

In their prototype, the researchers used a phone with a 30-frame-per-second camera, so capturing four images imposed a delay of about an eighth of a second. But 240-frame-per-second cameras, which would reduce that delay to a 60th of a second, are already commercially available.
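The latency figures follow directly from dividing the four required frames by the camera's frame rate. This small calculation, using exact fractions, reproduces them; `capture_delay` is just an illustrative name.

```python
from fractions import Fraction


def capture_delay(fps: int, frames: int = 4) -> Fraction:
    """Seconds needed to capture `frames` consecutive video frames at `fps`."""
    return Fraction(frames, fps)


for fps in (30, 240):
    delay = capture_delay(fps)
    print(f"{fps} fps -> {delay} s ({float(delay) * 1000:.1f} ms)")
# 30 fps -> 2/15 s (133.3 ms)
# 240 fps -> 1/60 s (16.7 ms)
```

At 30 frames per second the delay is 2/15 of a second (roughly an eighth), and at 240 frames per second it drops to exactly a 60th.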

The system uses a technique called active triangulation. The laser, which is mounted at the bottom of the phone in the prototype, emits light in a single plane. The angle of the returning light can thus be gauged from where it falls on the camera’s 2-D sensor, and because the laser sits a known distance from the camera, that angle fixes the distance to the surface the light struck.
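As a rough illustration of active triangulation, the sketch below assumes an idealized pinhole camera and a laser plane parallel to the camera's optical axis, mounted a fixed baseline below the lens. Under those assumptions a point at depth Z on the laser plane appears at image row `cy + f * b / Z`, so the row where the stripe lands gives the depth directly. The geometry, the calibration numbers, and the function name are all hypothetical; the prototype's actual calibration is not described here.

```python
def depth_from_stripe_row(row: float, f_px: float, cy: float, baseline_m: float) -> float:
    """Depth in meters of a laser-lit point, from the image row it appears in.

    Assumes (hypothetically) a pinhole camera with focal length f_px pixels and
    principal row cy, plus a laser plane parallel to the optical axis mounted
    baseline_m below the camera. Image rows increase downward, so a point at
    depth Z projects to row cy + f_px * baseline_m / Z.
    """
    offset = row - cy
    if offset <= 0:
        raise ValueError("stripe must appear below the principal row")
    return f_px * baseline_m / offset


# Hypothetical calibration: 1000 px focal length, principal row 240, 10 cm baseline.
for row in (340.0, 290.0, 265.0):
    print(row, depth_from_stripe_row(row, f_px=1000.0, cy=240.0, baseline_m=0.1))
```

Distant surfaces push the stripe toward the principal row, so each pixel of image offset covers a wider span of depth as range grows. That is one general reason triangulation-based rangefinders lose accuracy at longer distances.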

Global replace

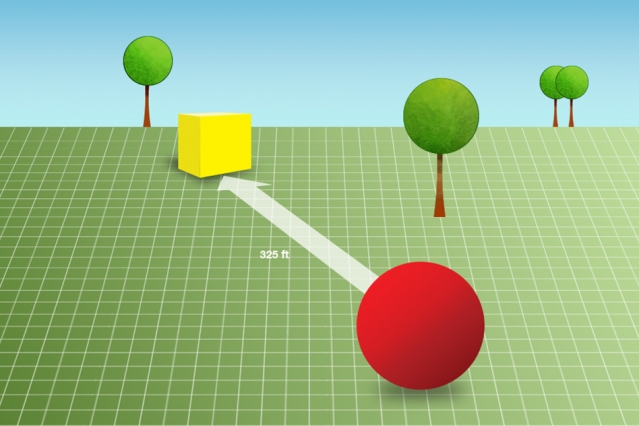

At ranges of 3 to 4 meters, the system gauges depth to an accuracy measured in millimeters, while at 5 meters, the accuracy declines to 6 centimeters. The researchers tested their system on a driverless golf cart developed by the Singapore-MIT Alliance for Research and Technology and found that its depth resolution should be adequate for vehicles moving at speeds of up to 15 kilometers per hour.

Imminent advances in camera technology could improve those figures, however. Currently, most cellphone cameras have what’s called a rolling shutter. That means that the camera reads off the measurements from one row of photodetectors before moving on to the next one. An exposure that lasts one-thirtieth of a second may actually consist of a thousand sequential one-row measurements.
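The rolling-shutter timing can be made concrete with a short calculation. This sketch assumes the rows of a one-thirtieth-of-a-second exposure are read out at a constant rate, using the thousand-row figure above; `row_start_times` is a hypothetical helper.

```python
from typing import List


def row_start_times(n_rows: int, frame_time_s: float) -> List[float]:
    """Start time (s) of each row's measurement within one rolling-shutter frame,
    assuming rows are read out one after another at a constant rate."""
    line_time = frame_time_s / n_rows
    return [i * line_time for i in range(n_rows)]


times = row_start_times(1000, 1 / 30)
print(f"row-to-row offset: {times[1] * 1e6:.1f} us")
print(f"last row starts {times[-1] * 1000:.2f} ms after the first")
```

Under these assumptions consecutive rows start about 33 microseconds apart, and the last row begins more than 33 milliseconds after the first, so the top and bottom of the image are effectively measured at different moments.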

In Gao and Peh’s prototype, the outgoing light pulse thus has to last long enough that its reflection will register no matter which row it happens to strike. Future smartphone cameras, however, will have a “global shutter,” meaning that they will read off measurements from all their photodetectors at once. That would enable the system to emit shorter light bursts, which could consequently have higher energies, increasing the effective range.
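The sketch below compares the shortest pulse that overlaps every row's measurement window under an idealized rolling shutter and an idealized global shutter. It assumes row i is exposed during `[i*dt, i*dt + row_exposure_s]`, and the per-row exposure value in the example is hypothetical. It models only timing; it does not model eye-safety limits or how pulse energy relates to duration.

```python
def min_pulse_duration(n_rows: int, frame_time_s: float, row_exposure_s: float,
                       global_shutter: bool) -> float:
    """Shortest laser pulse (s) that overlaps the exposure window of every row.

    Rolling shutter (idealized): row i is exposed during
    [i*dt, i*dt + row_exposure_s] with dt = frame_time_s / n_rows, so the pulse
    must reach from the end of the first row's window to the start of the last.
    Global shutter (idealized): all rows share one window, so any pulse inside
    that window reaches every row and the timing lower bound is zero.
    """
    if global_shutter:
        return 0.0
    dt = frame_time_s / n_rows
    last_row_start = (n_rows - 1) * dt
    return max(0.0, last_row_start - row_exposure_s)


# Hypothetical: 1000 rows per 1/30 s frame, each row exposed for one line time.
rolling = min_pulse_duration(1000, 1 / 30, 1 / 30000, global_shutter=False)
global_ = min_pulse_duration(1000, 1 / 30, 1 / 30000, global_shutter=True)
print(f"rolling: {rolling * 1000:.1f} ms, global: {global_ * 1000:.1f} ms")
```

With these numbers the rolling shutter forces a pulse of roughly a full frame time, while the global shutter removes that constraint, which is what leaves room for shorter, more intense bursts.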

“It is exciting to see research institutions and businesses coming up with innovation and technological advances, as it would support Singapore’s push for a seamless transport experience,” says Lam Wee Shann, director of the Futures Division at Singapore’s Ministry of Transport. “MIT’s new laser depth-sensing system could help advance the development of self-driving vehicles, bringing us one step closer to their deployment in the near future.”