Robohub.org

Robocar recap: Tesla radar, MobilEye fight, and the Comma One $1,000 add-on box

Tesla’s spat with MobilEye reached a new pitch this week as Tesla announced a new release of its Autopilot, along with its plans. As reported earlier, MobilEye announced during the summer that it would not supply the new and better versions of its EyeQ system to Tesla. Since that system was (and is) central to the operation of Tesla’s Autopilot, MobilEye may have been surprised that its own stock (MBLY) took a big hit after the announcement (it recovered for a while and is now back down) while Tesla’s (TSLA) did not.

Statements and documents now reveal a nastier battle. MobilEye says it was worried about Tesla using its tool in an unsafe way, invoking the debate over the fatality and other crashes allegedly caused by drivers who were lulled into not bothering to supervise the Autopilot. Tesla says instead that it has been developing its own advanced vision tools, and that MobilEye, feeling threatened, told Tesla that if it wanted more EyeQ chips it would have to halt the competing project and commit to MobilEye (ME). That’s a nasty spat.

Tesla’s own efforts are an example of the threat MobilEye faces from the growing revolution in neural network pattern matchers. Computer vision is being transformed, and MobilEye is a big player in that transformation because its ASICs can do both standard machine vision functions and neural networks. An ASIC will beat a general-purpose processor on cost, speed, and power, but only if the ASIC was designed to solve those particular problems. Since it takes years to bring an ASIC to production, you have to aim right. MobilEye aimed pretty well, but a lot of research is trying to aim even better, or to do the job with more general-purpose chips like GPUs. Soon we will see ASICs aimed directly at neural network computation.

To solve the problem with neural networks, you need computing horsepower, well-designed deep network architectures, and the right training data, lots of it. Tesla and ME are both gathering lots of training data. Many companies, including Nvidia and Intel, are working on hardware for neural networks. Most people would point to Google as the company with the best skills in architecting the networks, though many others are doing interesting work there. (Google’s DeepMind, for example, built the system that beat a top human player at Go, a game long thought nearly impossible for computers to master.) It’s definitely a competitive race.

Using radar

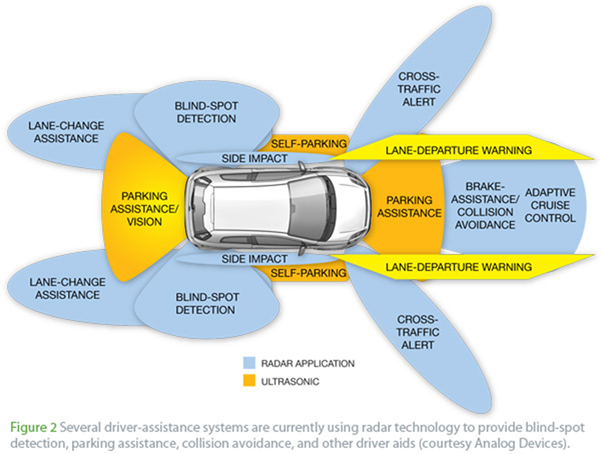

While Tesla works on its vision systems, it also announced a plan to make much more use of radar. That’s an interesting plan. Radar has been the poor third-class sensor of the robocar, after LIDAR and vision. Everybody uses it; you would be crazy not to unless you need to be very low cost. Radar sees farther than the other sensors, and thanks to the Doppler effect it tells you immediately how fast any target is moving toward or away from you. It sees through fog and other weather, and it can even see under and around big vehicles in front of you, since its signal bounces off the road and other objects. It also gets especially strong returns from license plates.

What radar doesn’t have is high resolution. Today’s automotive radars have gotten good enough to tell you which lane an object like another car is in, but they are not designed to have any vertical resolution: you will get radar returns from a stalled car on the road ahead of you and from a sign above that lane, and not be able to tell them apart. Your car must avoid a stalled car in your lane, but you can’t have a car that hits the brakes every time it sees a road sign or bridge!

Real-world radar is messy. Your antennas transmit and receive over a very broad cone, picking up signals from other directions and from side lobes as well. Reflections come from vehicles and road users, but also from the ground, hills, trees, fences, signs, bushes and bridges. Getting reliable information out of all that takes work. Early automotive radars found the best solution was to use the Doppler speed information and discard all returns from anything that wasn’t moving toward or away from you relative to the road, that is, anything whose Doppler shift matched that of the stationary background. Unfortunately, that also throws out stalled cars and cross traffic.
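The classic Doppler filter described above can be sketched in a few lines. This is a minimal illustration, assuming a car driving straight at a known speed and a simple 1 m/s threshold; the `RadarReturn` type, its labels, and all the numbers are hypothetical, not any radar vendor’s interface. A stationary object at azimuth a appears to close at your own speed times cos(a), so adding that back leaves only the target’s own motion along the line of sight. Note how a truck crossing directly ahead looks exactly like a sign, because all of its motion is sideways.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarReturn:
    label: str          # illustration only; a real radar return carries no label
    azimuth_rad: float  # angle from the radar's boresight, radians
    range_rate: float   # measured Doppler velocity, m/s (negative = closing)


def ground_radial_speed(ret: RadarReturn, ego_speed: float) -> float:
    """Target's own speed along the line of sight, with our motion removed.

    A stationary object at azimuth a appears to close at ego_speed * cos(a),
    so adding that term back leaves only the target's own motion.
    """
    return ret.range_rate + ego_speed * math.cos(ret.azimuth_rad)


def doppler_filter(returns, ego_speed, threshold=1.0):
    """Keep only returns moving toward or away from us relative to the road."""
    return [r for r in returns
            if abs(ground_radial_speed(r, ego_speed)) > threshold]


ego = 30.0  # our own speed in m/s (assumed known, e.g. from wheel odometry)
returns = [
    RadarReturn("lead car doing 25 m/s", 0.0, 25.0 - ego),
    RadarReturn("overhead sign", 0.0, -ego),
    RadarReturn("stalled car", 0.02, -ego * math.cos(0.02)),
    RadarReturn("truck crossing ahead", 0.0, -ego),  # all its motion is sideways
]
kept = doppler_filter(returns, ego)
print([r.label for r in kept])
```

Only the moving lead car survives; the sign, the stalled car and the crossing truck are all discarded together.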

One thing that can help (imperfectly) is a map. If you know where the bridges and signs are, you don’t have to brake for them, and you can then brake for stalled cars and for cross traffic, like the truck the Tesla failed to see in the fatal crash. You still have a problem with a stalled car under a bridge or sign, but you’re doing a lot better.
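A minimal sketch of map-based suppression, assuming the stationary returns have already been placed in map coordinates and that the map lists overhead structures as simple points. `KNOWN_OVERHEAD`, the 5 m match radius, and all positions are hypothetical. The example also reproduces the weakness mentioned above: a stalled car under a mapped sign is suppressed along with the sign.

```python
import math
from dataclasses import dataclass


@dataclass
class StationaryReturn:
    label: str  # illustration only
    x: float    # metres along the road, map coordinates
    y: float    # metres across the road, map coordinates


# Hypothetical map entries: known bridges and overhead signs, as (x, y) in metres.
KNOWN_OVERHEAD = [(120.0, 0.0), (300.0, 1.5)]


def near_known_structure(ret, known, radius=5.0):
    """True if the return lies within `radius` metres of a mapped structure."""
    return any(math.hypot(ret.x - kx, ret.y - ky) <= radius for kx, ky in known)


def stationary_obstacles(returns, known, radius=5.0):
    """Treat stationary returns as obstacles unless they match a mapped sign or bridge."""
    return [r for r in returns if not near_known_structure(r, known, radius)]


returns = [
    StationaryReturn("bridge", 121.0, 0.5),
    StationaryReturn("stalled car", 80.0, 0.2),
    StationaryReturn("stalled car under sign", 299.0, 1.0),  # hidden by the map match
]
obstacles = stationary_obstacles(returns, KNOWN_OVERHEAD)
print([r.label for r in obstacles])
```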

There’s a lot of room for improvement in radar, and I will presume (Tesla has not said) that Tesla plans to work on this. The automotive radars everybody buys (from companies like Bosch) were made for the ADAS market: adaptive cruise control, emergency braking and so on. It is possible to design new radars with more resolution, particularly in the vertical, and to try other approaches. One is to split up the transmitters and receivers to produce a larger synthetic aperture. Another is to move into different bands to get more bandwidth, and with it more resolution in general. You can also play more software tricks, and in particular you can learn by examining not just single radar returns but the pattern of returns over time. (After all, humans don’t navigate from still frames; we depend on our visual system’s deeply evolved ability to use motion and other clues to understand the world.)
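One common way to split transmitters and receivers for a larger synthetic aperture is a MIMO “virtual array”: each transmitter/receiver pair behaves, in the far field, like a single element at the sum of their positions. The sketch below assumes a hypothetical layout of 3 transmitters and 4 receivers, with positions in units of wavelength, and uses the rough rule of thumb that angular resolution is about wavelength divided by aperture length. It is an illustration of the general idea, not a description of any production radar. Stacking the elements vertically instead would, in principle, buy the same improvement in elevation.

```python
import math


def virtual_array(tx_positions, rx_positions):
    """MIMO virtual array: each Tx/Rx pair acts like one element at tx + rx."""
    return sorted(t + r for t in tx_positions for r in rx_positions)


def angular_resolution_deg(num_elements, spacing_wavelengths):
    """Rule of thumb: resolution ~ wavelength / aperture, with aperture = N * d."""
    aperture_wavelengths = num_elements * spacing_wavelengths
    return math.degrees(1.0 / aperture_wavelengths)


# Hypothetical layout, positions in wavelengths: receivers half a wavelength
# apart, transmitters spaced by the full receive aperture (2 wavelengths).
rx = [0.0, 0.5, 1.0, 1.5]
tx = [0.0, 2.0, 4.0]
virtual = virtual_array(tx, rx)

print(len(virtual), virtual)
print(f"4 receivers alone: ~{angular_resolution_deg(len(rx), 0.5):.1f} deg")
print(f"12 virtual elements: ~{angular_resolution_deg(len(virtual), 0.5):.1f} deg")
```

Seven physical antennas yield twelve evenly spaced virtual elements, roughly tripling the aperture of the receive array alone.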

Neural networks are making strides here. For example, a pedestrian produces only a basic radar return, but the swing of arms and legs while walking creates a characteristic pattern of changing Doppler shifts (a “micro-Doppler” signature) that neural networks can learn to identify. Researchers are now studying how examining the moving, dynamic pattern of radar returns can yield more resolution and also reveal the shapes and motion patterns of objects, and thus what they are.
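To show why the pattern over time carries information a single return lacks, here is a toy sketch. It swaps the neural network for a hand-picked feature: it simulates one Doppler velocity track per target and uses a plain discrete Fourier transform to find the strongest periodic component. The 2 Hz stride frequency, 1 m/s limb-swing amplitude, 100 Hz sample rate, and the classification thresholds are all assumptions chosen for illustration; real systems work from full micro-Doppler spectrograms.

```python
import cmath
import math


def dominant_oscillation(velocities, sample_rate_hz):
    """Return (frequency_hz, amplitude) of the strongest periodic component.

    Uses a plain DFT on the mean-removed velocity track.
    """
    n = len(velocities)
    mean = sum(velocities) / n
    centred = [v - mean for v in velocities]
    best_freq, best_amp = 0.0, 0.0
    for k in range(1, n // 2):
        s = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(centred))
        amp = 2 * abs(s) / n  # amplitude of a sinusoid sitting at bin k
        if amp > best_amp:
            best_freq, best_amp = k * sample_rate_hz / n, amp
    return best_freq, best_amp


def looks_like_walker(velocities, sample_rate_hz, min_amp=0.3, band=(1.0, 3.0)):
    """Hypothetical rule: a strong oscillation at a walking-like rate."""
    freq, amp = dominant_oscillation(velocities, sample_rate_hz)
    return amp >= min_amp and band[0] <= freq <= band[1]


fs, n = 100.0, 200  # assumed: 2 seconds of Doppler measurements at 100 Hz
# Simulated pedestrian: 1.4 m/s body speed plus a 2 Hz limb swing.
pedestrian = [1.4 + 1.0 * math.sin(2 * math.pi * 2.0 * i / fs) for i in range(n)]
# Simulated car: a rigid body at constant speed, so no periodic component.
car = [15.0 for _ in range(n)]

print(dominant_oscillation(pedestrian, fs), looks_like_walker(pedestrian, fs))
print(dominant_oscillation(car, fs), looks_like_walker(car, fs))
```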

I will also speculate that it might be possible to return to a successor of the “swept” radars of old, the ones we are used to seeing in old war movies. Modern car radars don’t scan like that, but I have to wonder whether new techniques, like phased arrays that steer virtual beams electronically (already the norm in military radar), combined with modern high-speed electronics, might produce radars that get a better sense of where their targets are. We’re also getting better at sensor fusion: identifying a radar target in an image or LIDAR return to learn more about it.
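The core of electronic beam steering fits in a few lines. This is a minimal narrowband sketch, assuming a hypothetical 16-element linear array at half-wavelength spacing and ignoring element patterns and noise. Each element gets a phase shift of -2π·n·d·sin(θ)/λ so that signals from angle θ add up in phase. Sweeping the steering angle in software, the way old radars swept a dish mechanically, shows where the response to a target at 20° peaks.

```python
import cmath
import math


def steering_phases(num_elements, spacing_wl, steer_deg):
    """Per-element phase (radians) that makes signals from steer_deg add in phase."""
    s = math.sin(math.radians(steer_deg))
    return [-2 * math.pi * n * spacing_wl * s for n in range(num_elements)]


def array_gain(phases, spacing_wl, look_deg):
    """Normalised array-factor magnitude toward look_deg (1.0 = perfect coherent sum)."""
    s = math.sin(math.radians(look_deg))
    total = sum(cmath.exp(1j * (2 * math.pi * n * spacing_wl * s + p))
                for n, p in enumerate(phases))
    return abs(total) / len(phases)


target_deg = 20.0  # hypothetical target direction
# Electronically "sweep" the beam from -60 to +60 degrees in 2-degree steps.
scan = {steer: array_gain(steering_phases(16, 0.5, steer), 0.5, target_deg)
        for steer in range(-60, 61, 2)}
best = max(scan, key=scan.get)
print(best, round(scan[best], 3))
```

With more elements the peak gets narrower, which is the same aperture-versus-resolution trade-off that drives interest in larger arrays.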

The single best way to improve radar range resolution would be to use more bandwidth. There have been promising experiments with ultra-wideband (UWB) signals at very high frequencies. As the name suggests, UWB spreads its energy over a very wide band, so it puts little energy into any one part of the spectrum and is less likely to interfere with other users there. It’s also possible that the FCC, recognizing the tremendous public value of reliable robocars, might open up more spectrum for radar using modern techniques, allowing higher resolution.
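The link between bandwidth and range resolution is the standard relation ΔR = c / (2B): two targets closer together in range than ΔR blur into one. The bandwidths below are illustrative values, not the limits of any particular radar or regulatory band, and this covers range resolution only; angular resolution depends on the antenna aperture instead.

```python
C = 299_792_458.0  # speed of light, m/s


def range_resolution_m(bandwidth_hz):
    """Smallest range separation at which two targets can be told apart: c / (2B)."""
    return C / (2.0 * bandwidth_hz)


# Illustrative bandwidths only.
for bw in (200e6, 1e9, 4e9):
    print(f"{bw / 1e9:.1f} GHz of bandwidth -> {range_resolution_m(bw) * 100:.1f} cm")
```

Each increase in bandwidth shrinks the resolvable range cell proportionally, which is why more spectrum translates so directly into finer resolution.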

In other words, Tesla is wise to work on getting more from radar. Having lost MobilEye’s vision tools, it will have to work hard to duplicate and surpass them. For now, Tesla is committed to using parts already sold for existing production cars, which cost hundreds of dollars. That has taken LIDAR “off their radar,” even though almost all research teams depend on LIDAR and expect it to be cheap in a couple of years, including the LIDAR from Quanergy, a company I advise.

Comma announces a $1,000 autopilot box

I wrote earlier about comma.ai and its efforts to drive with just vision, radar and neural networks. The company now plans to offer a $1,000 add-on box that gives you some basic autopilot functionality.

To do this, Comma is supporting only specific car models, namely certain Honda vehicles that already have advanced ADAS built in. Using the car’s internal bus, the box can talk to the car’s sensors (such as the radar and possibly the camera) and also send control signals to actuate the steering, brakes and throttle. Comma’s neural networks then take the sensor information and output the steering and speed commands that keep you in your lane. (Details are scant, so I don’t know whether the Comma One box uses its own camera or depends on access to the car’s.)

When I rode in Comma’s prototype, it certainly wasn’t up to the level of Tesla’s Autopilot or some others, but that was several months ago, so I can’t judge it now. Like Tesla’s Autopilot, the Comma will not be safe enough to drive the car on its own; you will need to supervise it and be ready to intervene at any time. If you get complacent, as some Tesla drivers have, you could be injured or killed. I have yet to learn what measures Comma will take to make sure people keep their eyes on the road.

Generally, I feel that autopilots are not very exciting products when you have to watch them all the time, as you do, and that bolt-on products are not particularly exciting either. Cruise’s initial plan (after it abandoned valet parking) was a bolt-on autopilot, but it soon switched to trying to build a real vehicle, and that led to its huge $700M sale to General Motors.

But for Comma, there is a worthwhile angle. Users of this bolt-on box will be helping to provide training data to improve its systems. In fact, they will be paying for the privilege of testing and training the system, work that companies like Google did the old-fashioned way, by paying a staff of professionals to drive the cars and gather data. For a tiny, young startup, it’s a worthwhile approach.
