Robohub.org

Robots track moving objects with unprecedented precision

MIT Media Lab researchers are using RFID tags to help robots home in on moving objects with unprecedented speed and accuracy, potentially enabling greater collaboration in robotic packaging and assembly and among swarms of drones.

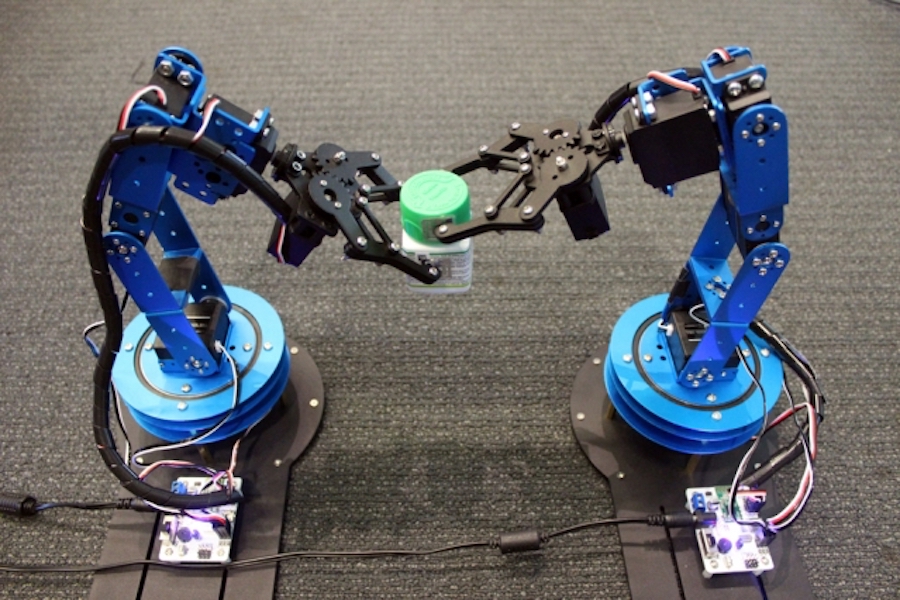

Photo courtesy of the researchers

A novel system developed at MIT uses RFID tags to help robots home in on moving objects with unprecedented speed and accuracy. The system could enable greater collaboration and precision by robots working on packaging and assembly, and by swarms of drones carrying out search-and-rescue missions.

In a paper being presented next week at the USENIX Symposium on Networked Systems Design and Implementation, the researchers show that robots using the system can locate tagged objects within 7.5 milliseconds, on average, and with an error of less than a centimeter.

In the system, called TurboTrack, an RFID (radio-frequency identification) tag can be applied to any object. A reader sends a wireless signal that reflects off the RFID tag and other nearby objects, and rebounds to the reader. An algorithm sifts through all the reflected signals to find the RFID tag’s response. Final computations then leverage the RFID tag’s movement — even though this usually decreases precision — to improve its localization accuracy.

The researchers say the system could replace computer vision for some robotic tasks. As with its human counterpart, computer vision is limited by what it can see, and it can fail to notice objects in cluttered environments. Radio frequency signals have no such restrictions: They can identify targets without visualization, within clutter and through walls.

To validate the system, the researchers attached one RFID tag to a cap and another to a bottle. A robotic arm located the cap and placed it onto the bottle, held by another robotic arm. In another demonstration, the researchers tracked RFID-equipped nanodrones during docking, maneuvering, and flying. In both tasks, the system was as accurate and fast as traditional computer-vision systems, while working in scenarios where computer vision fails, the researchers report.

“If you use RF signals for tasks typically done using computer vision, not only do you enable robots to do human things, but you can also enable them to do superhuman things,” says Fadel Adib, an assistant professor and principal investigator in the MIT Media Lab, and founding director of the Signal Kinetics Research Group. “And you can do it in a scalable way, because these RFID tags are only 3 cents each.”

In manufacturing, the system could enable robot arms to be more precise and versatile in, say, picking up, assembling, and packaging items along an assembly line. Another promising application is using handheld “nanodrones” for search and rescue missions. Nanodrones currently use computer vision and methods to stitch together captured images for localization purposes. These drones often get confused in chaotic areas, lose each other behind walls, and can’t uniquely identify each other. This all limits their ability to, say, spread out over an area and collaborate to search for a missing person. Using the researchers’ system, nanodrones in swarms could better locate each other, for greater control and collaboration.

“You could enable a swarm of nanodrones to form in certain ways, fly into cluttered environments, and even environments hidden from sight, with great precision,” says first author Zhihong Luo, a graduate student in the Signal Kinetics Research Group.

The other Media Lab co-authors on the paper are visiting student Qiping Zhang, postdoc Yunfei Ma, and research assistant Manish Singh.

Super resolution

Adib’s group has been working for years on using radio signals for tracking and identification purposes, such as detecting contamination in bottled foods, communicating with devices inside the body, and managing warehouse inventory.

Similar systems have attempted to use RFID tags for localization tasks. But these come with trade-offs in either accuracy or speed. To be accurate, it may take them several seconds to find a moving object; to increase speed, they lose accuracy.

The challenge was achieving both speed and accuracy simultaneously. To do so, the researchers drew inspiration from an imaging technique called “super-resolution imaging.” Super-resolution systems stitch together images from multiple angles to produce a finer-resolution image.

“The idea was to apply these super-resolution systems to radio signals,” Adib says. “As something moves, you get more perspectives in tracking it, so you can exploit the movement for accuracy.”

The system combines a standard RFID reader with a “helper” component that’s used to localize radio frequency signals. The helper shoots out a wideband signal comprising multiple frequencies, building on a modulation scheme used in wireless communication, called orthogonal frequency-division multiplexing.

The system captures all the signals rebounding off objects in the environment, including the RFID tag. One of those reflections carries a signature unique to the RFID tag, because the tag reflects and absorbs an incoming signal in a certain pattern, corresponding to bits of 0s and 1s, that the system can recognize.
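As a rough illustration of how a known bit pattern can single out one reflection among many, the sketch below correlates each reflected path's amplitude over time against the tag's expected pattern. Static clutter barely changes from sample to sample, so only the tag's path correlates strongly. The path names, amplitudes, and bit pattern are all hypothetical, and this is not the TurboTrack algorithm itself.

```python
# Minimal sketch: pick out the reflection that follows a known on/off pattern.
# All values below are invented for illustration.

def correlation(samples, pattern):
    """Mean-removed correlation between a path's amplitudes and a bit pattern."""
    mean_s = sum(samples) / len(samples)
    mean_p = sum(pattern) / len(pattern)
    return sum((s - mean_s) * (p - mean_p) for s, p in zip(samples, pattern))

def find_tag_path(paths, pattern):
    """Return the name of the path whose amplitude best tracks the pattern."""
    scores = {name: abs(correlation(samples, pattern))
              for name, samples in paths.items()}
    return max(scores, key=scores.get)

# Hypothetical tag pattern: 1 = reflect, 0 = absorb.
tag_bits = [1, 0, 1, 1, 0, 0, 1, 0]

# Hypothetical amplitude of each reflected path, one sample per bit period.
paths = {
    "wall": [0.9] * 8,                                          # static clutter
    "shelf": [0.40, 0.41, 0.39, 0.40, 0.40, 0.41, 0.40, 0.39],  # near-static, noisy
    "tag": [0.2 + 0.1 * b for b in tag_bits],                   # toggles with the bits
}

best_path = find_tag_path(paths, tag_bits)
print(best_path)
```

Removing the mean before correlating is what suppresses strong but unchanging reflections such as the wall, even though they are far louder than the tag.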

Because these signals travel at the speed of light, the system can compute a “time of flight” (measuring distance by calculating the time it takes a signal to travel between a transmitter and receiver) to gauge the location of the tag, as well as the other objects in the environment. But this provides only a ballpark localization figure, not subcentimeter precision.
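The arithmetic behind time of flight is simple enough to sketch. The example below assumes the transmitter and receiver sit at the same spot, so the signal covers the distance twice, and it uses the textbook rule of thumb that a signal of bandwidth B can separate reflections only down to about c / (2B). The 20-nanosecond delay and 100 MHz bandwidth are illustrative assumptions, not figures from the paper.

```python
# Minimal sketch of time-of-flight ranging under a co-located
# transmitter/receiver assumption. Input values are hypothetical.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

def round_trip_distance_m(time_of_flight_s: float) -> float:
    """Distance to a reflector when the signal travels out and back."""
    return SPEED_OF_LIGHT_M_PER_S * time_of_flight_s / 2.0

def range_resolution_m(bandwidth_hz: float) -> float:
    """Approximate smallest separation resolvable from bandwidth alone."""
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * bandwidth_hz)

distance = round_trip_distance_m(20e-9)   # assumed 20 ns round trip
resolution = range_resolution_m(100e6)    # assumed 100 MHz of bandwidth

print(f"distance: {distance:.3f} m, resolution: {resolution:.3f} m")
```

Under these assumptions the reflector is about 3 meters away, while the resolution is on the order of a meter, which suggests why time of flight alone gives only a coarse estimate.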

Leveraging movement

To zoom in on the tag’s location, the researchers developed what they call a “space-time super-resolution” algorithm.

The algorithm combines the location estimations for all rebounding signals, including the RFID signal, which it determined using time of flight. Using some probability calculations, it narrows down that group to a handful of potential locations for the RFID tag.

As the tag moves, the angle of its signal changes slightly, a change that also corresponds to a certain location. The algorithm can then use that angle change to track the tag’s distance as it moves. By constantly comparing that changing distance measurement to all other distance measurements from other signals, it can find the tag in three-dimensional space. This all happens in a fraction of a second.
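One way to see why a small angle change can reveal fine movement: if the “angle” is read as the phase of the reflected radio wave (an interpretation, not something the article spells out), then for a signal that travels to the tag and back, a phase shift of Δφ corresponds to a distance change of λ·Δφ / (4π), where λ is the wavelength. The sketch below assumes a 915 MHz carrier, which lies in the band commonly used by UHF RFID, and a hypothetical phase shift of 0.1 radians.

```python
# Minimal sketch: convert a round-trip phase change into a distance change.
# The carrier frequency and phase shift are assumed values for illustration.
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

def distance_change_m(phase_change_rad: float, carrier_hz: float) -> float:
    """Distance change implied by a phase change on an out-and-back path."""
    wavelength_m = SPEED_OF_LIGHT_M_PER_S / carrier_hz
    return wavelength_m * phase_change_rad / (4.0 * math.pi)

delta_d = distance_change_m(phase_change_rad=0.1, carrier_hz=915e6)
print(f"{delta_d * 1000:.2f} mm")
```

With these numbers the tag has moved only a few millimeters, far finer than a coarse time-of-flight estimate. Phase repeats every full cycle, though, so on its own it cannot say which cycle the tag is in. That ambiguity is one reason to combine it with the coarse estimate.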

“The high-level idea is that, by combining these measurements over time and over space, you get a better reconstruction of the tag’s position,” Adib says.

The work was sponsored, in part, by the National Science Foundation.