Robohub.org

Robust distributed decision-making in robot swarms

Credit: Jerry Wright

Reaching an optimal shared decision in a distributed way is a key aspect of many multi-agent and swarm robotic applications. As humans, we often have to come to some conclusions about the current state of the world so that we can make informed decisions and then act in a way that will achieve some desired state of the world. Of course, expecting every person to have perfect, up-to-date knowledge about the current state of the world is unrealistic, and so we often rely on the beliefs and experiences of others to inform our own beliefs.

We see this in nature too, where honey bees must choose between a large number of potential nesting sites in order to select the best one. When a hive grows too large, the majority of bees must choose a new site to relocate to via a process called “swarming”, a problem that can be generalised to choosing the best of a given number of choices. To do this, bees rely on a combination of their own experiences and the experiences of others in the hive to reach an agreement about which site is best. We can learn from solutions found in nature to develop our own models and apply these to swarms of robots. By having pairs of robots interact and reach agreements at an individual level, we can distribute the decision-making process across the entire swarm.

Decentralised algorithms such as these are often considered to be more robust than their centralised counterparts because there is no single point of failure, but this is rarely put to the test. Robustness is crucial in large robot swarms, given that individual robots are often made with cheap and unreliable hardware to keep costs down. Robustness is also important in scenarios that might be critical to the protection or preservation of life, such as search and rescue operations. In this context we introduce an alternative model for distributed decision-making in large robot swarms, examine its robustness to the presence of malfunctioning robots, and compare it to an existing model: the weighted voter model (Valentini et al., 2014).

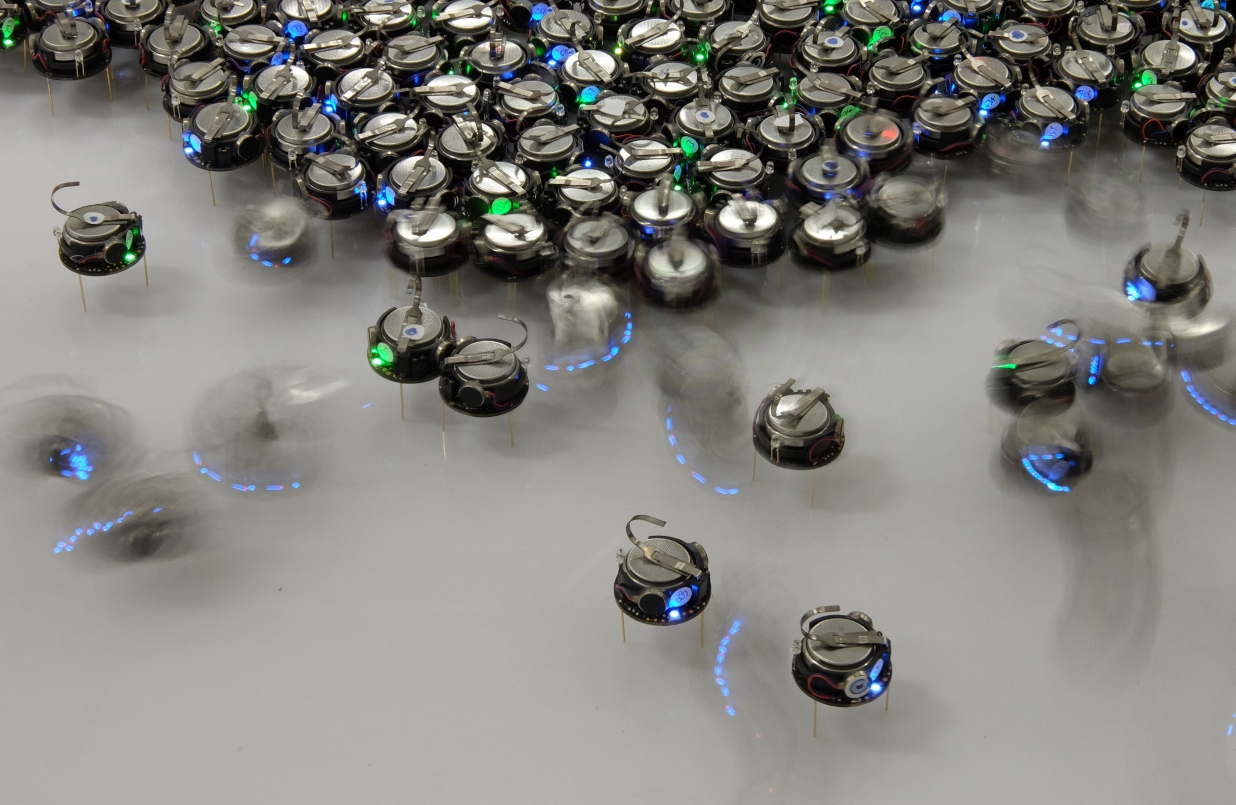

Kilobots are small, low-cost robots used to study swarm robotics. Each contains two motors for movement, and an RGB LED and IR transmitter for communication.

In this work we consider a simplified version of the weighted voter model in which robots (specifically Kilobots) move around randomly in a 1.2 m² arena and, at any point in time, are in one of two primary states: signalling or updating. Robots in the signalling state signal their current belief, either “choice A is the best” or “choice B is the best”, for a length of time proportional to the quality of that choice. In our experiments, choice A has a quality of 9 and choice B a quality of 7, so robots believing that choice A is best signal for longer than those believing that choice B is best. This biases the swarm: all else being equal, an updating robot is more likely to encounter a robot signalling for choice A at any given moment. Robots in the updating state select a signalling robot at random from their local neighbours, that is, those within their communication radius (limited to 10 cm for the Kilobots), and adopt that robot’s belief.
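The two states described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the controller that runs on the Kilobots: the function names, the `time_per_quality_unit` scale factor, and the choice to keep the current belief when no signalling robot is in range are all hypothetical.

```python
import random

# Qualities used in the article's experiments: choice A = 9, choice B = 7.
QUALITY = {"A": 9, "B": 7}
COMM_RADIUS_M = 0.10  # 10 cm communication limit stated for the Kilobots


def signalling_duration(belief, time_per_quality_unit=1.0):
    """Signalling time proportional to the quality of the believed choice.

    The scale factor is a hypothetical parameter; the article only states
    that the duration is proportional to quality.
    """
    return QUALITY[belief] * time_per_quality_unit


def voter_update(own_belief, neighbours, rng=random):
    """Adopt the belief of one randomly chosen signalling robot in range.

    neighbours: list of (distance_in_metres, belief) pairs, one per
    signalling robot. If none is within range, the current belief is kept
    (an assumption; the article does not describe this case).
    """
    in_range = [belief for dist, belief in neighbours if dist <= COMM_RADIUS_M]
    if not in_range:
        return own_belief
    return rng.choice(in_range)


# A robot believing B hears one robot in range (A) and one out of range (B).
print(signalling_duration("A"), signalling_duration("B"))
print(voter_update("B", [(0.05, "A"), (0.50, "B")]))
```

Because robots signalling for choice A stay in the signalling state longer, they are over-represented among the candidates that `voter_update` samples from, which is where the bias towards the higher-quality choice comes from.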

We compare this model to our “three-valued model”. Instead of immediately adopting the signalling robot’s belief, robots follow these rules. Let a belief in choice A correspond to a truth state of 1 and a belief in choice B to a truth state of 0. We then introduce a third truth state of 1/2, representing “undecided” or “unknown”, as an intermediate state. If the two robots conflict in their beliefs, such that one believes choice A (1) to be the best and the other choice B (0), then the updating robot adopts a new belief state of 1/2. If one robot has a stronger belief, either in choice A (1) or choice B (0), and the other is undecided (1/2), then the stronger belief is preserved. This approach eventually leads to the swarm successfully reaching consensus about which is the best choice. Furthermore, the swarm chooses the better of the two choices, which is A in this case.
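The update rule just described is small enough to write out directly. The sketch below is one possible encoding, using the floats 0.0, 0.5 and 1.0 for the three truth states; the function name is hypothetical, and the behaviour when both robots already hold the same state (the belief is left unchanged) is an assumption, since the text only spells out the conflicting and undecided cases.

```python
A, UNDECIDED, B = 1.0, 0.5, 0.0  # truth states: choice A, undecided, choice B


def three_valued_update(own, heard):
    """Return the updating robot's new belief after hearing a signalling robot.

    own:   the updating robot's current truth state (0.0, 0.5 or 1.0)
    heard: the signalling robot's truth state
    """
    if own == heard:
        return own            # agreement: nothing changes (assumed)
    if own == UNDECIDED:
        return heard          # the stronger belief is preserved
    if heard == UNDECIDED:
        return own            # the stronger belief is preserved
    return UNDECIDED          # direct conflict between 0 and 1


# Print the full update table for inspection.
for own in (B, UNDECIDED, A):
    for heard in (B, UNDECIDED, A):
        print(own, heard, "->", three_valued_update(own, heard))
```

The key difference from the weighted voter model is the last line: a robot that disagrees with what it hears moves to the undecided state rather than switching sides outright, so it takes two consecutive opposing signals to flip a belief from one choice to the other.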

We then adapt our model so that a percentage of the robots are malfunctioning: instead of updating their belief based on other robots, they adopt a random belief state (either 1 or 0) and then signal for that random choice. We run experiments both in simulation and on a physical swarm of Kilobots.
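As a rough sketch of how this malfunction model could be layered on top of either update rule in a simulation: the names below are hypothetical, and marking each robot as malfunctioning independently with a fixed probability is an assumption about how the malfunctioning percentage is realised, not a detail taken from the experiments.

```python
import random


def mark_malfunctioning(n_robots, p_malfunction, rng):
    """Flag each robot as malfunctioning independently with probability p.

    Assumption: independent draws, so the malfunctioning share of the swarm
    equals p only on average.
    """
    return [rng.random() < p_malfunction for _ in range(n_robots)]


def next_belief(own, heard, malfunctioning, update_rule, rng):
    """Belief a robot holds after its updating state.

    A malfunctioning robot ignores what it heard and picks 0 or 1 at random;
    a working robot applies the supplied update rule (for example the
    weighted voter rule or the three-valued rule).
    """
    if malfunctioning:
        return rng.choice([0.0, 1.0])
    return update_rule(own, heard)


rng = random.Random(0)
flags = mark_malfunctioning(400, 0.10, rng)
print(sum(flags), "of", len(flags), "robots flagged as malfunctioning")
```

Passing the update rule in as a function keeps the malfunction behaviour identical for both models, so any difference in outcome can be attributed to the update rule itself.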

Results

In the figure, we show results as a trajectory of the Kilobots at the Bristol Robotics Laboratory signalling for either choice A or choice B.

Experiments involve a population of 400 Kilobots where, on average, 10% of the swarm is malfunctioning (signalling for a random choice). With the three-valued model, 100% of the functional Kilobots in the swarm are eventually signalling for choice A, in just over 4 minutes. This model outperforms the weighted voter model which, while quicker to come to a decision, achieves below 90% on average. The inclusion of our “undecided” state slows convergence, but in doing so provides a means for robots to avoid adopting the belief of malfunctioning robots when they are in disagreement. For robotic systems where malfunction is a possibility, it therefore seems preferable to choose the three-valued model.

In the video, we show a time-lapse of experiments performed on 400 Kilobots where blue lights represent those signalling for choice A, and red those for choice B. Those in the intermediate state (1/2) may be coloured either red or blue. The green Kilobots are performing as if malfunctioning, such that they adopt a random belief and then signal for that belief.

In the future, we would like to consider ways to close the gap between the three-valued model and the weighted voter model in terms of decision-making speed while maintaining improved performance in the presence of malfunctioning robots. We also intend to consider different distributed algorithms for decision-making which take account of numerous beliefs being signalled within a robot’s radius of communication. So far, both models considered in this work only consider a single robot’s belief while updating, but there exist other models, such as majority rule models and models for opinion-pooling, which take account of the beliefs of many robots. Finally, we intend to investigate models that scale well with the number of choices that the swarm must choose between. Currently, most models only consider a small number of choices, but the models discussed here require discrete signalling periods which would need to increase as the number of choices increases.

This article was originally posted on EngMaths.org.

For more information, read Crosscombe, M., Lawry, J., Hauert, S., & Homer, M. (2017). Robust Distributed Decision-Making in Robot Swarms: Exploiting a Third Truth State. In 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2017).
