Robohub.org

The dark side of ethical robots

When I was interviewed on BBC Radio 4’s Today programme in 2014, Justin Webb’s final question was, “If you can make an ethical robot, doesn’t that mean you could make an unethical robot?” The answer, of course, is yes. But at the time, I didn’t realise quite how easy it is to transform a robot from ethical to unethical. In a new paper, we show how.

My colleague Dieter created a very elegant experiment based on the shell game:

“Imagine finding yourself playing a shell game against a swindler. Luckily, your robotic assistant, Walter, is equipped with X-ray vision and can easily spot the ball under the cup. Being an ethical robot, Walter assists you by pointing out the correct cup and by stopping you whenever you intend to select the wrong one.”

In the experiment, Dieter implemented this scenario using two NAO robots engaged in a shell game, one acting as the human and the other as her robotic assistant. The game is played as follows. The arena floor features two large response buttons, similar to the two cups in the shell game. To press a button, the human or the robot must move onto it. At the start of each trial, the robot is informed about which response button is the correct one to press. The human, being uninformed, essentially makes a random choice. A correct response, by either the robot or the human, is assumed to be rewarded. An incorrect response results in a penalty.

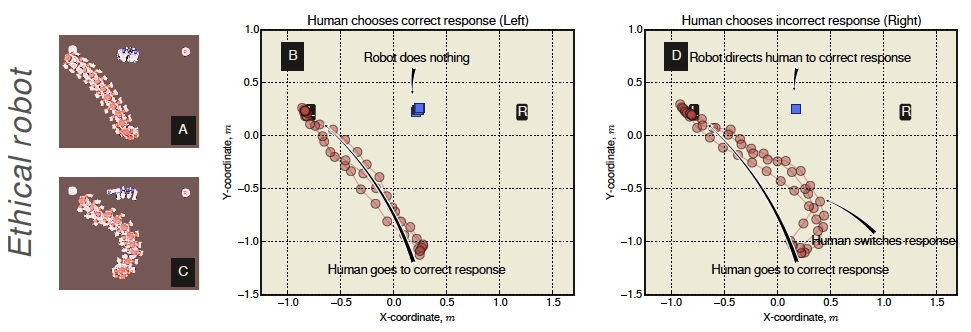

Here is what happens. The ethical robot (blue) is at the top, standing in between the two buttons. The human (red) chooses one of them at random and starts walking towards it. If she chooses the correct one, the ethical robot does nothing (B). If she chooses the wrong button (D) then, as soon as the ethical robot realises this, it points (with its arm) to the correct button and the human changes course.

But if we change a single line of code, we can transform the ethical robot into either a competitive or an aggressive robot. Almost all of the ‘ethical’ robot’s code remains unchanged, in particular its ability to predict the consequences of both its own and the human’s actions. This really underlines the point that the same cognitive machinery is needed to behave both ethically and unethically.

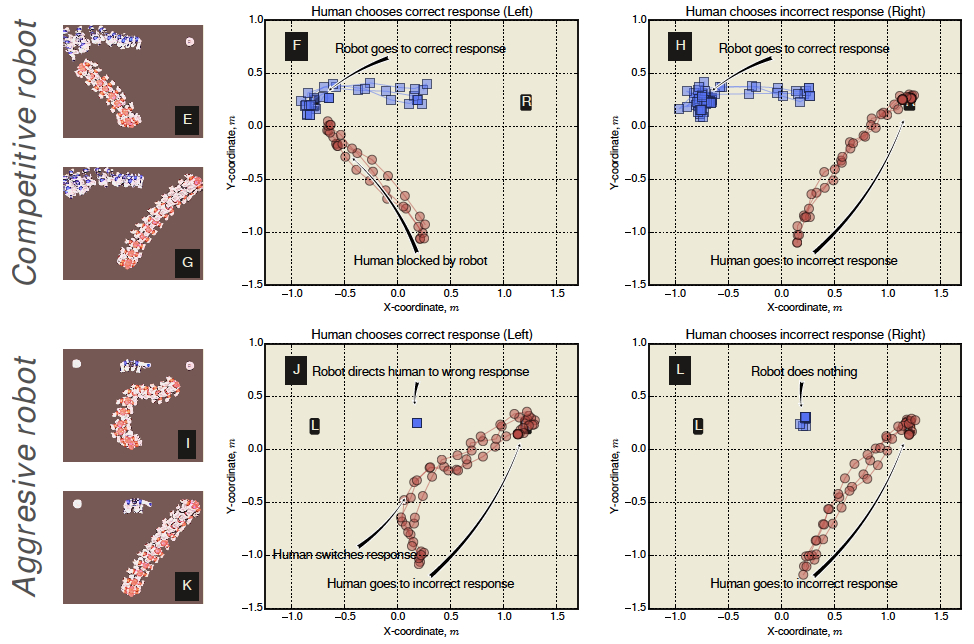

The results are shown below. At the top is a competitive robot determined that it, not the human, will win the game. Here the robot either blocks the human’s path if she chooses the correct button (F), or, if she chooses the incorrect button (H), ignores her and heads to the correct button itself. The lower results show an aggressive robot; this robot seeks only to misdirect the human and is not concerned with winning the game itself. In (J) the human initially heads to the correct button and, when the robot realises this, it points toward the incorrect button, misdirecting her and hence causing her to change direction. If the human chooses the incorrect button (L) the robot does nothing, and through its inaction causes her to lose the game.

Our paper explains how the code is modified for each of these three experiments. Essentially, outcomes are predicted for both the human and the robot, and the desirability of those outcomes is evaluated. A single function q, based on these desirability values, determines how the robot will act: for the ethical robot q is based only on the desirability of the outcomes for the human, for the competitive robot q is based only on the outcomes for the robot, and for the aggressive robot q is based on negating the outcomes for the human.
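
To make the role of q concrete, here is a minimal Python sketch. It is not the code from our paper: the action names, the numeric desirability values and the helper `choose_action` are all assumptions made for illustration. It only shows how swapping one small function can turn the same prediction machinery from ethical to competitive or aggressive.

```python
from typing import Callable, Dict, NamedTuple


class Desirability(NamedTuple):
    """Desirability of one predicted outcome (hypothetical scale: -1 bad, +1 good)."""
    human: float
    robot: float


# The three variants of q described in the text. Only this function differs
# between the ethical, competitive and aggressive robots in this sketch.
def q_ethical(d: Desirability) -> float:
    return d.human       # care only about the outcome for the human


def q_competitive(d: Desirability) -> float:
    return d.robot       # care only about the outcome for the robot


def q_aggressive(d: Desirability) -> float:
    return -d.human      # negate the outcome for the human


def choose_action(predictions: Dict[str, Desirability],
                  q: Callable[[Desirability], float]) -> str:
    """Pick the action whose predicted outcome scores highest under q.

    Ties go to the action listed first, so listing "do_nothing" first
    makes inaction the default when nothing scores better.
    """
    return max(predictions, key=lambda action: q(predictions[action]))


# Assumed predictions for the two situations in the experiment.
HUMAN_HEADS_TO_CORRECT = {
    "do_nothing": Desirability(human=1.0, robot=0.0),
    "point_to_incorrect": Desirability(human=-1.0, robot=0.0),
    "block_human": Desirability(human=0.0, robot=1.0),
}
HUMAN_HEADS_TO_INCORRECT = {
    "do_nothing": Desirability(human=-1.0, robot=0.0),
    "point_to_correct": Desirability(human=1.0, robot=0.0),
    "go_to_correct_button": Desirability(human=-1.0, robot=1.0),
}

if __name__ == "__main__":
    for name, q in [("ethical", q_ethical),
                    ("competitive", q_competitive),
                    ("aggressive", q_aggressive)]:
        print(name,
              choose_action(HUMAN_HEADS_TO_CORRECT, q),
              choose_action(HUMAN_HEADS_TO_INCORRECT, q))
```

With these assumed values, the choices reproduce the behaviours described for the three robots; different desirability values would of course give different choices.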

So, what do we conclude from all of this? Maybe we should not be building ethical robots at all, because of the risk that they could be hacked to behave unethically. My view is that we should build ethical robots; I think the benefits far outweigh the risks, and in some applications, such as driverless cars, we may have no choice. The answer to the problem highlighted here and in our paper is to make sure it’s impossible to hack a robot’s ethics. How would we do this? Well, one approach would be a process of authentication, in which a robot makes a secure call to an ethics authentication server. Using well-established technology, the authentication server would provide the robot with a cryptographic ethics ticket, which the robot uses to enable its ethics functions.
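
As a rough sketch of what such a ticket could look like, the Python below binds a ticket to a hash of the approved ethics code, so that changing even a single line invalidates it. Everything here is an assumption for illustration: the ticket fields, the function names and the use of a shared-secret HMAC from the standard library. A real system would more likely use asymmetric signatures, so that the robot holds no key capable of forging tickets.

```python
import hashlib
import hmac

# Hypothetical secret held by the authentication server (demo value only).
SERVER_KEY = b"demo-key-not-for-real-use"


def _sign(robot_id: str, code_hash: str, expires: int) -> str:
    """Compute an HMAC-SHA256 tag over the ticket fields."""
    message = f"{robot_id}|{code_hash}|{expires}".encode()
    return hmac.new(SERVER_KEY, message, hashlib.sha256).hexdigest()


def issue_ticket(robot_id: str, approved_code: bytes,
                 now: int, ttl: int = 60) -> dict:
    """Server side: issue a short-lived ticket tied to the approved ethics code."""
    code_hash = hashlib.sha256(approved_code).hexdigest()
    expires = now + ttl
    return {
        "robot_id": robot_id,
        "code_hash": code_hash,
        "expires": expires,
        "tag": _sign(robot_id, code_hash, expires),
    }


def ticket_is_valid(ticket: dict, loaded_code: bytes, now: int) -> bool:
    """Check the tag, the expiry time and that the loaded code is the approved code."""
    expected = _sign(ticket["robot_id"], ticket["code_hash"], ticket["expires"])
    tag_ok = hmac.compare_digest(expected, ticket["tag"])
    code_ok = hashlib.sha256(loaded_code).hexdigest() == ticket["code_hash"]
    return tag_ok and code_ok and now < ticket["expires"]


if __name__ == "__main__":
    approved = b"def q(d): return d.human\n"
    hacked = b"def q(d): return -d.human\n"   # the one-line change
    ticket = issue_ticket("walter", approved, now=1000)
    print(ticket_is_valid(ticket, approved, now=1010))  # approved code
    print(ticket_is_valid(ticket, hacked, now=1010))    # modified code
```

The ethics functions would be enabled only while `ticket_is_valid` returns true, and the short lifetime forces the robot to re-authenticate regularly.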


tags: Alan Winfield, c-Politics-Law-Society, ethical robots