Robohub.org

Video: Quadrocopter learns from its mistakes, perfects air racing

Manual programming of robots only gets you so far. And, as you can see in the video, for quadrocopters that’s not very far at all (see the “Without Learning” part starting at 1:30):

On its first attempt at the obstacle course, the flying robot follows a pre-computed flight path. The path is derived from a basic mathematical (“first principles”) model. But quadrocopters have complex aerodynamics – the force produced by the propellers changes with the vehicle’s velocity and orientation, so the actual force differs considerably from what the simple math predicts.

What’s worse, these flying vehicles use soft propellers for safety, which bend differently depending on how much thrust is applied and wear rapidly with use (and even more rapidly when crashing).

Even with continuous feedback on the robot’s position from the motion capture system, manually programming the robots with a control sequence that takes all these imperfections into account is impractical.

My colleague Angela Schoellig and the Flying Machine Arena team here at ETH Zurich have now developed and implemented algorithms that allow their flying robots to race through an obstacle course – and learn to improve their performance.

Here is how Angela described the process to me:

The learning algorithm is applied to a quadrocopter that is guided by an underlying trajectory-following controller. The result of the learning is an adapted input trajectory in terms of desired positions. The algorithm has been equipped with several unique features that are particularly important when pushing vehicles to the limits of their dynamic capabilities and when applying the learning algorithm to increasingly complex systems:

1. We designed an input update rule that explicitly takes actuation and sensor limits into account by solving a constrained convex problem.

2. We developed an identification routine that extracts the model data required by the learning algorithm from a numerical simulation of the vehicle dynamics. That is, the algorithm is applicable to systems for which an analytical model is difficult (or impossible) to derive.

3. We combined model data and experimental data, traditional filtering methods and state-of-the-art optimization techniques to achieve an effective and computationally efficient learning strategy that achieves convergence in less than ten iterations.

The result is a robot that learns and improves each time it tries to perform a task.
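To make the idea of learning from repeated trials a little more concrete, here is a minimal sketch in Python. It is not the Flying Machine Arena team's algorithm: the plant is a made-up one-dimensional system, every constant and function name is hypothetical, and the input limits are enforced by simple clipping, a crude stand-in for the constrained convex problem Angela describes. What it does show is the basic loop: fly a trial with inputs computed from an imperfect model, measure the tracking error, correct the inputs using that same model, and repeat.

```python
import math

# Hypothetical toy plant, NOT the quadrocopter dynamics from the article.
A_TRUE, B_TRUE, DISTURBANCE = 0.90, 0.80, 0.05  # "real" behavior, unknown to the learner
A_MODEL, B_MODEL = 0.85, 1.00                   # simplified "first principles" model
U_MAX = 0.3                                     # assumed actuator limit
LEARNING_GAIN = 0.8                             # fraction of the model-based correction applied
N = 50                                          # time steps per trial


def desired_trajectory():
    """One period of a sine wave, standing in for a slalom path."""
    return [math.sin(2 * math.pi * k / N) for k in range(N + 1)]


def clip(value, limit):
    """Keep an input inside [-limit, limit]."""
    return max(-limit, min(limit, value))


def fly(u):
    """Run one trial on the 'real' plant and return positions y[0..N]."""
    y = [0.0]
    for k in range(N):
        y.append(A_TRUE * y[k] + B_TRUE * u[k] + DISTURBANCE)
    return y


def learn(num_trials=10):
    """Repeat the task, correcting the input sequence after every trial."""
    y_des = desired_trajectory()
    # Trial 1 uses inputs obtained by inverting the imperfect model.
    u = [clip((y_des[k + 1] - A_MODEL * y_des[k]) / B_MODEL, U_MAX)
         for k in range(N)]
    rms_errors = []
    for _ in range(num_trials):
        y = fly(u)
        # e[k] is the position error caused by input u[k].
        e = [y_des[k + 1] - y[k + 1] for k in range(N)]
        rms_errors.append(math.sqrt(sum(x * x for x in e) / N))
        # Map the error back to an input correction through the model inverse,
        # apply a fraction of it, and respect the input limit.
        updated = []
        for k in range(N):
            previous_error = e[k - 1] if k > 0 else 0.0
            correction = (e[k] - A_MODEL * previous_error) / B_MODEL
            updated.append(clip(u[k] + LEARNING_GAIN * correction, U_MAX))
        u = updated
    return rms_errors, u


if __name__ == "__main__":
    errors, _ = learn()
    for trial, err in enumerate(errors, start=1):
        print(f"trial {trial:2d}: RMS tracking error = {err:.6f}")
```

Running the script prints the tracking error for each of ten trials. In this toy setup the error shrinks from trial to trial even though the learner never sees the true plant parameters, only the measured error. The real system has to cope with measurement noise, far richer dynamics and a proper constrained optimization, none of which this sketch attempts.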

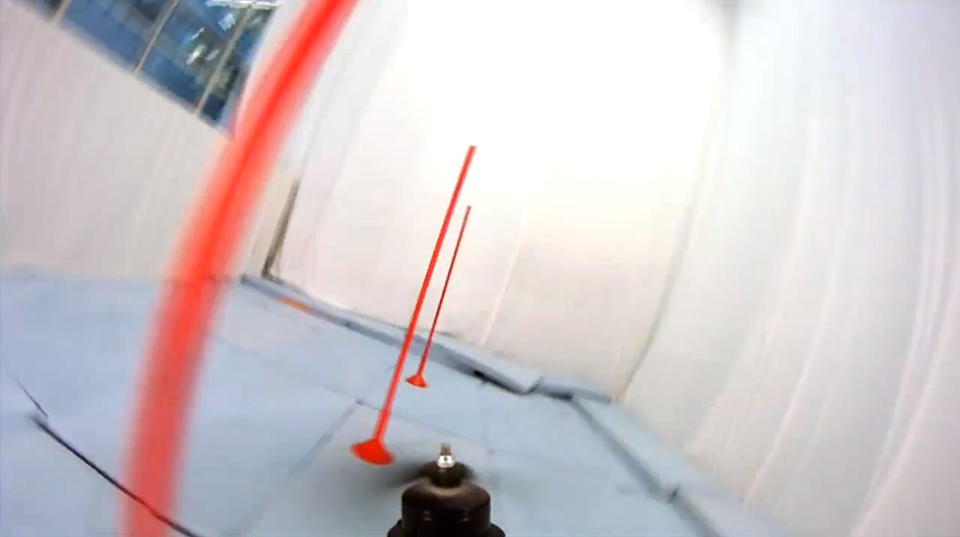

In this example the robot races through a pylon course, calling to mind air races such as the Red Bull Air Racing Championships or the Reno Air Races – except that there are no human pilots who have spent their lives learning to fly. Here it’s the robots doing the learning. And they are efficient, taking fewer than 10 training sessions to find the optimal steering commands!

Moreover, the learning algorithms are not specific to slalom racing; they can be used to learn other tasks. As Angela points out:

Our goal is to enable autonomous systems – such as the quadrocopter in the video – to ‘learn’ the way humans do: through practice.

The videos below show how learning algorithms can be used for other robotic tasks:

Full disclosure: Angela and the Flying Machine Arena team work in the same lab as I do. I’m also working on RoboEarth, which aims to enable robot learning on a much larger scale.
