Robohub.org

Vision-enabled package handling

Capturing and processing camera and sensor data, then recognizing various shapes to decide on a set of robotic actions, is conceptually easy. Humans do it naturally. For a machine, however, it is difficult.

Vision-guided motion is a growing field within robotics, with a number of vendors and integrators and several alternative approaches: vision-guided robots (VGR), advanced vision-guided robots (AVGR) and machine-learning-guided robots (MLGR).

According to Hob Wubbena, VP at Universal Robotics:

- VGR refers to 2D and 2.5D vision for pick, place, servo control, object identification, and tracking of known objects in structured locations

- AVGR refers to 2D and 3D vision for pick, place, servo control, identification, and tracking of a few pre-known objects in semi-structured or random locations

- MLGR refers to 2D and 3D vision for pick, place, servo control, identification, and tracking of many unknown objects in random locations
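The three definitions above can be encoded as a small lookup. This is a minimal sketch under two assumptions that are not in the source: a more capable class also covers the easier conditions of a less capable one, and the "few" versus "many" object-count distinction is left out. The function returns the least capable class whose stated scope covers a task; `Placement`, `CLASSES` and `least_capable_class` are hypothetical names.

```python
from enum import Enum


class Placement(Enum):
    """How predictably items are positioned in the pick area."""
    STRUCTURED = 1
    SEMI_STRUCTURED = 2
    RANDOM = 3


# (name, vision, handles unknown objects?, most disordered placement handled),
# ordered from least to most capable. Assumption: each class also covers
# the easier placements below its maximum.
CLASSES = [
    ("VGR", "2D/2.5D", False, Placement.STRUCTURED),
    ("AVGR", "2D/3D", False, Placement.RANDOM),
    ("MLGR", "2D/3D", True, Placement.RANDOM),
]


def least_capable_class(objects_known: bool, placement: Placement) -> str:
    """Return the first (least capable) class whose scope covers the task."""
    for name, _vision, handles_unknown, max_placement in CLASSES:
        if (objects_known or handles_unknown) and placement.value <= max_placement.value:
            return name
    raise ValueError("no class covers this task")
```

For example, known cartons in semi-structured locations map to AVGR, while unknown objects map to MLGR regardless of placement.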

Using 3D vision to distinguish between shapes and manipulate items from a stack of different objects, all while avoiding collisions with anything else in the work environment, is the holy grail of bin picking and of automated mixed-case and carton handling robotics.

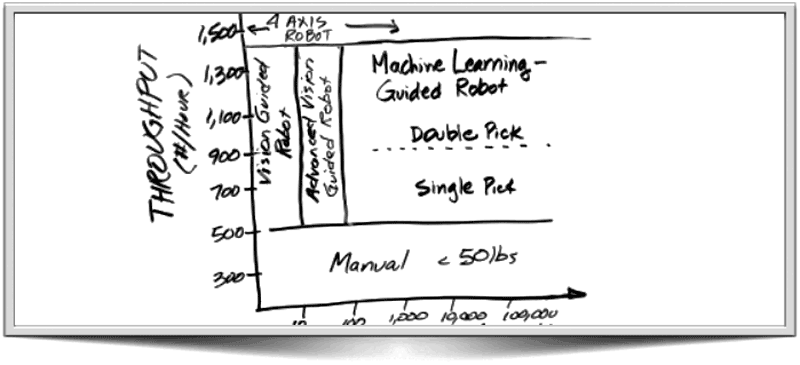

In the throughput chart shown above, Wubbena plots the various alternatives and compares them to a human picker. A human can recognize and pick an unlimited variety of carton and case sizes and shapes but can physically handle only up to 500 per hour. A 4-axis VGR robot can do the same job at up to 1,400 per hour, but only with a very limited number of sizes and shapes (10 or fewer). A 4-axis AVGR robot can do the same with a wider range of package sizes and shapes, up to 100. Both VGR and AVGR are limited because their vision-recognition processing and memory systems cannot identify random objects at high throughput rates. A 4-axis MLGR robot can handle an unlimited variety of random cartons or cases at up to 1,400 per hour, thanks to the speed of its machine learning software.
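The comparison above can be turned into simple arithmetic. This sketch uses the upper bounds quoted in the text; the AVGR rate is an assumption (the text says it "can do the same", read here as the same 1,400 per hour ceiling as VGR). `PickOption`, `options_for` and `hours_at_max_rate` are hypothetical helpers that filter options by carton variety and estimate the minimum time for a batch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PickOption:
    name: str
    max_cases_per_hour: int
    max_carton_types: Optional[int]  # None means no stated limit on sizes/shapes


# Upper bounds as quoted in the chart discussion.
# Assumption: AVGR shares the 1,400/hour ceiling stated for VGR.
OPTIONS = [
    PickOption("Human", 500, None),
    PickOption("VGR", 1400, 10),
    PickOption("AVGR", 1400, 100),
    PickOption("MLGR", 1400, None),
]


def options_for(carton_types: int) -> list[str]:
    """Names of options whose stated size/shape range covers the mix."""
    return [
        o.name for o in OPTIONS
        if o.max_carton_types is None or carton_types <= o.max_carton_types
    ]


def hours_at_max_rate(cases: int, option: PickOption) -> float:
    """Lower bound on hours to handle `cases` when running at the quoted ceiling."""
    return cases / option.max_cases_per_hour
```

With a mix of 500 carton types, only the human and MLGR options remain; at their quoted ceilings, 7,000 cases take at least 14 hours for the human and 5 hours for MLGR.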

Note that when conditions are favorable, all three vision-enabled picking alternatives can pick two cases at once, raising throughput beyond the robot's physical speed limit.
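The double-pick effect can be expressed as a one-line formula: effective cases per hour = pick cycles per hour × (1 + fraction of cycles that lift two cases). The article does not say whether its quoted rates count cycles or cases, nor how often double picks occur, so the inputs in this sketch are hypothetical; `effective_cases_per_hour` is an illustrative name.

```python
def effective_cases_per_hour(pick_cycles_per_hour: float, double_pick_fraction: float) -> float:
    """Cases moved per hour when some cycles pick two cases instead of one.

    double_pick_fraction is the share of cycles (0 to 1) that lift two cases,
    so throughput can at most double relative to single picks.
    """
    if not 0.0 <= double_pick_fraction <= 1.0:
        raise ValueError("double_pick_fraction must be between 0 and 1")
    return pick_cycles_per_hour * (1.0 + double_pick_fraction)
```

For instance, a hypothetical robot running 1,000 cycles per hour with double picks on a quarter of them would move 1,250 cases per hour.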

Wubbena's company, Universal Robotics (not to be confused with the Danish company Universal Robots), has developed a hardware-independent software system called Neocortex, which uses camera and sensor data, plus basic box-dimension parameters, to learn and then respond with robot control instructions in real time.
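"Hardware-independent" generally means the perception and decision layer emits vendor-neutral commands, and a thin driver per robot brand executes them. The sketch below illustrates that general pattern only; it is not Neocortex's design or API. `PickCommand`, `RobotDriver`, `LoggingDriver`, `plan_pick` and `run` are all hypothetical, and the stand-in driver just formats a string where a real one would talk to a vendor's controller.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class PickCommand:
    """Vendor-neutral instruction produced by the vision/learning layer."""
    x_mm: float
    y_mm: float
    z_mm: float
    yaw_deg: float


class RobotDriver(Protocol):
    """Anything that can execute a PickCommand on a specific robot brand."""
    def execute(self, cmd: PickCommand) -> str: ...


class LoggingDriver:
    """Hypothetical stand-in; a real driver would call a vendor controller."""
    def __init__(self, name: str) -> None:
        self.name = name

    def execute(self, cmd: PickCommand) -> str:
        return f"{self.name}: pick at ({cmd.x_mm}, {cmd.y_mm}, {cmd.z_mm}) yaw {cmd.yaw_deg}"


def plan_pick(center_mm: tuple[float, float, float],
              dims_mm: tuple[float, float, float],
              yaw_deg: float) -> PickCommand:
    """Hypothetical planner: target the top face of a detected box."""
    x, y, z = center_mm
    _length, _width, height = dims_mm
    return PickCommand(x, y, z + height / 2, yaw_deg)


def run(driver: RobotDriver, center_mm: tuple[float, float, float],
        dims_mm: tuple[float, float, float], yaw_deg: float) -> str:
    # The planner never needs to know which robot brand is attached.
    return driver.execute(plan_pick(center_mm, dims_mm, yaw_deg))
```

Swapping robots then means supplying a different object with an `execute` method, while the planning code stays unchanged.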

Neocortex uses supervised learning models with associated learning algorithms that analyze the data and recognize patterns for each unique carton. Neocortex sees a carton's attributes, assembles a reasonable real-time model, refines the nuances of its parameters, and drives the machine's behavior. Its learning model keeps getting smarter as it encounters more cartons over time.
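The article does not describe Neocortex's actual models, so as a stand-in, here is a nearest-centroid classifier, one of the simplest supervised learners, applied to carton dimensions. Each labelled example updates a running-mean centroid for its carton type, so recognition sharpens as more cartons are seen. `CartonRecognizer` and the 20 mm match threshold are hypothetical choices for illustration.

```python
import math


class CartonRecognizer:
    """Nearest-centroid classifier over sorted (L, W, H) box dimensions in mm.

    Illustrative stand-in, not Neocortex's algorithm.
    """

    def __init__(self, max_distance_mm: float = 20.0) -> None:
        self.max_distance_mm = max_distance_mm  # hypothetical match threshold
        self._sums: dict[str, list[float]] = {}
        self._counts: dict[str, int] = {}

    @staticmethod
    def _features(dims_mm) -> list[float]:
        # Sort descending so a carton matches however it is lying.
        return sorted((float(d) for d in dims_mm), reverse=True)

    def learn(self, dims_mm, label: str) -> None:
        """Supervised update: fold one labelled observation into its centroid."""
        sums = self._sums.setdefault(label, [0.0, 0.0, 0.0])
        for i, v in enumerate(self._features(dims_mm)):
            sums[i] += v
        self._counts[label] = self._counts.get(label, 0) + 1

    def centroid(self, label: str) -> list[float]:
        n = self._counts[label]  # KeyError for labels never learned
        return [s / n for s in self._sums[label]]

    def predict(self, dims_mm):
        """Closest known carton type, or None if nothing is close enough."""
        feats = self._features(dims_mm)
        best_label, best_dist = None, math.inf
        for label in self._counts:
            dist = math.dist(feats, self.centroid(label))
            if dist < best_dist:
                best_label, best_dist = label, dist
        return best_label if best_dist <= self.max_distance_mm else None
```

After learning two slightly different 400×300×200 mm examples labelled "A", a box measured as 300×401×199 mm is recognized as "A", while a 250 mm cube matches nothing and returns `None`.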

Universal's Neocortex system is an example of the evolutionary progression of robots learning to make more decisions for themselves. This new generation of smarter robots is leaving research labs and making its way into factories and shops, bringing robust vision-enabled technologies to logistics, distribution, materials handling and intricate manufacturing.
