Robohub.org

Visual model-based reinforcement learning as a path towards generalist robots

By Chelsea Finn∗, Frederik Ebert∗, Sudeep Dasari, Annie Xie, Alex Lee, and Sergey Levine

With very little explicit supervision and feedback, humans are able to learn a wide range of motor skills by simply interacting with and observing the world through their senses. While there has been significant progress towards building machines that can learn complex skills and learn based on raw sensory information such as image pixels, acquiring large and diverse repertoires of general skills remains an open challenge. Our goal is to build a generalist: a robot that can perform many different tasks, like arranging objects, picking up toys, and folding towels, and can do so with many different objects in the real world without re-learning for each object or task.

While these basic motor skills are much simpler and less impressive than mastering Chess or even using a spatula, we think that being able to achieve such generality with a single model is a fundamental aspect of intelligence.

The key to acquiring generality is diversity. If you deploy a learning algorithm in a narrow, closed-world environment, the agent will recover skills that are successful only in a narrow range of settings. That’s why an algorithm trained to play Breakout will struggle when anything about the images or the game changes. Indeed, the success of image classifiers relies on large, diverse datasets like ImageNet. However, having a robot autonomously learn from large and diverse datasets is quite challenging. While collecting diverse sensory data is relatively straightforward, it is simply not practical for a person to annotate all of the robot’s experiences. It is more scalable to collect completely unlabeled experiences. Then, given only sensory data, akin to what humans have, what can you learn? With raw sensory data there is no notion of progress, reward, or success. Unlike games like Breakout, the real world doesn’t give us a score or extra lives.

We have developed an algorithm that can learn a general-purpose predictive model using unlabeled sensory experiences, and then use this single model to perform a wide range of tasks.

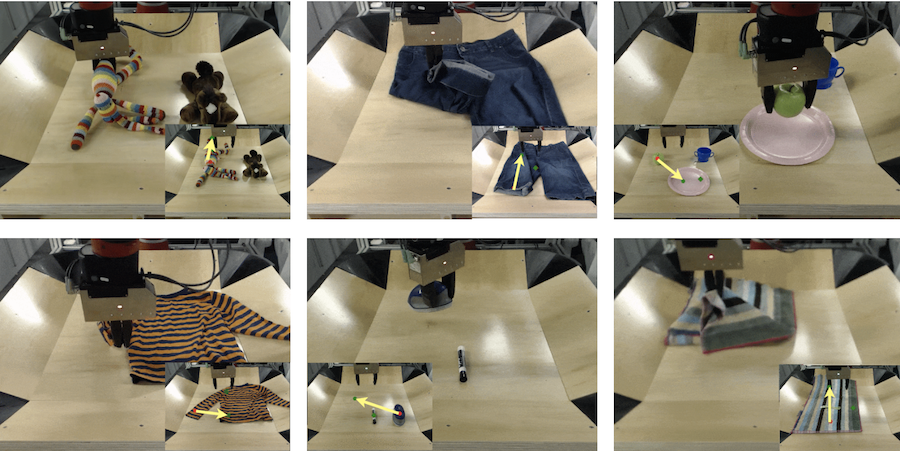

With a single model, our approach can perform a wide range of tasks, including lifting objects, folding shorts, placing an apple onto a plate, rearranging objects, and covering a fork with a towel.

In this post, we will describe how this works. We will discuss how we can learn based on only raw sensory interaction data (i.e. image pixels, without requiring object detectors or hand-engineered perception components). We will show how we can use what was learned to accomplish many different user-specified tasks. And, we will demonstrate how this approach can control a real robot from raw pixels, performing tasks and interacting with objects that the robot has never seen before.

Learning to Predict from Unsupervised Interaction

We first need a means to collect diverse data. If we train the robot to perform a single skill with a single object instance, i.e. using a particular hammer to hit a particular nail, then it will only learn about that narrow setting; that particular hammer and nail is its entire universe. How can we build robots that learn more general skills? Instead of learning a single task in a narrow environment, we can have robots learn on their own, in diverse environments, akin to a child playing and exploring.

If a robot can collect data on its own and learn from that experience completely autonomously, then it doesn’t require a person to supervise and can hence collect experience and learn about the world at any time of day, even overnight! Further, multiple robots can collect data simultaneously and share their experiences – data collection is scalable, hence making it practical to collect diverse data with many objects and motions. To implement this, we had two robots collect data in parallel by taking random actions with a wide range of objects, both rigid objects like toys and cups, and deformable objects like cloth and towels:

Two robots interact with the world, collecting data

autonomously with many objects and many motions.

In the data collection process, we observe what the robot’s sensors measure: the image pixels (vision), the position of the arm (proprioception), and the motor commands sent to the robot (action). We cannot directly measure the positions of the objects, how they react to being pushed, their speed, etc. Further, in this data, there is no notion of progress or success. Unlike a game of Breakout or hammering a nail, we don’t get a score or an objective. All we have to learn from, when interacting in the real world, is what is provided by our senses, or in this case, the robot’s sensors.

So, what can we learn, when only given our senses? We can learn to predict — what will the world look like, or feel like, if the robots moves its arm in one way versus in another way?

The robot learns to predict what the future will look like if it moves

its arm in different ways, learning about physics, objects, and itself.

Prediction allows us to learn general things about the world, things like objects and physics. And such general-purpose knowledge is exactly what the Breakout-playing agent is missing. Prediction also allows us to learn from all of the data that we have: a stream of actions and images has a lot of implicit supervision. This is critical because we don’t have a score or reward function. Model-free reinforcement learning systems typically only learn from the supervision provided from the reward function, whereas model-based RL agents utilize the rich information available in the pixels they observe. Now, how do we actually use these predictions? We will discuss this next.

Planning to Perform Human-Specified Tasks

If we have a predictive model of the world, then we can use it to plan to achieve goals. That is, if we understand the consequences of our actions, then we can use that understanding to choose actions that lead to the desired outcome. We use a sampling-based procedure to plan. In particular, we sample many different candidate action sequences, then select the top plans—the actions that are most likely to lead to the desired outcome—and refine our plan iteratively, by resampling from a distribution of actions fitted to the top candidate action sequences. Once we come up with a plan that we like, we then execute the first step of our plan in the real world, observe the next image, and then replan in case something unexpected happened.

A natural question now is—how can a user specify a goal or desired outcome to the robot? We have experimented with a number of different ways to do so. One of the easiest mechanisms that we have found is to simply click on a pixel in the initial image and specify where the object corresponding to that pixel should be moved, by clicking another pixel position. We can also give more than one pair of pixels to specify other desired object motions. While there are types of goals that cannot be expressed in this way (and we have explored more versatile goal specifications, such as goal classifiers), we have found that specifying pixel positions can be used to describe a wide variety of tasks and is remarkably easy to provide. To be clear, these user-provided goal specifications are not used during the data collection, when the robot is interacting with the world—they are only used at test-time per se, when we want the robot to use its predictive model to accomplish a certain goal.

Experiments

We experiment with this overall approach on a Sawyer robot, collecting 2 weeks of unsupervised experience. Critically, the only human involvement during training is providing a diverse range of objects for the robot to interact with (swapping out objects periodically) and coding the random robot motions that are used to collect data. This allows us to collect data on multiple robots nearly 24 hours a day, with very little effort. We train a single action-conditioned video prediction model on all of this data, including two camera viewpoints, and use the iterative planning procedure described previously to plan and execute on user-specified tasks.

Since we set out to achieve generality, we evaluate the same predictive model on a wide range of tasks involving objects that the robot has never seen before and goals the robot has not encountered previously.

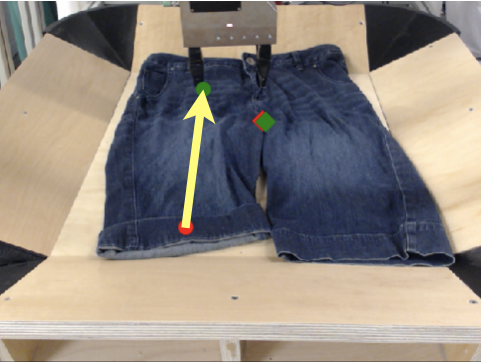

For example, we ask the robot to fold shorts:

Left: The goal is to fold the left side of the shorts. Middle: the robot’s

prediction corresponding to its plan. Right: the robot performs its plan.

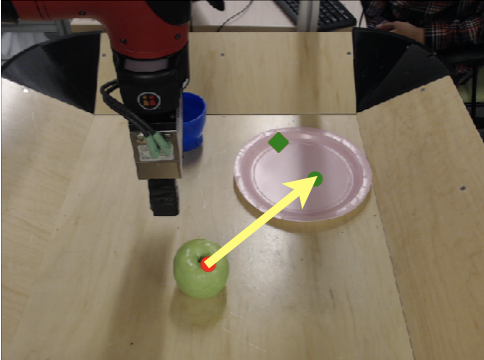

Or put an apple on a plate:

Left: The goal is to put the apple on the plate. Middle: the robot’s

prediction corresponding to its plan. Right: the robot performs its plan.

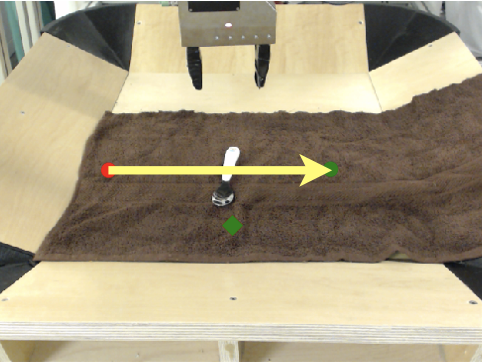

Finally, we can also ask the robot to cover a spoon with a towel:

Left: The goal is to cover the spoon with the towel. Middle: the robot’s

prediction corresponding to its plan. Right: the robot performs its plan.

Interestingly, we find that, even though the model’s predictions are far from perfect, it can still use them to effectively accomplish the specified goal.

Related Work

There have been many prior works that approach the problem of model-based reinforcement learning (RL), i.e. learning a predictive model, and then using this model to act or using it to learn a policy. Many of such prior works have focused on settings where the the positions of objects or other task-relevant information can be accessed directly—rather than through images or other raw sensor observations. Having this low-dimensional state representation is a strong assumption that is often impossible to fulfill in the real world . Model-based RL methods that directly operate on raw image frames have not been studied as extensively. Several algorithms have been proposed for simple, synthetic images and video game environments, which have focused on a fixed set of objects and tasks. Other work has studied model-based RL in the real world, again focusing on individual skills.

A number of recent works have studied self-supervised robotic learning, where large-scale unattended data collection is used to learn individual skills such as grasping (e.g. see these works), push-grasp synergies, or obstacle avoidance. Our approach is also fully self-supervised; in contrast with these approaches, we learn a predictive model that is goal-agnostic and can be used to perform a variety of manipulation skills.

Discussion

Generalization to many distinct tasks in visually diverse settings is arguably one of the biggest challenges in reinforcement learning and robotics research today. Deep learning has greatly reduced the amount of task-specific engineering needed to deploy an algorithm; however, prior methods typically require extensive amounts of supervised experience or focus on mastery of individual tasks. Our results suggest that our approach can generalize to a wide range of tasks and objects, including those never seen previously. The generality of the model is the result of large-scale self-supervised learning from interaction. We believe the results represent a significant step forward in terms of generality of tasks achieved by a single robotic reinforcement learning system.

The work in this post is based on the following paper:

- Visual Foresight: Model-Based Deep Reinforcement Learning for Vision-Based Robotic Control

Frederik Ebert*, Chelsea Finn*, Sudeep Dasari, Annie Xie, Alex Lee, Sergey Levine

Project webpage

The above paper is an extended version of the following four papers, and builds upon the fifth paper:

-

Robustness via Retrying: Closed-Loop Robotic Manipulation via Self-Supervised Learning

Frederik Ebert, Sudeep Dasari, Alex Lee, Sergey Levine, Chelsea Finn

Conference on Robot Learning (CoRL), 2018 -

Few-Shot Goal Inference for Visuomotor Learning and Planning

Annie Xie, Avi Singh, Sergey Levine, Chelsea Finn

Conference on Robot Learning (CoRL), 2018 -

Self-Supervised Visual Planning with Temporal Skip Connections

Frederik Ebert, Chelsea Finn, Alex Lee, Sergey Levine

Conference on Robot Learning (CoRL), 2017 -

Deep Visual Foresight for Planning Robot Motion

Chelsea Finn & Sergey Levine

International Conference on Robotics and Automation (ICRA), 2017 -

Unsupervised Learning for Physical Interaction via Video Prediction

Chelsea Finn, Ian Goodfellow, Sergey Levine

Neural Information Processing Systems (NeurIPS), 2016

This article was initially published on the BAIR blog, and appears here with the authors’ permission.

tags: herotagrc