Robohub.org

When humans play in competition with a humanoid robot, they delay their decisions when the robot looks at them

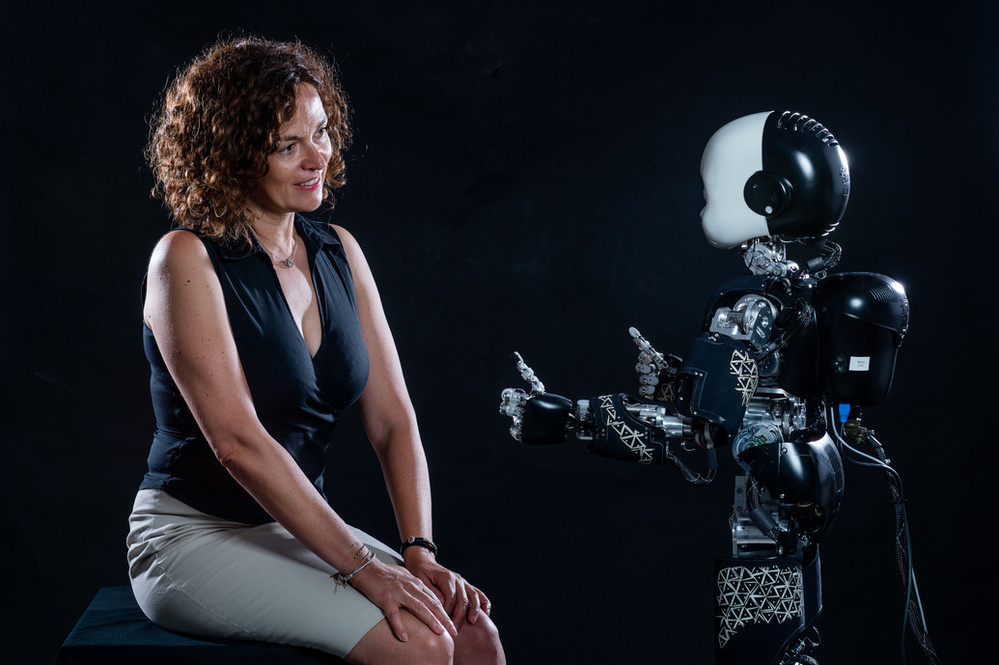

Author: Andrea Facco. Credits: Istituto Italiano di Tecnologia – © IIT, all rights reserved

Gaze is an extremely powerful and important signal during human-human communication and interaction, conveying intentions and informing about others’ decisions. What happens when a robot and a human interact while looking at each other? Researchers at IIT-Istituto Italiano di Tecnologia (Italian Institute of Technology) investigated whether a humanoid robot’s gaze influences the way people reason in a social decision-making context. They found that mutual gaze with a robot affects human neural activity and influences decision-making processes, in particular by delaying them. This suggests that humans perceive a robot’s gaze as a social signal. These findings have strong implications for contexts in which humanoids may find applications, such as co-workers, clinical support or domestic assistants.

The study, published in Science Robotics, was conceived as part of a larger project led by Agnieszka Wykowska, coordinator of IIT’s lab “Social Cognition in Human-Robot Interaction”, and funded by the European Research Council (ERC). The project, called “InStance”, addresses the question of when and under what conditions people treat robots as intentional beings: that is, whether people refer to mental states such as beliefs or desires in order to explain and interpret a robot’s behaviour.

The research paper’s authors are Marwen Belkaid, Kyveli Kompatsiari, Davide de Tommaso, Ingrid Zablith, and Agnieszka Wykowska.


In most everyday situations, the human brain needs to engage not only in making decisions, but also in anticipating and predicting the behaviour of others. In such contexts, gaze can be highly informative about others’ intentions, goals and upcoming decisions. Humans pay attention to the eyes of others, and the brain reacts very strongly when someone looks at them or directs their gaze to a certain event or location in the environment. The researchers investigated this kind of interaction with a robot.

“Robots will be more and more present in our everyday life,” comments Agnieszka Wykowska, Principal Investigator at IIT and senior author of the paper. “That is why it is important to understand not only the technological aspects of robot design, but also the human side of the human-robot interaction. Specifically, it is important to understand how the human brain processes behavioral signals conveyed by robots.”

Wykowska and her research group asked 40 participants to play a strategic game, the Chicken game, with the robot iCub while they measured the participants’ behaviour and neural activity, the latter by means of electroencephalography (EEG). In the game, two drivers of simulated cars move towards each other on a collision course, and the outcome depends on whether each player yields or keeps going straight.


The researchers found that participants were slower to respond when iCub established mutual gaze during decision making than when it averted its gaze. The delayed responses may suggest that mutual gaze entailed a higher cognitive effort, for example by eliciting more reasoning about iCub’s choices or a higher degree of suppression of the potentially distracting gaze stimulus, which was irrelevant to the task.

“Think of playing poker with a robot. If the robot looks at you at the moment you need to decide on your next move, you will have a more difficult time making a decision, relative to a situation in which the robot gazes away. Your brain will also need to employ effortful and costly processes to try to ‘ignore’ the robot’s gaze,” Wykowska explains.

These results suggest that the robot’s gaze “hijacks” the socio-cognitive mechanisms of the human brain, making the brain respond to the robot as if it were a social agent. In this sense, “being social” may not always be beneficial for the humans interacting with a robot: it can interfere with their performance and slow their decision making, even if the interaction is enjoyable and engaging.

Wykowska and her research group hope that these findings will help roboticists design robots that exhibit the behaviour most appropriate for a specific context of application. Humanoids with social behaviours may be helpful in elderly care or childcare; the iCub robot, for example, is part of an experimental therapy for autism. On the other hand, when focus on the task is needed, as in factory settings or air traffic control, the presence of a robot that displays social signals might be distracting.
