Robohub.org

Drones can almost see in the dark

We want to share our recent breakthrough in teaching drones to fly using an eye-inspired camera. This opens the door to fast, agile maneuvers and to flight in low-light environments, where all commercial drones fail. Possible applications include supporting rescue teams on search missions at dusk or dawn. We submitted this work to IEEE Robotics and Automation Letters.

How it works

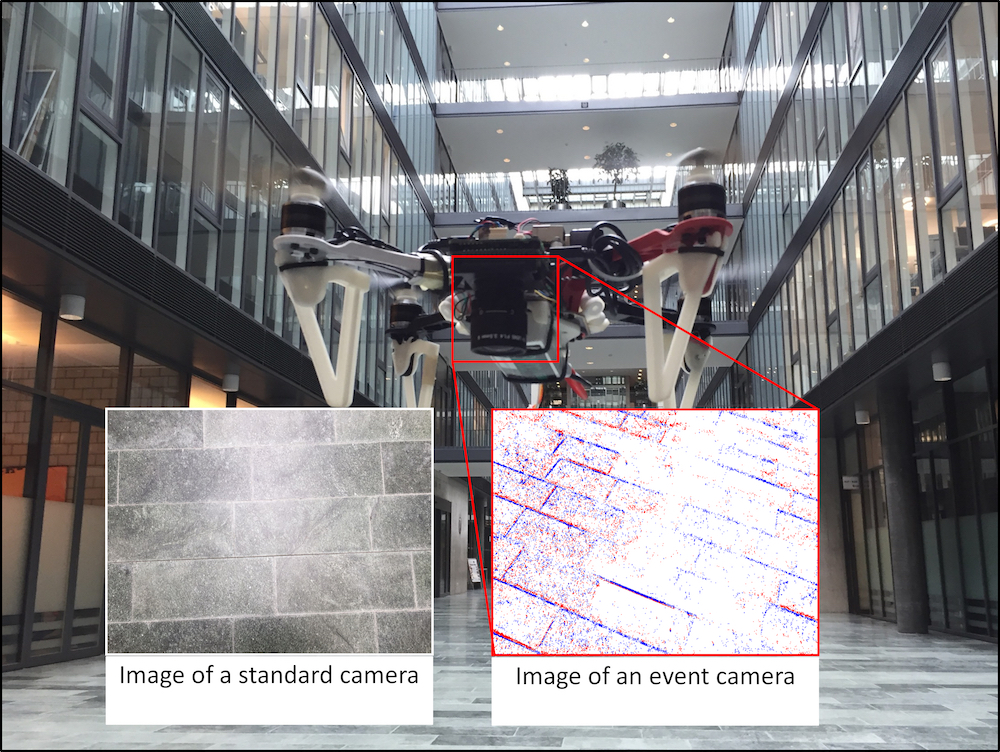

Event cameras are bio-inspired vision sensors that output pixel-level brightness changes instead of standard intensity frames. They do not suffer from motion blur and have a very high dynamic range, so they provide reliable visual information during high-speed motion and in scenes with high dynamic range. However, event cameras output little information when motion is limited, for example when the camera is almost still. Conversely, standard cameras provide instant and rich information about the environment most of the time (at low speeds and in good lighting), but they fail severely during fast motion or in difficult lighting, such as high dynamic range or low-light scenes.

In this work, we present the first state estimation pipeline that leverages the complementary advantages of these two sensors by fusing events, standard frames, and inertial measurements in a tightly coupled manner. We show that our hybrid pipeline leads to an accuracy improvement of 130% over event-only pipelines and 85% over standard-frame-only visual-inertial systems, while remaining computationally tractable.

Furthermore, we use our pipeline to demonstrate, to the best of our knowledge, the first autonomous quadrotor flight using an event camera for state estimation. This unlocks flight scenarios that were not reachable with traditional visual-inertial odometry: we show that the quadrotor can fly in low light (for example, after the lights in a room are switched off completely) and in scenes with a very high dynamic range (one side of the room brightly lit and the other side dark).
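To make the idea of "pixel-level brightness changes" more concrete, here is a minimal sketch of the commonly used idealized event-generation model, in which a pixel emits an event when its log-brightness changes by more than a contrast threshold. This is only an illustration, not the pipeline from the paper: the function name `events_between`, the threshold value, and the toy 2x2 images are all assumptions, and a real sensor fires events asynchronously and with noise rather than by comparing two frames.

```python
import math

def events_between(prev_frame, next_frame, threshold=0.2, eps=1e-6):
    """Idealized event model (illustrative assumption, not the paper's code).

    Returns a list of (row, col, polarity) tuples for every pixel whose
    log-brightness changed by at least `threshold` between the two frames.
    Polarity is +1 for brighter and -1 for darker.
    """
    events = []
    for r, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for c, (p, n) in enumerate(zip(prev_row, next_row)):
            # eps avoids log(0) for completely black pixels
            delta = math.log(n + eps) - math.log(p + eps)
            if abs(delta) >= threshold:
                events.append((r, c, 1 if delta > 0 else -1))
    return events

# Toy 2x2 brightness images (hypothetical values in [0, 1])
static = [[0.5, 0.5], [0.5, 0.5]]
moved = [[0.5, 0.9], [0.1, 0.5]]

# The same scene at 1% of the brightness, as in a dark room
dim_static = [[v * 0.01 for v in row] for row in static]
dim_moved = [[v * 0.01 for v in row] for row in moved]

print(events_between(static, static))        # no change, no events
print(events_between(static, moved))         # events only where brightness changed
print(events_between(dim_static, dim_moved)) # same events despite the dim scene
```

Two properties from the text show up in this toy model: an unchanged scene produces no events at all, which is why an almost still camera yields little information, and because the comparison is made on log-brightness, the same relative change triggers the same events in a bright and a dim scene.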

Paper:

T. Rosinol Vidal, H. Rebecq, T. Horstschaefer, D. Scaramuzza

Hybrid, Frame and Event based Visual Inertial Odometry for Robust, Autonomous Navigation of Quadrotors, submitted to IEEE Robotics and Automation Letters
