Robohub.org

How to start with self-driving cars using ROS

Self-driving cars are inevitable.

In recent years, self-driving cars have become a priority for automotive companies. BMW, Bosch, Google, Baidu, Toyota, GE, Tesla, Ford, Uber and Volvo are investing in autonomous driving research. Also, many new companies have appeared in the autonomous cars industry: Drive.ai, Cruise, nuTonomy, Waymo to name a few (read this post for a list of 260 companies involved in the self-driving industry).

The rapid development of this field has prompted a large demand for autonomous cars engineers. Among the skills required, knowing how to program with ROS is becoming an important one. You just have to visit the robotics-worldwide list to see the large amount of job offers for working/researching in autonomous cars, which demand knowledge of ROS.

Why ROS is interesting for autonomous cars

Robot Operating System (ROS) is a mature and flexible framework for robotics programming. ROS provides the required tools to easily access sensors data, process that data, and generate an appropriate response for the motors and other actuators of the robot. The whole ROS system has been designed to be fully distributed in terms of computation, so different computers can take part in the control processes, and act together as a single entity (the robot).

Due to these characteristics, ROS is a perfect tool for self-driving cars. After all, an autonomous vehicle can be considered as just another type of robot, so the same types of programs can be used to control them. ROS is interesting because:

1. There is a lot of code for autonomous cars already created. Autonomous cars require the creation of algorithms that are able to build a map, localize the robot using lidars or GPS, plan paths along maps, avoid obstacles, process pointclouds or cameras data to extract information, etc… Many algorithms designed for the navigation of wheeled robots are almost directly applicable to autonomous cars. Since those algorithms are already available in ROS, self-driving cars can just make use of them off-the-shelf.

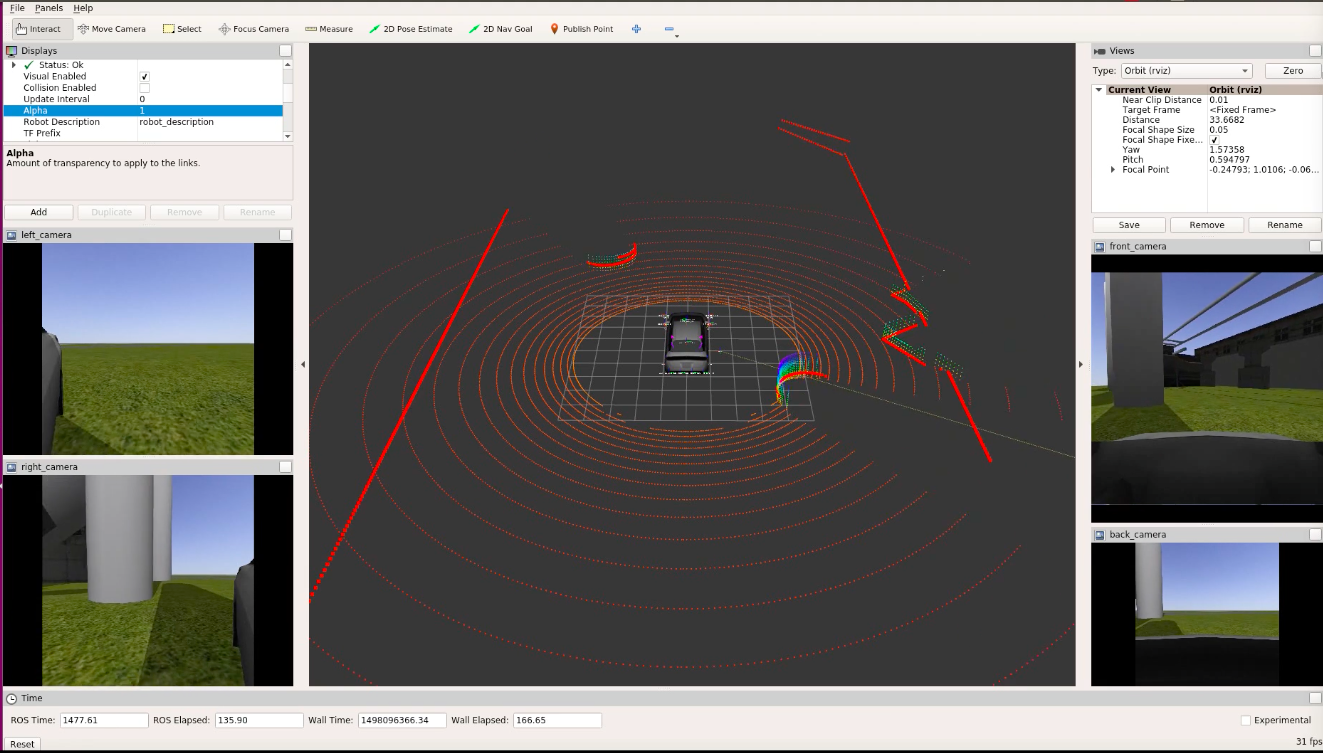

2. Visualization tools are already available. ROS has created a suite of graphical tools that allow the easy recording and visualization of data captured by the sensors, and representation of the status of the vehicle in a comprehensive manner. Also, it provides a simple way to create additional visualizations required for particular needs. This is tremendously useful when developing the control software and trying to debug the code.

3. It is relatively simple to start an autonomous car project with ROS onboard. You can start right now with a simple wheeled robot equipped with a pair of wheels, a camera, a laser scanner, and the ROS navigation stack. You’re set up in a few hours. That could serve as a basis to understand how the whole thing works. Then you can move to more professional setups, like for example buying a car that is already prepared for autonomous car experiments, with full ROS support (like the Dataspeed Inc. Lincoln MKZ DBW kit).

Self-driving car companies have identified those advantages and have started to use ROS in their developments. Examples of companies using ROS include BMW (watch their presentation at ROSCON 2015), Bosch or nuTonomy.

Weak points of using ROS

ROS is not all nice and good. At present, ROS presents two important drawbacks for autonomous vehicles:

1. Single point of failure. All ROS applications rely on a software component called the roscore. That component, provided by ROS itself, is in charge of handling all coordination between the different parts of the ROS application. If the component fails, then the whole ROS system goes down. This implies that it does not matter how well your ROS application has been constructed. If roscore dies, your application dies.

2. ROS is not secure. The current version of ROS does not implement any security mechanism for preventing third parties from getting into the ROS network and reading the communication between nodes. This implies that anybody with access to the network of the car can get to the ROS messaging and kidnap the car behavior.

All those drawbacks are expected to be solved in the newest version of ROS, ROS 2. Open Robotics, the creators of ROS have recently released a second beta of ROS 2 which can be tested here. It is expected there will be a release version by the end of 2017.

In any case, we believe that the ROS-based path to self-driving vehicles is the way to go. That is why, we propose a low budget learning path for becoming a self-driving cars engineer, based on the ROS framework.

Our low cost solution to become a self-driving car engineer

Step 1

First thing you need is to learn ROS. ROS is quite a complex framework to learn and requires dedication and effort. Watch the following video for a list of the 5 best methods to learn ROS. Learning basic ROS will help you understand how to create programs with that framework, and how to reuse programs made by others.

Step 2

Next, you need to get familiar with the basic concepts of robot navigation with ROS. Learning how the ROS navigation stack works will provide you the knowledge of basic concepts in navigation like mapping, path planning or sensor fusion. There is no better way to learn this than taking the ROS Navigation in 5 days course developed by Robot Ignite Academy (disclaimer – this is provided by my company The Construct).

Step 3

Third step would be to learn the basic ROS application to autonomous cars: how to use the sensors available in any standard of autonomous car, how to navigate using a GPS, how to generate an algorithm for obstacle detection based on the sensors data, how to interface ROS with the Can-bus protocol used in all the cars used in the industry…

The following video tutorial is ideal to start learning ROS applied to Autonomous Vehicles from zero. The course teaches how to program a car with ROS for autonomous navigation by using an autonomous car simulation. The video is available for free, but if you want to get the most of it, we recommend you to do the exercises at the same time by enrolling into the Robot Ignite Academy.

Step 4

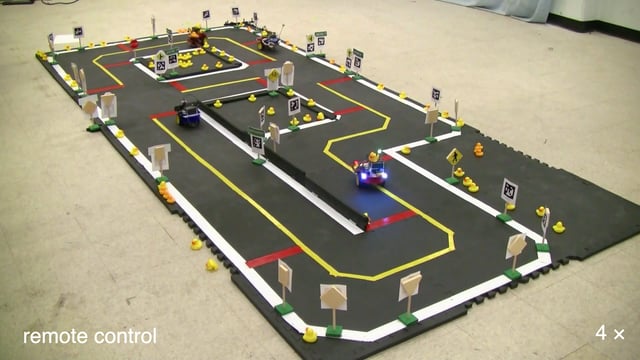

After the basic ROS for Autonomous Cars course, you should learn more advanced subjects like obstacles and traffic signals identification, road following, as well as coordination of vehicles in cross roads. For that purpose, our recommendation would be to use the Duckietown project at MIT. The project provides complete instructions to physically build a small size town, with lanes, traffic lights and traffic signals, to practice algorithms in the real world (even if at a small scale). It also provides instructions to build the autonomous cars that should populate the town. Cars are based on differential drives and a single camera for sensors. That is why they achieve a very low cost (around 100$ per each car).

Image by Duckietown project

Due to the low monetary requirements, and to the good experience it offers for testing real stuff, the Duckietown project is ideal to start practicing autonomous cars concepts like line following based on vision, detecting other cars, traffic signal-based behavior. Still, if your budget is below that cost, you can use a Gazebo simulation of the Duckietown, and still practice most of the content.

Step 5

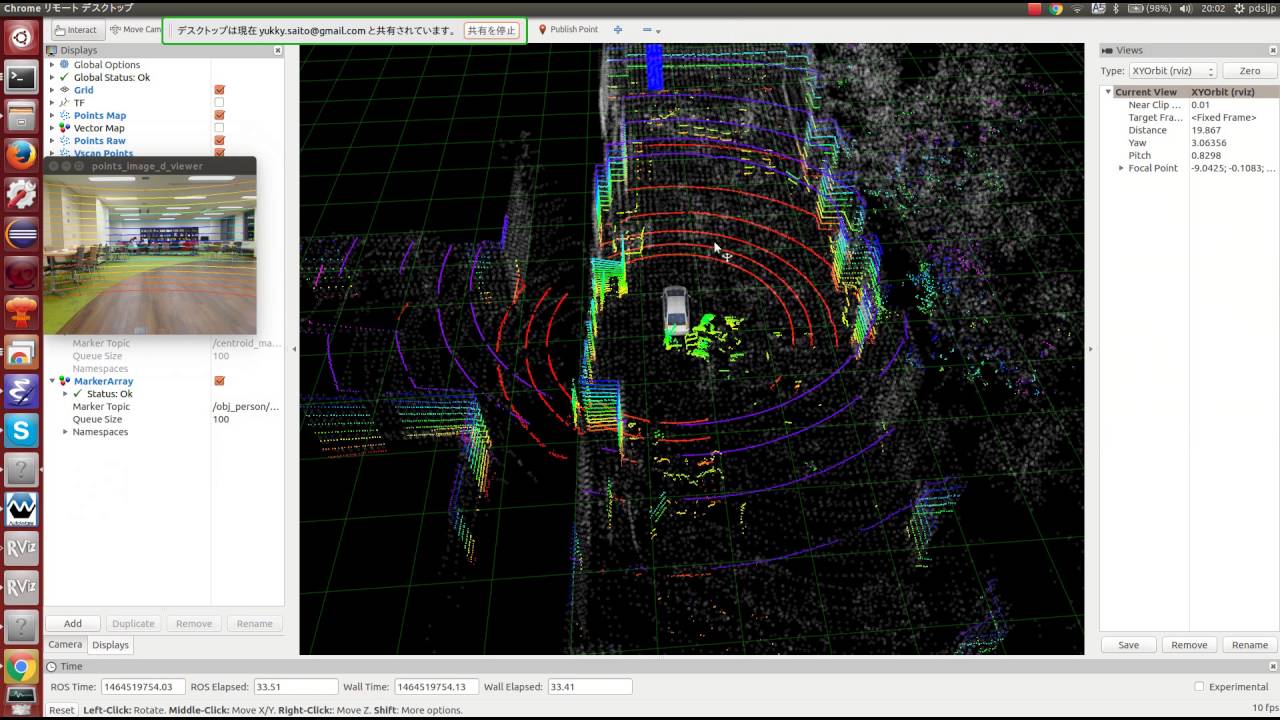

Then if you really want to go pro, you need to practice with real life data. For that purpose we propose you install and learn from the Autoware project. This project provides real data obtained from real cars on real streets, by means of ROS bags. ROS bags are logs containing data captured from sensors which can be used in ROS programs as if the programs were connected to the real car. By using those bags, you will be able to test algorithms as if you had an autonomous car to practice with (the only limitation is that the data is always the same and restricted to the situation that happened when it was recorded).

Image by the Autoware project

The Autoware project is an amazing huge project that, apart from the ROS bags, provides multiple state-of-the-art algorithms for localization, mapping, obstacles detection and identification using deep learning. It is a little bit complex and huge, but definitely worth studying for a deeper understanding of ROS with autonomous vehicles. I recommend you to watch the Autoware ROSCON2017 presentation for an overview of the system (will be available in October 2017).

Step 6

Final step would be to start implementing your own ROS algorithms for autonomous cars and testing them in different, realistic situations. Previous steps provided you with real-life situations, the bags were limited to the situations where they were recorded. Now it is time to test your algorithms in different situations. You can use already existing algorithms in a mix of all the steps above, but at some point, you will see that all those implementations lack some things required for your goals. You will have to start developing your own algorithms, and you will need lots of tests. For this purpose, one of the best options is to use a Gazebo simulation of an autonomous car as a testbed for your ROS algorithms. Recently, Open Robotics has released a simulation of cars for Gazebo 8 simulator.

Image by Open Robotics

That simulation based on ROS contains a Prius car model, together with 16 beam lidar on the roof, 8 ultrasonic sensors, 4 cameras, and 2 planar lidar, which you can use to practice and create your own self-driving car algorithms. By using that simulation, you will be able to put the car in as many different situations as you want, checking if your algorithm works on those situations, and repeating as many times as you want until it works.

Conclusion

Autonomous driving is an exciting subject with demand for experienced engineers increasing year after year. ROS is one of the best options to quickly jump into the subject. So learning ROS for self-driving vehicles is becoming an important skill for engineers. We have presented here a full path to learn ROS for autonomous vehicles while keeping the budget low. Now it is your turn to make the effort and learn. Money is not an excuse anymore. Go for it!

tags: Automotive