Robohub.org

Lifehand 2 prosthetic grips and senses like a real hand

Roboticists and doctors working in Switzerland and Italy have developed a bionic hand that provides sensory feedback in real time, giving an amputee back the ability to feel objects and adjust his grip much like someone with a “real” hand. Using surgically implanted electrodes (connected at one end to the nervous system and at the other to sensors) and an algorithm that converts the sensor signals, the team has produced a hand that sends the brain information detailed enough for the wearer to tell how hard an object in his grasp is.

In a paper published in Science Translational Medicine in Feb. 2014, the team from EPFL (Switzerland) and SSSA (Italy) headed by Prof. Micera of EPFL and NCCR-Robotics presented an entirely new type of prosthetic hand, Lifehand 2, that is capable of interfacing with the nervous system of the wearer in order to give the ability to grip and sense like a real hand – including being able to feel shape and hardness of an object. This life-like sensation of feeling is something that has never before been achieved in prosthetics.

The team combined new robotic techniques with the basic concepts of targeted muscle reinnervation (TMR), a new but increasingly sophisticated technique in which nerves are rerouted across the chest and can convey a sense of touch when stimulated with tactile traces. In a series of 700 tests, this allowed an amputee to feel as though his old hand was back.

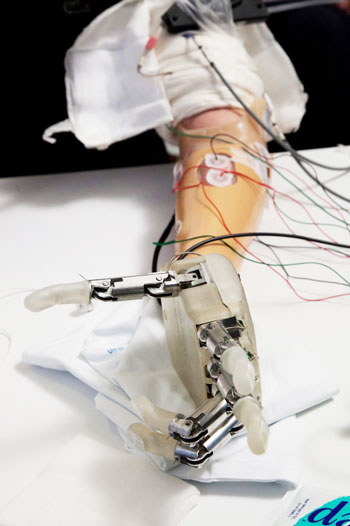

To function, the hand relies on a closed sensory feedback loop. First, transverse intrafascicular multichannel electrodes (TIMEs) are surgically implanted in the median and ulnar nerves of the arm, the nerves that serve the sensory fields of the palm and fingers. The electrodes are then connected to a number of sensors distributed across the prosthetic hand in locations that mimic those of the tendons in a real hand. Signals from the sensors are relayed to an external unit, where they are processed and passed back to the nerves in a form that lets the brain understand how much pressure is being exerted on the sensors, much as information is passed from a real hand to the brain.
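As a rough illustration of the processing step in this loop, the sketch below maps a sensor reading to a stimulation amplitude. It is not the team's algorithm: the linear mapping, the function name `sensor_to_stimulation`, and every numeric range are assumptions chosen only to show the idea of turning pressure into a graded nerve stimulus.

```python
def sensor_to_stimulation(reading, sensor_min=0.0, sensor_max=1.0,
                          amp_min_ua=20.0, amp_max_ua=200.0):
    """Map a sensor reading to a stimulation amplitude in microamps.

    All ranges are hypothetical placeholders, not values from the study.
    """
    if sensor_max <= sensor_min:
        raise ValueError("sensor_max must be greater than sensor_min")
    # At or below the minimum reading nothing is being touched: no stimulation.
    if reading <= sensor_min:
        return 0.0
    # Scale the reading into [0, 1] and cap it so the amplitude never
    # exceeds the assumed upper limit.
    fraction = min((reading - sensor_min) / (sensor_max - sensor_min), 1.0)
    return amp_min_ua + fraction * (amp_max_ua - amp_min_ua)


if __name__ == "__main__":
    for reading in (0.0, 0.25, 0.5, 1.0, 1.5):
        print(reading, sensor_to_stimulation(reading))
```

Capping the output reflects a general design concern in any stimulation system: a firmer grip should feel stronger, but the stimulus must stay within a fixed upper bound.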

Residual muscle signals recorded at five positions on the forearm are then used to decode the user's intentions, and the powered hand is driven into one of four different grasping motions.
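The decoding step can be pictured as a small classification problem: five muscle-signal channels in, one of four grasps out. The sketch below uses a nearest-centroid rule on per-channel RMS values. This is a stand-in technique chosen for brevity, not the decoder used in the study, and the grasp labels, centroid values, and function names are all hypothetical.

```python
import math


def rms(window):
    """Root-mean-square amplitude of one channel's sample window."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def features(windows):
    """Turn five sample windows (one per electrode position) into five RMS values."""
    return [rms(w) for w in windows]


def decode_grasp(feature_vec, centroids):
    """Return the grasp whose stored centroid is closest to the feature vector."""
    return min(centroids, key=lambda g: math.dist(feature_vec, centroids[g]))


# Hypothetical per-grasp centroids, as if learned in a calibration session.
CENTROIDS = {
    "grasp_a": [1.0, 0.0, 0.0, 0.0, 0.0],
    "grasp_b": [0.0, 1.0, 0.0, 0.0, 0.0],
    "grasp_c": [0.0, 0.0, 1.0, 0.0, 0.0],
    "grasp_d": [0.0, 0.0, 0.0, 1.0, 1.0],
}

if __name__ == "__main__":
    # Five short windows of made-up samples; channel 2 is the most active.
    windows = [[0.1, -0.1], [0.9, -0.9], [0.0, 0.0], [0.1, 0.1], [0.0, 0.0]]
    print(decode_grasp(features(windows), CENTROIDS))
```

In practice the centroids would come from recordings of the user attempting each grasp, and a real decoder would need to cope with noise and electrode drift, which this sketch ignores.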

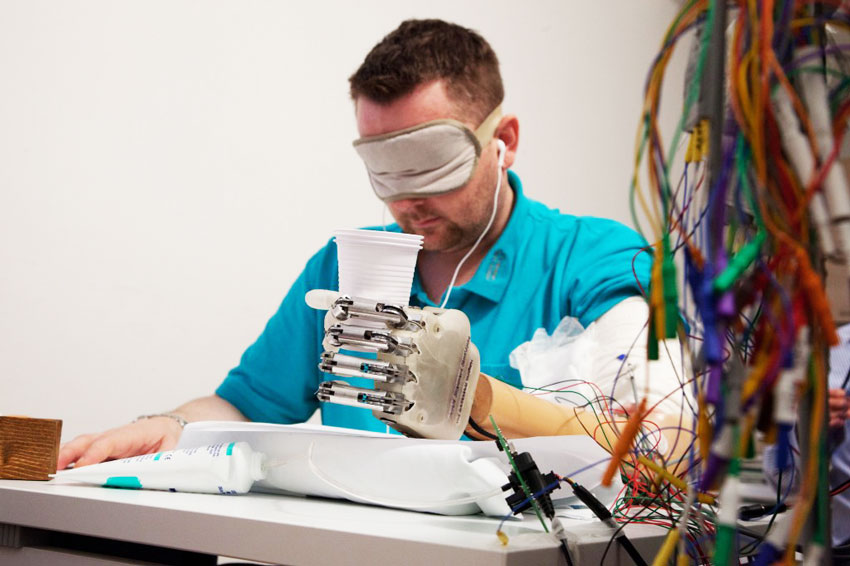

Results of the 700 tests performed during the study were positive. Even when deprived of sight and hearing, the subject was able to modify his grip according to what he was holding, so a soft object such as a sponge or an orange was naturally held more gently than a hard object such as a cricket ball. By the end of four weeks of testing, 93% accuracy was recorded for pressure control in the index finger, and the brain was found to assimilate data from multiple sensors in the palm of the hand automatically. The subject was able to distinguish the stiffness of an object within 3 seconds, a response comparable to that of a natural hand, and said: “When I held an object, I could feel if it was soft or hard, round or square.”

For many years medical scientists have used prosthetics to replace the lost hands of amputees. Over time, these hands have become more and more sophisticated, using materials that are lighter, more flexible and that look more and more realistic. Such prosthetics are considered invaluable by some amputees but do not have a high uptake within the community due to issues with use and comfort – if a prosthetic is heavy, uncomfortable and cannot move like a real hand it might not be practical for everyday life.

While prosthetics are not new, the very idea of a technique that lets sensors, motors and human nerves communicate so easily with each other in real time would have been unthinkable a few years ago. That it has now been developed into a method that lets the brain assimilate impulses from two different areas, so that the subject can feel the neurologically complex action of palm closure, is exceptional.

Another particularly encouraging aspect of the study was the level of success despite it being 8 years since the chosen subject’s hand was amputated. The team were concerned that because of the long timescales involved, the nerves would be too degraded for use. However, the tests indicated that this was not the case, thus opening up the technology to a wide range of potential users.

As with any other science or technology project, the work is still ongoing. Although the functionality of the hand has been demonstrated to be lifelike, the appearance of a hand made of plastics, iron, tendons and electrical circuits is not, so the team is working on creating a sensitive polymer skin to make the appearance of the hand more realistic and more usable in everyday life situations. In a parallel line of work, in order for the hand to be made portable the electronics required for the sensory feedback system must be miniaturized so that they can be implanted into the prosthetic.

