Robohub.org

Living with a prosthesis that learns: A case study in translational medicine

Picture the scene: one day you wake up in a hospital with no memory of what happened and discover, to your horror, that your left hand is no longer there. The doctors say you have been in a car accident and that, from that moment on, you are a “trans-radial mono-lateral amputated person.” After a slow and painful recovery from the wound, you start looking for solutions that can at least partially restore the daily-living functions you have lost, since you have quickly learned that 99% of what is around us was designed to be operated by hand. There must be a way, you say to yourself, in which robotics and rehabilitation medicine can help you.

At a friend’s suggestion, you venture into the nearest rehabilitation clinic, are enrolled in a free, state-supported program for people with upper-limb disabilities, and are fitted with a mechatronic hand/wrist prosthesis. The prosthesis connects seamlessly and unobtrusively to your body, does exactly what you want it to do whenever you want it to do it, and can happily be worn every morning. Well, not exactly: sometimes, especially at the beginning of the program, it will fail to, say, make a fist the way you wanted. In that case, you close your fist and your prosthesis learns in one shot what a fist should look like. After three months of practice, you have essentially forgotten it is a prosthesis. It feels like part of your body, and you are socially accepted exactly as you were before the accident.

And now, for the sad reality.

Every year, 1,900 new upper-limb amputations occur in Europe, keeping the population of people living with such a disability at around 90,000 [1]. What can assistive robotics, rehabilitation science and engineering do for these people? At the time of writing, very little.

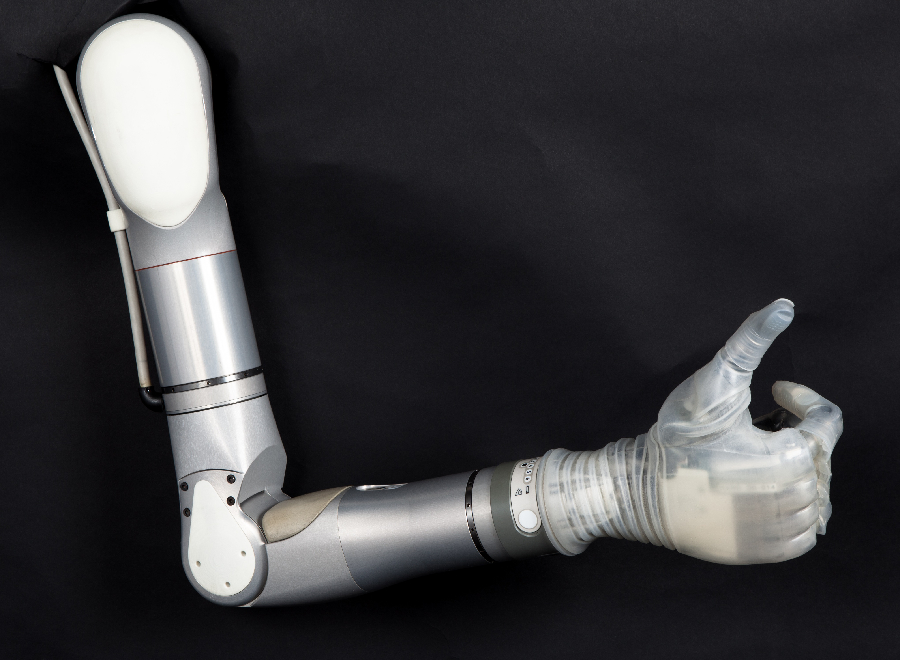

While multi-fingered hand prostheses appeared on the clinical market about eight years ago, there is still little hope of a control system that would let patients do the things I sketched above. The situation is even harder after a proximal amputation, for instance if you have lost the whole arm including the shoulder, not to mention bilateral amputations, in which both upper limbs are lost. Only the most advanced laboratories in the world, and indeed only those with access to very large funding schemes, can try to solve the problem. Currently, there is only one fully fledged motorized prosthetic arm, the DEKA arm, which has recently received FDA approval in the USA. What it can do in controlled conditions is spectacular; nevertheless, clinical practice is still far from this, mainly because amputees can hardly control such complex artefacts [2].

Industrial robot or motorbike?

At our institution, we have been concentrating for around ten years on exactly this issue: how to achieve reliable, natural and dexterous control of an upper-limb prosthesis. The human-machine interface involved must detect the subject’s “intent” to perform a certain action; it must do so reliably and stably; and it must convert this knowledge, in real time, into proper control commands that exploit all the mechatronic capabilities of the prosthesis.

The challenges are formidable. They include how to connect the prosthesis to the patient’s body, how to collect the required amount of information from it, how to ensure that the system does what the patient wants, and how to avoid disastrous errors by the control system. We have strived to contribute to these open issues by working with hospitals and clinics in an effort to understand the real needs of this specific population of patients.

The main bottleneck we have identified is that current prostheses work like industrial robots. They are calibrated on a specific subject at the beginning and are then supposed to work seamlessly for the rest of the patient’s life. Because the signals gathered from human subjects are so variable, this is essentially an impossible task.

We need a prosthetic artefact, including the socket, the sensors, the control electronics and the prosthesis itself, which connects to the patient more and more tightly day by day. This really is a two-way learning process: on the one hand, control systems based on machine learning can be used in an “incremental” fashion, periodically adapting to new situations [3]; on the other hand, our patients naturally learn how to use the prosthesis as time goes by, and the signals they produce drift and change over weeks and months [4].

It’s like learning to ride a new motorbike. You need to master the power of the engine, the stability of the steering and braking without skidding; you even need to exploit its weaknesses, and this can only happen as you ride it more and more, a little every day. After an initial training phase, it will take you exactly where you want to go, respecting your riding style. Novel situations can occur, in which case a new, small adaptation will happen. In the long run, it will feel like an extension of your body and will be ready to serve its purpose seamlessly, every single day of your life.

Incremental to interactive to interdisciplinary

We use incremental machine learning precisely to foster and exploit this “reciprocal adaptation” effect. Incremental machine learning methods continually update their own model of the world; in this case, the model is the predictive algorithm that turns bodily signals (denoting the subject’s intent) into control commands for the prosthesis. Whenever the subject deems that control has become unreliable, for instance because she is under stress and her muscle activation differs from what it was before, such a system just needs to be shown the signal pattern corresponding to the desired command in this particular novel situation. This new knowledge is then seamlessly incorporated into the old model and, from this point on, whenever the same or a reasonably similar situation occurs, control will be stable.
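To make the idea of “showing the system one new pattern” concrete, here is a minimal sketch of one way such an update can work: ridge regression whose solution is refreshed one sample at a time with a rank-one Sherman-Morrison update, optionally on top of random Fourier features so that the signal-to-command mapping is non-linear. This is an illustration under stated assumptions, not a reproduction of the controller in [3]. The class names, the regularization `lam`, the kernel width `sigma`, and the idea that inputs are EMG feature vectors and outputs are finger/wrist activation levels are all hypothetical choices for the example.

```python
import numpy as np


class RandomFourierFeatures:
    """Hypothetical non-linear feature map approximating a Gaussian (RBF) kernel."""

    def __init__(self, n_inputs, n_features, sigma=1.0, seed=0):
        rng = np.random.default_rng(seed)
        # Random projection directions drawn from N(0, 1/sigma^2).
        self.omega = rng.normal(0.0, 1.0 / sigma, size=(n_features, n_inputs))
        # Random phases drawn uniformly from [0, 2*pi).
        self.phase = rng.uniform(0.0, 2.0 * np.pi, size=n_features)
        self.scale = np.sqrt(2.0 / n_features)

    def __call__(self, x):
        # Maps a single input vector of length n_inputs to n_features values.
        x = np.asarray(x, dtype=float)
        return self.scale * np.cos(self.omega @ x + self.phase)


class IncrementalRidge:
    """Ridge regression updated one (signal, command) pair at a time."""

    def __init__(self, n_features, n_outputs, lam=1.0):
        # P tracks (X^T X + lam*I)^-1; before any data it is just I/lam.
        self.P = np.eye(n_features) / lam
        # B tracks X^T Y, the correlation between features and commands.
        self.B = np.zeros((n_features, n_outputs))

    def update(self, x, y):
        """Fold in one new sample with a rank-one Sherman-Morrison update."""
        x = np.asarray(x, dtype=float).reshape(-1)
        y = np.asarray(y, dtype=float).reshape(-1)
        Px = self.P @ x
        # P is symmetric, so P x x^T P equals the outer product of Px with itself.
        self.P -= np.outer(Px, Px) / (1.0 + x @ Px)
        self.B += np.outer(x, y)

    def weights(self):
        # Same solution batch ridge regression would give on all data seen so far.
        return self.P @ self.B

    def predict(self, x):
        return np.asarray(x, dtype=float) @ self.weights()
```

In this sketch, the model would first be fitted on an initial calibration session; afterwards, whenever the wearer judges control to be unreliable, the features of the signal she produces are paired with the intended command and passed to `update`. Each update costs O(D²) for D features, however much data has already been seen, and the old knowledge stays in `P` and `B`, so nothing has to be retrained from scratch. After such a correction, the prediction error on that very pattern always shrinks, which is the one-shot behaviour the scenario at the start of the article relies on.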

This characteristic may seem marginal, but it is crucial. There is an essentially unlimited variety of situations in which the subject might want to, for instance, steadily hold a power grasp. Imagine an afternoon of shopping in a mall: the shopping bag must be held at all times while our patient walks, stands and squats; it must be reliably released when she decides to sit down and rest for a while; and the grasp must remain stable under all possible wrist, elbow and shoulder rotations and displacements. We claim that no sensible calibration strategy, applied once at the beginning of the rehabilitation program, can enable the control system to anticipate all these situations. Rather, it seems more natural to us to let the subject “teach” the prosthesis whenever she feels it is required, even to learn completely novel actions [5].

Incremental learning of the control system leads to interaction with the subject, which we consider the practical way to put the patient at the center of the therapy. Moreover, no quick test in laboratory-controlled conditions can ever be enough to check that such an approach works. We need to devise specific experimental protocols that take into account the particularities of the subject/socket/control system/prosthesis complex, and we need to build suitable setups in our labs that enable our pioneer amputees to test these systems in daily-life conditions. This means that we engineers, mathematicians and roboticists must transfer our expertise to the clinics, learn how to interact with end users and physicians, and test mechatronic artefacts in the field from the very start [6].

The long-term perspective

With the advent of sockets made of adaptive biocompatible materials and embedding multimodal sensors, incremental learning could be the key to improving the acceptance of mechatronic prostheses among amputees. Current abandonment rates are astonishingly high, and nowhere in the world is there an established rehabilitation program like the one we described at the beginning of this article.

In the medium and long term, we shall be able to sell prostheses that work like a motorbike: accompanied by a short instruction manual, they will never cease to adapt to the patient and will help them achieve optimal synergy, with minimal supervision by medical advisors, except perhaps for a routine check every now and then. Only in this way will we be able to justify the huge effort that the scientific community puts into this field, and actually improve the lives of the large population of disabled persons we target.

For more information see the original research papers:

Upper-Limb Prosthetic Myocontrol: Two Recommendations

http://journal.frontiersin.org/article/10.3389/fnins.2015.00496/full

Stable myoelectric control of a hand prosthesis using non-linear incremental learning

http://journal.frontiersin.org/article/10.3389/fnbot.2014.00008/full

References

[1] Micera, S., Carpaneto, J., and Raspopovic, S., Control of hand prostheses using peripheral information. IEEE Reviews in Biomedical Engineering, 3:48–68, 2010.

[2] Jiang, N., Dosen, S., Müller, K.-R., and Farina, D., Myoelectric control of artificial limbs: is there the need for a change of focus? IEEE Signal Processing Magazine, 29(5):149–152, 2012.

[3] Gijsberts, A., Bohra, R., Sierra González, D., Werner, A., Nowak, M., Caputo, B., Roa, M., and Castellini, C., Stable myoelectric control of a hand prosthesis using non-linear incremental learning. Frontiers in Neurorobotics, 8(8), 2014.

[4] Powell, M. A., and Thakor, N. V., A training strategy for learning pattern recognition control for myoelectric prostheses. Journal of Prosthetics and Orthotics, 25(1):30–41, 2013.

[5] Castellini, C., Incremental learning of muscle synergies: from calibrating a prosthesis to interacting with it. In Moscatelli, A., and Bianchi, M. (eds.), Human and Robot Hands: Sensorimotor Synergies to Bridge the Gap between Neuroscience and Robotics. Springer Series on Touch and Haptic Systems. Springer Netherlands, 2015.

[6] Castellini, C., Bongers, R. M., Nowak, M., and van der Sluis, C. K., Upper-limb prosthetic myocontrol: two recommendations. Frontiers in Neuroscience, 9(496), 2015.
