Robohub.org

Robot selfies, and the road to self-recognition

People take selfies with smartphones and digital cameras (or even with flying robots) and share them on social media, blogs, microblogs and image platforms. Selfies may just be a trend, but they say a lot about human narcissism and the zeitgeist of the media age.

But could selfies be used more productively? What does it mean for a robot to take a selfie? What good would that be? And is it even all that new?

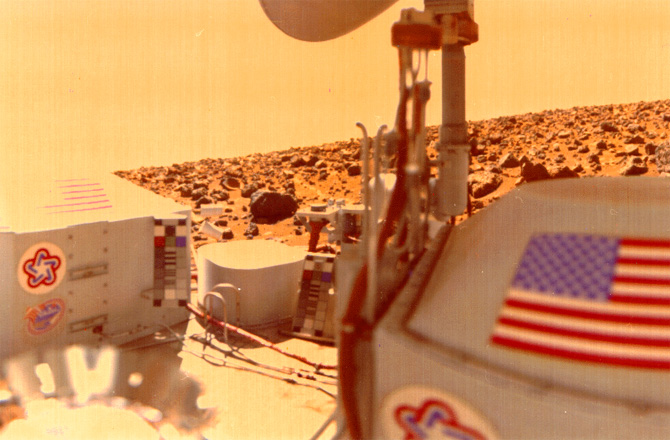

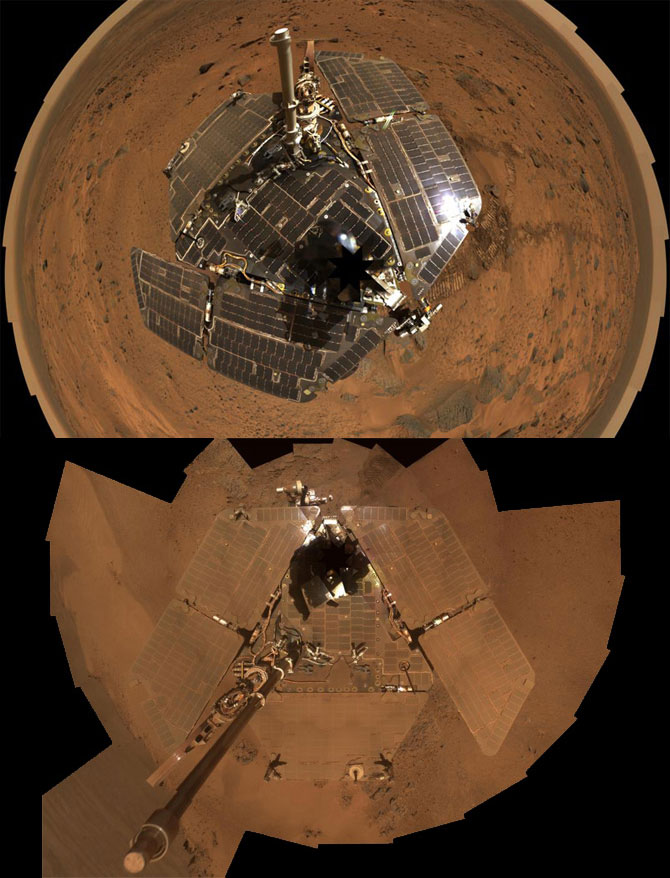

Robot selfies in space

Space robots have been known to take selfies for some time. They are far away and alone, so what could be more important than taking a picture of oneself and sending it to the carbon units back home? The web abounds with “space robot selfies” and “rover selfies”, such as the Top 10 Space Robot Selfies from Discovery News.

Space robot selfies are usually meant for engineers who want to check the status of instruments with their own eyes rather than rely on telemetry alone. Space scientists also welcome views of the ground, the mountains, and space: reflections on a robot’s surface can reveal the lighting conditions and the atmosphere, while imprints can reveal the characteristics of the ground. They could do without the robots’ vanity, though, even if having the robot in the frame makes it easier to judge scale.

Just like Transformers

Humans are transformative beings by nature: we grow up and form ourselves, we wrinkle and decompose. The selfies we boast about today will one day show us how old we have become and how much we have changed. Transformation will likely become a key trait of robots, too. Software robots (bots) are already adept at it: the avatar is a cover donned by a god to explore the world without drawing attention to his mission, and the computer god can choose any cover he wishes. We will likely expect hardware robots to master transformation just as easily; think only of the popular toy characters marketed through cartoons and movies.

Substantiating this thesis would require looking far ahead. 3D printers would be needed to produce parts that can be combined in different ways. Robots would be needed to assemble, disassemble, or reassemble other robots. The future would have to be pictured as a permanently changing environment to which modern machines adjust, either on their own or with the help of their own kind.

In a world where a robot may have to be small one day and tall the next, fast one hour and slow the next, or ugly one second and pretty the next, a selfie will allow a robot to remember who it is, whom it encountered in this appearance and what it did under this cover. Selfies will show a robot how old it has become and how much it has changed, and they will help it maintain its identity.

Facebook for robots

Just when a trend seems to be wearing out, it can be transferred to another context where it causes a new stir.

Could selfies provide relevant information not just to engineers and scientists, but to the robots, too? What if they landed on a platform similar to Facebook, where the robots could network with each other, and share data and functions? Could they contribute to robot development and self-learning?

This is not far-fetched: cloud robotics research platforms like RoboEarth and commercial services like MyRobots.com are already creating new ways for robots to exchange information and learn from the experience of other robots. One day robot selfies could be part of the information they share, perhaps allowing them to gain new knowledge about their own state and their immediate environment.

On the road to self-recognition

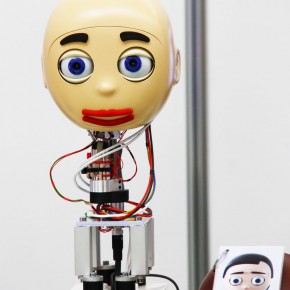

The field of sociable robotics investigates how people and robots interact with each other, and it is becoming increasingly important as robots enter our homes and workplaces. What if, by taking a selfie, a robot could interpret its own gestures, and then reflect on and optimize its behavior? Or if it could study its selfie and learn to make its smile more credible? Could it gain “self-awareness” by recognizing its own reflection in the mirror?

A look in the mirror, one might object, should be enough for the standard robot, which can freeze the perceived reflection and evaluate it for as long as it wants. Yet with a selfie (taken by means of an arm or a mirror), it could do more than that. It could show other machines (as well as humans) what it looks like. It could draw attention to itself and advertise itself. It could make an impression and obtain feedback.

Once an android pulls a duckface and takes a selfie, any roboticist will know the breakthrough has been made.

If the robot selfie proliferates, it may be that we humans will get to know hundreds or thousands of artificial beings. We will look into their faces – provided they have faces – and we will learn something about their self-reflection.

And we might even find these images more exciting than the selfies taken by humans.
