Robohub.org

Virtual race: Competing in Brain Computer Interface at Cybathlon

This week the world’s first Cybathlon will take place in Zurich, Switzerland. Cybathlon is the brainchild of NCCR Robotics co-director and ETH Zurich professor Robert Riener. It is designed to facilitate discussion between academics, industry and end users of assistive aids, to promote the position of people with disabilities within society, and to push the development of assistive technology towards solutions suitable for all-day, everyday use.

In our privileged position as presenting sponsor, we are also proud to have NCCR Robotics represented by two teams: the EPFL Brain Tweakers in the Brain Computer Interface (BCI) race, and LeMano in the Powered Arm Prosthesis Race.

The BCI race is a virtual race in which pilots use BCIs to control an avatar running through a computer game. The pilots may use only their thoughts; no other commands (e.g. head movements) affect the avatar’s actions. The Brain Tweakers are a team of researchers representing NCCR Robotics and the Chair in Brain-Machine Interface (CNBI) laboratory, led by Prof. José del R. Millán at the Swiss Federal Institute of Technology in Lausanne (EPFL), so they jumped at the chance to take part in a race that plays to their experience and expertise. Indeed, their research focuses on using human brain signals directly to control devices and interact with the user’s surroundings.

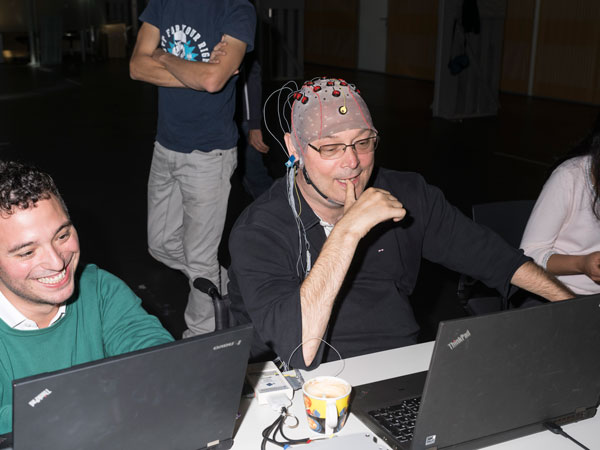

At Cybathlon, the Brain Tweakers will race with two pilots, 30-year-old Numa Poujouly and 48-year-old Eric Anselmo, both of whom have been practicing over the summer in weekly and bi-weekly two-hour sessions. Their BCI system translates brain patterns, captured in real time by electroencephalography (EEG, a completely safe, non-invasive and minimally obtrusive approach), into game commands.

This is made possible by a so-called Motor Imagery (MI) BCI. EEG signals (i.e., electrical activity on the user’s scalp, which occurs naturally in everyone as the brain works) are monitored through 16 electrodes placed at specific locations on the pilot’s head. Distinct spatio-spectral cortical patterns (patterns of brainwaves) are known to emerge and persist when someone either makes or imagines making a movement (e.g., of the hands, arms and/or feet). It is the fact that these patterns appear when a person merely imagines a movement that makes this technology usable by, and attractive to, people with severe disabilities. These cortical patterns are specific not only to the limb being imagined but also, to a fair degree, to the individual user. Therefore, the first objective of the team’s training sessions this summer has been to identify the MI tasks that are “optimal” (i.e., most easily distinguishable) for each of the two pilots. These tasks then feed into a set of processing modules, consisting of signal processing and machine learning algorithms, that detect online, in real time, which type of movement is being imagined. The detected movement is then translated directly into a predetermined command for the pilot’s avatar in the game (speed-up, roll, slide).
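
To make the decoding chain above a little more concrete, here is a minimal Python sketch of an MI-BCI loop: log band-power features from 16 EEG channels, a classifier calibrated on labelled windows, and a lookup from the detected task to a game command. It is not the Brain Tweakers’ implementation. The 128 Hz sampling rate, the mu (8-12 Hz) and beta (13-30 Hz) bands, the log band-power features, the nearest-centroid classifier (a simple stand-in for the unspecified machine learning algorithms), the task names and the task-to-command mapping are all illustrative assumptions, and the EEG windows are synthetic.

```python
import math
import random

# --- Illustrative assumptions (not taken from the article) ----------------
FS = 128                     # assumed sampling rate in Hz
N_CHANNELS = 16              # the article mentions 16 electrodes
BANDS = [(8, 12), (13, 30)]  # assumed mu and beta bands in Hz
# Hypothetical MI tasks and their mapping onto the three game commands.
COMMANDS = {"left_hand": "roll", "right_hand": "speed-up", "both_feet": "slide"}


def band_power(signal, lo, hi, fs=FS):
    """Average spectral power of one channel at integer frequencies lo..hi Hz.

    Uses a direct DFT so the sketch needs no third-party libraries;
    a real system would use an FFT or Welch's method instead.
    """
    n = len(signal)
    total = 0.0
    for f in range(lo, hi + 1):
        re = im = 0.0
        for t, x in enumerate(signal):
            angle = 2 * math.pi * f * t / fs
            re += x * math.cos(angle)
            im -= x * math.sin(angle)
        total += (re * re + im * im) / n
    return total / (hi - lo + 1)


def features(window):
    """Flatten a multichannel window into log band powers, one per (channel, band)."""
    return [math.log(band_power(ch, lo, hi) + 1e-12)
            for ch in window for lo, hi in BANDS]


class NearestCentroid:
    """Stand-in classifier: pick the class whose mean feature vector is closest."""

    def fit(self, X, y):
        self.centroids = {}
        for label in set(y):
            rows = [x for x, lab in zip(X, y) if lab == label]
            self.centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]
        return self

    def predict(self, x):
        def sq_dist(label):
            return sum((a - b) ** 2 for a, b in zip(x, self.centroids[label]))
        return min(self.centroids, key=sq_dist)


def synthetic_window(suppressed, rng):
    """Fake 1 s EEG window: a 10 Hz rhythm plus unit noise on every channel,
    with the rhythm attenuated on the `suppressed` channels (a crude stand-in
    for the mu-band desynchronization seen during motor imagery)."""
    window = []
    for ch in range(N_CHANNELS):
        amp = 1.0 if ch in suppressed else 5.0
        phase = rng.uniform(0, 2 * math.pi)
        window.append([amp * math.sin(2 * math.pi * 10 * t / FS + phase) + rng.gauss(0, 1)
                       for t in range(FS)])
    return window


# Calibration: collect a few labelled windows per task (synthetic here) and fit.
rng = random.Random(0)
PATTERNS = {"left_hand": {0, 1, 2}, "right_hand": {5, 6, 7}, "both_feet": {10, 11}}
X, y = [], []
for task, channels in PATTERNS.items():
    for _ in range(4):
        X.append(features(synthetic_window(channels, rng)))
        y.append(task)
clf = NearestCentroid().fit(X, y)

# Online step: classify a fresh window and translate it into a game command.
detected = clf.predict(features(synthetic_window(PATTERNS["right_hand"], rng)))
print(detected, "->", COMMANDS[detected])
```

Because the cortical patterns are user-specific, the calibration step would in practice be repeated for each pilot on their own recordings; the nearest-centroid rule is used here only because it keeps the sketch short and dependency-free.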

While BCI technology is not in and of itself very new, what the Brain Tweakers hope will give them the winning edge is their use of machine learning techniques. For the Brain Tweakers, the Cybathlon provides an excellent opportunity to rapidly advance and test their research outcomes in real-world conditions, to exchange expertise and foster collaborations with other groups, and to push BCI technology out of the lab and into end users’ homes, where it can provide practical, daily service.

Attend the Cybathlon in person or watch it live on the Cybathlon website to cheer on the Brain Tweakers.