Robohub.org

Pure autonomy: Google’s new purpose-built self-driving car

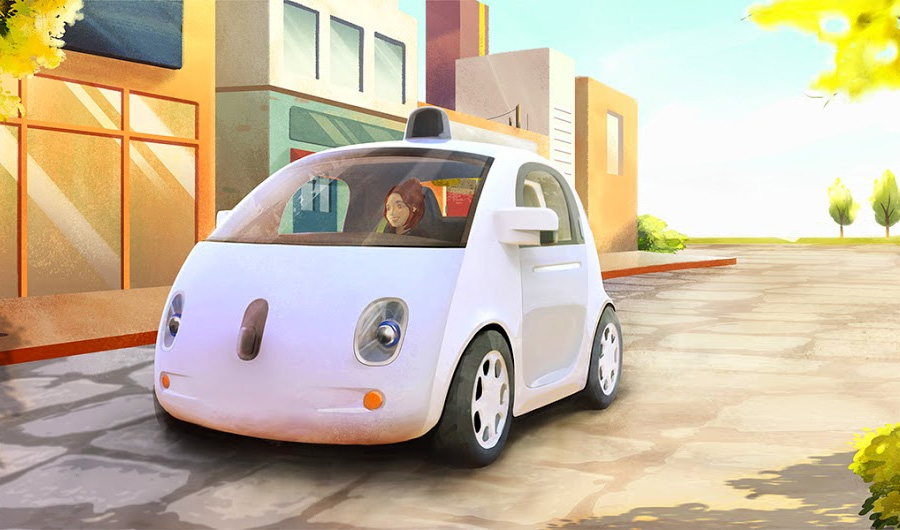

Google has completed a major step in its long-running self-driving car project by presenting its first purpose-built autonomous car, which is designed from scratch for its role rather than adapted from a conventional Toyota.

The as-yet-unnamed car is very small (it looks smaller than a Smart) and can accommodate two people and some luggage. It’s probably electric, and its maximum speed is limited to 25 mph (~40 km/h). Its most striking characteristic is that it has no controls at all: no steering wheel, no accelerator and no brake pedal. You can ride in it strictly as a passenger, which is probably a strange feeling but, according to Google’s video, not an entirely unpleasant one.

This is a purely experimental project in every respect, but Google plans to build about a hundred prototypes, so it looks like a very serious effort. The car is equipped with multiple sensors: a big LIDAR on its roof is probably doing most of the work, but there are also sensors in the front, in the back and where the side-view mirrors would be on a regular car. Given Google’s previous experience with self-driving cars, one can expect very good performance in real environments; other Google self-driving cars have completed hundreds of thousands of miles with no major incidents.

The car itself may look like a toy, but it is cleverly and purposefully designed to be as cute as possible in order to inspire trust and reduce fear of its autonomous status. Small cars in general may be more vulnerable in a crash with a heavier vehicle, but it has already been shown that clever engineering can deliver passive safety with almost no compromises, and most small cars from established manufacturers achieve very high scores in crash tests. The main chassis is made from robust box sections of (probably) aluminum tubing, and there is also a tubular roll cage above. Even the suspension wishbones look heavily over-engineered for a 25 mph city car. A substantial crash box is placed in the front, and above it the whole front panel is made of foam, so the car is designed to be safe not only for its passengers but also for pedestrians. Of course, at this stage there are no independent crash tests to verify its performance.

As mentioned above, the most striking aspect of the car is its lack of any kind of manual control. This is a very clever move by Google, which aims to overcome the legal and ethical problems of who should be able to drive or control a self-driving car.

If you completely eliminate any kind of input from the occupants (apart from the destination selection), then anyone on board is defined strictly as a passenger, and a question like “should we allow a child to ‘drive’ an autonomous car” is transformed into “should we allow a child to be transported by an autonomous car”. That question is probably still not very easy to answer, but it is certainly easier than the former one.

Google will launch a small pilot program in California in the next couple of years. The prototypes released on public roads will have manual controls for obvious legal reasons, but the goal of this project is to learn and to develop the technology and know-how. The full post is available on Google’s official blog.

It’s worth mentioning that similar concepts and ideas (but not functional prototypes) have been presented over the years. The closest concept is ‘UC’ by the pioneering design firm Rinspeed, presented four years ago at the Geneva Motor Show. It was also a two-seater (loosely based on a Fiat 500), but its main feature was the interior, which, like that of Google’s car, had no manual controls.
