Robohub.org

Towards independence: A shared control BCI telepresence robot

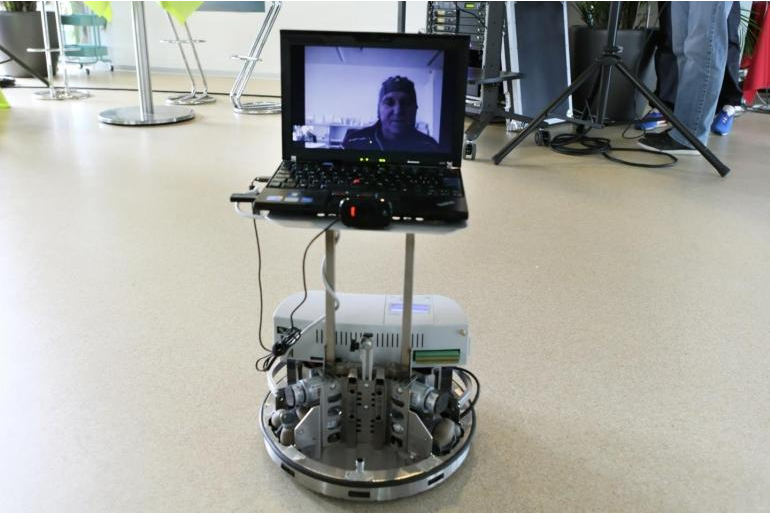

Telepresence robots give the disabled a sense of independence (Photo: Alain Herzog, EPFL)

For those with extreme mobility problems, such as paralysis following spinal cord injury or neurological disease, telepresence can greatly help to offset social isolation. However, steering a mobile telepresence device around obstacles and through doorways can be difficult when fine motor skills have been compromised. Researchers from CNBI, EPFL and NCCR Robotics this week published a cunning solution that uses brain-computer interfaces (BCIs) to let patients share control with the robot, making it far easier to navigate.

The idea is for patients to use BCIs to remotely control a mobile telepresence robot, through which they can move around and communicate with friends over a bidirectional audio/video connection.

The study tested nine end users with motor disabilities and 10 healthy participants using a BCI and a telepresence robot. The aim was to evaluate both the BCI configuration and the robot's shared control when the two are used together.

The system was tested using a non-invasive BCI: a cap placed on the head recorded brain activity over the sensorimotor cortex through 16 electroencephalogram (EEG) channels, with the link to the robot running over Skype. Healthy participants, who were at home, were asked to remotely manoeuvre the robot in the CNBI lab along a set path with obstacles (such as doorways and tables) under four conditions: BCI with shared control, BCI without shared control, manual with shared control, and manual without shared control. End users were tested under the two BCI conditions only (BCI with and without shared control).

https://youtu.be/I1KvtNhv9Yc

In the shared control condition, the robot uses its sensors to avoid obstacles without instruction from the driver. This has two advantages:

Firstly, inaccuracies in the system are reduced. For example, when a participant instructs the robot to turn left, the command could mean either a hard turn or a gentle one, and the system cannot tell which was intended. With shared control, a user who wants to pass through a doorway on the left simply instructs the robot to turn in that direction, and the robot uses its sensors to avoid the doorframe and travel safely through it.

The second advantage is that the user needs to communicate less information, which reduces their cognitive workload. With shared control, participants completed tasks in less time and with fewer commands.
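The article does not give the controller's equations, so the following is only a rough illustration of the idea: a coarse BCI command sets a target direction, and nearby obstacles nudge the heading away from them. It uses a simple potential-field-style rule, which is a swapped-in technique and not the method used in the study. The command set, the 45-degree turn, the `Reading` sensor model, `safe_dist` and `gain` are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Optional

# Hypothetical discrete BCI commands mapped to a requested heading change
# (radians, positive = left). The 45-degree turn is an illustrative assumption.
TURN_RAD = math.radians(45)
COMMAND_HEADING = {"left": TURN_RAD, "right": -TURN_RAD, None: 0.0}


@dataclass
class Reading:
    """One range-sensor reading (hypothetical sensor model)."""
    angle: float     # bearing relative to the robot's current heading (rad, left positive)
    distance: float  # range to the obstacle (m)


def shared_control_heading(command: Optional[str],
                           readings: Iterable[Reading],
                           safe_dist: float = 0.8,
                           gain: float = 1.0) -> float:
    """Blend the user's coarse intent with reactive obstacle avoidance.

    The command sets the target heading; every obstacle closer than safe_dist
    pushes the heading away from its side, more strongly the closer it is and
    the nearer its bearing lies to the target direction.
    """
    target = COMMAND_HEADING[command]
    heading = target
    for r in readings:
        if r.distance >= safe_dist:
            continue  # far obstacles do not influence steering
        # 0 at safe_dist, rising linearly to `gain` at contact.
        strength = gain * (safe_dist - r.distance) / safe_dist
        # Obstacles near the intended direction matter most.
        weight = math.exp(-abs(r.angle - target))
        # Obstacle on the left (angle >= 0) pushes right, and vice versa.
        heading -= strength * weight * math.copysign(1.0, r.angle)
    # Limit the turn to +/- 90 degrees per step.
    return max(-math.pi / 2, min(math.pi / 2, heading))


# A single "left" command with a doorframe close on the left: the robot still
# turns left, but less sharply than the raw 45-degree request.
doorframe = [Reading(angle=math.radians(60), distance=0.3)]
print(round(math.degrees(shared_control_heading("left", doorframe)), 1))
```

Only one coarse command is needed here; the avoidance term supplies the fine steering around the doorframe, which mirrors the article's point about fewer commands and lower workload. A real controller would also regulate speed and re-run this step continuously as new sensor readings arrive.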

All 19 participants were able to pilot the robot after training, and there was no discernible difference in ability between disabled and able-bodied users. “Each of the nine subjects with disabilities managed to remotely control the robot with ease after less than 10 days of training,” said Prof. José del R. Millán, who was in charge of the study.

Where possible, patients were also allowed to control the robot through small residual movements, such as the head leans someone might make while playing a video game, or pressing a button with their head. Remarkably, these movements proved no more effective for controlling the robot than the BCI alone.

Reference:

R. Leeb, L. Tonin, M. Rohm, L. Desideri, T. Carlson and J. Millán, “Towards Independence: A BCI Telepresence Robot for People with Severe Motor Disabilities,” Proceedings of the IEEE, vol. 103, no. 6, pp. 969-982, Jun 2015.
