Robohub.org

Teaching a brain-controlled robotic prosthetic to learn from its mistakes

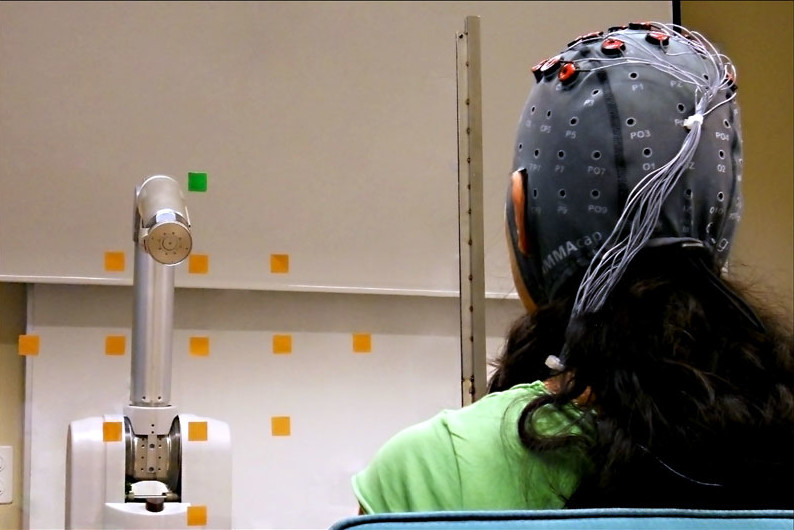

Using a BCI, the robot was able to find targets that the person could see but the robot could not (Photo: Iturrate et al., 2015).

Brain-Machine Interfaces (BMIs), in which brain waves captured by electrodes on the skin are used to control external devices such as a robotic prosthetic, are a promising tool for helping people who have lost motor control due to injury or illness. However, learning to operate a BMI can be very time-consuming. In a paper published in Nature Scientific Reports, a group from CNBI, EPFL and NCCR Robotics show how their new feedback system can speed up the training process by detecting error signals from the brain and adapting accordingly.

One barrier to the everyday use of BMIs by people with disabilities is the amount of time required to train users, who must learn to modulate their thought processes before their brain signals are clear enough to control an external machine. For example, to move a robotic prosthetic arm, a person must actively think about moving their arm, a thought process that uses significantly more brainpower than the subconscious thought required to move a natural arm. Furthermore, even with extensive training, users are often unable to perform complex movements.

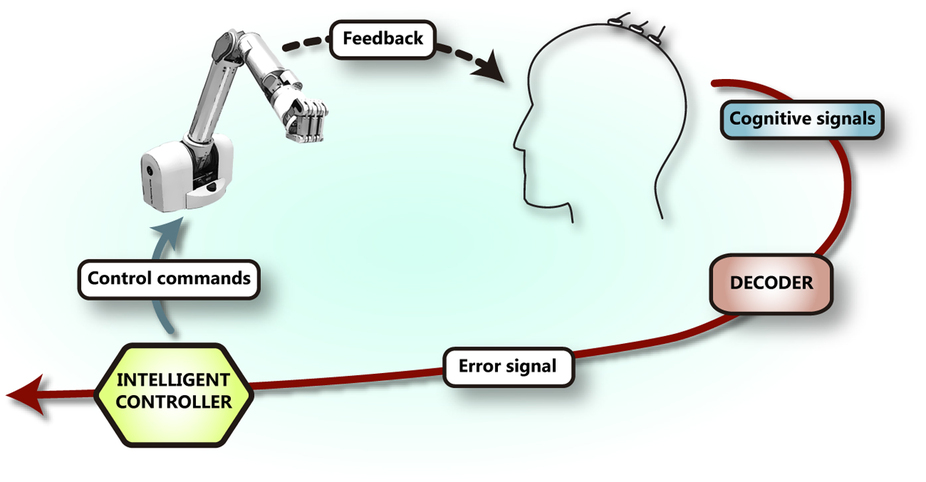

It has been observed, however, that the brain emits very different waves when it experiences success at controlling a BMI than when it experiences failure. With this in mind, the research team developed a new feedback system that records error signals from the brain (called ‘error-related potentials’, or ErrPs) and uses these to evaluate whether or not the correct movement has been achieved. The system then adapts the movement until it finds the correct one, becoming more accurate the longer it is in use.
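To make the idea concrete, here is a toy sketch of an error-driven feedback loop. It is not the method from the paper: it assumes a one-dimensional track, a simulated ErrP decoder with an assumed accuracy of 80%, and a simple Bayesian update over candidate targets. All names (`is_error`, `decode_errp`, `update_belief`, `find_target`) and parameter values are hypothetical and chosen for illustration only.

```python
import math
import random


def is_error(position, move, target):
    """A move is an error if it fails to bring the device closer to the target."""
    return abs(position + move - target) >= abs(position - target)


def decode_errp(true_error, accuracy, rng):
    """Stand-in for an ErrP decoder: reports the true label with probability `accuracy`."""
    return true_error if rng.random() < accuracy else not true_error


def update_belief(log_belief, position, move, errp_detected, assumed_accuracy):
    """Raise the score of every candidate target that explains the decoded signal.

    `assumed_accuracy` must lie strictly between 0 and 1 (assumption of this sketch).
    """
    for candidate in range(len(log_belief)):
        agrees = is_error(position, move, candidate) == errp_detected
        log_belief[candidate] += math.log(
            assumed_accuracy if agrees else 1.0 - assumed_accuracy
        )


def find_target(target, n_positions=9, decoder_accuracy=0.8,
                assumed_accuracy=0.8, n_steps=60, seed=0):
    """Return the position the device believes is the target after n_steps moves."""
    rng = random.Random(seed)
    log_belief = [0.0] * n_positions      # uniform prior over candidate targets
    position = n_positions // 2
    probe = 1                             # direction used to probe around the guess
    for _ in range(n_steps):
        guess = max(range(n_positions), key=log_belief.__getitem__)
        if guess != position:
            move = 1 if guess > position else -1   # head for the current best guess
        else:
            move, probe = probe, -probe            # already there: test a neighbour
        if not 0 <= position + move < n_positions:
            move = -move                           # stay on the track
        true_error = is_error(position, move, target)   # what the user perceives
        detected = decode_errp(true_error, decoder_accuracy, rng)
        update_belief(log_belief, position, move, detected, assumed_accuracy)
        position += move
    return max(range(n_positions), key=log_belief.__getitem__)


if __name__ == "__main__":
    print(find_target(target=6, decoder_accuracy=1.0))  # idealised, noise-free decoder
    print(find_target(target=6))                        # noisy decoder: estimate may be wrong
```

The device never sees the target. It only learns whether each move was judged an error, and it accumulates that evidence over time, so in this sketch the estimate tends to become more reliable the longer the loop runs.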

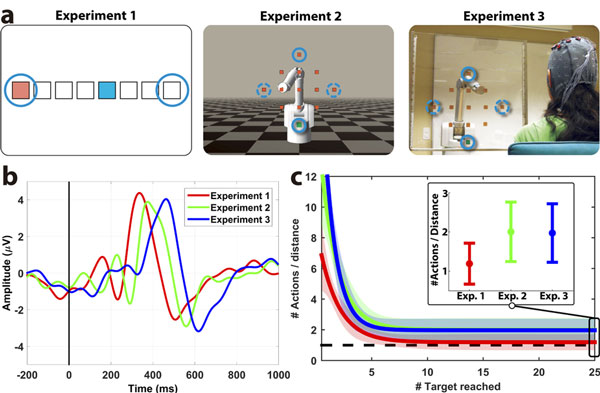

To identify the ErrP, twelve subjects were asked to watch a machine perform 350 separate movements, with the machine programmed to make the wrong movement in 20% of cases. This step took 25 minutes on average. After this initial training stage, each subject performed three experiments in which they attempted to locate a specific target using the robotic arm. As expected, the time taken to locate a target decreased as each experiment continued.
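As a rough illustration of the numbers in this protocol, the sketch below builds a shuffled schedule of 350 movements in which 20% are deliberately wrong. The function name `calibration_schedule` is hypothetical, and fixing the error count at exactly 20% (rather than drawing each trial at random) is an assumption; the article does not say how the erroneous movements were distributed.

```python
import random


def calibration_schedule(n_trials=350, error_rate=0.20, seed=0):
    """Return a shuffled list of booleans; True marks a deliberately wrong movement."""
    n_errors = round(n_trials * error_rate)
    labels = [True] * n_errors + [False] * (n_trials - n_errors)
    random.Random(seed).shuffle(labels)
    return labels


schedule = calibration_schedule()
print(sum(schedule), "erroneous movements out of", len(schedule))  # 70 out of 350
print(f"{25 * 60 / len(schedule):.1f} s per movement on average")   # 4.3 s
```

Under these assumptions, each subject would see about 70 erroneous movements, at an average pace of roughly one movement every 4.3 seconds over the 25-minute session.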

Three experiments showed that a robot improved its ability to find the position of a fixed point using error-related brain activity. (Iturrate et al. 2015)

https://youtu.be/jAtcVlTqxeA

This new approach has clear applications in neuroprosthetics, particularly for people with degenerative neurological conditions whose requirements change over time. The system also has the potential to adapt itself automatically, without the need for retraining or reprogramming.

Reference

I. Iturrate, R. Chavarriaga, L. Montesano, J. Minguez and J. del R. Millán, “Teaching brain-machine interfaces as an alternative paradigm to neuroprosthetics control,” Nature Scientific Reports, vol. 5, Article number: 13893, 2015. doi:10.1038/srep13893

