Robohub.org

Future robots will learn through curiosity and self-generated goals

Imagine a friend asking for help to tidy up her room that is full of objects and furniture. Now imagine for some reason your friend will not be there to help (we are all lazy) and she just describes, showing photos, the way she would like the room to look. Although it may be a boring task, everyone could handle this with ease. As little kids, we discovered new objects and learned to recognise them and developed new skills to manipulate these objects. Guided by our curiosity, we progressively developed visual, attentional, and sensorimotor knowledge that allows us, as adults, to manipulate our physical environment as desired.

Current robots are ill-suited for these challenges. Imagine a humanoid robot helping to clean a room. Assume you have shown the robot the room in its normal tidy state, but once it is messy you tell the robot to make it tidy, as it was before. In such conditions, it would be tedious to teach the robot where to allocate attention and show how each object has to be manipulated to put it in its desired place and orientation, or how to sequence the different actions to do so.

Although new sophisticated robots and powerful algorithms are developed each year, carrying out complex duties and finding unknown solutions to different tasks still require tedious programming of low-level motor control details. In the best case, robots are able to learn a small set of inflexible behaviours. If we compare current artificial agents to biological ones, we find that artificial ones still show significant limitations in terms of autonomy and versatility.

Future robots, instead, should be able to learn how to truly “master” their environments autonomously, i.e. to self-generate goals and autonomously and efficiently learn the skills to accomplish them based on the progressive acquisition, modification, generalisation, and recombination of previously learned skills and knowledge. This will allow them, with little additional learning, to change an environment from its current state to a wide range of potential goal states desired by the user. The question is: how can we create future robots that are able to face this challenge?

The GOAL-Robots project

Addressing this question, which is of central importance for both applications and artificial intelligence, is the aim of a new European project led by the Laboratory of Computational Embodied Neuroscience (LOCEN), an Italian research group based in Rome at the Institute of Cognitive Sciences and Technologies of the Italian National Research Council (ISTC-CNR).

The “GOAL-Robots – Goal-based Open-ended Autonomous Learning Robots” project ranked first among the 11 projects funded out of the 800 participants in the April 2016 EU FET-OPEN call (Future and Emerging Technologies), and is part of the Horizon 2020 EU research programme. LOCEN and its Principal Investigator, Gianluca Baldassarre, will coordinate the project consortium, which also involves three other important European research groups:

- the Laboratoire Psychologie de la Perception (LPP, France), headed by Kevin O’Regan and based in Paris at the Institut Paris Descartes de Neurosciences et Cognition, which will carry out experiments on the learning of goals and skills in children;

- the Frankfurt Institute for Advanced Studies (FIAS, Germany), headed by Jochen Triesch, focussed on the development of bio-inspired visual and attention systems and motor synergies; and

- the group of roboticists directed by Jan Peters, based at the Technische Universitaet Darmstadt (TUDa, Germany), which will lead the challenging robotic demonstrators of the project.

GOAL-Robots follows a previous European project, IM-CLeVeR (“Intrinsically Motivated Cumulative Learning Versatile Robots”), in which LOCEN and its former project partners investigated the role of intrinsic motivations (IMs) in fostering autonomous learning both in biological agents and robots. The scientific investigation of IMs began by observing how children, driven by curiosity, explore and interact with the world, acquiring knowledge of how things work and an ample repertoire of sensorimotor skills to act on it.

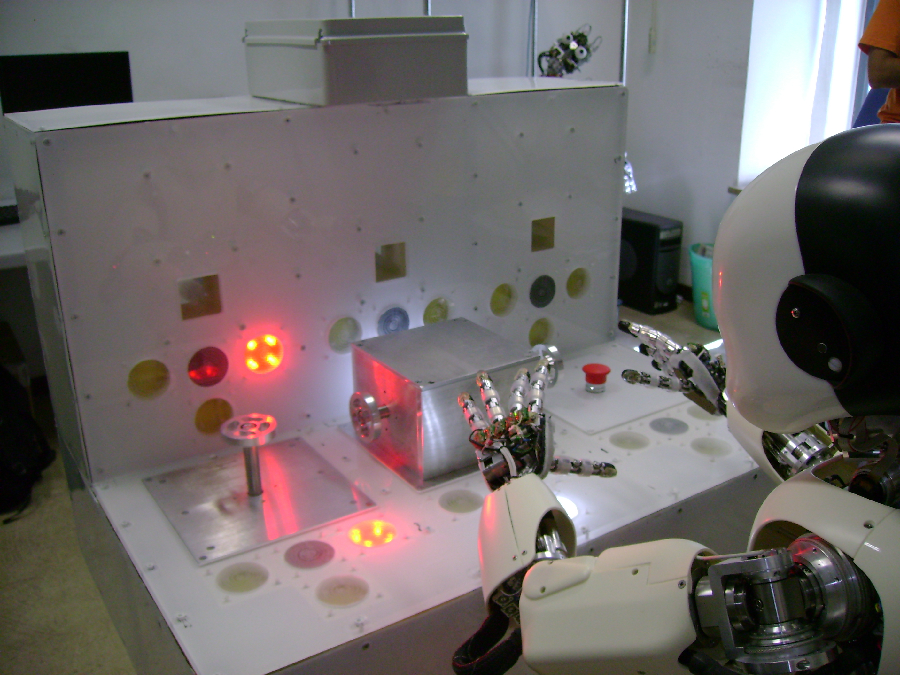

A robot exploring the effects it can produce on a “mechatronic board”. Credit: Laboratory of Computational Embodied Neuroscience.

If curiosity and IMs are at the basis of human versatility and adaptability, then endowing artificial agents with architectures and algorithms mimicking IMs might provide the “motivational engine” needed to drive robots through an “open-ended” autonomous learning process without the need for continuous programming and training by human experts.

GOAL-Robots also adds a new critical component for the development of open-ended learning in robots: goals. A goal is an agent’s internal representation of a world or body state or event, or a set of them, having two important properties. Firstly, the agent can activate this representation even in the absence of the perception of the corresponding world state or event. Secondly, this activation has “motivational effects”, i.e. it can contribute to select and focus the agent’s attention and behaviour, and guide its learning processes, towards the accomplishment of the goal. The possibility of creating motivating goals at will, even abstract ones, and of using them to guide actions and learning is a key element of the behavioural flexibility and learning power of biological agents. The bet of GOAL-Robots is that endowing robots with suitable mechanisms to form and exploit goals for learning will drastically increase their autonomous learning potential.

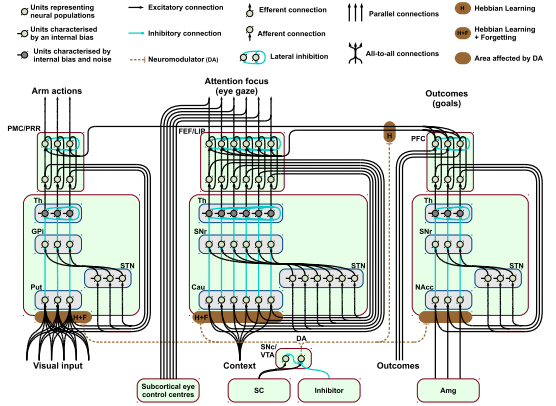

An example of a robot controller able to discover goals, inspired by surprise mechanisms in the brain. Credit: Laboratory of Computational Embodied Neuroscience, Institute of Cognitive Sciences and Technologies, Italian National Research Council.

Challenges and ideas

The challenging idea of GOAL-Robots is to combine the mechanisms related to IMs with the power of goals. IMs will in particular guide robots in the autonomous discovery of new interesting events in the world caused by their own actions. The robots will thus be driven to explore the environment by their curiosity and to self-generate increasingly complex goals, using them to learn numerous skills in an open-ended fashion.
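
One common way to turn curiosity into a goal-selection rule in the intrinsic-motivation literature is to favour the goals on which the robot's competence is currently changing fastest. The sketch below illustrates that idea only. The article does not describe the project's actual mechanism, and the `GoalSelector` class, the goal names, the window size, and the epsilon-greedy exploration are all assumptions made for illustration.

```python
import random
from collections import deque


class GoalSelector:
    """Hypothetical sketch: choose goals by recent competence progress."""

    def __init__(self, goals, window=10, epsilon=0.1, seed=0):
        # One bounded history of outcomes (1.0 success, 0.0 failure) per goal.
        self.history = {goal: deque(maxlen=window) for goal in goals}
        self.epsilon = epsilon  # probability of picking a random goal
        self.rng = random.Random(seed)

    def record(self, goal, success):
        """Store the outcome of one attempt at a goal."""
        self.history[goal].append(1.0 if success else 0.0)

    def progress(self, goal):
        """Absolute change in success rate between older and recent attempts."""
        outcomes = list(self.history[goal])
        if len(outcomes) < 2:
            return 0.0
        half = len(outcomes) // 2
        older = sum(outcomes[:half]) / half
        recent = sum(outcomes[half:]) / (len(outcomes) - half)
        return abs(recent - older)

    def select(self):
        """Mostly pick the goal with the highest progress, sometimes explore."""
        goals = list(self.history)
        if self.rng.random() < self.epsilon:
            return self.rng.choice(goals)
        return max(goals, key=self.progress)


# Illustrative usage with made-up goals and outcomes.
selector = GoalSelector(["reach", "push", "lift"], epsilon=0.0)
for outcome in (False, False, True, True):
    selector.record("push", outcome)  # competence is improving
for _ in range(4):
    selector.record("reach", True)    # already mastered
    selector.record("lift", False)    # currently too hard
chosen = selector.select()
```

In this toy run the selector settles on `push`, the only goal whose success rate is changing: goals that are already mastered or still out of reach yield no progress signal, so they attract little practice.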

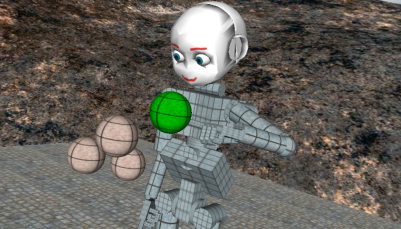

A simulated robot investigating some spheres that can turn on when touched. Credit: Laboratory of Computational Embodied Neuroscience, Institute of Cognitive Sciences and Technologies, Italian National Research Council.

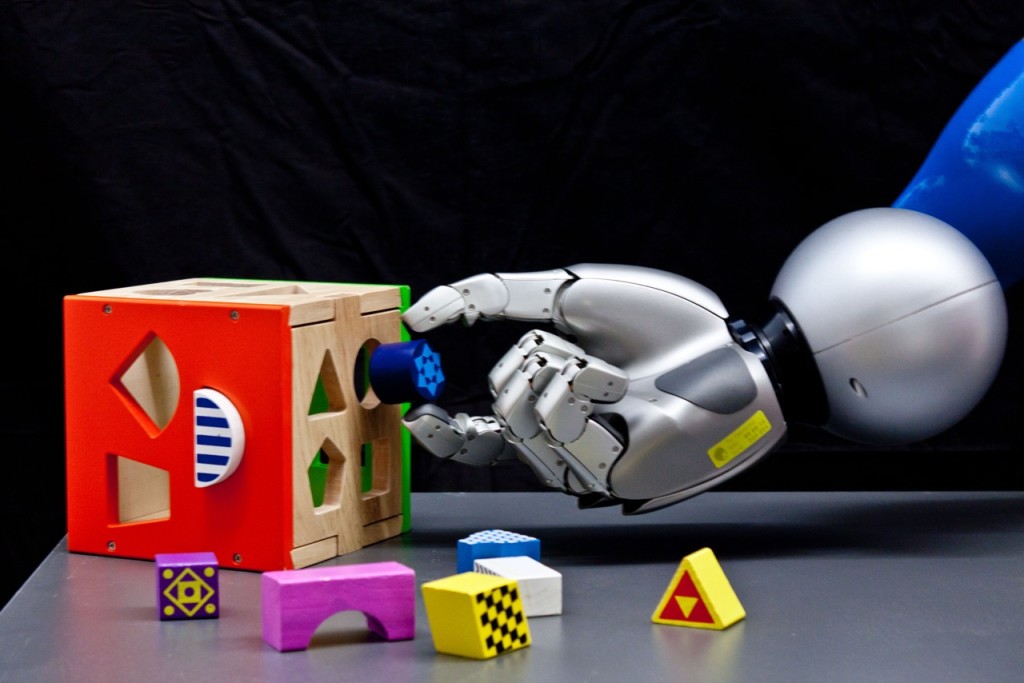

The open-ended process of competence acquisition requires sophisticated mechanisms and the integration of different architectural components. In particular, the robots will need to acquire new skills without impairing previously learned ones and, at the same time, to re-use previously acquired skills to speed up the acquisition of skills for new goals (knowledge transfer). Moreover, they will need to be able to compose previously acquired skills to form increasingly complex skills. These issues represent some of the most important current challenges of artificial intelligence. To address them, the project will use state-of-the-art algorithms to handle both the processing of sensory information (e.g., on the basis of deep neural networks) and the organisation and exploitation of knowledge related to motor control (e.g., using dynamic movement primitives and echo-state neural networks).
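
To give a flavour of one of the motor-control tools mentioned above, here is a minimal sketch of a one-dimensional discrete dynamic movement primitive in its usual textbook form: a critically damped spring pulls the state towards the goal, while a learned forcing term, gated by a decaying phase variable, shapes the path. This is not the project's implementation. The function name `rollout_dmp`, the gains, the basis width, and the example weights are illustrative assumptions, and the time constant is fixed to 1 for brevity.

```python
import math


def rollout_dmp(y0, goal, weights, duration=1.0, dt=0.001,
                alpha=25.0, alpha_x=3.0):
    """Integrate a one-dimensional discrete DMP with Euler steps."""
    beta = alpha / 4.0  # this ratio makes the spring-damper critically damped
    n = len(weights)
    # Basis-function centres placed along the decaying phase variable x.
    centres = [math.exp(-alpha_x * i / max(n - 1, 1)) for i in range(n)]
    width = float(n * n)  # illustrative basis width, not tuned

    y, z, x = y0, 0.0, 1.0  # position, scaled velocity, phase
    trajectory = [y]
    for _ in range(int(round(duration / dt))):
        psi = [math.exp(-width * (x - c) ** 2) for c in centres]
        total = sum(psi)
        forcing = 0.0
        if total > 1e-12:
            # Normalised weighted sum of basis functions, gated by the phase
            # so that the forcing term fades as the movement ends.
            forcing = sum(p * w for p, w in zip(psi, weights)) / total
            forcing *= x * (goal - y0)
        dz = alpha * (beta * (goal - y) - z) + forcing
        dy = z
        dx = -alpha_x * x
        z += dz * dt
        y += dy * dt
        x += dx * dt
        trajectory.append(y)
    return trajectory


# Illustrative usage: same start and goal, with and without a forcing term.
plain = rollout_dmp(0.0, 1.0, [0.0] * 5, duration=2.0)
shaped = rollout_dmp(0.0, 1.0, [200.0, -100.0, 50.0, 0.0, 0.0], duration=2.0)
```

Both rollouts end near the goal, but the weights change the path taken to get there. That separation is what makes such primitives attractive for skill re-use: a new goal can be set without relearning the shape of the movement.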

Moreover, all the mechanisms related to the different components of the learning process will need to be integrated within a single architecture controlling the robots: the high-level goal-formation processes will be connected to motivational layers where, on the basis of IMs, the robot will form and select goals to focus on; goals will be gradually connected to the lower levels of the controllers so that the robot will be able to recall the learned skills to accomplish desired goals or to form more complex skills by composing them; knowledge transfer between different skills will be integrated with the avoidance of their interference, and so on. These mechanisms will be useful not only for the autonomous learning phase but also to allow an external user to exploit the knowledge and competence acquired by the robots.

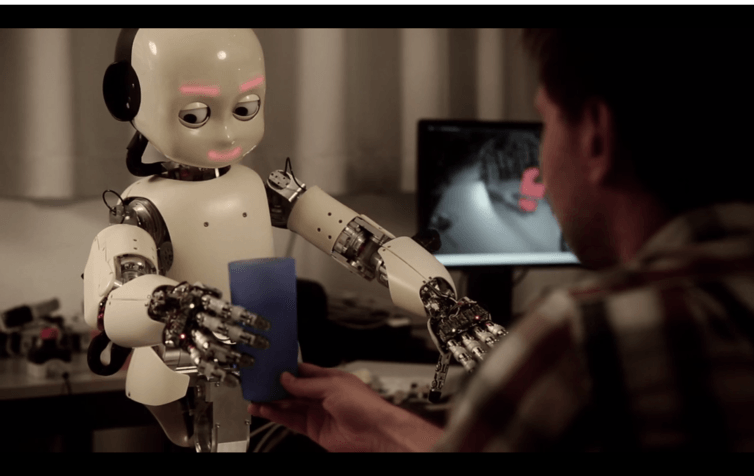

Each year the project will present a complex “robotic demonstrator” where sophisticated robotic platforms (such as iCub or Kuka robots) will be guided by the architectures developed by the project to solve increasingly challenging tasks. These demonstrators will not only show the advancements of the project but also aim to become general benchmarks for open-ended learning in autonomous robots.

The final demonstrator will aim to tackle the “futuristic” challenge that we posed at the beginning of this article: is it possible for a robot to show a versatility and adaptability similar to those of humans interacting with real-world scenarios? In particular, the robots will be requested to: (a) observe an ordered scenario formed by several objects located in containers and shelves on a working plane, and (b) reproduce that state of the environment after a user misplaces and mixes the objects.
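
At its most abstract, this task amounts to comparing a remembered goal state with the current state and acting on the differences. The toy sketch below shows only that comparison step, under strong assumptions: objects and locations are already recognised and given as names, and the function `plan_tidy_up` and all object and location names are hypothetical. It ignores perception, grasping, and the order in which moves must be made when a target spot is occupied, which are precisely the hard parts the project addresses.

```python
def plan_tidy_up(goal_state, current_state):
    """Return (object, from, to) moves that restore the goal arrangement.

    Both arguments map an object name to a location name. An object missing
    from current_state is reported with a source location of None.
    """
    moves = []
    for obj, target in sorted(goal_state.items()):
        location = current_state.get(obj)
        if location != target:
            moves.append((obj, location, target))
    return moves


# Illustrative usage: the arrangement observed in step (a), then the mess.
tidy = {"cup": "shelf_1", "book": "shelf_2", "ball": "box_a"}
messy = {"cup": "table", "book": "shelf_2", "ball": "shelf_1"}
plan = plan_tidy_up(tidy, messy)
```

Here `plan` lists two moves, one for the ball and one for the cup, while the book is left alone because it is already in place.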

If GOAL-Robots keeps its promise, you won’t have to worry anymore about lazy friends: when they ask for your help, you will simply ask your artificial friends to help them for you!
